Note: This is part 2 of the Make the Leap New Year’s Resolution series. For part 1 please go here.

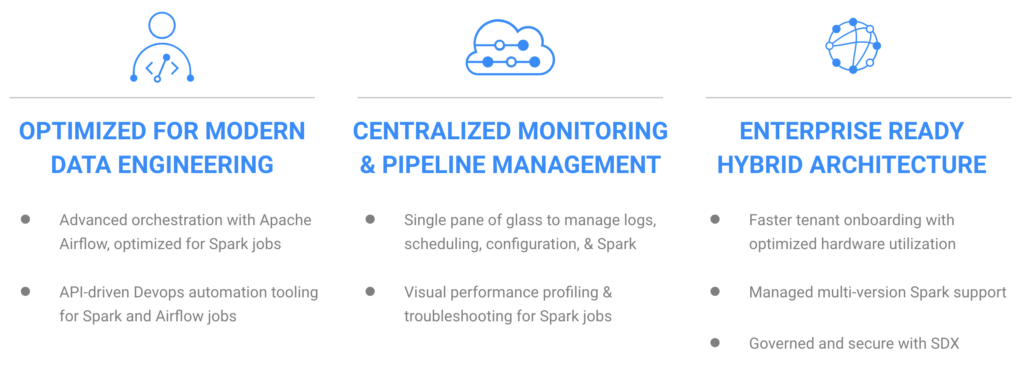

When we introduced Cloudera Data Engineering (CDE) in the Public Cloud in 2020, it was the culmination of many years of working alongside companies as they deployed Apache Spark-based ETL workloads at scale. We not only enabled Spark-on-Kubernetes but also built an ecosystem of tooling dedicated to data engineers and practitioners, from a first-class job management API and CLI for DevOps automation to a next-generation orchestration service with Apache Airflow.

Today, we are excited to announce the next evolutionary step in our Data Engineering service with the introduction of CDE within Private Cloud 1.3 (PVC). This enables hybrid deployments in which users can develop once and deploy anywhere, whether on-premise or in the public cloud across multiple providers (AWS and Azure). We are paving the path for our enterprise customers that are adapting to critical shifts in technology and expectations. Those shifts are no longer driven by data volumes, but by containerization, separation of storage and compute, and democratization of analytics. The same key tenets powering DE in the public clouds are now available in the data center:

- Centralized interface for managing the life cycle of data pipelines — scheduling, deploying, monitoring & debugging, and promotion.

- First-class APIs to support automation and CI/CD use cases for seamless integration.

- Users can deploy complex pipelines with job dependencies and time-based schedules, powered by Apache Airflow, with preconfigured security and scaling.

- Integrated security model with Shared Data Experience (SDX) allowing for downstream analytical consumption with centralized security and governance.

CDE on PVC Overview

With the introduction of PVC 1.3.0, the CDP platform can run across both OpenShift and ECS (Experiences Compute Service), giving customers greater flexibility in their deployment configuration.

CDE, like the other data services (Data Warehouse and Machine Learning, for example), deploys within the same Kubernetes cluster and is managed through the same security and governance model. Data engineering workloads are deployed as containers into virtual clusters that connect to the storage cluster (CDP Base) to access data, while all compute workloads run in the private cloud Kubernetes cluster.

The control plane contains apps for all the data services (ML, DW, and DE) that end users use to deploy workloads on the OpenShift (OCP) or ECS cluster. The ability to provision and deprovision workspaces for each of these workloads allows users to multiplex their compute hardware across various workloads and thus obtain better utilization. Additionally, the control plane contains apps for logging and monitoring, an administration UI, the keytab service, the environment service, and authentication and authorization.

The key tenets of private cloud that we continue to embrace with CDE:

- Separation of compute and storage allowing for independent scaling of the two

- Auto scaling workloads on the fly leading to better hardware utilization

- Supporting multiple versions of the execution engines, ending the cycle of major platform upgrades that have been a huge challenge for our customers.

- Isolating noisy workloads into their own execution spaces allowing users to guarantee more predictable SLAs across the board

And all this comes without customers having to rip and replace the technology that powers their applications, as they would if they chose to migrate to other vendors.

Usage Patterns

You can make the leap to hybrid with CDE by exploiting a few key patterns, some more commonly seen than others. Each one unlocks value in data engineering workflows that enterprises can start taking advantage of right away.

Bursting to the public cloud

Probably the most commonly exploited pattern, bursting workloads from on-premise to the public cloud has many advantages when done right.

CDP provides the only true hybrid platform that seamlessly shifts not only workloads (compute) but also any relevant data, using Replication Manager. And with the common Shared Data Experience (SDX), data pipelines can operate within the same security and governance model, reducing operational overhead while allowing new data born in the cloud to be added flexibly and securely.

Tapping into elastic compute capacity has always been attractive because it allows businesses to scale on demand without the protracted procurement cycles of on-premise hardware. This has never been more pronounced than during the COVID-19 pandemic, as working from home has required more data to be collected, both for security purposes and to enable more productivity. Besides scaling up, the cloud allows simple scale-down, especially as we shift back to the office and the excess compute capacity is no longer required. The key is that CDP, as a hybrid data platform, allows this shift to be fluid. Users can develop their DE pipelines once and deploy anywhere, without spending many months porting applications to and from cloud platforms, which would require code changes plus more testing and verification.

Agile multi-tenancy

When new teams want to deploy use cases or proofs of concept (PoCs), onboarding their workloads on traditional clusters is notoriously difficult in many ways. Capacity planning has to be done to ensure their workloads do not impact existing workloads. If not enough resources are available, new hardware for both compute and storage needs to be procured, which can be an arduous undertaking. Once capacity is confirmed, users and groups have to be set up on the cluster with the required resource limits, generally through YARN queues. Finally, the right version of Spark needs to be installed. If Spark 3 is required but not already on the cluster, a maintenance window is needed to install it.

DE on PVC alleviates many of these challenges. First, by separating out compute from storage, new use-cases can easily scale out compute resources independent of storage thereby simplifying capacity planning. And since CDE runs Spark-on-Kubernetes, an autoscaling virtual cluster can be brought up in a matter of minutes as a new isolated tenant, on the same shared compute substrate. This allows efficient resource utilization without impacting any other workloads, whether they be Spark jobs or downstream analytic processing.

Even more importantly, running mixed versions of Spark and setting quota limits per workload takes only a few drop-down selections. CDE provides Spark as a multi-tenant-ready service, with the efficiency, isolation, and agility to give data engineers the compute capacity to deploy their workloads in a matter of minutes instead of weeks or months.
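To make the quota idea concrete, here is a minimal sketch of the capacity check that onboarding a new tenant implies. It is not CDE code: the `VirtualCluster` class, the `fits` function, the tenant names, and every number are hypothetical, and quotas are treated as hard reservations, which is a simplification because autoscaling clusters may sit well below their limits.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualCluster:
    """Hypothetical model of a tenant: a name, a Spark version, and a quota."""
    name: str
    spark_version: str
    max_cpu_cores: int
    max_memory_gb: int


def fits(shared_cpu: int, shared_memory_gb: int,
         existing: list[VirtualCluster], candidate: VirtualCluster) -> bool:
    """Return True if the candidate's quota fits next to the existing quotas."""
    used_cpu = sum(vc.max_cpu_cores for vc in existing)
    used_mem = sum(vc.max_memory_gb for vc in existing)
    return (used_cpu + candidate.max_cpu_cores <= shared_cpu
            and used_mem + candidate.max_memory_gb <= shared_memory_gb)


# Two existing tenants on different Spark versions share one compute substrate.
tenants = [
    VirtualCluster("etl-prod", "2.4", max_cpu_cores=64, max_memory_gb=256),
    VirtualCluster("ml-features", "3.1", max_cpu_cores=32, max_memory_gb=128),
]
# A new team asks for a small Spark 3 cluster for a proof of concept.
poc = VirtualCluster("new-team-poc", "3.1", max_cpu_cores=16, max_memory_gb=64)

# Assumed shared capacity: 128 cores and 512 GB of memory.
print(fits(128, 512, tenants, poc))  # True
```

Because each tenant carries its own Spark version and quota, adding the PoC cluster does not touch the settings of the two existing tenants.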

Scalable orchestration engine

Whether on-premise or in the public cloud, a flexible and scalable orchestration engine is critical when developing and modernizing data pipelines. We see many customers struggle not only with setting up but also with continuously managing their own orchestration and scheduling service. That’s why we chose to provide Apache Airflow as a managed service within CDE.

It’s integrated with CDE and the PVC platform, which means it comes with security and scalability out of the box, reducing the typical administrative overhead. Whether you need simple time-based scheduling or complex multistep pipelines, Airflow within CDE allows you to upload custom DAGs using a combination of Cloudera operators (namely Spark and Hive) along with core Airflow operators (like Python and Bash). And for those looking for even more customization, plugins can be used to extend Airflow core functionality so it can serve as a full-fledged enterprise scheduler.
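As a rough illustration of what a multistep pipeline with job dependencies means, the sketch below derives an execution order from a dependency graph. It is not Airflow or CDE code: it uses Python's standard-library `graphlib` (Python 3.9+), and the step names and the `execution_waves` helper are hypothetical. In a real DAG, each step would be an operator such as a Spark, Hive, Python, or Bash task.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each key is a step, each value is the set of steps
# that must finish before it can start.
pipeline = {
    "ingest_files": set(),                      # e.g. a Bash step
    "clean_with_spark": {"ingest_files"},       # e.g. a Spark job
    "load_hive_table": {"clean_with_spark"},    # e.g. a Hive query
    "validate_counts": {"clean_with_spark"},    # e.g. a Python step
    "publish_report": {"load_hive_table", "validate_counts"},
}


def execution_waves(graph):
    """Group steps into waves; steps in the same wave can run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # steps whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished to unblock later steps
    return waves


for number, wave in enumerate(execution_waves(pipeline), start=1):
    print(number, wave)
```

The two middle steps land in the same wave because neither depends on the other, which is the kind of parallelism a scheduler can exploit once dependencies are declared explicitly.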

Ready to take the leap?

The old ways of cloud vendor lock-in on compute and storage are over. Data Engineering should not be limited by one cloud vendor or by data locality. Business needs are continuously evolving, requiring data architectures and platforms that are flexible, hybrid, and multi-cloud.

Take advantage of developing once and deploying anywhere with the Cloudera Data Platform, the only truly hybrid and multi-cloud platform. Onboard new tenants with single-click deployments, use the next-generation orchestration service with Apache Airflow, and shift your compute (and, more importantly, your data) securely to meet the demands of your business with agility.

Sign up for Private Cloud to test drive CDE and the other Data Services and see how they can accelerate your hybrid journey.