In this multipart series, fully explore the tangled ball of thread that is YARN.

YARN (Yet Another Resource Negotiator) is the resource management layer for the Apache Hadoop ecosystem. YARN has been available for several releases, but many users still have fundamental questions about what YARN is, what it’s for, and how it works. This new series of blog posts is designed with the following goals in mind:

- Provide a basic understanding of the components that make up YARN

- Illustrate how a MapReduce job fits into the YARN model of computation. (Note: although Apache Spark integrates with YARN as well, this series will focus on MapReduce specifically. For information about Spark on YARN, see this post.)

- Present an overview of how the YARN scheduler works and provide building-block examples for scheduler configuration

The series comprises the following parts:

- Part 1: Cluster and YARN basics

- Part 2: Global configuration basics

- Part 3: Scheduler concepts

- Part 4: FairScheduler queue basics

- Part 5: Using FairScheduler queue properties

In this initial post, we’ll cover the fundamentals of YARN, which runs processes on a cluster similarly to the way an operating system runs processes on a standalone computer. Subsequent parts will be released every few weeks.

Cluster Basics (Master/Worker)

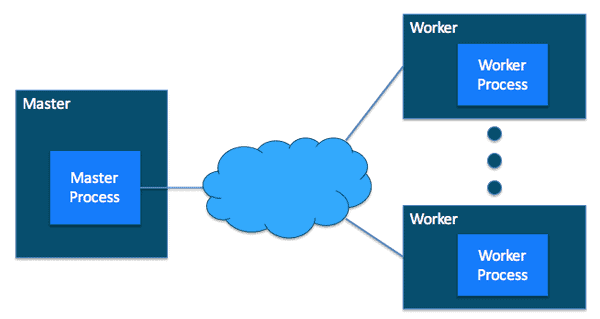

A host is the Hadoop term for a computer (also called a node, in YARN terminology). A cluster is two or more hosts connected by a high-speed local network. Two or more hosts—the Hadoop term for a computer (also called a node in YARN terminology)—connected by a high-speed local network are called a cluster. From the standpoint of Hadoop, there can be several thousand hosts in a cluster.

In Hadoop, there are two types of hosts in the cluster.

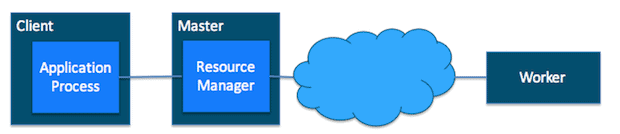

Figure 1: Master host and Worker hosts

Conceptually, a master host is the communication point for a client program. A master host sends the work to the rest of the cluster, which consists of worker hosts. (In Hadoop, a cluster can technically be a single host. Such a setup is typically used for debugging or simple testing, and is not recommended for a typical Hadoop workload.)

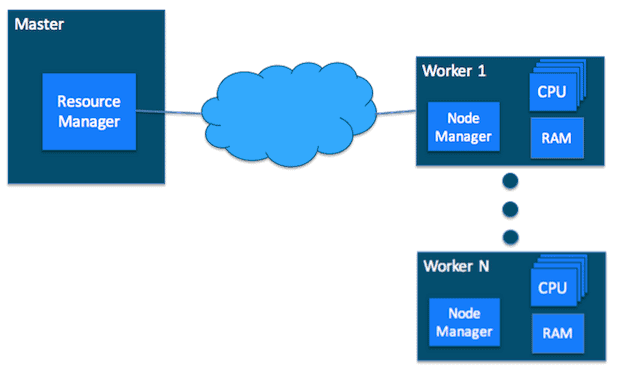

YARN Cluster Basics (Master/ResourceManager, Worker/NodeManager)

In a YARN cluster, there are two types of hosts:

- The ResourceManager is the master daemon that communicates with the client, tracks resources on the cluster, and orchestrates work by assigning tasks to NodeManagers.

- A NodeManager is a worker daemon that launches and tracks processes spawned on worker hosts.

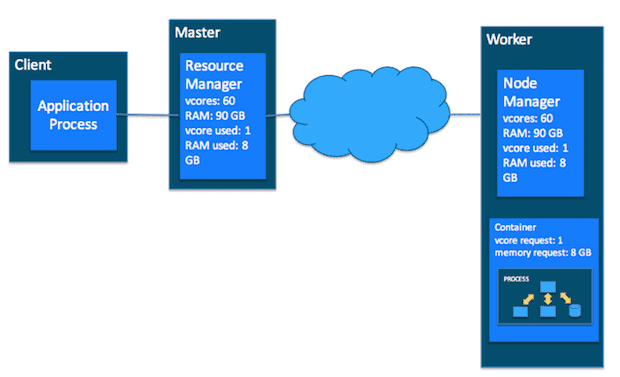

Figure 2: Master host with ResourceManager and Worker hosts with NodeManager

YARN Configuration File

The YARN configuration file is an XML file that contains properties. This file is placed in a well-known location on each host in the cluster and is used to configure the ResourceManager and NodeManager. By default, this file is named yarn-site.xml. The basic properties in this file used to configure YARN are covered in the later sections.

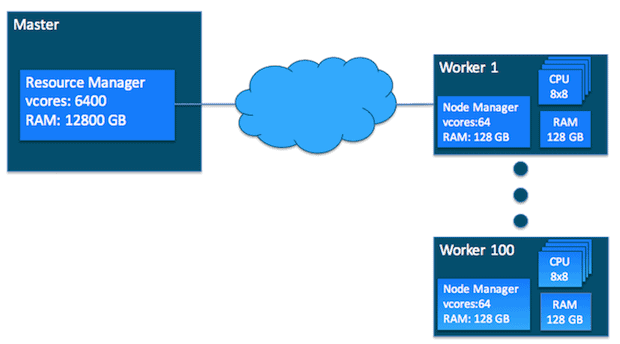

YARN Requires a Global View

YARN currently defines two resources, vcores and memory. Each NodeManager tracks its own local resources and communicates its resource configuration to the ResourceManager, which keeps a running total of the cluster’s available resources. By keeping track of the total, the ResourceManager knows how to allocate resources as they are requested. (Vcore has a special meaning in YARN. You can think of it simply as a “usage share of a CPU core.” If you expect your tasks to be less CPU-intensive (sometimes called I/O-intensive), you can set the ratio of vcores to physical cores higher than 1 to maximize your use of hardware resources.)

Figure 3: ResourceManager global view of the cluster

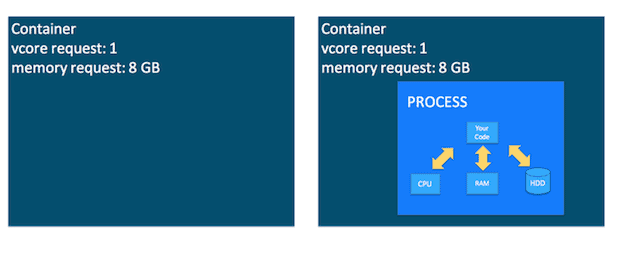

Containers

Containers are an important YARN concept. You can think of a container as a request to hold resources on the YARN cluster. Currently, a container hold request consists of vcore and memory, as shown in Figure 4 (left).

Figure 4: Container as a hold (left), and container as a running process (right)

Once a hold has been granted on a host, the NodeManager launches a process called a task. The right side of Figure 4 shows the task running as a process inside a container. (Part 3 will cover, in more detail, how YARN schedules a container on a particular host.)

YARN Cluster Basics (Running Process/ApplicationMaster)

For the next section, two new YARN terms need to be defined:

- An application is a YARN client program that is made up of one or more tasks (see Figure 5).

- For each running application, a special piece of code called an ApplicationMaster helps coordinate tasks on the YARN cluster. The ApplicationMaster is the first process run after the application starts.

An application running tasks on a YARN cluster consists of the following steps:

- The application starts and talks to the ResourceManager for the cluster:

Figure 5: Application starting up before tasks are assigned to the cluster

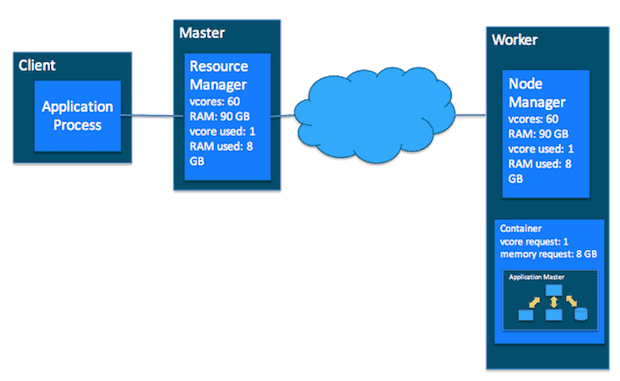

- The ResourceManager makes a single container request on behalf of the application:

Figure 6: Application + allocated container on a cluster

- The ApplicationMaster starts running within that container:

Figure 7: Application + ApplicationMaster running in the container on the cluster

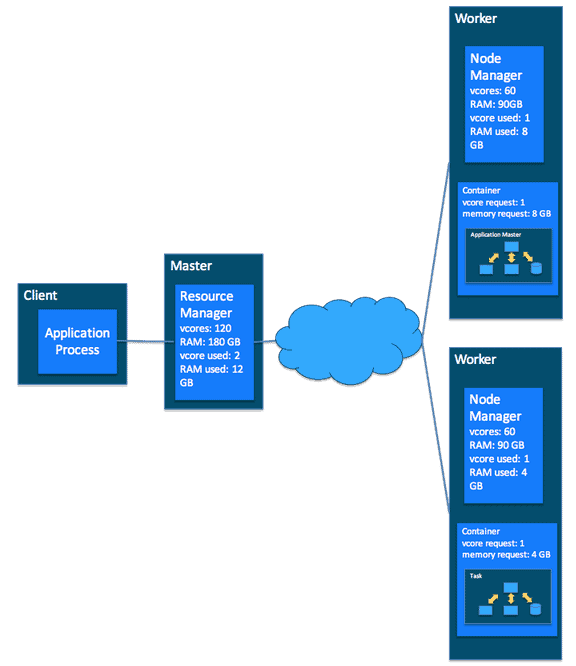

- The ApplicationMaster requests subsequent containers from the ResourceManager that are allocated to run tasks for the application. Those tasks do most of the status communication with the ApplicationMaster allocated in Step 3):

Figure 8: Application + ApplicationMaster + task running in multiple containers running on the cluster

- Once all tasks are finished, the ApplicationMaster exits. The last container is de-allocated from the cluster.

- The application client exits. (The ApplicationMaster launched in a container is more specifically called a managed AM. Unmanaged ApplicationMasters run outside of YARN’s control. Llama is an example of an unmanaged AM.)

MapReduce Basics

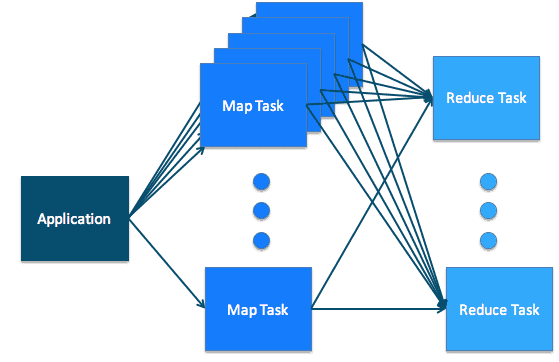

In the MapReduce paradigm, an application consists of Map tasks and Reduce tasks. Map tasks and Reduce tasks align very cleanly with YARN tasks.

Figure 9: Application + Map tasks + Reduce tasks

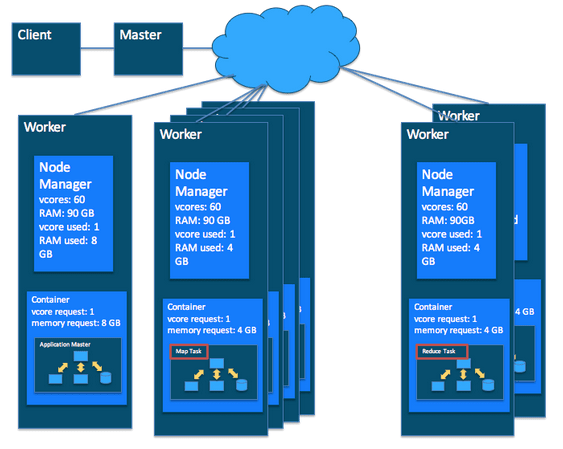

Putting it Together: MapReduce and YARN

Figure 10 illustrates how the map tasks and the reduce tasks map cleanly to the YARN concept of tasks running in a cluster.

Figure 10: Merged MapReduce/YARN Application Running on a Cluster

In a MapReduce application, there are multiple map tasks, each running in a container on a worker host somewhere in the cluster. Similarly, there are multiple reduce tasks, also each running in a container on a worker host.

Simultaneously on the YARN side, the ResourceManager, NodeManager, and ApplicationMaster work together to manage the cluster’s resources and ensure that the tasks, as well as the corresponding application, finish cleanly.

Conclusion

Summarizing the important concepts presented in this section:

- A cluster is made up of two or more hosts connected by an internal high-speed network. Master hosts are a small number of hosts reserved to control the rest of the cluster. Worker hosts are the non-master hosts in the cluster.

- In a cluster with YARN running, the master process is called the ResourceManager and the worker processes are called NodeManagers.

- The configuration file for YARN is named yarn-site.xml. There is a copy on each host in the cluster. It is required by the ResourceManager and NodeManager to run properly. YARN keeps track of two resources on the cluster, vcores and memory. The NodeManager on each host keeps track of the local host’s resources, and the ResourceManager keeps track of the cluster’s total.

- A container in YARN holds resources on the cluster. YARN determines where there is room on a host in the cluster for the size of the hold for the container. Once the container is allocated, those resources are usable by the container.

- An application in YARN comprises three parts:

- The application client, which is how a program is run on the cluster.

- An ApplicationMaster which provides YARN with the ability to perform allocation on behalf of the application.

- One or more tasks that do the actual work (runs in a process) in the container allocated by YARN.

- A MapReduce application consists of map tasks and reduce tasks.

- A MapReduce application running in a YARN cluster looks very much like the MapReduce application paradigm, but with the addition of an ApplicationMaster as a YARN requirement.

Next Time…

Part 2 will cover calculating YARN properties for cluster configuration. In the meantime, consider this further reading:

Ray Chiang is a Software Engineer at Cloudera.

Dennis Dawson is a Senior Technical Writer at Cloudera.

The link for Spark integration with YARN at the beginning of the post at ‘For information about Spark on YARN, see this post.’ is broken.

Since it’s not so easy to find a place to provide feedback for the yarn-tuning-guide.xlsx (I’ve tried previously here: https://youtu.be/lykWFhrGvJ4 , and that one seems to be fixed in the cdh 6.2.0 version of the guide) – I’ve noticed that the form offers to input a size of the drive, and the default is 3T. I changed that to 1.7T, which automatically gets rounded to 2T, which is ok, I guess, and then it does the math correctly (8 drives * 1.7T), and then it shows the result 14, but with a wrong unit (G), instead of T(erabyte).