A new installment in the series about the tangled ball of thread that is YARN

In Part 1 of this series, we covered the fundamentals of YARN clusters. In Part 2, you’ll learn about other components that can run on a cluster and how they affect YARN cluster configuration.

Ideal YARN Allocation

As shown in the previous post, a YARN cluster can be configured to use up all the resources on the cluster.

Realistic YARN Allocation

In reality, there are two reasons why the full set of resources on a node cannot be allocated to YARN:

- Services that are not part of Apache Hadoop must also run on each node (overhead).

- Other Hadoop-related components require dedicated resources and cannot be shared with YARN (such as when running CDH).

Operating System (Overhead)

Any node needs an operating system in order to work. Running any operating system requires setting aside some resources. Most commonly for Hadoop, this operating system is Linux.

Other Task Overhead and Services (Overhead)

If there are any custom programs that are persistent on the Worker nodes, you should set aside some resources for them.

Cloudera Manager Agents (Administrative)

Cloudera Manager is Cloudera’s cluster management tool for CDH. A Cloudera Manager Agent is a program that runs on each Worker node to track its health and handle other management tasks such as configuration deployment.

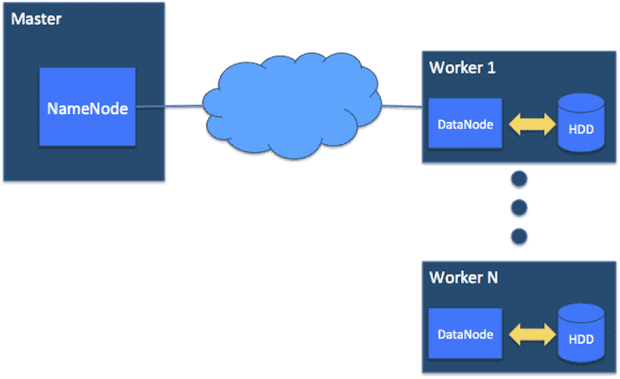

HDFS Cluster (Required) (Master/NameNode, Worker/DataNode)

This post has purposely left out any discussion of HDFS, which is a required Hadoop component. For our purposes here, though, note the following:

- The Master node daemon is called the NameNode.

- The Worker node daemon is called the DataNode.

For Hadoop installations, Cloudera recommends that the HDFS DataNodes and the YARN NodeManagers run on the same set of Worker nodes in the cluster. DataNodes require a basic amount of resources set aside for proper operation. This can be seen in Figure 1 below.

Figure 1: Master node with NameNode. Worker nodes with DataNode.

HBase Cluster Requirements (CDH)

If the cluster is configured to use Apache HBase, resources on each Worker node should be set aside for the RegionServers. The amount of memory set aside can be fairly large.

Impala Cluster Requirements (CDH)

If the cluster is configured to use Impala, resources on each Worker node should be set aside for the Impala daemons. The amount of memory set aside can be fairly large.

YARN NodeManagers (Required)

The NodeManager also needs some resources set aside to operate properly.

Allocating the Rest to YARN

Once resources are allocated to the various components above, the rest can be allocated to YARN. (Note: There are no specific recommendations made in this post, since the hardware specifications for a node continue to improve over time. For examples with specific numbers, please consult this tuning guide; you may also want to consult “Tuning the Cluster for MapReduce v2 (YARN)” in the Cloudera documentation.)
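The subtraction described above can be sketched in a few lines of Python. Every number below is a made-up placeholder rather than a recommendation, and the `Reservation` class and `remaining_for_yarn` function are hypothetical names invented for this illustration. Substitute figures that match your own hardware and the tuning guide.

```python
from dataclasses import dataclass


@dataclass
class Reservation:
    """Resources set aside on a Worker node for one non-YARN component."""
    name: str
    memory_mb: int
    vcores: int


def remaining_for_yarn(node_memory_mb, node_vcores, reservations):
    """Return (memory_mb, vcores) left for YARN after all reservations."""
    memory_left = node_memory_mb - sum(r.memory_mb for r in reservations)
    vcores_left = node_vcores - sum(r.vcores for r in reservations)
    if memory_left <= 0 or vcores_left <= 0:
        raise ValueError("reservations leave nothing for YARN Containers")
    return memory_left, vcores_left


# Placeholder values for illustration only, NOT sizing recommendations.
example_reservations = [
    Reservation("operating system", 8192, 1),
    Reservation("Cloudera Manager Agent", 1024, 1),
    Reservation("HDFS DataNode", 1024, 1),
    Reservation("YARN NodeManager", 1024, 1),
]

# A hypothetical Worker node with 128 GiB of RAM and 32 cores.
memory_mb, vcores = remaining_for_yarn(131072, 32, example_reservations)
print(f"yarn.nodemanager.resource.memory-mb = {memory_mb}")
print(f"yarn.nodemanager.resource.vcores = {vcores}")
```

If the cluster also runs HBase or Impala, the RegionServer or Impala daemon would be one more entry in the list, typically with a much larger memory figure.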

Applying the Configuration

Once the final properties are calculated, they can be entered in yarn-site.xml or the YARN Configuration section of Cloudera Manager. Once these properties are propagated into the cluster, you can verify them.
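For clusters that are not managed through Cloudera Manager, the properties go into yarn-site.xml as `<property>` elements with a `<name>` and a `<value>`. The sketch below renders such a fragment from a Python dictionary. The `render_yarn_site` helper is a hypothetical name, and the values are illustrative only.

```python
import xml.etree.ElementTree as ET


def render_yarn_site(properties):
    """Build a Hadoop-style <configuration> document from a dict."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    if hasattr(ET, "indent"):  # pretty-printing is available in Python 3.9+
        ET.indent(root)
    return ET.tostring(root, encoding="unicode")


# Illustrative values; use the numbers you calculated for your own nodes.
xml_text = render_yarn_site({
    "yarn.nodemanager.resource.memory-mb": 90000,
    "yarn.nodemanager.resource.vcores": 60,
})
print(xml_text)
```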

Verifying YARN Configuration in the RM UI

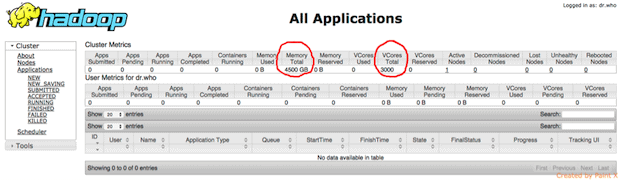

As mentioned before, the ResourceManager has a snapshot of the available resources on the YARN cluster.

Example: Assume you have the following configuration on your 50 Worker nodes:

yarn.nodemanager.resource.memory-mb = 90000

yarn.nodemanager.resource.vcores = 60

Doing the math, your cluster totals should be:

- memory: 50 * 90 GB = 4500 GB = 4.5 TB (treating 90000 MB as 90 GB)

- vcores: 50 * 60 vcores = 3000 vcores
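The same arithmetic, written out as a short Python sketch. The constant names are chosen for this illustration, and the unit conversion follows the post in using decimal units (1 GB = 1000 MB).

```python
WORKER_NODES = 50
MEMORY_MB_PER_NODE = 90000  # yarn.nodemanager.resource.memory-mb
VCORES_PER_NODE = 60        # yarn.nodemanager.resource.vcores

total_memory_mb = WORKER_NODES * MEMORY_MB_PER_NODE
total_vcores = WORKER_NODES * VCORES_PER_NODE

# Decimal units, matching the rounding used in the text above.
total_memory_gb = total_memory_mb / 1000
total_memory_tb = total_memory_gb / 1000

print(f"memory: {total_memory_mb} MB = {total_memory_gb:.0f} GB = {total_memory_tb} TB")
print(f"vcores: {total_vcores}")
```

A tool that reports memory in binary units (dividing by 1024 at each step) would show the same total as roughly 4.29 TB, so a small difference from the hand calculation is not necessarily a misconfiguration.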

On the ResourceManager Web UI page, the cluster metrics table shows the total memory and total vcores for the cluster, as seen in Figure 2 below.

Figure 2: Verifying YARN Cluster Resources on ResourceManager Web UI
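The same check can be scripted rather than read off the web page. The sketch below assumes the ResourceManager REST endpoint `/ws/v1/cluster/metrics` is reachable and that its `clusterMetrics` object reports `totalMB` and `totalVirtualCores`. The base URL you pass in (for example, a host name with the default web port 8088) depends on your cluster, and the function names are hypothetical.

```python
import json
from urllib.request import urlopen


def fetch_cluster_metrics(rm_base_url):
    """Fetch the clusterMetrics object from the ResourceManager REST API."""
    with urlopen(f"{rm_base_url}/ws/v1/cluster/metrics") as response:
        return json.load(response)["clusterMetrics"]


def check_totals(metrics, expected_memory_mb, expected_vcores):
    """Return a list of mismatches (empty if both totals match)."""
    problems = []
    if metrics["totalMB"] != expected_memory_mb:
        problems.append(
            f"memory: expected {expected_memory_mb} MB, got {metrics['totalMB']} MB"
        )
    if metrics["totalVirtualCores"] != expected_vcores:
        problems.append(
            f"vcores: expected {expected_vcores}, got {metrics['totalVirtualCores']}"
        )
    return problems


# Hand-written sample payload so the sketch runs without a cluster.
# On a real cluster, replace it with fetch_cluster_metrics(<your RM URL>).
sample_metrics = {"totalMB": 4500000, "totalVirtualCores": 3000}
print(check_totals(sample_metrics, 50 * 90000, 50 * 60))
```

If a Worker node is down or has not yet registered with the ResourceManager, the reported totals will be lower than the calculated ones, so a mismatch is a prompt to look at node status as well as configuration.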

Container Configuration

At this point, the YARN Cluster is properly set up in terms of Resources. YARN uses these resource limits for allocation, and enforces those limits on the cluster.

- YARN Container Memory Sizing

  - Minimum: yarn.scheduler.minimum-allocation-mb

  - Maximum: yarn.scheduler.maximum-allocation-mb

- YARN Container VCore Sizing

  - Minimum: yarn.scheduler.minimum-allocation-vcores

  - Maximum: yarn.scheduler.maximum-allocation-vcores

- YARN Container Allocation Size Increments

  - Memory Increment: yarn.scheduler.increment-allocation-mb

  - VCore Increment: yarn.scheduler.increment-allocation-vcores
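To see how the minimum, maximum, and increment properties could interact, here is a simplified model in Python. It is an assumption for illustration rather than a description of any particular scheduler: it assumes a request is raised to the minimum, rounded up to the next multiple of the increment, and capped at the maximum, and that a request above the maximum is rejected. The function name and all values are hypothetical.

```python
def normalize_request(requested, minimum, maximum, increment):
    """Simplified model of sizing a Container request (assumed behavior)."""
    if requested > maximum:
        raise ValueError("request exceeds the configured maximum")
    floored = max(requested, minimum)
    # Integer round-up to the next multiple of the increment.
    rounded = (floored + increment - 1) // increment * increment
    return min(rounded, maximum)


# Hypothetical memory settings in MB, for illustration only.
settings = {"minimum": 1024, "maximum": 8192, "increment": 512}

print(normalize_request(1500, **settings))  # 1536
print(normalize_request(100, **settings))   # 1024
```

Under this model, a large increment wastes resources because every request is padded up to the next multiple.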

Restrictions and recommendations for Container values:

- Memory properties:

  - Minimum required value of 0 for yarn.scheduler.minimum-allocation-mb.

  - Any of the memory sizing properties must be less than or equal to yarn.nodemanager.resource.memory-mb.

  - Maximum value must be greater than or equal to the minimum value.

- VCore properties:

  - Minimum required value of 0 for yarn.scheduler.minimum-allocation-vcores.

  - Any of the vcore sizing properties must be less than or equal to yarn.nodemanager.resource.vcores.

  - Maximum value must be greater than or equal to the minimum value.

  - Recommended value of 1 for yarn.scheduler.increment-allocation-vcores. Higher values will likely be wasteful.

Note that YARN is easy to misconfigure. If the Container memory request minimum (yarn.scheduler.minimum-allocation-mb) is larger than the memory available per node (yarn.nodemanager.resource.memory-mb), then it would be impossible for YARN to fulfill that request. The same reasoning applies to the Container vcore request minimum (yarn.scheduler.minimum-allocation-vcores) and the vcores available per node (yarn.nodemanager.resource.vcores).
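The restrictions above are simple enough to check mechanically before a configuration is deployed. The following Python sketch is one way to do that; the `validate_container_settings` function is a hypothetical helper, it checks only the minimum and maximum properties, and the configuration values are illustrative.

```python
def validate_container_settings(conf):
    """Return a list of violated restrictions (empty when none are found)."""
    problems = []
    resources = (
        ("mb", "yarn.nodemanager.resource.memory-mb"),
        ("vcores", "yarn.nodemanager.resource.vcores"),
    )
    for suffix, node_key in resources:
        min_key = f"yarn.scheduler.minimum-allocation-{suffix}"
        max_key = f"yarn.scheduler.maximum-allocation-{suffix}"
        if conf[min_key] < 0:
            problems.append(f"{min_key} must be at least 0")
        if conf[max_key] < conf[min_key]:
            problems.append(f"{max_key} must be >= {min_key}")
        for key in (min_key, max_key):
            if conf[key] > conf[node_key]:
                problems.append(f"{key} must be <= {node_key}")
    return problems


# The misconfiguration described above: the Container minimum is larger
# than the memory available on a node. All values are illustrative.
bad_conf = {
    "yarn.nodemanager.resource.memory-mb": 90000,
    "yarn.nodemanager.resource.vcores": 60,
    "yarn.scheduler.minimum-allocation-mb": 100000,
    "yarn.scheduler.maximum-allocation-mb": 120000,
    "yarn.scheduler.minimum-allocation-vcores": 1,
    "yarn.scheduler.maximum-allocation-vcores": 4,
}
for problem in validate_container_settings(bad_conf):
    print(problem)
```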

MapReduce Configuration

The Map task memory property is mapreduce.map.memory.mb. The Reduce task memory property is mapreduce.reduce.memory.mb. Since both types of tasks must fit within a Container, neither value should exceed the Container maximum size (yarn.scheduler.maximum-allocation-mb). (We won’t get into details about Java and the YARN properties that affect launching the Java Virtual Machine here. They may be covered in a future installment.)

Special Case: ApplicationMaster Memory Configuration

The property yarn.app.mapreduce.am.resource.mb sets the memory size for the MapReduce ApplicationMaster. Since the ApplicationMaster must fit within a Container, the value should not exceed the Container maximum (yarn.scheduler.maximum-allocation-mb).
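A quick way to catch MapReduce memory settings that cannot fit in a Container is to compare each one against the Container maximum. The Python sketch below does this for the Map task, Reduce task, and ApplicationMaster properties. The `oversized_requests` function is a hypothetical helper, the values are illustrative, and treating a value equal to the maximum as acceptable is an assumption of this sketch.

```python
MAPREDUCE_MEMORY_PROPERTIES = (
    "mapreduce.map.memory.mb",
    "mapreduce.reduce.memory.mb",
    "yarn.app.mapreduce.am.resource.mb",
)


def oversized_requests(conf):
    """Return the MapReduce memory properties that exceed the Container maximum."""
    container_max = conf["yarn.scheduler.maximum-allocation-mb"]
    return [
        name for name in MAPREDUCE_MEMORY_PROPERTIES
        if conf[name] > container_max
    ]


# Illustrative values only: the ApplicationMaster request is too large here.
example_conf = {
    "yarn.scheduler.maximum-allocation-mb": 8192,
    "mapreduce.map.memory.mb": 2048,
    "mapreduce.reduce.memory.mb": 4096,
    "yarn.app.mapreduce.am.resource.mb": 16384,
}
print(oversized_requests(example_conf))
```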

Conclusion

You should now be able to:

- Understand basic cluster configuration for the case of an imaginary YARN-only cluster.

- Calculate a starting point for a realistic cluster configuration, taking into account the components that prevent allocating all of a node’s resources to YARN. This assumes the following categories of overhead:

  - Operating system overhead (Linux, Windows)

  - Administrative services, such as Cloudera Manager Agents

  - Required services, such as HDFS

  - Competing Master/Worker services (HBase, Impala)

  - Remaining resources going to YARN

- Know where to look to verify the cluster’s configuration.

- Understand that further tuning will be needed based on analysis of the applications running on the cluster. Also, there may be other configuration overhead not listed in this post to compensate for on the cluster’s Worker nodes.

Next Time…

Part 3 will introduce the basics of scheduling within YARN.

Ray Chiang is a Software Engineer at Cloudera.

Dennis Dawson is a Senior Technical Writer at Cloudera.