In Apache Hadoop 2, YARN and MapReduce 2 (MR2) are long-needed upgrades for scheduling, resource management, and execution in Hadoop. At their core, the improvements separate cluster resource management capabilities from MapReduce-specific logic. They enable Hadoop to share resources dynamically between MapReduce and other parallel processing frameworks, such as Cloudera Impala; allow more sensible and finer-grained resource configuration for better cluster utilization; and permit Hadoop to scale to accommodate more and larger jobs.

In this post, users of CDH (Cloudera’s distribution of Hadoop and related projects) who program MapReduce jobs will get a guide to the architectural and user-facing differences between MapReduce 1 (MR1) and MR2. (MR2 is the default processing framework in CDH 5, although MR1 will continue to be supported.) Operators/administrators can read a similar post designed for them here.

Terminology and Architecture

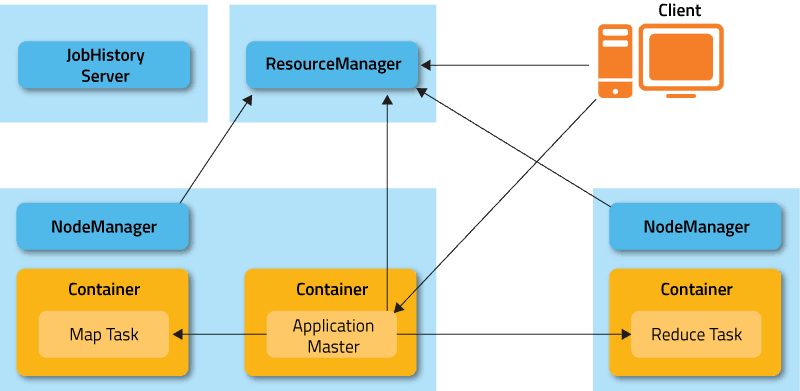

In Hadoop 2, MapReduce is split into two components: The cluster resource management capabilities have become YARN, while the MapReduce-specific capabilities remain MapReduce. In the former MR1 architecture, the cluster was managed by a service called the JobTracker. TaskTracker services lived on each node and would launch tasks on behalf of jobs. The JobTracker would serve information about completed jobs. In MR2, the functions of the JobTracker are divided into three services. The ResourceManager is a persistent YARN service that receives and runs applications (a MapReduce job is an application) on the cluster. It contains the scheduler, which, as in MR1, is pluggable.

The MapReduce-specific capabilities of the JobTracker have moved into the MapReduce Application Master, one of which is started to manage each MapReduce job and terminated when the job completes. The JobTracker’s function of serving information about completed jobs has been moved to the JobHistoryServer. The TaskTracker has been replaced with the NodeManager, a YARN service that manages resources and deployment on a node. NodeManager is responsible for launching containers, each of which can house a map or reduce task.

The figure above illustrates the MR2 architecture.

The new architecture has a couple of advantages. First, by breaking up the JobTracker into a few different services, it avoids many of the scaling issues facing MR1. More importantly, it makes it possible to run frameworks other than MapReduce on a Hadoop cluster. For example, Impala can also run on YARN and share resources on a cluster with MapReduce.

Writing and Running Jobs

Virtually every job compiled against MR1 in CDH 4 will be able to run without any modifications on an MR2 cluster. We won’t pay much attention here to jobs written/compiled against CDH 3 or Apache’s Hadoop 1 releases, but in general they will require a recompile to run on CDH 5, just as they do for CDH 4.

Java API Compatibility from CDH 4

MR2 supports both the old (“mapred”) and new (“mapreduce”) MapReduce APIs used for MR1, with a few caveats. The difference between the old and new APIs, which concerns user-facing changes, should not be confused with the difference between MR1 and MR2, which concerns changes to the underlying framework. CDH 4 and CDH 5 support the new and old MapReduce APIs as well as both MR1 and MR2. (Now, go back and read this paragraph again, because the naming is often a source of confusion.)

Most applications that use @Public/@Stable APIs will be binary-compatible from CDH 4, meaning that compiled binaries should be able to run without modification on the new framework. Source compatibility may be broken for applications that use a few obscure APIs that are technically public, but rarely needed and primarily exist for internal use. These APIs are detailed below.

| Upgrade path | Binary Incompatibilities | Source Incompatibilities |
| --- | --- | --- |
| CDH 4 MR1 -> CDH 5 MR1 | None | None |
| CDH 4 MR1 -> CDH 5 MR2 | None | Rare |
| CDH 5 MR1 -> CDH 5 MR2 | None | Rare |

“Source incompatibility” means that code changes will be required to compile. Source incompatibility is orthogonal to binary compatibility — binaries for an application that is binary-compatible, but not source-compatible, will continue to run fine on the new framework, but code changes will be required to regenerate those binaries.

The following are the known source incompatibilities.

- KeyValueLineRecordReader#getProgress and LineRecordReader#getProgress now throw IOExceptions in both the old and new APIs. Their superclass method, RecordReader#getProgress, already did this, but source compatibility will be broken for the rare code that used it without a try/catch block.

- FileOutputCommitter#abortTask now throws an IOException. Its superclass method always did this, but source compatibility will be broken for the rare code that used it without a try/catch block. This was fixed in CDH 4.3 MR1 to be compatible with MR2.

- Job#getDependentJobs, an API marked @Evolving, now returns a List instead of an ArrayList.
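To make the effect of these signature changes concrete, here is a minimal sketch. It deliberately uses hypothetical stand-in classes (`ReaderStandIn`, a local `getDependentJobs`) that only mimic the signatures described above, so it compiles without Hadoop on the classpath; it is not the Hadoop code itself. The pattern to carry over is the same: handle the declared IOException around getProgress, and hold the result of getDependentJobs in a List rather than an ArrayList.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-ins that mimic only the signatures discussed above;
// they are NOT the Hadoop classes.
final class SourceCompatSketch {

    /** Mimics a record reader whose getProgress() declares IOException. */
    static final class ReaderStandIn {
        private final long bytesRead;
        private final long totalBytes;

        ReaderStandIn(long bytesRead, long totalBytes) {
            this.bytesRead = bytesRead;
            this.totalBytes = totalBytes;
        }

        float getProgress() throws IOException {
            if (totalBytes <= 0) {
                throw new IOException("split length unknown");
            }
            return Math.min(1.0f, (float) bytesRead / totalBytes);
        }
    }

    /** Mimics a method whose declared return type is List, not ArrayList. */
    static List<String> getDependentJobs() {
        List<String> jobs = new ArrayList<>();
        jobs.add("job-a");
        return jobs;
    }

    /** Caller that compiles against a getProgress() that declares IOException. */
    static float safeProgress(ReaderStandIn reader) {
        try {
            return reader.getProgress();
        } catch (IOException e) {
            return 0.0f; // illustrative choice: report no progress on failure
        }
    }

    public static void main(String[] args) {
        System.out.println(safeProgress(new ReaderStandIn(50, 200)));
        // Declaring the variable as List keeps this line source-compatible.
        List<String> dependents = getDependentJobs();
        System.out.println(dependents);
    }
}
```

Code that had assigned the result to an ArrayList variable would need either this wider declaration or an explicit copy.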

Compiling Jobs Against MR2

If you’re using Maven, compiling against MR2 requires including the same artifact, hadoop-client. Changing the version to a Hadoop 2 version (for example, using 2.2.0-cdh5.0.0 instead of 2.2.0-mr1-cdh5.0.0) should be enough. If you’re not using Maven, compiling against all the Hadoop jars is recommended. A comprehensive list of Hadoop Maven artifacts is available here.

Job Configuration

As in MR1, job configuration options can be specified on the command line, in Java code, or in the mapred-site.xml on the client machine in the same way they previously were. Most job configuration options, with rare exceptions, that were available in MR1 work in MR2 as well. For consistency and clarity, many options have been given new names. The older names are deprecated, but will still work for the time being. The exceptions are mapred.child.ulimit and all options relating to JVM reuse, which are no longer supported.
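As a rough illustration of the renaming, the sketch below checks a job's option names against a small lookup table. The `ConfigNameSketch` class and its helpers are hypothetical, the three renamed pairs are a small sample that I believe matches Hadoop's deprecation table, and `mapred.job.reuse.jvm.num.tasks` is assumed here to be the MR1 JVM-reuse option; verify all of them against the deprecated-properties list for your release.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical helper; the tables are illustrative samples, not a complete list.
final class ConfigNameSketch {

    // Deprecated MR1-era name -> preferred name (assumed sample).
    static final Map<String, String> RENAMED = new LinkedHashMap<>();

    // Options that are no longer supported under MR2.
    static final Set<String> UNSUPPORTED = new HashSet<>(Arrays.asList(
            "mapred.child.ulimit",
            "mapred.job.reuse.jvm.num.tasks")); // assumed JVM-reuse option name

    static {
        RENAMED.put("mapred.job.name", "mapreduce.job.name");
        RENAMED.put("mapred.map.tasks", "mapreduce.job.maps");
        RENAMED.put("mapred.reduce.tasks", "mapreduce.job.reduces");
    }

    /** Returns the preferred name, or the key itself if it was not renamed. */
    static String preferredName(String key) {
        return RENAMED.getOrDefault(key, key);
    }

    static boolean isUnsupported(String key) {
        return UNSUPPORTED.contains(key);
    }

    public static void main(String[] args) {
        for (String key : new String[] {"mapred.reduce.tasks", "mapred.child.ulimit"}) {
            if (isUnsupported(key)) {
                System.out.println(key + " is not supported in MR2");
            } else {
                System.out.println(key + " -> " + preferredName(key));
            }
        }
    }
}
```

A check like this can be run over an existing mapred-site.xml or driver code to find options worth updating before the deprecated names stop working.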

Submitting and Monitoring Jobs with the Command Line

The MapReduce command-line interface remains entirely compatible. Use of the hadoop command-line tool to run MapReduce-related commands (pipes, job, queue, classpath, historyserver, distcp, archive) is deprecated, but still works. The mapred command-line tool is preferred for these commands.

Requesting Resources

An MR2 job submission includes the amount of resources to reserve for each map and reduce task. As in MR1, the amount of memory requested is controlled by the mapreduce.map.memory.mb and mapreduce.reduce.memory.mb properties. MR2 also adds parameters that control how much processing power to reserve for each task. The mapreduce.map.cpu.vcores and mapreduce.reduce.cpu.vcores properties express how much parallelism a map or reduce task can use. Keep these at their default of 1 unless your code explicitly spawns extra compute-intensive threads.
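One way to picture a resource request is as a handful of property settings passed at submission time. The sketch below assembles the four properties named above into `-D` style command-line options. The `ResourceRequestSketch` class is hypothetical, the memory values are arbitrary examples rather than recommendations, and it assumes the job's driver parses generic options (for instance, by running through ToolRunner).

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper; the values are examples only.
final class ResourceRequestSketch {

    /** Per-task resource settings; memory in MB, CPU in virtual cores. */
    static Map<String, String> exampleRequest() {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("mapreduce.map.memory.mb", "1024");
        props.put("mapreduce.reduce.memory.mb", "2048");
        props.put("mapreduce.map.cpu.vcores", "1");    // default
        props.put("mapreduce.reduce.cpu.vcores", "1"); // default
        return props;
    }

    /** Renders each property as a -Dname=value option, in insertion order. */
    static List<String> toGenericOptions(Map<String, String> props) {
        List<String> options = new ArrayList<>();
        for (Map.Entry<String, String> entry : props.entrySet()) {
            options.add("-D" + entry.getKey() + "=" + entry.getValue());
        }
        return options;
    }

    public static void main(String[] args) {
        System.out.println(String.join(" ", toGenericOptions(exampleRequest())));
    }
}
```

The same names and values could equally be set in Java code or in mapred-site.xml on the client machine.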

Web UI

In MR1, the JobTracker web UI served detailed information about the state of the cluster and the jobs currently and recently running on it. It also contained the job history page, which served information from disk about older jobs.

The MR2 web UI provides the same information structured in the same way, but has been revamped with a new look and feel. The ResourceManager UI, which includes information about running applications and the state of the cluster, is now served by default on port 8088 of the ResourceManager host. The job history UI is now served by default on port 19888 of the JobHistoryServer host. Jobs can be searched and viewed there just as they could in MR1.

Because the ResourceManager is meant to be agnostic to many of the concepts in MapReduce, it cannot host job information directly. Instead, it proxies to a web UI that can. If the job is running, this is the relevant MapReduce Application Master; if it has completed, this is the JobHistoryServer. In this sense, the user experience is similar to that of MR1, but the information is coming from different places.
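The routing described above can be summarized in a few lines. This is only a sketch of the decision, not how the ResourceManager proxy is implemented: the `JobUiRouting` class, the `JobState` enum, and the host names are hypothetical, and the ports are the defaults 8088 and 19888.

```java
// Hypothetical sketch of which service backs the job pages in MR2.
final class JobUiRouting {

    enum JobState { RUNNING, COMPLETED }

    static final int RESOURCE_MANAGER_UI_PORT = 8088; // default port
    static final int JOB_HISTORY_UI_PORT = 19888;     // default port

    /** Entry point for cluster and application information. */
    static String resourceManagerUi(String resourceManagerHost) {
        return "http://" + resourceManagerHost + ":" + RESOURCE_MANAGER_UI_PORT;
    }

    /** Entry point for information about completed jobs. */
    static String jobHistoryUi(String jobHistoryHost) {
        return "http://" + jobHistoryHost + ":" + JOB_HISTORY_UI_PORT;
    }

    /** Names the service that actually serves a job's details. */
    static String servedBy(JobState state) {
        switch (state) {
            case RUNNING:
                return "MapReduce Application Master";
            case COMPLETED:
                return "JobHistoryServer";
            default:
                throw new IllegalArgumentException("unknown state: " + state);
        }
    }

    public static void main(String[] args) {
        System.out.println(resourceManagerUi("rm.example.com"));
        System.out.println(jobHistoryUi("jhs.example.com"));
        System.out.println(servedBy(JobState.RUNNING));
    }
}
```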

Conclusion

I hope you now have a good understanding of the differences between MR1 and MR2, as well as how to make a seamless transition to the latter. As you can see, the fact that both APIs, as well as both frameworks, are supported across CDH 4 and CDH 5 means that MapReduce programmers can move forward to MR2 with very few concerns.

Sandy Ryza is a Software Engineer at Cloudera and a Hadoop Committer.