Cloudera Manager lets you add a YARN service in the same way you would add any other Cloudera Manager-managed service.

In Apache Hadoop 2, YARN and MapReduce 2 (MR2) are long-needed upgrades for scheduling, resource management, and execution in Hadoop. At their core, the improvements separate cluster resource management capabilities from MapReduce-specific logic. They enable Hadoop to share resources dynamically between MapReduce and other parallel processing frameworks, such as Cloudera Impala; allow more sensible and finer-grained resource configuration for better cluster utilization; and permit Hadoop to scale to accommodate more and larger jobs.

In this post, operators of Cloudera’s distribution of Hadoop and related projects (CDH) who want to upgrade their existing setups to run MR2 on top of YARN will get a guide to the architectural and user-facing differences between MR1 and MR2. (MR2 is the default processing framework in CDH 5, although MR1 will continue to be supported.) Hadoop users/MapReduce programmers can read a similar post designed for them here.

Terminology and Architecture

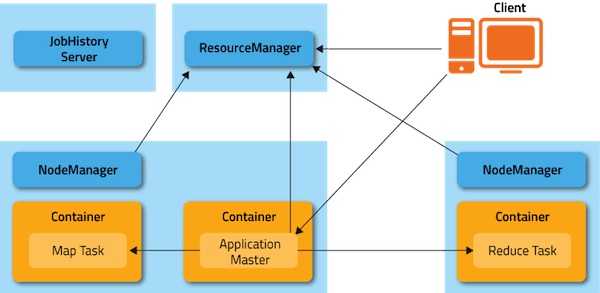

In Hadoop 2, MapReduce is split into two components: The cluster resource management capabilities have become YARN, while the MapReduce-specific capabilities remain MapReduce. In the former MR1 architecture, the cluster was managed by a service called the JobTracker. TaskTracker services lived on each node and would launch tasks on behalf of jobs. The JobTracker would serve information about completed jobs. In MR2, the functions of the JobTracker are divided into three services. The ResourceManager is a persistent YARN service that receives and runs applications (a MapReduce job is an application) on the cluster. It contains the scheduler, which, as in MR1, is pluggable.

The MapReduce-specific capabilities of the JobTracker have moved into the MapReduce Application Master, one of which is started to manage each MapReduce job and terminated when the job completes. The JobTracker’s function of serving information about completed jobs has been moved to the JobHistoryServer. The TaskTracker has been replaced with the NodeManager, a YARN service that manages resources and deployment on a node. NodeManager is responsible for launching containers, each of which can house a map or reduce task.

The MR2 architecture is illustrated in the figure above.

The new architecture has a couple of advantages. First, by breaking up the JobTracker into a few different services, it avoids many of the scaling issues facing MR1. Second, and more importantly, it makes it possible to run frameworks other than MapReduce on a Hadoop cluster. For example, Impala can also run on YARN and share resources on a cluster with MapReduce.

Configuring and Running MR2 Clusters

Migrating Configurations

Because MR1 functionality has been split into two components in Hadoop 2, MapReduce cluster configuration options have been split into YARN configuration options, which go in yarn-site.xml; and MapReduce configuration options, which go in mapred-site.xml. Many have been given new names to reflect the shift. As JobTrackers and TaskTrackers no longer exist in MR2, all configuration options pertaining to them no longer exist, although many have corresponding options for the ResourceManager, NodeManager, and JobHistoryServer. We’ll follow up with a full translation table in a future post.

A minimal configuration required to run MR2 jobs on YARN is:

yarn-site.xml:

- yarn.resourcemanager.hostname: your.hostname.com

- yarn.nodemanager.aux-services: mapreduce_shuffle

mapred-site.xml:

- mapreduce.framework.name: yarn
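As a rough illustration, the minimal settings above can be written out in the standard Hadoop configuration file layout (a `configuration` element containing `property` elements, each with a `name` and a `value`). The Python sketch below is only one way to produce such files. The helper name `render_hadoop_config` is hypothetical, and `your.hostname.com` is the placeholder from the text, not a real host.

```python
import xml.etree.ElementTree as ET


def render_hadoop_config(properties):
    """Render a dict of property names and values as Hadoop configuration XML."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    # Pretty-print when the interpreter supports it (Python 3.9 and later).
    if hasattr(ET, "indent"):
        ET.indent(root, space="  ")
    return ET.tostring(root, encoding="unicode")


# Minimal settings listed in the text; the host name is a placeholder.
yarn_site = {
    "yarn.resourcemanager.hostname": "your.hostname.com",
    "yarn.nodemanager.aux-services": "mapreduce_shuffle",
}
mapred_site = {"mapreduce.framework.name": "yarn"}

yarn_site_xml = render_hadoop_config(yarn_site)
mapred_site_xml = render_hadoop_config(mapred_site)

print(yarn_site_xml)
print(mapred_site_xml)
```

The two strings would be saved as yarn-site.xml and mapred-site.xml respectively in the cluster's configuration directory.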

Resource Configuration

One of the larger changes in MR2 is the way that resources are managed. In MR1, each node was configured with a fixed number of map slots and a fixed number of reduce slots. YARN changes this in two ways. First, there is no distinction between resources available for maps and resources available for reduces: all resources are available for both. Second, the notion of slots has been discarded, and resources are now configured in terms of amounts of memory (in megabytes) and CPU (in “virtual cores”, which are described below). Resource configuration is an inherently difficult topic, and the added flexibility that YARN provides in this regard also comes with added complexity. Cloudera Manager will pick sensible values automatically, but if you are setting up your cluster manually or are just interested in the details, read on.

Resource Requests

From the perspective of a developer requesting resource allocations for a job’s tasks, nothing needs to be changed. Map and reduce task memory requests still work and, furthermore, tasks that will use multiple threads can request more than one core with the mapreduce.map.cpu.vcores and mapreduce.reduce.cpu.vcores properties.

Configuring Node Capacities

In MR1, the mapred.tasktracker.map.tasks.maximum and mapred.tasktracker.reduce.tasks.maximum properties dictated how many map and reduce slots each TaskTracker had. These properties no longer exist in YARN. Instead, YARN uses yarn.nodemanager.resource.memory-mb and yarn.nodemanager.resource.cpu-vcores, which control the amount of memory and the number of virtual cores on each node that are available to maps and reduces alike. If you were using Cloudera Manager to configure these properties automatically, it will take care of this in MR2 as well. If you are configuring these properties manually, set them to the amount of memory and number of cores on the machine after subtracting the resources needed for other services.

Virtual Cores

To better handle varying CPU requests, YARN supports virtual cores (vcores), a resource meant to express parallelism. The “virtual” in the name is somewhat misleading: on the NodeManager, vcores should be configured equal to the number of physical cores on the machine. Tasks should be requested with vcores equal to the number of cores they can saturate at once. Currently vcores are very coarse, and tasks will rarely want to ask for more than one of them, but a complementary axis that represents processing power will likely be added in the future to enable finer-grained resource configuration.

Rounding Request Sizes

Also noteworthy are the yarn.scheduler.minimum-allocation-mb, yarn.scheduler.minimum-allocation-vcores, yarn.scheduler.increment-allocation-mb, and yarn.scheduler.increment-allocation-vcores properties, which default to 1024, 1, 512, and 1, respectively. If you submit tasks with resource requests lower than the minimum-allocation values, their requests will be set to these values. If you submit tasks with resource requests that are not multiples of the increment-allocation values, their requests will be rounded up to the nearest increments.
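The rounding rules just described can be sketched in a few lines of Python. This is a simplified model of the behavior as stated in the text, not the scheduler's actual code. The function names are hypothetical, and the constants are the default values quoted above.

```python
# Defaults quoted in the text for the minimum-allocation and
# increment-allocation properties.
MIN_ALLOCATION_MB = 1024
INCREMENT_ALLOCATION_MB = 512
MIN_ALLOCATION_VCORES = 1
INCREMENT_ALLOCATION_VCORES = 1


def normalize(requested, minimum, increment):
    """Raise a request to the minimum, then round up to a multiple of the increment."""
    raised = max(requested, minimum)
    # Integer ceiling division avoids floating-point rounding.
    return (raised + increment - 1) // increment * increment


def normalize_request(memory_mb, vcores):
    """Apply the rounding rules to a (memory, vcores) request."""
    return (
        normalize(memory_mb, MIN_ALLOCATION_MB, INCREMENT_ALLOCATION_MB),
        normalize(vcores, MIN_ALLOCATION_VCORES, INCREMENT_ALLOCATION_VCORES),
    )


print(normalize_request(300, 1))   # (1024, 1): raised to the minimum
print(normalize_request(1100, 1))  # (1536, 1): rounded up to the next 512 MB step
print(normalize_request(2048, 2))  # (2048, 2): already a multiple, left unchanged
```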

To make all of this more concrete, let’s explore an example. Let’s say each node in your cluster has 24GB of memory and 6 cores. Other services running on the nodes require 4GB and 1 core, so you set yarn.nodemanager.resource.memory-mb to 20480 and yarn.nodemanager.resource.cpu-vcores to 5. If you leave the map and reduce task defaults of 1024MB and 1 virtual core intact, you will have at most 5 tasks running at the same time. If you want each of your tasks to use 5GB, you would set their mapreduce.(map|reduce).memory.mb to 5120, which would limit you to 4 tasks running at the same time.
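The arithmetic in this example can be checked with a short Python sketch. It assumes that a node's concurrency is capped by whichever resource runs out first, and it ignores the container used by each job's Application Master. The function name is hypothetical.

```python
def max_concurrent_tasks(node_memory_mb, node_vcores, task_memory_mb, task_vcores):
    """Return how many identical tasks fit on one node at the same time."""
    by_memory = node_memory_mb // task_memory_mb
    by_vcores = node_vcores // task_vcores
    # The scarcer resource determines the limit.
    return min(by_memory, by_vcores)


# 24 GB and 6 cores per node, minus 4 GB and 1 core for other services.
node_memory_mb = (24 - 4) * 1024  # yarn.nodemanager.resource.memory-mb = 20480
node_vcores = 6 - 1               # yarn.nodemanager.resource.cpu-vcores = 5

# Default task size (1024 MB, 1 vcore): limited by vcores.
print(max_concurrent_tasks(node_memory_mb, node_vcores, 1024, 1))  # 5

# 5 GB tasks (5120 MB, 1 vcore): limited by memory.
print(max_concurrent_tasks(node_memory_mb, node_vcores, 5120, 1))  # 4
```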

Scheduler Configuration

Cloudera supports the use of the Fair and FIFO schedulers in MR2. Fair Scheduler allocation files require changes in light of the new way that resources work. The minMaps, maxMaps, minReduces, and maxReduces queue properties have been replaced with a minResources property and a maxResources property. Instead of taking a number of slots, these properties take a value like “1024MB, 3 vcores”. By default, the MR2 Fair Scheduler will attempt to equalize memory allocations in the same way it attempted to equalize slot allocations in MR1. The MR2 Fair Scheduler also contains a number of new features, including hierarchical queues and fairness based on multiple resources.

Administration Commands

The jobtracker and tasktracker commands, which start the JobTracker and TaskTracker, are no longer supported because these services no longer exist. They are replaced with yarn resourcemanager and yarn nodemanager, which start the ResourceManager and NodeManager respectively. hadoop mradmin is no longer supported; instead, use yarn rmadmin. The new admin commands mimic the functionality of the old ones, allowing nodes, queues, and ACLs to be refreshed while the ResourceManager is running.

Security

The following section outlines the additional changes needed to migrate a secure cluster.

New YARN Kerberos service principals should be created for the ResourceManager and NodeManager, using the pattern used for other Hadoop services (yarn@). The mapred principal should still be used for the JobHistoryServer. If you’re using Cloudera Manager to configure security, that will be taken care of automatically.

As in MR1, a configuration must be set to have the user that submits a job own its task processes. The equivalent of MR1’s LinuxTaskController is the LinuxContainerExecutor. In a secure setup, NodeManager configurations should set yarn.nodemanager.container-executor.class to org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor. Properties set in the taskcontroller.cfg configuration file should be migrated to their analogous properties in the container-executor.cfg file.

In secure setups, configuring hadoop-policy.xml allows administrators to set up access control lists on internal protocols. The following is a table of MR1 options and their MR2 equivalents:

| MR1 | MR2 | Comments |
| --- | --- | --- |
| security.task.umbilical.protocol.acl | security.job.task.protocol.acl | As in MR1, this should never be set to anything other than * |
| security.inter.tracker.protocol.acl | security.resourcetracker.protocol.acl | |
| security.job.submission.protocol.acl | security.applicationclient.protocol.acl | |
| security.admin.operations.protocol.acl | security.resourcemanager-administration.protocol.acl | |
| | security.applicationmaster.protocol.acl | No MR1 equivalent |
| | security.containermanagement.protocol.acl | No MR1 equivalent |
| | security.resourcelocalizer.protocol.acl | No MR1 equivalent |
| | security.job.client.protocol.acl | No MR1 equivalent |
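For operators with an existing hadoop-policy.xml, the renames in the table could be applied mechanically. The Python sketch below is one possible approach, assuming the file uses the standard Hadoop `configuration`/`property` layout. The function name `migrate_policy` and the sample users and group are hypothetical. The four ACLs with no MR1 equivalent are not added by this sketch and would need to be set separately.

```python
import xml.etree.ElementTree as ET

# MR1 property names and their MR2 equivalents, taken from the table above.
MR1_TO_MR2_ACL = {
    "security.task.umbilical.protocol.acl": "security.job.task.protocol.acl",
    "security.inter.tracker.protocol.acl": "security.resourcetracker.protocol.acl",
    "security.job.submission.protocol.acl": "security.applicationclient.protocol.acl",
    "security.admin.operations.protocol.acl":
        "security.resourcemanager-administration.protocol.acl",
}


def migrate_policy(xml_text):
    """Rename MR1 ACL properties to their MR2 names, keeping values unchanged."""
    root = ET.fromstring(xml_text)
    renamed = []
    for prop in root.findall("property"):
        name = prop.find("name")
        if name is not None and name.text in MR1_TO_MR2_ACL:
            renamed.append(name.text)
            name.text = MR1_TO_MR2_ACL[name.text]
    return ET.tostring(root, encoding="unicode"), renamed


# Hypothetical input: one MR1 ACL plus an unrelated property left untouched.
sample = """<configuration>
  <property>
    <name>security.job.submission.protocol.acl</name>
    <value>alice,bob hadoop-users</value>
  </property>
  <property>
    <name>security.client.protocol.acl</name>
    <value>*</value>
  </property>
</configuration>"""

migrated_xml, renamed = migrate_policy(sample)
print(renamed)
print(migrated_xml)
```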

Queue access control lists (ACLs) are now placed in the Fair Scheduler configuration file instead of the JobTracker configuration. A list of users and groups that can submit jobs to a queue can be placed in aclSubmitApps in the queue’s configuration. The queue administration ACL is not supported in CDH 5 Beta 1, but will be in a future release.

Ports

The following is a list of default ports used by MR2 and YARN, as well as the configuration properties used to configure them.

| Port | Use | Property |
| --- | --- | --- |
| 8032 | ResourceManager Client RPC | yarn.resourcemanager.address |
| 8030 | ResourceManager Scheduler RPC (for ApplicationMasters) | yarn.resourcemanager.scheduler.address |
| 8033 | ResourceManager Admin RPC | yarn.resourcemanager.admin.address |
| 8088 | ResourceManager Web UI and REST APIs | yarn.resourcemanager.webapp.address |
| 8031 | ResourceManager Resource Tracker RPC (for NodeManagers) | yarn.resourcemanager.resource-tracker.address |
| 8040 | NodeManager Localizer RPC | yarn.nodemanager.localizer.address |
| 8042 | NodeManager Web UI and REST APIs | yarn.nodemanager.webapp.address |
| 10020 | Job History RPC | mapreduce.jobhistory.address |
| 19888 | Job History Web UI and REST APIs | mapreduce.jobhistory.webapp.address |
| 13562 | Shuffle HTTP | mapreduce.shuffle.port |

High Availability (HA)

YARN supports ResourceManager HA to make a YARN cluster highly available; the underlying architecture of an Active/Standby pair is similar to JobTracker HA in MR1. A major improvement over MR1 is that, in YARN, the completed tasks of in-flight MapReduce jobs are not re-run on recovery after the ResourceManager is restarted or failed over.

Further, the configuration and setup have also been simplified. The main differences are:

- The failover controller has been moved from a separate ZKFC daemon into the ResourceManager itself, so there is no need to run an additional daemon.

- Clients, Applications, and NodeManagers do not require configuring a proxy-provider to talk to the active ResourceManager.

Below is a table with HA-related configs used in MR1 and their equivalents in YARN:

| MR1 | YARN/MR2 | Comments |
| --- | --- | --- |
| mapred.jobtrackers. | yarn.resourcemanager.ha.rm-ids | |
| mapred.ha.jobtracker.id | yarn.resourcemanager.ha.id | Unlike in MR1, this must be configured in YARN. |
| mapred.jobtracker… | yarn.resourcemanager.. | YARN/MR2 has different RPC ports for different functionalities. Each port-related config must be suffixed with an id. Note that there is noin YARN. |
| mapred.ha.jobtracker.rpc-address.. | yarn.resourcemanager.ha.admin.address | |
| mapred.ha.fencing.methods | yarn.resourcemanager.ha.fencer | Not required to be specified |
| mapred.client.failover.* | None | Not required |
| | yarn.resourcemanager.ha.enabled | Enable HA |
| mapred.jobtracker.restart.recover | yarn.resourcemanager.recovery.enabled | Enable recovery of jobs after failover |
| | yarn.resourcemanager.store.class | org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore |
| mapred.ha.automatic-failover.enabled | yarn.resourcemanager.ha.auto-failover.enabled | Enable automatic failover |
| mapred.ha.zkfc.port | yarn.resourcemanager.ha.auto-failover.port | |
| mapred.job.tracker | yarn.resourcemanager.cluster.id | Cluster name |
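To show how the id suffix on port-related properties plays out, here is a hedged Python sketch that assembles a subset of HA-related yarn-site.xml properties for a pair of ResourceManagers. The function name, the ids `rm1` and `rm2`, and the host names are all hypothetical. The ports are the defaults from the Ports section, and the property set is illustrative rather than a complete HA configuration.

```python
# Default ResourceManager ports from the Ports section, keyed by the
# middle part of each address property name.
RM_ADDRESS_PORTS = {
    "address": 8032,
    "scheduler.address": 8030,
    "admin.address": 8033,
    "webapp.address": 8088,
    "resource-tracker.address": 8031,
}


def rm_ha_properties(rm_hosts, local_rm_id):
    """Build HA-related properties.

    rm_hosts maps each ResourceManager id to its host name;
    local_rm_id identifies the ResourceManager this file is written for.
    """
    if local_rm_id not in rm_hosts:
        raise ValueError("local_rm_id must be one of the configured ids")
    props = {
        "yarn.resourcemanager.ha.enabled": "true",
        "yarn.resourcemanager.ha.rm-ids": ",".join(rm_hosts),
        "yarn.resourcemanager.ha.id": local_rm_id,
        "yarn.resourcemanager.recovery.enabled": "true",
        "yarn.resourcemanager.store.class":
            "org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore",
    }
    # Each port-related property is suffixed with the ResourceManager id.
    for rm_id, host in rm_hosts.items():
        for key, port in RM_ADDRESS_PORTS.items():
            props[f"yarn.resourcemanager.{key}.{rm_id}"] = f"{host}:{port}"
    return props


# Hypothetical two-node setup.
ha_props = rm_ha_properties(
    {"rm1": "rm1.example.com", "rm2": "rm2.example.com"}, local_rm_id="rm1"
)
for name in sorted(ha_props):
    print(name, "=", ha_props[name])
```

Only yarn.resourcemanager.ha.id differs between the two ResourceManagers' files in this sketch; the rest of the generated properties are shared.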

Upgrading an MR1 Installation with Cloudera Manager

Cloudera Manager enables adding a YARN service in the same way that you would add any other Cloudera Manager-managed service. No further steps are required.

Manually Upgrading an MR1 Installation

The following packages are no longer used in MR2 and should be uninstalled:

- hadoop-0.20-mapreduce

- hadoop-0.20-mapreduce-jobtracker

- hadoop-0.20-mapreduce-tasktracker

- hadoop-0.20-mapreduce-zkfc

- hadoop-0.20-mapreduce-jobtrackerha

The following additional packages must be installed:

- hadoop-yarn

- hadoop-mapreduce

- hadoop-mapreduce-historyserver

- hadoop-yarn-resourcemanager

- hadoop-yarn-nodemanager

The next step is to look at all the service configs placed in mapred-site.xml and replace them with their corresponding YARN configs. Configs starting with “yarn” should be placed inside yarn-site.xml, not mapred-site.xml. Refer to the “Resource Configuration” section above for best practices on how to convert TaskTracker slot capacities (mapred.tasktracker.map.tasks.maximum and mapred.tasktracker.reduce.tasks.maximum) to NodeManager resource capacities (yarn.nodemanager.resource.memory-mb and yarn.nodemanager.resource.cpu-vcores), as well as how to convert configs in the Fair Scheduler allocations file, fair-scheduler.xml.

Finally, you can start the ResourceManager, NodeManagers, and JobHistoryServer.

Web UI

In MR1, the JobTracker web UI served detailed information about the state of the cluster and the jobs currently and recently running on it. It also contained the job history page, which served information from disk about older jobs.

The MR2 web UI provides the same information structured in the same way, but has been revamped with a new look and feel. The ResourceManager’s UI, which includes information about running applications and the state of the cluster, is now located by default on port 8088 of the ResourceManager host. The job history UI is now located by default on port 19888 of the JobHistoryServer host. You can search and view jobs there just as you could in MR1.

Because the ResourceManager is meant to be agnostic to many of the concepts in MapReduce, it cannot host job information directly. Instead, it proxies to a web UI that can. If the job is running, this is the relevant MapReduce Application Master; if it has completed, this is the JobHistoryServer. In this sense, the user experience is similar to that of MR1, but the information is coming from different places.

Conclusion

I hope you now have a good understanding of the differences between MR1 and MR2, as well as how to make a seamless transition to the latter. Although Cloudera Manager makes many of the necessary configurations transparent for you, for operators who prefer a manual approach, this post contains everything you need to know to migrate your cluster to YARN/MR2.

Sandy Ryza is a Software Engineer at Cloudera and a Hadoop Committer.