As described in our recent blog post, an SQL AI Assistant has been integrated into Hue with the capability to leverage the power of large language models (LLMs) for a number of SQL tasks. It can help you to create, edit, optimize, fix, and succinctly summarize queries using natural language. This is a real game-changer for data analysts on all levels and will make SQL development faster, easier, and less error-prone.

This blog post aims to help you understand what you can do to get started with generative AI assisted SQL using Hue image version 2023.0.16.0 or higher on the public cloud. Both Hive and Impala dialects are supported. Please refer to the product documentation for more information about specific releases.

Getting started with the SQL AI Assistant

Later in this blog we will walk you through the steps of how to configure your Cloudera environment to use the SQL AI Assistant with your supported LLM of choice. But first, let’s explore what the SQL AI Assistant does, and how people would use it within the SQL editor.

Using the SQL AI Assistant

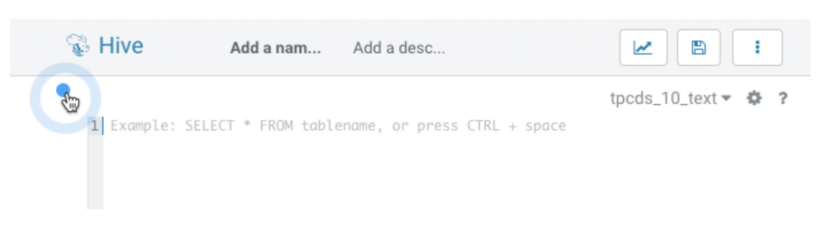

To launch the SQL AI Assistant, start the SQL editor in Hue and click the blue dot as shown in the following image. This will expand the SQL AI toolbar with buttons to generate, edit, explain, optimize and fix SQL statements. The assistant will use the same database as the editor, which in the image below is set to a DB named tpcds_10_text.

The toolbar is context aware and different actions will be enabled depending on what you are doing in the editor. When the editor is empty, the only option available is to generate new SQL from natural language.

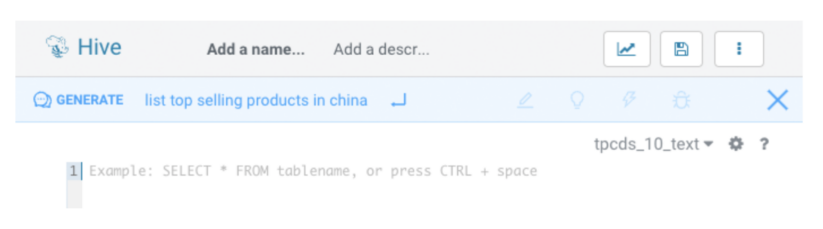

Click “generate” and type your query in natural language. In the edit field, press the down arrow to see a history of query prompts. Click “enter” to generate the SQL query.

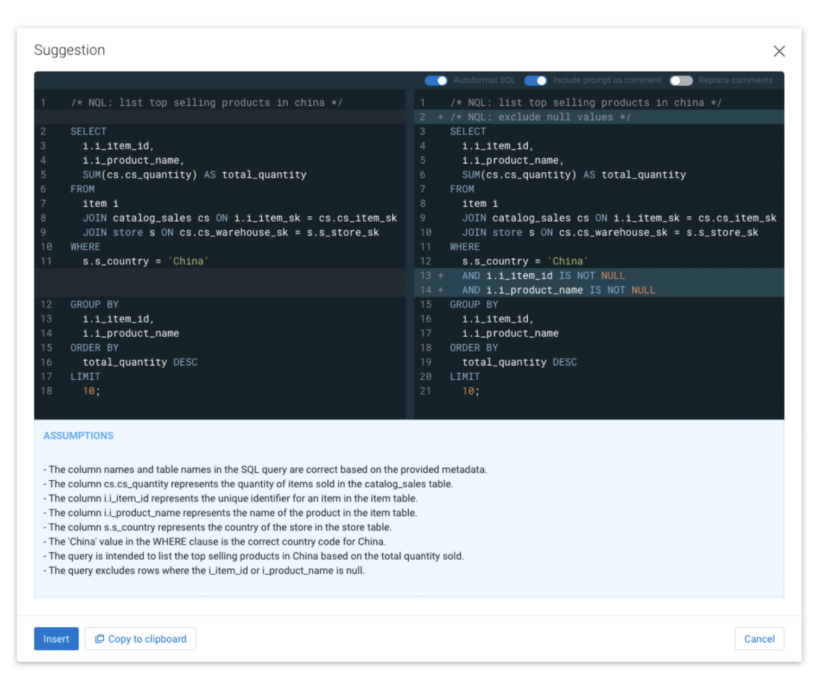

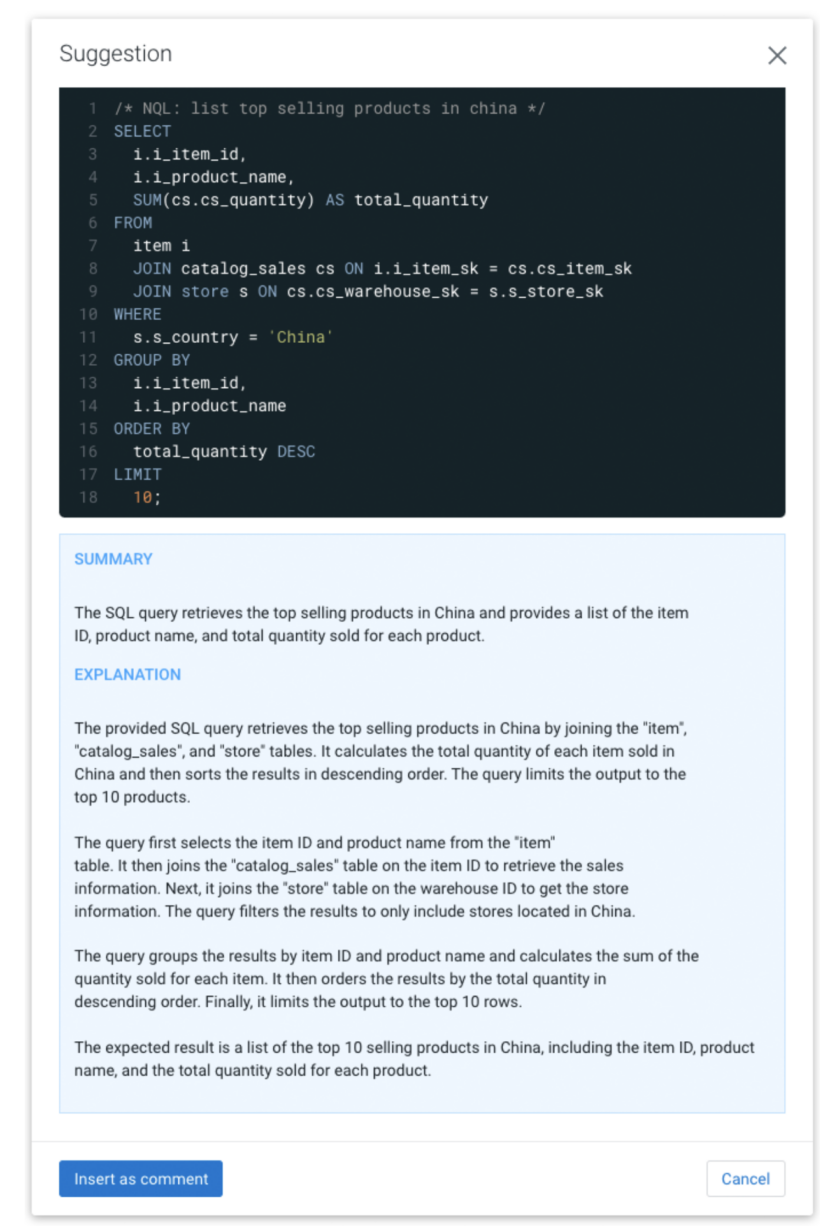

The generated SQL is presented in a modal together with the assumptions made by the LLM. This can include assumptions about the intent of the natural language used, like the definition of “top selling products,” values of needed literals, and how joins can be created. Now, you can insert the SQL directly into the editor or copy it to the clipboard.

When there is an active SQL statement in the editor the SQL AI Assistant will enable the “edit,” “explain,” and “optimize” buttons. The “fix” button will only be enabled when the editor finds an error, such as a SQL syntax error or a misspelled name.

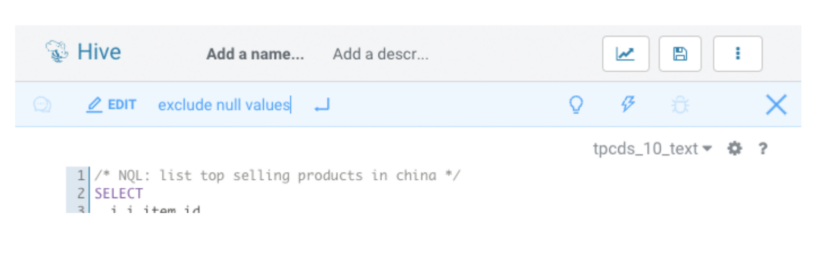

Click “edit” to modify the active SQL statement. If the statement is preceded by a NQL-comment then that prompt can be reused by pressing tab. You can also just start typing a new instruction.

After using edit, optimize, or fix, a preview shows the original query and the modified query differences. If the original query has a different formatting or keyword upper/lower case than the generated query, you can enable “Autoformat SQL” at the top of the modal for a better result.

Click “insert” to replace the original query with the modified one in the editor.

The optimize and the fix functionality do not need user input. To use them simply select a SQL statement in the editor, and click “optimize” or “fix” to generate an improved version displayed as a diff of the original query, as shown above. “Optimize” will try to improve the structure and performance without impacting the returned result of running the query. “Fix” will try to automatically fix syntactic errors and misspelling.

If you need help making sense of complex SQL then simply select the statement, and click “explain.” A summary and explanation of the SQL in natural language will appear. You can choose to insert the text as a comment above the SQL statement in the editor as shown below.

Supported AI models and services

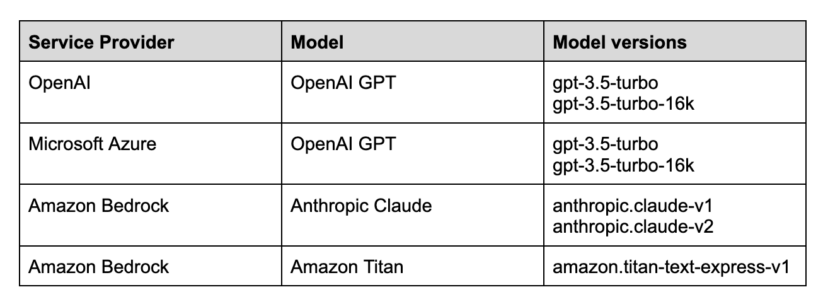

The SQL AI Assistant is not bundled with a specific LLM; instead it supports various LLMs and hosting services. The model can run locally, be hosted on CML infra or in the infrastructure of a trusted service provider. Cloudera has been testing with GPT running in both Azure and OpenAI, but the following service-model combinations are also supported:

Note: Cloudera recommends using the Hue AI assistant with the Azure OpenAI service.

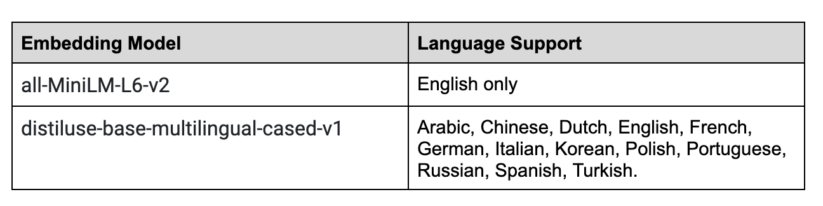

The supported AI models are pre-trained on natural language and SQL but they have no knowledge of your organization’s data. To overcome this the SQL AI Assistant uses a Retrieval Augmented Generation (RAG)-based architecture where the appropriate information is retrieved for each individual SQL task (prompt) and used to augment the request to the LLM. During the retrieval process it uses the Python SentenceTransformers framework for semantic search, which by default utilizes the all-MiniLM-L6-v2 model. The SQL AI Assistant can be configured with many pre-trained models for better multi-lingual support. Below are the models tested by Cloudera:

Data shared with the LLM models

It is important to understand that by using the SQL AI Assistant you are sending your own prompts and also significant additional information as input to the LLM. The SQL AI Assistant will only share data that the currently logged-in user is allowed to access, but it is of utmost importance that you use a service that you can trust with your data. The RAG-based architecture reduces the number of tables sent per request to a short list of the most likely needed, but there is currently no way to explicitly exclude certain tables; consequently, info about all tables that the logged-in user can access in the database could be shared. The list below details exactly what is shared:

- Everything that a user inputs in the SQL AI Assistant

- The selected SQL statement (if any) in the Hue editor

- SQL dialect in use (Hive, Impala for example)

- Table details such as table name, column names, column data types and related keys, partitions and constraints

- Three sample rows from the tables (following the best practices specified in Rajkumar et al, 2022)

Prerequisites for enabling the AI assistant

The administrator must obtain clearance from your organization’s infosec team to make sure it is safe to use the SQL AI Assistant because some of the table metadata and data, as mentioned in the previous section, is shared with the LLM.

Configuring the SQL AI Assistant

Getting started with the SQL AI Assistant is a straightforward process. First arrange access to one of the supported services and then add the service details in Hue’s configuration.

Using Microsoft Azure OpenAI service

Microsoft Azure provides the option to have dedicated deployments of OpenAI GPT models. Azure’s OpenAI service is much more secure than the publicly hosted OpenAI APIs because the data can be processed in your virtual private cloud (VPC). Considering the added security, Azure’s OpenAI is the recommended service to use for GPT models in the SQL AI Assistant. For more information, see the Azure OpenAI quick start guide.

Step 1. Azure subscription

First, get Azure access. Contact your IT department to get an Azure subscription. Subscriptions could be different based on your team and purpose. For more information, see subscription considerations.

2. Azure Open AI access

Currently, access to this service is granted only by application. You can apply for access to Azure OpenAI by completing the form at https://aka.ms/oai/access. Once approved, you should receive a welcome email.

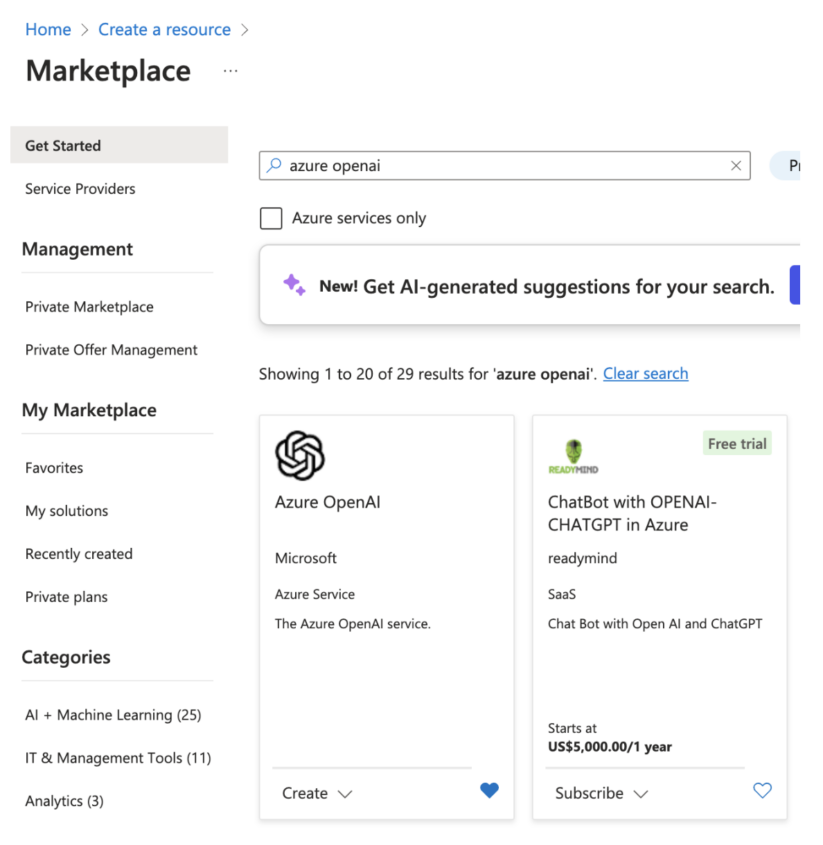

3. Create resource

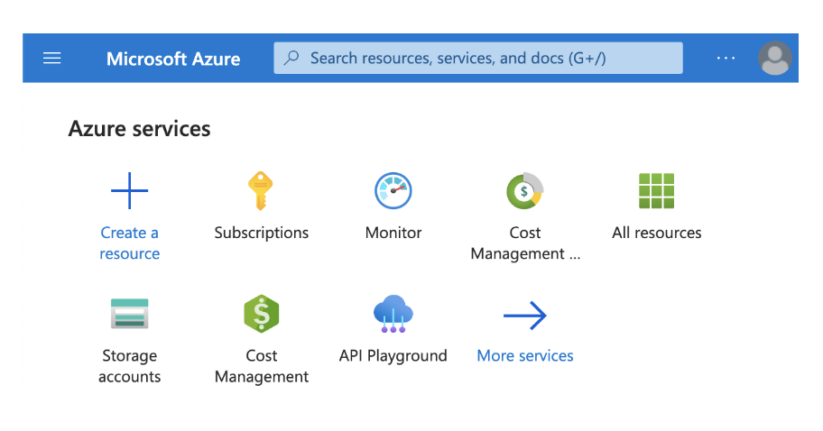

In the Azure portal, create your Azure OpenAI resource: https://portal.azure.com/#home.

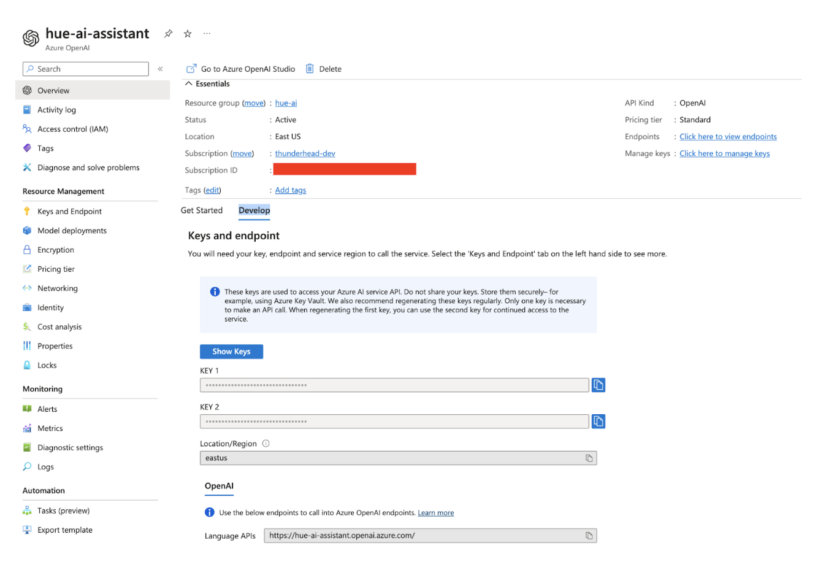

In the resource details page, under “Develop”, you can get your resource URL and keys. You just need any one of the two provided keys.

4. Deploy GPT

Go to Azure OpenAI Studio at https://oai.azure.com/portal and create your deployment under management > Deployments. Select gpt-35-turbo-16k or higher.

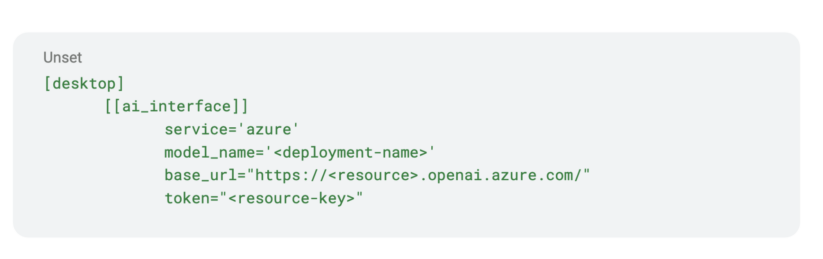

5. Configure SQL AI Assistant in Hue

Now that the service is up and running with your model, the last step is to enable and configure the SQL AI assistant in Hue.

- Log in to the Cloudera Data Warehouse service as DWAdmin.

- Go to the virtual warehouse tab, locate the Virtual Warehouse on which you want to enable this feature, and click “edit.”

- Go to “configurations” > Hue and select “hue-safety-valve” from the configuration files drop-down menu.

Edit the text under the desktop section by adding a subsection called ai_interface. Populate it as shown below by replacing the angle bracket values with those from your own service:

Using OpenAI service

1. Open AI platform sign up

Request access to the Open AI platform from your IT department or go to https://platform.openai.com/ and create an account if allowed by your company’s policies.

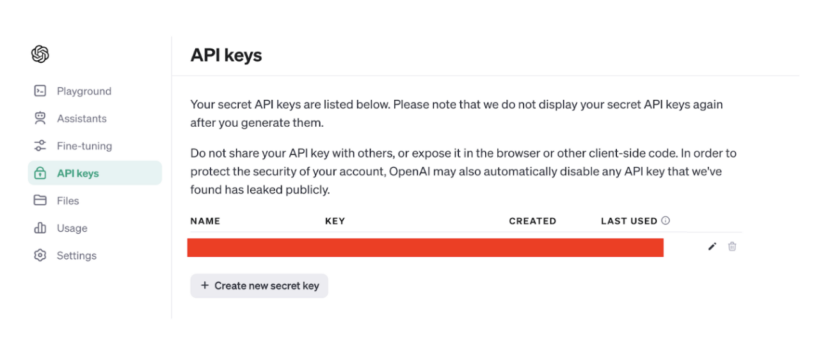

2. Get the API key

In the left menu bar, navigate to AI keys. You should be able to view existing keys or create new ones. The API key is the only thing you need to integrate with the SQL AI Assistant.

3. Configure SQL AI Assistant in Hue

Finally, enable and configure the SQL AI assistant in Hue.

- Log in to the data warehouse service as DWAdmin.

- Go to the virtual warehouse tab, locate the Virtual Warehouse on which you want to enable this feature, and click “edit.”

- Go to “configurations” > Hue and select “hue-safety-valve” from the configuration files drop-down menu.

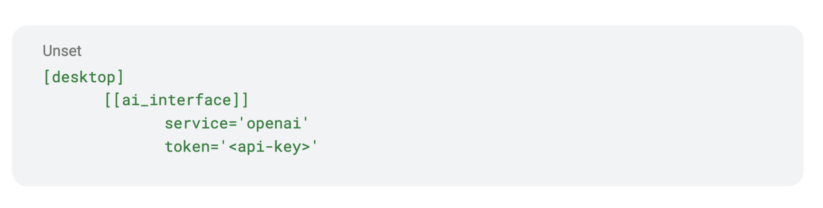

- Edit the text under the desktop section by adding a subsection called ai_interface. Only two key value pairs are needed as shown below. Replace the <api-key> value with the API key from Open AI.

Amazon Bedrock Service

Amazon Bedrock is a fully managed service that makes foundation models from leading AI startups and Amazon available via an API. You must have an AWS account with Bedrock access before following these steps.

- Get your access key and secret

Get the access key ID and the secret access key for using Bedrock-hosted models in Hue Assistant:

- Go to IAM console: https://console.aws.amazon.com/iam

- Click “users” in the left menu

- Find the user who needs access

- Click “security credentials”

- Go to the “access keys” section and find your keys there.

2. Get Anthropic Claude access

Claude from Anthropic is one of the best models available in Bedrock for SQL-related tasks. More details are available at https://aws.amazon.com/bedrock/claude/. Once you have access, you will be able to try Claude in the text playground under the Amazon Bedrock service.

3. Configure SQL AI Assistant in Hue

Finally, enable and configure the SQL AI assistant in Hue.

- Log in to the data warehouse service as DWAdmin.

- Go to the virtual warehouse tab, locate the virtual warehouse on which you want to enable this feature, and click “edit.”

- Go to “configurations: > Hue and select “hue-safety-valve” from the configuration files drop-down menu.

- Edit the text to make sure the following sections, subsections and key value pairs are set. Replace the <access_key> and the <secret_key> with the values from your AWS account.

SQL AI Assistant configurations

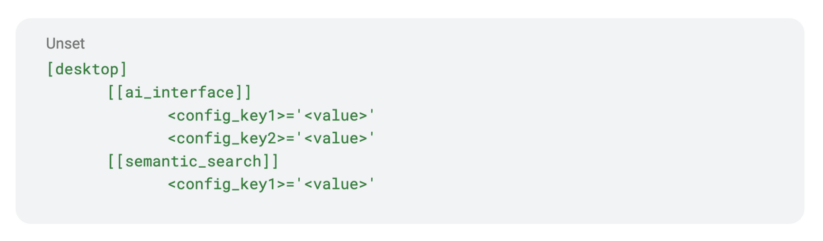

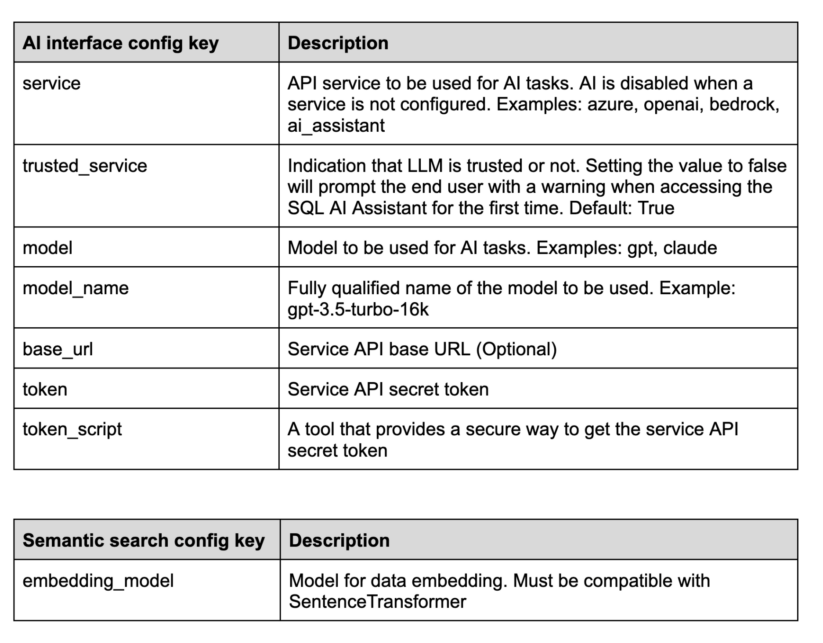

Service- and model-related configurations are under ai_interface, and semantic search related configurations used for RAG are under the semantic_search section.

Limitations and known issues

The configurable LLMs are very good at generating and modifying SQL. The RAG architecture provides the proper context. But there is no guarantee suggestions from LLMs, or from human experts, are always accurate. Please be aware of the following:

- Non-deterministic: LLMs are non-deterministic. You cannot guarantee the exact same output for the same input every time, and different responses for very similar queries can occur.

- Ambiguity: LLMs may struggle to handle ambiguous queries or contexts. SQL queries often rely on specific and unambiguous language, but LLMs can misinterpret or generate ambiguous SQL queries, leading to incorrect results.

- Hallucination: In the context of LLMs, hallucination refers to a phenomenon where these models generate responses that are incorrect, nonsensical, or fabricated. Occasionally you might see incorrect identifiers or literals, or even table and column names, if the provided context is incomplete or user input simply doesn’t match any data.

- Partial context: The RAG architecture provides context to each request but it has limitations and there is no guarantee the context sent to the LLM will always be complete.

The SQL AI Assistant is now available in tech preview on Cloudera Data Warehouse on Public Cloud. We encourage you to try it out and experience the benefits it can provide when it comes to working with SQL. Additionally, check out the overview blog on SQL AI Assistant to learn how it can help data and business analysts in your organization speed up data analytics. Check out the SQL AI Assistant documentation Reach out to your Cloudera team for more details.