Editor’s Note, August 2020: CDP Data Center is now called CDP Private Cloud Base. You can learn more about it here.

Following the launch of Cloudera Data Platform (CDP), we are excited to introduce some of our key streaming products on the same platform. Apache NiFi and Apache Kafka will be added to CDP Data Hub shortly. We are introducing these capabilities first in the public cloud through CDP Data Hub, before adding support across CDP’s broader deployment spectrum, including CDP Data Center and CDP Private Cloud.

This blog post is focused on public cloud deployments of Apache NiFi and Kafka through CDP Data Hub. Let’s get started with a quick review of what CDP Data Hub is.

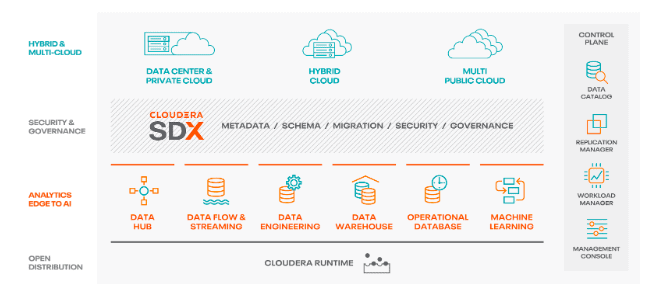

Cloudera Data Hub is a powerful cloud service on Cloudera Data Platform (CDP) that makes it easier, safer, and faster to build modern, mission-critical, data-driven applications with enterprise security, governance, scale, and control. The cloud-native service is powered by a suite of integrated open source technologies that delivers the widest range of analytical workloads such as data flow & messaging, data marts and data engineering.

If you want to learn more about CDP Data Hub, please visit our Release Blog Post and watch the corresponding webinar.

Use Cases for NiFi and Kafka in CDP Data Hub

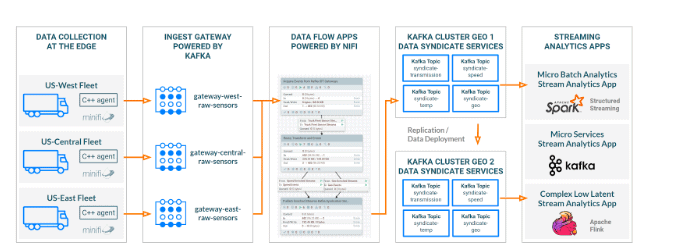

NiFi and Kafka are at the center of many modern data streaming architectures: NiFi orchestrates data movement across the enterprise, while Kafka serves as a distributed messaging system that makes it easy for new applications to tap into a continuous data stream by subscribing to topics of interest. Historically, our customers have successfully implemented streaming architectures on-premises and in public clouds by writing custom automation around the distribution that Cloudera provides.

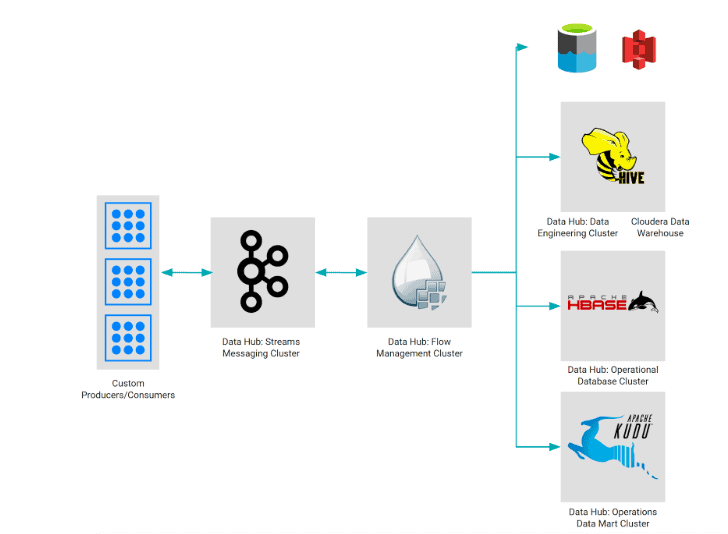

Cloudera’s Streaming Reference Architecture

With the upcoming availability of Kafka and NiFi in CDP Data Hub, customers will have an easy way of extending their streaming architectures into the public cloud, creating true hybrid deployments and unlocking new use cases such as:

1. Hybrid NiFi and Kafka Deployments

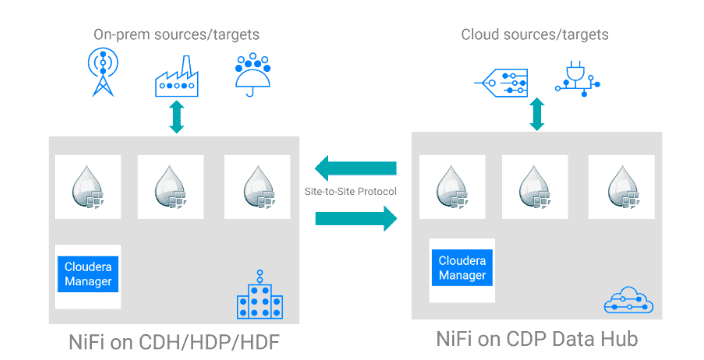

With more data being generated in the public cloud, there is increasing demand for hybrid NiFi and Kafka deployments that span on-premises data centers and the public cloud.

While you could run a single on-premises NiFi cluster whose data flows integrate with cloud sources and cloud targets, setting up dedicated NiFi clusters both on-premises and in the public cloud lets you merge data streams that originate in different environments, some of them in the cloud. NiFi’s Site-to-Site protocol efficiently moves data between the on-premises and public cloud deployments.

A hybrid NiFi architecture leveraging the Site-to-Site protocol for inter-cluster data movement

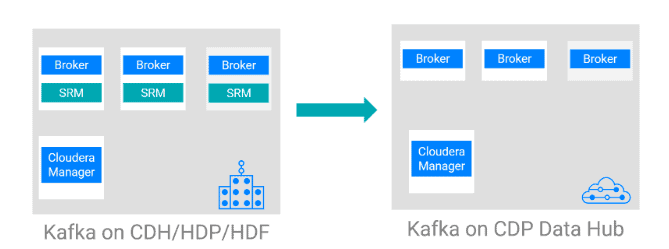

A similar architecture can be implemented for Kafka, using Cloudera’s Streams Replication Manager (SRM) to set up cross-cluster Kafka replication. Organizations running their primary Kafka clusters on-premises can use this architecture to set up a dedicated cluster in the cloud for disaster recovery. The same architecture also supports active/active deployments, where SRM replicates selected topics across environments. Replication can be bi-directional, so you can have primary topics in the cloud that are replicated to your on-premises cluster as well as primary topics on-premises that are replicated to the cloud cluster. Running a Cloudera-supported Kafka distribution both on-premises and in CDP Data Hub ensures consistent tooling and support, minimizing integration risks.

A hybrid Kafka architecture leveraging Cloudera Streams Replication Manager for cross-cluster replication
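Bi-directional replication raises an obvious question: why doesn’t a replicated topic bounce back and forth between the two clusters forever? SRM is built on Apache Kafka’s MirrorMaker 2, whose default replication policy renames a replicated topic with the source cluster’s alias as a prefix (for example, `orders` from a cluster aliased `onprem` appears as `onprem.orders` on the target) and skips any topic whose prefix chain already contains the target’s alias. The following is a minimal Python sketch of that naming and cycle-check logic, modeled after MirrorMaker 2’s behavior rather than taken from SRM itself. The aliases `onprem` and `cloud`, the topic names, and the `pattern` allow-list parameter are hypothetical, and no Kafka or SRM API is called.

```python
import re
from typing import Dict, Set

# Assumption: MirrorMaker 2's default separator ("replication.policy.separator").
SEPARATOR = "."


def remote_topic(source_alias: str, topic: str) -> str:
    """Name a topic receives on the target cluster: '<source alias>.<topic>'."""
    return f"{source_alias}{SEPARATOR}{topic}"


def is_cycle(topic: str, target_alias: str) -> bool:
    """True if the topic's alias prefixes show it already came from target_alias."""
    prefix, sep, rest = topic.partition(SEPARATOR)
    if not sep:
        return False  # no alias prefix: a local (primary) topic
    if prefix == target_alias:
        return True  # replicating it would send the data back to where it came from
    return is_cycle(rest, target_alias)  # check further upstream hops


def replicate(clusters: Dict[str, Set[str]], source: str, target: str,
              pattern: str = ".*") -> None:
    """One replication pass from source to target (hypothetical topic allow-list regex)."""
    for topic in sorted(clusters[source]):
        if re.fullmatch(pattern, topic) and not is_cycle(topic, target):
            clusters[target].add(remote_topic(source, topic))


if __name__ == "__main__":
    # Hypothetical setup: "orders" is primary on-premises, "clicks" is primary in the cloud.
    clusters = {"onprem": {"orders"}, "cloud": {"clicks"}}
    for _ in range(3):  # repeated passes show the topic sets stop growing
        replicate(clusters, "onprem", "cloud")
        replicate(clusters, "cloud", "onprem")
    print(sorted(clusters["onprem"]))  # ['cloud.clicks', 'orders']
    print(sorted(clusters["cloud"]))   # ['clicks', 'onprem.orders']
```

Running the passes repeatedly leaves each cluster with its own primary topic plus one prefixed copy of the other cluster’s topic, which is the steady state the cycle check guarantees. In a real deployment, which topics replicate in which direction is controlled through SRM’s own configuration rather than a regex argument like the one used here.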

2. Migrating to the public cloud

While Kafka clusters running on CDP Data Hub can serve as migration targets for your on-premises Kafka clusters, the hybrid NiFi architecture introduced earlier not only helps you move your NiFi environments to the public cloud but also helps you migrate any data set that your new cloud applications might require.

3. Building a cloud-native data movement backbone

NiFi in CDP Data Hub lets you build data flows that orchestrate data movement across cloud-native services and the custom applications your teams have built, serving as your new cloud data movement backbone. This backbone is also well suited to managing data ingest into other CDP services such as Cloudera Data Warehouse, or into other Data Hub clusters such as Operational Database or Operations Data Mart.

Using NiFi and Kafka in CDP Data Hub as a data movement backbone to ingest data into other CDP services

4. Building cloud-native streaming applications

NiFi and Kafka deployments in CDP Data Hub can serve as the building blocks for cloud-native streaming applications in the public cloud that require data movement and messaging capabilities with strong streaming semantics and data governance.

Benefits of running NiFi and Kafka in CDP Data Hub

While you can deploy NiFi and Kafka manually in the public cloud, and various Kafka-based cloud services are available, running NiFi and Kafka in CDP Data Hub offers several benefits over these alternatives:

1. CDP Data Hub comes with a managed Control Plane that orchestrates and monitors cluster deployments. Because you are not responsible for running a cluster provisioning and monitoring service, your teams can focus on building streaming applications rather than managing infrastructure.

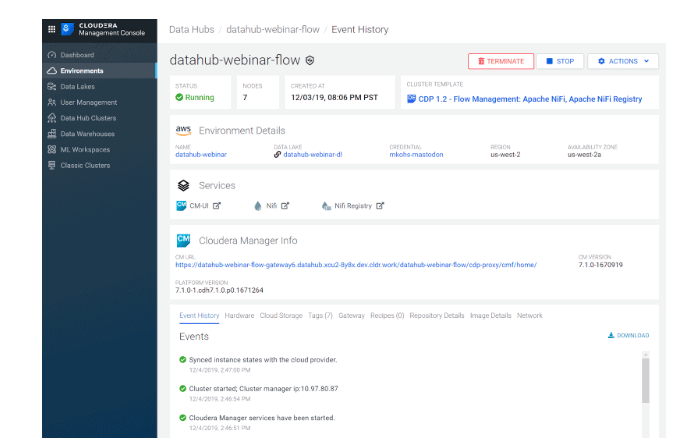

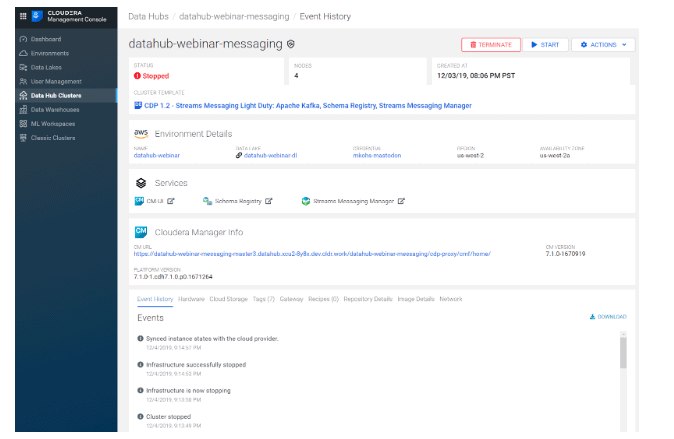

2. Easy deployment through predefined Cluster Definitions that have Cloudera’s best practices for NiFi and Kafka cloud deployments baked in, so you don’t have to worry about selecting the right instance types and storage classes. Cloudera provides pre-baked images with all components installed to minimize deployment time. Furthermore, the Cluster Definitions not only deploy the core NiFi and Kafka services but also set up additional components like NiFi Registry, Cloudera Schema Registry, and Cloudera Streams Messaging Manager. All UIs can be reached from the cluster overview page and are fully integrated into CDP’s Single Sign-On system, so end users only need to log in once.

An Apache NiFi cluster running in CDP Data Hub, exposing links to the NiFi and NiFi Registry UIs

3. Fully secure clusters out of the box by integrating with Cloudera SDX. All NiFi and Kafka clusters deployed through CDP Data Hub are configured with Kerberos authentication and TLS wire encryption. The KDC is managed by Cloudera as part of SDX so you don’t have to worry about creating or managing Kerberos principals.

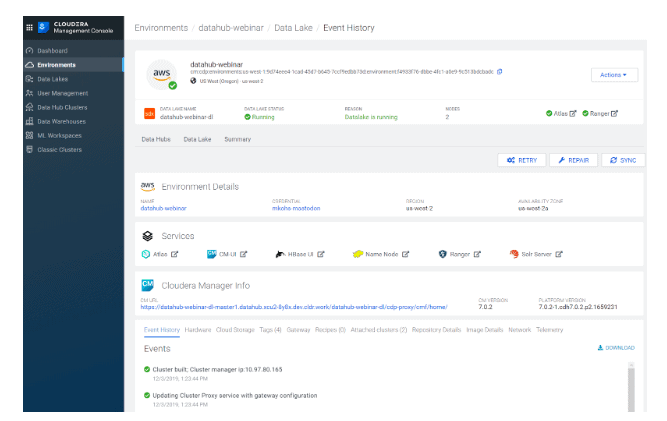

The central Data Lake cluster hosts SDX services such as Atlas and Ranger and is automatically connected to the Kafka and NiFi clusters.

4. Protection against infrastructure failures such as VM failures. CDP Data Hub detects unhealthy cluster nodes and replaces them with new instances, keeping your clusters healthy. The repair process is fully application-aware and preserves information such as the broker ID of each Kafka broker and the content of NiFi repositories, so the services stay healthy during and after the repair.

5. Pay only for what you use, with consumption-based hourly pricing that depends on the instance types you choose. You can stop and start clusters as needed to optimize your cloud bill.

A fully secure Kafka cluster exposing the Schema Registry and Streams Messaging Manager UIs through SSO. This cluster has been stopped to save cloud costs.

6. Full integration into Cloudera SDX for consistent data governance across the entire CDP platform.

SDX Integration for consistent data governance and security

Providing a holistic experience for setting security policies and managing data governance across an end-to-end streaming application is a challenge for enterprise customers. The tight integration of NiFi and Kafka with SDX allows you to:

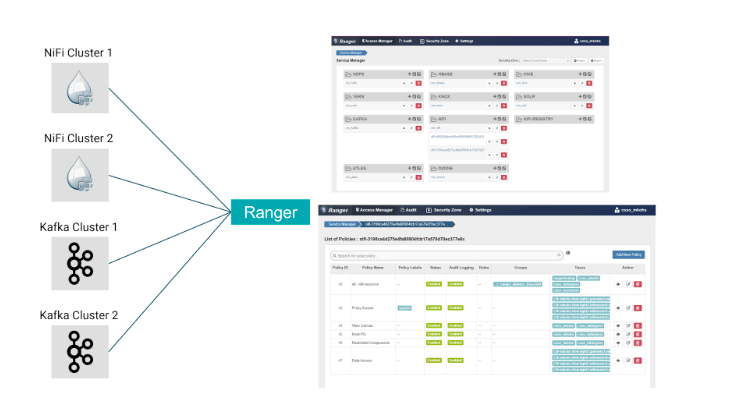

- Centrally manage access policies in Apache Ranger for Kafka topics, NiFi process groups, and other CDP objects like Hive tables. In CDP Data Hub, your Kafka and NiFi clusters are automatically integrated with a central Ranger instance, allowing you to manage access policies in one place. Within the central Ranger instance, you can define access policies per cluster. For NiFi, this means you can control user access at a granular level; for Kafka, you can set different access policies for topics that share the same name but live on different clusters.

Kafka and NiFi clusters are connected to a central Ranger instance

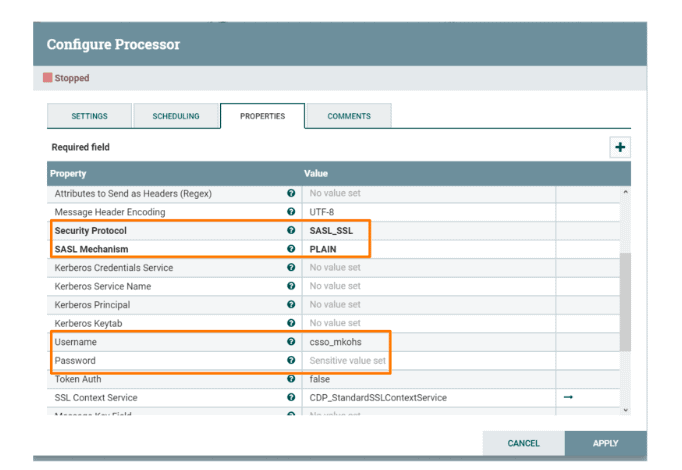

- Use your CDP identity to authenticate to Kafka. CDP allows you to connect your own Identity Provider to import existing users and groups into CDP’s user management system. Every Kafka cluster created in CDP Data Hub is configured to authenticate against CDP’s central user management system, exposing a SASL/PLAIN endpoint to producers and consumers. You can now use your CDP username and password to authenticate against Kafka brokers. No more dealing with Kerberos principals or keytabs!

An example showing how NiFi leverages the new SASL/PLAIN authentication using the CDP user “csso_mkohs” and the corresponding password

- Automatically report NiFi metadata and lineage to Atlas running in the SDX Data Lake cluster, enabling end-to-end data lineage from where your data sets originate all the way to where they are consumed.
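Because Data Hub Kafka clusters use TLS wire encryption, a client using the SASL/PLAIN endpoint described above connects with the `SASL_SSL` security protocol and the `PLAIN` mechanism. The following Python sketch assembles the standard Apache Kafka client properties for that combination, including the `sasl.jaas.config` entry that carries the CDP username and password. The broker address, port, truststore path, and credentials are hypothetical placeholders (use your cluster’s actual broker endpoints), and the helper names `kafka_sasl_plain_config` and `to_properties` are illustrative, not part of any Cloudera tool. To stay simple, it rejects credentials containing quotes, backslashes, or newlines rather than escaping them.

```python
from typing import Dict, Optional


def kafka_sasl_plain_config(
    bootstrap_servers: str,
    username: str,
    password: str,
    truststore_path: str,
    truststore_password: Optional[str] = None,
) -> Dict[str, str]:
    """Kafka client properties for a SASL/PLAIN listener protected by TLS (hypothetical helper)."""
    # Simplification: refuse characters that would need escaping in both the
    # JAAS string and the .properties file format.
    for value in (username, password):
        if any(ch in value for ch in '"\\\n'):
            raise ValueError("credentials with quotes, backslashes or newlines need extra escaping")
    jaas = (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        f'username="{username}" password="{password}";'
    )
    config = {
        "bootstrap.servers": bootstrap_servers,
        "security.protocol": "SASL_SSL",  # SASL authentication over a TLS-encrypted connection
        "sasl.mechanism": "PLAIN",        # the broker checks the username/password pair
        "sasl.jaas.config": jaas,
        "ssl.truststore.location": truststore_path,  # must trust the brokers' TLS certificates
    }
    if truststore_password is not None:
        config["ssl.truststore.password"] = truststore_password
    return config


def to_properties(config: Dict[str, str]) -> str:
    """Render the settings as a simple key=value client.properties file."""
    return "\n".join(f"{key}={value}" for key, value in config.items()) + "\n"


if __name__ == "__main__":
    # Hypothetical values; substitute your cluster's brokers and your CDP credentials.
    cfg = kafka_sasl_plain_config(
        "broker-1.example.com:9093",
        "jdoe",
        "example-password",
        "/path/to/truststore.jks",
    )
    print(to_properties(cfg))
```

The rendered text can be saved as a `client.properties` file and passed to Kafka’s console tools through `--producer.config` or `--consumer.config`; other client libraries expose equivalent settings under their own names. In practice, load the password from a secret store instead of embedding it in code.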

Summary

Kafka and NiFi’s availability in CDP Data Hub allows organizations to build the foundation for their Data Movement and Stream Processing use cases in the cloud. CDP Data Hub provides a cloud-native service experience built to meet the security and governance needs of large enterprises.

If you missed the NiFi and Kafka on Cloudera Data Hub webinar and demo, watch the replay here now.