Cloudera Data Platform (CDP) has supported access controls on tables and columns, as well as on files and directories, via Apache Ranger since its first release. It is common to have different workloads using the same data: some require authorization at the table level (Apache Hive queries) and others at the level of the underlying files (Apache Spark jobs). Unfortunately, in such cases you would have to create and maintain separate, corresponding Ranger policies for both Hive and HDFS.

As a result, whenever a change is made to a Hive table policy, the data admin must make a consistent change in the corresponding HDFS policy. Failure to do so could result in security and/or data exposure issues. Ideally, the data admin would set a single table policy, and the corresponding file access policies would automatically be kept in sync, with access audits referring to the table policy that enforced them.

In this blog post I will introduce a new feature that provides this behavior: the Ranger Resource Mapping Service (RMS). RMS was included in CDP Private Cloud Base 7.1.4 as a tech preview and became GA in CDP Private Cloud Base 7.1.5.

What is Ranger RMS?

In a nutshell, Ranger RMS enables automatic translation of access policies from Hive to HDFS, reducing the operational burden of policy management. In simple terms, this means that any user with access permissions on a Hive table automatically receives similar HDFS file level access permissions on the table’s data files. So, Ranger RMS allows you to authorize access to HDFS directories and files using policies defined for Hive tables. Any access authorization materialized through Ranger RMS is fully audited and would be present in Ranger Audit logs.

How does it help?

The functionality provided by Ranger RMS is especially useful when external table data is used by non-Hive workloads such as Spark. To understand how this feature benefits users, let us consider those who are currently on different versions of the Cloudera product stack.

- CDH – In the CDH stack, Apache Sentry managed authorizations for Hive/Impala tables. Sentry has a feature called HDFS ACL Sync, which provides similar functionality. Sentry uses HDFS ACLs to give users access to the HDFS files of Hive tables. The implementation of HDFS ACL Sync in Sentry is very different from how Ranger RMS handles the automatic translation of access policies from Hive to HDFS, but the underlying concept and the results are the same for table-level access.

- HDP – In the HDP stack, if direct HDFS access is required on Hive table locations, storage access policies need to be created manually. This can be done either through HDFS policies in Ranger or by setting POSIX permissions or HDFS ACLs on the files and directories. Essentially, two different policies (Hive and HDFS) were managed and manually kept in sync for all such tables.

- CDP (prior to CDP Private Cloud Base 7.1.4) – Direct HDFS access to Hive table locations was handled in CDP using manually created storage access policies, either through HDFS policies or through other options like POSIX permissions or HDFS ACLs. Again, two different policies always had to be created and kept in sync for all such tables.

With the introduction of Ranger RMS in CDP Private Cloud Base 7.1.4, Ranger provides functionality equivalent to Sentry's HDFS ACL Sync in CDH. Users upgrading or migrating from CDH to the latest version of CDP need not worry about losing this important capability. Additionally, for HDP users upgrading or migrating to the latest version of CDP, Ranger RMS removes the need for separately managed storage policies on Hive table locations. This means many manually implemented Ranger HDFS policies, Hadoop ACLs, or POSIX permissions created solely for this purpose can now be removed, if desired. This eases the operational maintenance of policies and reduces the chance of mistakes during the manual steps performed by a data steward or admin.

What does it do?

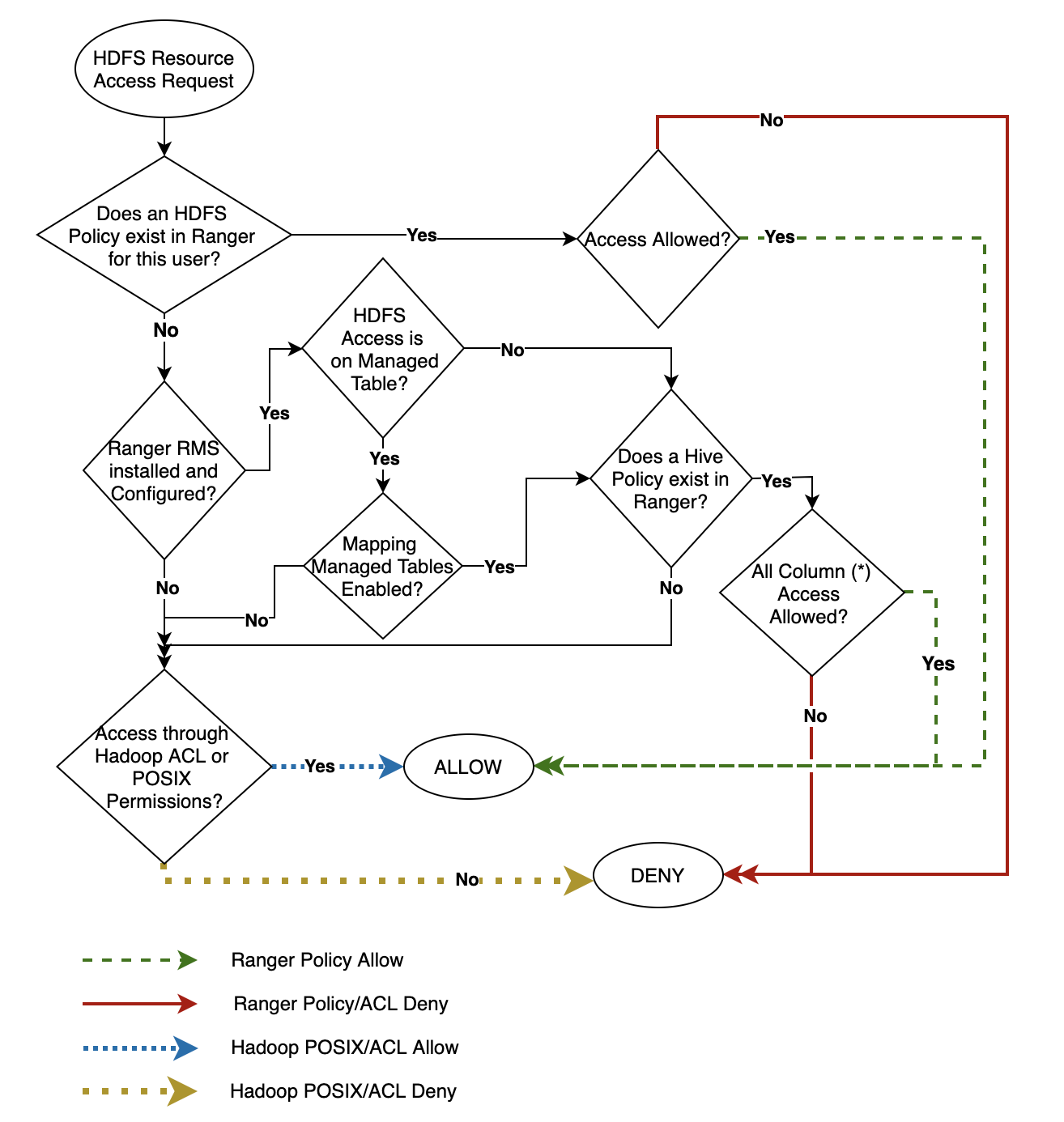

As suggested, Ranger RMS internally translates the Hive policies into HDFS access rules and allows the HDFS NameNode to enforce them. Though this seems direct and simple, the automatic translation considers several factors before granting any user HDFS file-level access. Ranger RMS does not create explicit HDFS policies in Ranger, nor does it change the HDFS ACLs presented to users (as Apache Sentry does for the ‘hdfs dfs -ls’ command). Instead, it generates a mapping that allows the Ranger plugin in HDFS to make run-time decisions based on the Hadoop SQL grants.

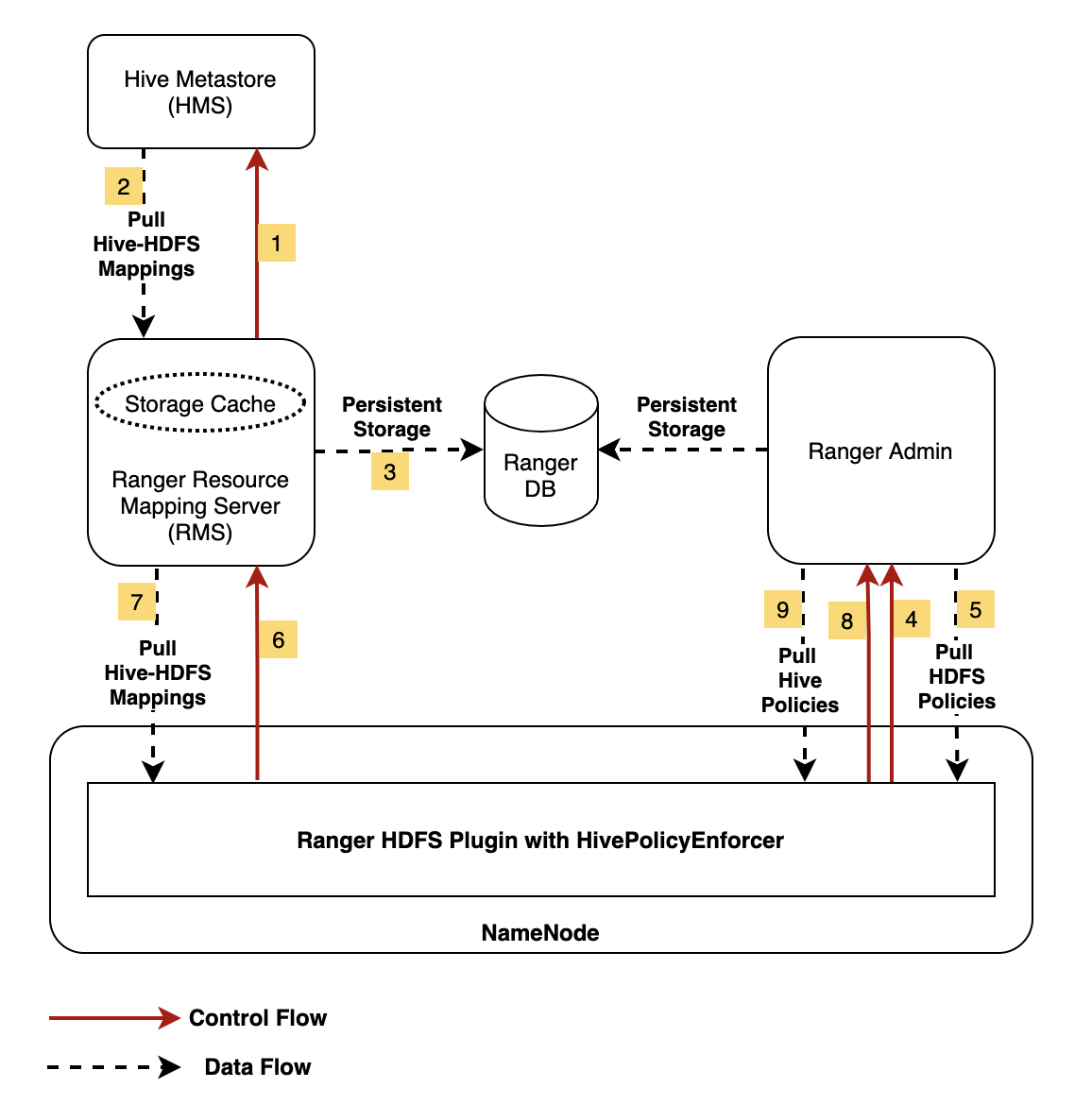

The picture below shows the interactions between NameNode, Ranger Admin, Hive Metastore and Ranger RMS.

Each time Ranger RMS starts, it connects to the Hive Metastore (HMS) and performs a sync that generates a resource mapping file linking Hive resources to their storage locations on HDFS. This mapping file is stored locally in a storage cache within RMS. It is also persisted in RMS-specific tables in the backend database used by the Ranger service. After startup, Ranger RMS periodically updates this map, querying HMS every 30 seconds for new mapping updates via Hive notifications. This polling frequency is configurable using the “ranger-rms.polling.notifications.frequency.ms” setting.

If no sync checkpoint exists, or if HMS does not recognize it, a full sync is done. For example, clearing the Ranger RMS mapping in the backend database may be required when specific configuration changes are made. Such an action clears the sync checkpoint and causes a full resync the next time RMS is restarted. The synchronization process queries HMS to gather Hive object metadata, such as database and table names, and maps it to the underlying file paths in HDFS.
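The choice between a full sync and an incremental update can be illustrated with a small sketch. This is not RMS code: `MappingCache`, `sync`, the event tuple shape, and the `checkpoint_recognized` flag are all hypothetical names invented for illustration, and the Hive Metastore is simulated with plain Python data.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Optional, Tuple

# Hypothetical stand-in for an HMS notification:
# (event_id, "db.table", HDFS location, or None if the table was dropped)
Event = Tuple[int, str, Optional[str]]


@dataclass
class MappingCache:
    """Toy model of the RMS mapping plus its sync checkpoint."""
    checkpoint: Optional[int] = None  # last event id processed, None if cleared
    table_to_path: Dict[str, str] = field(default_factory=dict)


def sync(cache: MappingCache,
         hms_tables: Dict[str, str],
         hms_events: Iterable[Event],
         checkpoint_recognized: bool = True) -> str:
    """Run one sync pass and report whether it was 'full' or 'delta'."""
    events = sorted(hms_events, key=lambda e: e[0])

    # No checkpoint, or one HMS does not recognize: rebuild everything.
    if cache.checkpoint is None or not checkpoint_recognized:
        cache.table_to_path = dict(hms_tables)
        cache.checkpoint = events[-1][0] if events else 0
        return "full"

    # Otherwise apply only the notifications newer than the checkpoint.
    for event_id, table, location in events:
        if event_id <= cache.checkpoint:
            continue
        if location is None:
            cache.table_to_path.pop(table, None)
        else:
            cache.table_to_path[table] = location
        cache.checkpoint = event_id
    return "delta"
```

In this model, clearing the mapping corresponds to resetting `checkpoint` to `None`, which forces the next call to rebuild the whole map from `hms_tables`.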

On the HDFS side, the Ranger HDFS plugin running in the NameNode now has an additional HivePolicyEnforcer module. In addition to downloading the HDFS policies from Ranger Admin, this enhanced HDFS plugin also downloads Hive policies from Ranger Admin, along with the mapping file from Ranger RMS. HDFS access is then determined by both HDFS policies and Hive policies.

When access to HDFS files is evaluated, any HDFS policies from Ranger are applied first. The plugin then checks whether the HDFS resource has an entry in the resource mapping file provided by RMS. If it does, the corresponding Hive resource is computed from the mapping and the Hive policies present in Ranger are applied. Finally, depending on the various configurations, the composite evaluation result is computed.
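The order of these steps can be sketched as follows. This is a simplified illustration, not the plugin's implementation: the function names, the callback signatures, and the "first decisive result wins" rule are assumptions made for the example, and the real composite result also depends on configuration that is not modeled here.

```python
from typing import Callable, Dict, Optional

# True = allow, False = deny, None = no policy made a decision
Decision = Optional[bool]


def lookup_hive_resource(path: str, mapping: Dict[str, str]) -> Optional[str]:
    """Return the table whose location is the longest path prefix of `path`."""
    best_location, best_table = "", None
    for location, table in mapping.items():
        loc = location.rstrip("/")
        inside = path == loc or path.startswith(loc + "/")
        if inside and len(loc) > len(best_location):
            best_location, best_table = loc, table
    return best_table


def evaluate(user: str,
             path: str,
             hdfs_policy: Callable[[str, str], Decision],
             hive_policy: Callable[[str, str], Decision],
             mapping: Dict[str, str]) -> Decision:
    """Hypothetical evaluation order: HDFS policies, then mapped Hive policies."""
    # Step 1: HDFS policies are applied first and take priority.
    decision = hdfs_policy(user, path)
    if decision is not None:
        return decision

    # Step 2: is the path covered by the RMS resource mapping?
    table = lookup_hive_resource(path, mapping)
    if table is None:
        return None  # not decided here; other checks are outside this sketch

    # Step 3: apply the Hive policies for the mapped table.
    return hive_policy(user, table)
```

For example, with a mapping of `{"/warehouse/external/sales/orders": "sales.orders"}`, a request for `/warehouse/external/sales/orders/part-0` is resolved to the table `sales.orders` and decided by the Hive policy callback, unless an HDFS policy has already allowed or denied it.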

Policy Evaluation Flow with Ranger RMS

The following flow diagram depicts the policy evaluation process when Ranger RMS is involved. It is important to note that even if you have Ranger RMS enabled, manually created HDFS policies will have priority and can override RMS policy behavior.

What about Managed Tables? Can it help?

There are two types of Hive tables in CDP – Managed Tables and External Tables. A detailed explanation of these different types can be found in the official documentation for Hive. While the primary use case for Ranger RMS is to simplify External table policy management, there are specific conditions where you may need to enable this on Managed tables.

Spark Direct Reader mode in Hive Warehouse Connector can read Hive transactional tables directly from the filesystem. This is one special use case where enabling RMS for Hive Managed Tables can be beneficial. The user launching such a Spark application must have read and execute permissions on the Hive warehouse location. If your environment has many applications that use Spark Direct Reader to consume Hive transactional table data, you can consider enabling Ranger RMS for Hive Managed Tables. Apart from this use case, the recommendation is not to open up the Hive warehouse location for Managed Tables through RMS, for security reasons. Please review your use cases for Hive Managed Tables carefully before enabling Ranger RMS for them. This feature may not be desirable in the many scenarios where the Hive Managed Tables location should be locked down completely.

The most important point for Ranger RMS on a Managed Table is the location. It should be noted that the location for such Managed Tables can only be the managed space of the database in which they are created. If a location is not defined at the database level, then it defaults to the value of “hive.metastore.warehouse.dir”.

Ranger RMS does provide an option to map managed tables as specified below.

- RMS currently has a checkbox configuration to “Enable Mapping Hive Managed Tables”

- This configuration, if enabled, provides the HDFS access to managed tables’ files based on the policies in Ranger Hadoop SQL.

- This configuration is expected to be enabled only during the initial installation and configuration of Ranger RMS.

If this configuration is not enabled in Ranger RMS, then the default Hive managed space would be locked down to the user and group for “hive”. In such a case, users belonging to any other groups would not have any access to the default hive managed location in HDFS.

Though Ranger RMS provides an option to map Hive Managed Tables, it needs to be understood that enabling this feature for Managed Tables provides users who have SQL level access on such tables with direct read access on the corresponding tables’ HDFS files. The following should be noted when enabling this feature in RMS. Users with update permission on Managed Tables in Hadoop SQL would be able to update table data through SQL. But RMS would not provide users with direct write access on the managed HDFS location even if they have update permission in Hadoop SQL.

Direct HDFS Access on Hive Table Data Using Ranger RMS

If Ranger RMS is installed and configured in a CDP environment, the following details describe the high-level access requirements for reading and writing the HDFS files that correspond to Hive tables.

- If the configuration for “Mapping Hive Managed Tables” is not enabled in Ranger RMS, user access to the HDFS files for Managed Tables depends on the type of database:

  - No user would be able to access the underlying HDFS files for Managed Tables present in databases created without any “managedlocation” clause

  - Users can have access to the underlying HDFS files for Managed Tables present in databases created using a non-default “managedlocation” clause, based on the HDFS POSIX permissions or HDFS ACLs of those directories

- If the configuration for “Mapping Hive Managed Tables” is enabled in Ranger RMS, then users are allowed to access the underlying HDFS files for Managed Tables:

  - Any user who created a Managed Table (owner) would be able to directly access the corresponding HDFS files for the table

  - Any user with select/update privilege on all columns in a Managed Table would have read access to the corresponding HDFS files for the table

- For External Tables, users are allowed to access the corresponding HDFS files for the tables based on their select/update permissions:

  - Any user who created an External Table (owner) would be able to directly access the corresponding HDFS files for the table

  - Any user with select/update privilege on all columns in an External Table would be able to directly access the corresponding HDFS files for the table

  - Any user with access to only a selected set of columns in a table would not be able to directly access the corresponding HDFS files for the table

  - Any user given access to all columns in a table by adding the column names explicitly, rather than by “*” in the columns dropdown in Hadoop SQL, would not be able to directly access the corresponding HDFS files for the table

  - Any user with access to columns that have masking policies defined on them would not be able to directly access the corresponding HDFS files for the table

  - Any user with access to columns that have row filtering policies defined on them would not be able to directly access the corresponding HDFS files for the table
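The rules in this list can be summarized as a small decision function. This is only a sketch of the rules as described in this post, not of how RMS is implemented. The names `Grant` and `hdfs_access` are hypothetical, and several details are assumptions made for illustration: that select maps to read and update maps to write on External Tables, that owners of Managed Tables get read access only, and that masking or row filtering policies block direct access for owners as well.

```python
from dataclasses import dataclass
from typing import FrozenSet, Set

ALL_COLUMNS = frozenset({"*"})


@dataclass(frozen=True)
class Grant:
    """Hypothetical summary of one user's Hadoop SQL access to one table."""
    privileges: FrozenSet[str] = frozenset()  # e.g. {"select"}, {"select", "update"}
    columns: FrozenSet[str] = ALL_COLUMNS     # {"*"} or explicit column names
    is_owner: bool = False
    has_masking: bool = False
    has_row_filter: bool = False


def hdfs_access(table_type: str, grant: Grant, map_managed_tables: bool) -> Set[str]:
    """Return the direct HDFS access ('read', 'write') derived from Hive policies."""
    managed = table_type == "managed"

    # Managed Tables are only mapped when the global option is enabled.
    if managed and not map_managed_tables:
        return set()

    # Masking or row filtering policies rule out direct file access (assumed for owners too).
    if grant.has_masking or grant.has_row_filter:
        return set()

    access: Set[str] = set()
    if grant.is_owner:
        # Assumption: owners of Managed Tables still get no direct write access.
        access |= {"read"} if managed else {"read", "write"}

    # Only a "*" column grant qualifies; explicit column lists do not.
    if grant.columns == ALL_COLUMNS:
        if managed:
            if grant.privileges & {"select", "update"}:
                access.add("read")  # never write on the managed location
        else:
            if "select" in grant.privileges:
                access.add("read")
            if "update" in grant.privileges:
                access.add("write")  # assumption: update maps to write
    return access
```

A user with select on “*” for an External Table gets read access in this model, while the same user with select on an explicit list of columns gets nothing, even if that list happens to name every column.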

Summary

Ranger RMS adds a key capability that enhances the security design of CDP clusters. By providing an automatic method to use Hive policies to access the corresponding tables’ HDFS data directly, it not only brings in an important feature to the Ranger data security framework, but also reduces the policy management overhead considerably.

To learn more about Ranger RMS and related features, here are some helpful resources:

Installing Ranger RMS

Ranger Hive-HDFS ACL Sync Overview

CDP Knowledge Hub

Hi Kiran,

that is a very useful feature! Happy to see this enhancement. A kind of similar functionality (less smart) was already there in Apache Sentry and was very useful for reading Hive managed tables in Spark ETL jobs.

Do you have plans to make this available on CDP Public Cloud? (I believe this will require Ranger RAZ as well)

Thanks and regards

Daniel

Hi Daniel,

Ranger RMS is currently designed to address the “HDFS ACL Sync” option in Sentry for CDP Private Cloud Base clusters. At this point, this feature is currently not available in CDP Public Cloud. There are open requests to implement an equivalent feature on CDP Public Cloud.

It would be also useful to have a checkbox for the “hive managed table RMS sync” on policy level in Ranger. So policy creator & maintainer can decide if he wants to use that feature for that specific database or table.

Daniel,

Good point. Currently there is no table level switch in RMS for Managed tables. As mentioned in the blog, there is a global configuration which controls if all Managed Tables are mapped in RMS.