The next generation of big data warehousing is being built on transactional tables. Transactions, of course, enable new use cases that require updating, deleting, and merging rows of data. But more importantly, a transaction-centric design enables advanced features such as materialized views, aggressive data caching, and efficient replication between warehouses, all of which are critical for modern analytics and BI. In the past, such rich features were exclusive to traditional data warehouses that were expensive, limited to the vendor’s proprietary tools, and able to scale only to limited amounts of data, which forced data into islands. In contrast, an important characteristic of the big data ecosystem has been the ability of a rich set of open source tools to access tables in a large, shared data lake. We continue to retain this innovative tool set for the next generation of big data warehousing and have made strides in optimizing the system to work equally well on slower cloud storage by taking advantage of our engine’s caching abilities.

At Cloudera we are partnering with Qubole and open source communities to build this next generation data warehouse. We have added support for these new use cases in Apache Hive, Apache Impala, and Apache Spark. Qubole has also added support for these use cases in Spark and will be releasing support for Presto soon.

We have a mature engine, having added transactions a few years ago, which we used to add advanced features over the last couple of years. We currently have hundreds of customers using transactions in production, and with the release of our latest product CDP, we continue to advance this vision. This blog explores the requirements for sharing transactional tables across open source SQL and non-SQL engines like Spark in our next generation data warehouse and discusses how we are implementing this vision.

The Case for Transactional Tables

The big data ecosystem has clearly moved towards tables as the preferred model for storing data. Tables are flexible enough to be used across batch and streaming systems. They can model a traditional relational table, a group of keys and values as in Apache HBase, a set of objects in S3, files in a directory, or an Apache Kafka stream. And they support sufficient metadata such as schema, storage format information, and statistics to enable tools to implement advanced features and better performance.

Initially, the Hadoop ecosystem supported transactional insertion of new partitions into a table. Replacing, appending, updating, or deleting data in a partition was not transactional and required user coordination to avoid errors. Updates and deletes to a single row required rewriting the entire partition or at least an entire file.

Today users need support for inserting, updating, deleting, and merging rows of data instead of just inserting or replacing partitions. Slowly changing dimensions, a very common method for managing the change in user data over time, require updates. Dropping a single customer’s records to comply with privacy regulation requires deletes. Inserting data continuously from a stream source, such as Apache Kafka, must be supported to enable continuous, near real time upload of data into the warehouse. Ingesting data into the warehouse via small or micro-batches from front end systems requires merge. All of these need transactions to ensure that writes do not conflict and that readers see consistent data regardless of changes taking place during their queries.

Supporting transactions also enables a number of advanced features, due to its consistency model. Consistency is a strong foundation for going faster, but safely. With transactions it is much easier to cache aggressively and correctly. Table data and even metadata can be cached, only to be re-read when the transaction system indicates that the table has changed. Query results can be cached and only recalculated when the transaction system indicates a change to one of the underlying tables. The transaction system can keep track of when materialized views are up to date. This allows the optimizer to decide whether to consider using a materialized view rather than the base table. Once the materialized view is determined to be out of date, the rebuild algorithm can use transactions to determine how to rebuild the materialized view with minimal processing. And using transactions, the replication system can easily track what has changed in a table and thus needs to be shipped to a target table in another instance.
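The caching idea above can be sketched in a few lines of Python. This is a minimal illustration, not how Hive or Impala implement it: `TransactionLog` and `QueryResultCache` are hypothetical names, and a simple per-table counter stands in for whatever change signal the real transaction system provides.

```python
from typing import Callable, Dict, Tuple


class TransactionLog:
    """Hypothetical stand-in for the transaction system: one version counter per table."""

    def __init__(self) -> None:
        self._versions: Dict[str, int] = {}

    def commit_write(self, table: str) -> None:
        # Every committed write bumps the version of the table it touched.
        self._versions[table] = self._versions.get(table, 0) + 1

    def version(self, table: str) -> int:
        return self._versions.get(table, 0)


class QueryResultCache:
    """Reuses a cached result only while every underlying table is unchanged."""

    def __init__(self, log: TransactionLog) -> None:
        self._log = log
        self._entries: Dict[str, Tuple[Tuple[int, ...], object]] = {}
        self.recomputations = 0

    def get(self, query: str, tables: Tuple[str, ...],
            compute: Callable[[], object]) -> object:
        # The tuple of table versions identifies the data the result was built from.
        snapshot = tuple(self._log.version(t) for t in tables)
        cached = self._entries.get(query)
        if cached is not None and cached[0] == snapshot:
            return cached[1]
        # A table changed (or the query is new): recompute and remember the versions.
        result = compute()
        self.recomputations += 1
        self._entries[query] = (snapshot, result)
        return result
```

Under these assumptions, a cached result is served until `commit_write` is called for one of the tables the query reads, at which point the next lookup recomputes it. The same version comparison could, in principle, tell an optimizer whether a materialized view is still current.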

Of course, the data in these transactional tables must be secured and governed. Access control at the level of the entire database, a table, or worse, the files that store the table data is not sufficient. Column and row level permissions must be enforced. Compliance officers must be able to mark data as personally identifiable information (PII) and restrict access to those tables, columns, or rows to only the approved personnel. This must be true regardless of what tool a user chooses to access the data.

Supporting Transactional Tables across Tools

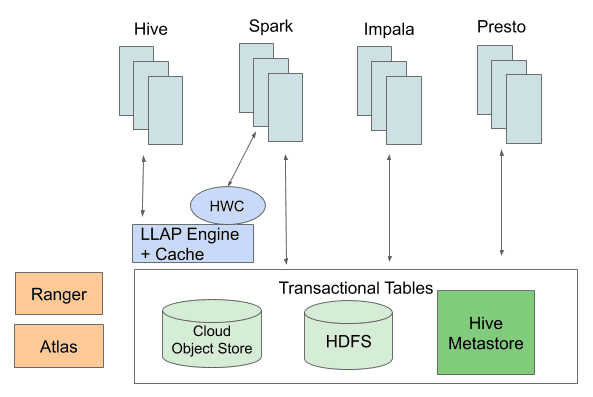

These row level transactions need to be supported across the tools. A key aspect of the big data ecosystem is the ability of users to choose the best tool for the job from a set of innovative open source technologies rather than advocating a one size fits all approach. And flexibility is required since new tools are introduced over time. It should be possible to stream records into a table with Spark and read that table with Presto. It should be possible to update a table in Hive and query it with Impala while staying transactionally consistent. In addition to working across tools, it must work across storage, with tables stored in S3, GCS, ADLS, and HDFS.

What must be done to meet these requirements in the big data ecosystem? Supporting the interoperation of transactional tables between tools requires three things: shared storage, shared metadata and transactions, and shared security and governance. All these must be open and usable by all supported user tools.

Even when these three requirements are met, sharing data across tools comes with challenges. Previously, tools could interoperate on data through the file formats. But transactions and advanced security require more than a file format. Additionally, it is not reasonable to expect every tool to understand every other tool’s data storage layout. Different tools may bucket data differently, even when using the same file format. Some tools may not support all of the security features needed to access a particular data set. Bridges need to be built to allow access across tools.

Cloudera is building the three legs of shared storage, shared metadata and transactions, and common security for data warehousing tables, as well as the necessary bridges, all in open source, to enable our users to bring the tools of their choice to those tables.

The Hadoop ecosystem has long supported a shared data lake using common storage, whether in the cloud or on premises. We have been optimizing our data warehousing tables for object stores, as cloud storage systems are subtly different from HDFS and significantly slower.

The Hive metastore (HMS) is the de facto metastore for the Hadoop ecosystem. It is supported by a variety of open source tools, including Hive, Spark, Impala, and Presto.

Transactions require a write ahead log (WAL). When we started the work on Hive transactions five years ago, it was clear that the HMS is the natural place to store this WAL. HMS stores its data in an RDBMS itself and thus is transactional. It is already used across tools. Further, the metastore is already accessed by every query, so there is minimal additional overhead during query planning.

These ACID transactions are designed to work in write-once systems like cloud object-stores and HDFS that hold the table data and across large distributed computation engines without significantly impacting performance. The design minimizes contention in order to maximize the number of concurrent readers across different tools and allow concurrent writers while avoiding conflicts and deadlocks. The changes to data are stored in a way that minimizes overhead for readers even when there are many concurrent versions of the data.
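One way to picture how write-once storage and concurrent readers can coexist is the simplified model below. It is a sketch under stated assumptions, not Hive's actual file layout or API: each write is stored as an immutable delta tagged with a write ID, and each reader carries a snapshot that says which write IDs it may see. The names `Delta`, `Snapshot`, and `read_table` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional


@dataclass
class Delta:
    """One immutable batch of changes; existing data is never rewritten in place."""
    write_id: int
    # key -> new value, or None to mark the row as deleted
    changes: Dict[str, Optional[str]]


@dataclass(frozen=True)
class Snapshot:
    """What a reader is allowed to see, fixed when its query starts."""
    high_watermark: int
    excluded: FrozenSet[int]  # write IDs that were open or aborted at snapshot time

    def can_see(self, write_id: int) -> bool:
        return write_id <= self.high_watermark and write_id not in self.excluded


def read_table(deltas: List[Delta], snapshot: Snapshot) -> Dict[str, str]:
    """Apply only the deltas visible to this snapshot, in write-ID order."""
    rows: Dict[str, str] = {}
    for delta in sorted(deltas, key=lambda d: d.write_id):
        if not snapshot.can_see(delta.write_id):
            continue  # later, uncommitted, or aborted writes are simply skipped
        for key, value in delta.changes.items():
            if value is None:
                rows.pop(key, None)
            else:
                rows[key] = value
    return rows
```

In this model a writer only ever adds a new delta, so a reader holding an older snapshot keeps getting the same answer while newer writes land, and no locks are needed between readers and writers.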

For security and governance Apache Ranger and Apache Atlas were a natural choice, as these provide the most advanced security and governance available in the big data ecosystem. They work across a variety of tools and different kinds of data, be it files, tables, or Kafka streams.

Building Bridges across Tools

There are tradeoffs to be made when deciding how to connect tools to the existing transactional and security foundations. For example, Qubole is working on implementing direct access to transactional tables for Spark. This will allow Spark users to transactionally insert, update, and delete records in their tables. However, since Spark runs in the user’s context with arbitrary code directly accessing the data, the more advanced security options available in Ranger and Atlas, such as column and row level permissions and tag based policies, cannot be implemented. For users who need these security mechanisms, we have built the Hive Warehouse Connector (HWC), which allows Spark users to access the transactional tables via LLAP’s daemons. This enforces the security policies and provides Spark users with fast parallel read and write access. Since Impala is already integrated with Ranger and can enforce the security policies, it is being directly integrated with transactional tables.

For interoperation across tools, there are additional considerations beyond the ability to read and write transactional tables. One tool may choose to implement a particular feature in one manner, while another implements the same feature in a non-compatible way. Take, as an example, the hashing of data into buckets. Spark uses a different hash function than the latest version of Hive does. If either tool reads data written by the other and assumes that the data is bucketed according to its own hash function, it will return incorrect results. However, the metastore can easily record how the data was bucketed and inform readers when the bucketing scheme differs from that of the reader to avoid issues.

To solve the bucketing and similar problems, we are adding functionality in HMS to support translation of metadata between tools. So in the bucketing example, the metastore will track which bucketing mechanism is being used. When data bucketed according to Spark’s algorithm is read by Hive, the translator will not change the data. Instead, it will tell Hive that the Spark data it is reading is not bucketed, so that Hive does not treat the Spark bucketing scheme as its own and produce wrong results.
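The bucketing hazard and the translation step can be illustrated with a small Python sketch. Everything here is hypothetical: the two hash functions are toy stand-ins (they are not the functions Hive or Spark actually use), and `TableMetadata` and `translate_for_reader` are illustrative names rather than HMS APIs.

```python
from dataclasses import dataclass, replace
from typing import Optional


def engine_a_bucket(key: str, num_buckets: int) -> int:
    # Toy hash for a hypothetical engine A: sum of the key's bytes.
    return sum(key.encode("utf-8")) % num_buckets


def engine_b_bucket(key: str, num_buckets: int) -> int:
    # Toy hash for a hypothetical engine B: base-31 polynomial over the key's bytes.
    h = 0
    for byte in key.encode("utf-8"):
        h = h * 31 + byte
    return h % num_buckets


@dataclass(frozen=True)
class TableMetadata:
    name: str
    num_buckets: int
    bucketing_scheme: Optional[str]  # None means "treat this table as not bucketed"


def translate_for_reader(meta: TableMetadata, reader_scheme: str) -> TableMetadata:
    """Hide bucketing from a reader whose scheme differs from the writer's.

    The data is left untouched; only the metadata handed to the reader changes.
    """
    if meta.bucketing_scheme is not None and meta.bucketing_scheme != reader_scheme:
        return replace(meta, num_buckets=0, bucketing_scheme=None)
    return meta
```

With these toy functions the same key can land in different buckets depending on the engine, which is why a reader that trusted the other engine's layout could look in the wrong bucket. The translator avoids this by reporting the table as unbucketed to a mismatched reader, trading a possible optimization for correct results.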

Of course, all of this is being done in open source. In most cases this is enhancing existing Apache projects. Where new pieces are needed they are being done in the open as well. We are working with Qubole to enable Spark to directly access transactional tables, with a plan to extend it to Presto soon. You can read Qubole’s view on transactional tables and about the work they are doing in their recent blog posts “Building a Data Lake the Right Way” and “Qubole Open-Sources Multi-Engine Support for Updates and Deletes in Data Lakes”.

This post has focussed on the vision, its motivation and an overview of how the tools and technologies fit together to form the next generation data warehouse. Over the next few months we will post additional blogs and white papers giving technical details and example code of how we are building the next generation data warehouse by enabling the sharing of transactional tables across tools in the big data ecosystem.

How does this differ from, and affect, the Delta Lake feature of Spark if using Hive as the base?

Qubole has recently published a blog post that does a great job of comparing these (see https://www.qubole.com/blog/qubole-open-sources-multi-engine-support-for-updates-and-deletes-in-data-lakes/). The summary is that Hive’s transactional tables support update and delete from SQL at the row level, while the open source version of Delta Lake works at a file level and is not SQL based. Also, as discussed here, we’re focussed on working across tools, whereas Delta Lake works primarily with Spark (with some support for Presto).

I’m not sure what you mean by “affect delta lake features”. Hive cannot currently read Delta Lake files, with or without ACID.

In which version of the Cloudera product will this capability be available for production use, and what is the target release date?