The best data protection strategy is to remove sensitive information from every place it’s not needed.

Have you ever wondered what sort of “sensitive” information might wind up in Apache Hadoop log files? For example, if you’re storing credit card numbers inside HDFS, might they ever “leak” into a log file outside of HDFS? What about SQL queries? If you have a query like select * from table where creditcard = '1234-5678-9012-3456', where is that query information ultimately stored?

This concern affects anyone managing a Hadoop cluster containing sensitive information. At Cloudera, we set out to address this problem through a new feature called Sensitive Data Redaction, which is available starting in Cloudera Manager 5.4.0 when operating on a CDH 5.4.0 cluster.

Specifically, this feature addresses the “leakage” of sensitive information into channels unrelated to the flow of data, not the data stream itself. So, for example, Sensitive Data Redaction will get credit-card numbers out of log files and SQL queries, but it won’t touch credit-card numbers in the actual data returned from an SQL query, nor will it modify the stored data itself.

Investigation

Our first step was to study the problem: load up a cluster with sensitive information, run queries, and see if we could find the sensitive data outside of the expected locations. In the case of log files, we found that SQL queries themselves were written to several log files. Beyond the SQL queries, however, we did not observe any egregious offenders; developers seem to know that writing internal data to log files is a bad idea.

That’s the good news. The bad news is that the Hadoop ecosystem is really big, and there are doubtless many code paths and log messages that we didn’t exercise. Developers are also adding code to the system all the time, and future log messages might reveal sensitive data.

Looking more closely at how copies of SQL queries are distributed across the system was enlightening. Apache Hive writes a job configuration file that contains a copy of the query, and makes this configuration file available “pretty much everywhere.” Impala keeps queries and query plans around for debugging and record keeping and makes them available in the UI. Hue saves queries so they can be run again. This behavior makes perfect sense: users want to know what queries they have run, want to debug queries that went bad, and want information on currently running queries. When sensitive information is in the query itself, however, this helpfulness is suddenly much less helpful.

One way to tackle such “leakage” of sensitive data is to put log files in an encrypted filesystem such as that provided by Cloudera Navigator Encrypt. This strategy is reasonable and addresses compliance concerns, especially in the event that some users require the original queries.

This approach still allows some users to see the log files in the clear; the contents of the log files make their way to the Cloudera Manager UI. In most cases, however, the original query strings are not strictly required, and the preferred solution is to simply remove (a.k.a. redact) the sensitive data entirely from places where it’s not needed.

The Approach

We decided to tackle redaction for log files as well as for SQL queries. Even though we observed little information leakage from log files, we decided that it would be better to be safe than sorry and apply redaction to all of them. We also wanted to protect against future log messages and code paths that we didn’t exercise. We therefore implemented “log redaction” code that plugs itself into the logging subsystem used by every component of Hadoop. This “log4j redactor” will inspect every log message as it’s generated, redact it, and pass it on to the normal logging stream.
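To make the idea concrete, here is a minimal sketch of a redacting hook in a logging pipeline. It uses Python’s standard `logging` module as a stand-in for log4j, so it is an analogy and not the actual log4j redactor. The names `RedactingFilter`, `build_logger`, and `CARD_PATTERN` are hypothetical, and the pattern is only an approximation of a credit-card rule.

```python
import logging
import re

# Assumption for illustration: four groups of four digits, each pair
# separated by a single non-word character such as "-" or " ".
CARD_PATTERN = re.compile(r"\d{4}\W\d{4}\W\d{4}\W\d{4}")


class RedactingFilter(logging.Filter):
    """Rewrites each log record in place before a handler formats it."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Render the message with its arguments first, so that values
        # passed as format arguments are redacted too.
        message = record.getMessage()
        record.msg = CARD_PATTERN.sub("XXXX-XXXX-XXXX-XXXX", message)
        record.args = None
        return True  # keep the record; only its text has changed


def build_logger(name: str, handler: logging.Handler) -> logging.Logger:
    """Returns a logger whose handler redacts every message it emits."""
    handler.addFilter(RedactingFilter())
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    logger.propagate = False  # avoid an unredacted copy via the root logger
    return logger
```

Attaching the filter to the handler means the calling code does not change: it logs as usual, and the redaction happens on the way to the output stream.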

The other component of this effort was to redact SQL queries at their source, which required work in Hive, Impala, and Hue (the open source GUI for Hadoop components). In both Hive and Impala, as soon as the query is entered it is split into two: the original copy that’s used to actually run the query, and a redacted copy of the query that’s shown to anything external. In Hue, queries are redacted as they are saved.
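The “split into two” idea can be sketched as a small value object that carries both copies of a query. This is an illustrative assumption about the shape of the design, not the actual Hive or Impala code; `SubmittedQuery`, `redact_query`, and the credit-card pattern are hypothetical names and values.

```python
import re
from dataclasses import dataclass

# Assumption for illustration: an approximate credit-card pattern.
CARD_PATTERN = re.compile(r"\d{4}\W\d{4}\W\d{4}\W\d{4}")


def redact_query(sql: str) -> str:
    """Returns a copy of the query text with card numbers masked."""
    return CARD_PATTERN.sub("XXXX-XXXX-XXXX-XXXX", sql)


@dataclass(frozen=True, repr=False)
class SubmittedQuery:
    original: str  # used only to actually run the query
    redacted: str  # the only form shown to logs, UIs, and history

    @classmethod
    def from_user_input(cls, sql: str) -> "SubmittedQuery":
        # Redact once, at the point of entry, so nothing downstream
        # ever needs to handle the original text for display.
        return cls(original=sql, redacted=redact_query(sql))

    def __repr__(self) -> str:
        # Accidentally printing or logging the object leaks nothing.
        return f"SubmittedQuery({self.redacted!r})"
```

Redacting at the source keeps the rule simple: the execution engine reads `original`, and everything else reads `redacted`.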

Finally, Cloudera Manager makes this all easy to configure. The administrator is able to specify a set of rules for redaction in one place, click a button, and have the redaction rules take effect on everything throughout the cluster.

Configuring Redaction

Let’s see how this works in practice. In Cloudera Manager there are two new parameters in HDFS, one to enable redaction and one to specify what to redact. Let’s say that I want to redact credit-card numbers. But because credit-card numbers are also a boring demo, let’s also say that I just read a Harry Potter book and would feel more comfortable if the name “Voldemort” were replaced by “He Who Shall Not Be Named.”

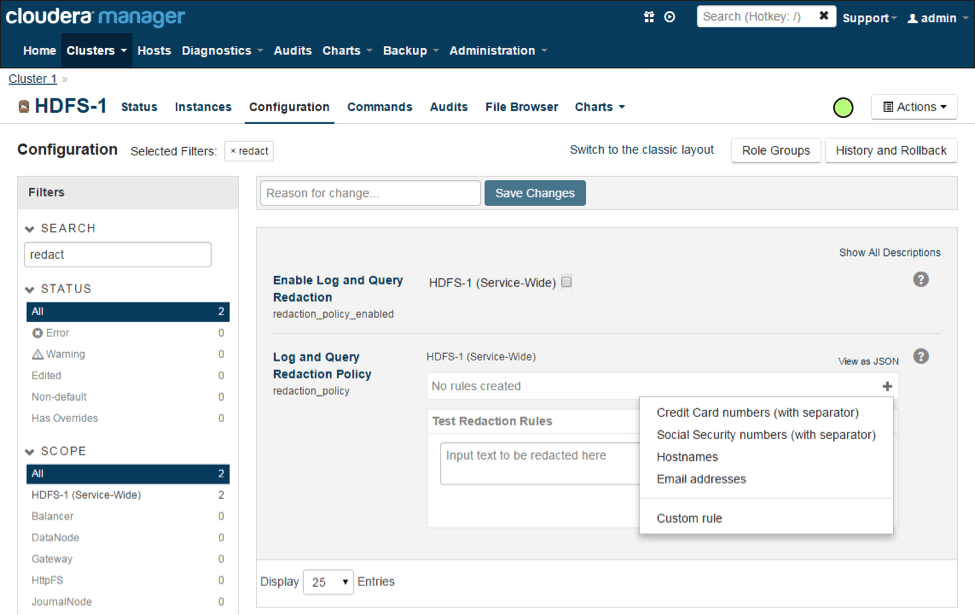

Redaction is an HDFS parameter that is applied to the whole cluster. The easiest way to find it is to simply search for ‘redact’ on the HDFS Configuration page:

Here there is no “Log and Query Redaction Policy” defined yet, and I’ve clicked on the + sign to add one. There are four presets in the UI, and it’s easy to create custom rules from scratch. For the credit-card numbers, I’ll select the first entry, “Credit Card numbers (with separator)”.

This action creates the first rule.

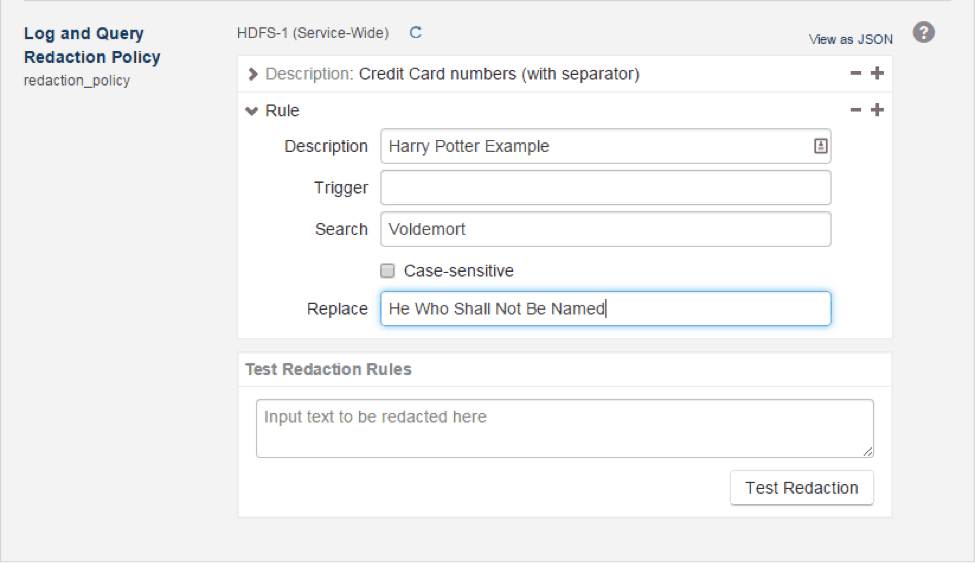

- The “Description” is useful for record keeping and to remember what a rule is for, but has no impact on the actual redaction itself.

- The “Trigger” field, if specified, limits the cases in which the “Search” field is applied. It’s a simple string match (not a regular expression); only if that string appears in the data to redact is the “Search” regular expression applied. This is a performance optimization: string matching is much faster than regular expressions.

- The most important parameter is the “Search” field. It’s a regular expression that describes the thing to redact. The search pattern shown here looks complicated, but it simply describes a credit-card number: four digits, a separator, four more digits, and so on.

- The final field, “Replace,” is what to put in the place of the text matched by “Search.”
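The way these four fields interact can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not Cloudera’s implementation: the class `RedactionRule`, the function `redact`, the case-sensitive trigger check, the in-order application of rules, and the regular expression itself are all illustrative choices.

```python
import re
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class RedactionRule:
    description: str  # record keeping only; never consulted when redacting
    search: str       # regular expression describing the text to redact
    replace: str      # text substituted for each match
    trigger: Optional[str] = None  # plain substring that gates the regex

    def apply(self, text: str) -> str:
        # Cheap substring test first: if a trigger is set and is absent,
        # the more expensive regular expression is skipped entirely.
        if self.trigger and self.trigger not in text:
            return text
        return re.sub(self.search, self.replace, text)


def redact(text: str, rules: Iterable[RedactionRule]) -> str:
    """Applies each rule in turn (ordering is an assumption of this sketch)."""
    for rule in rules:
        text = rule.apply(text)
    return text


# Hypothetical rules mirroring the two examples in this post.
RULES = [
    RedactionRule(
        description="Credit card numbers (with separator)",
        search=r"\d{4}\W\d{4}\W\d{4}\W\d{4}",
        replace="XXXX-XXXX-XXXX-XXXX",
    ),
    RedactionRule(
        description="Names that should not be spoken",
        trigger="Voldemort",
        search=r"Voldemort",
        replace="He Who Shall Not Be Named",
    ),
]
```

With these rules, `redact("Voldemort's card number is 1234-5678-9012-3456.", RULES)` returns `"He Who Shall Not Be Named's card number is XXXX-XXXX-XXXX-XXXX."`, while text that contains neither a card number nor the trigger string passes through unchanged.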

Let’s now click on the + sign and select a custom rule.

The fields start out blank; I filled them in so that instances of the name “Voldemort” are replaced with “He Who Shall Not Be Named”.

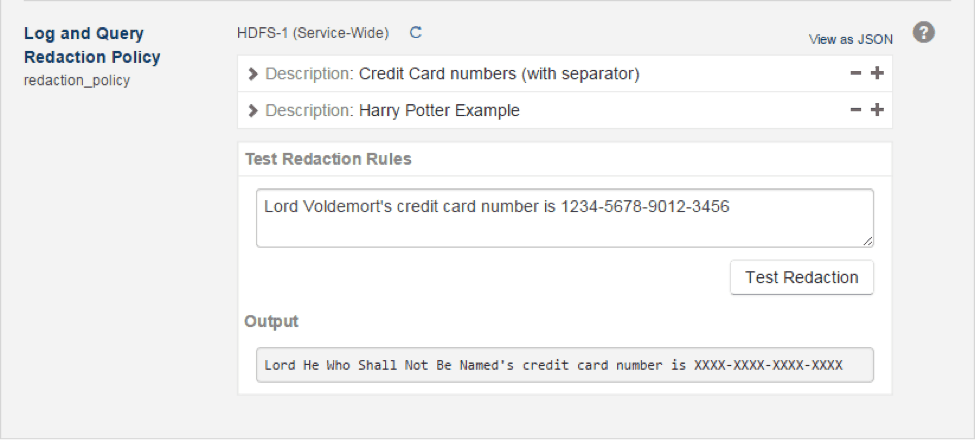

Now, for a really useful part of the UI: It’s possible to test the existing redaction rules against some text in order to be certain that they work as expected. This takes the guesswork out of making redaction rules; you can easily see how they work in action. Here’s an example:

The Test Redaction Rules box has my sample sentence to redact, and the Output box shows that Voldemort’s name and credit-card information have both been replaced with something much more comforting.

Once I check the “Enable Log and Query Redaction” box (visible in the first screenshot) and restart the cluster, these redaction rules are propagated to the cluster. The easiest place to see them in effect is in Hue. For the purposes of this entirely contrived example, let’s say I have a table containing Harry Potter character credit-card information. Let’s try an Impala search inside Hue:

I actually typed the word “Voldemort” into the UI. After the query executed, the notification “This query had some sensitive information removed when saved” appeared. Let’s check out the list of recent queries:

Hue replaced Voldemort’s name with “He Who Shall Not Be Named” in the list of recent queries. We can also see what Impala does with this query. Going to the Impala Daemon’s web UI, we see the same redaction taking place:

The same redaction holds true for Hive, for log files, and for any other place where this query might have appeared.

Conclusion

We hope that you find this functionality useful. Several teams across CDH came together to make this project happen, including those working on Cloudera Manager, Impala, Hive, Hue, packaging, QA, and docs.

Removing sensitive information from places where it’s not needed is the simplest and most effective data protection strategy. The Sensitive Data Redaction feature achieves that goal throughout CDH and provides an easy, intuitive UI in Cloudera Manager.

Michael Yoder is a Software Engineer at Cloudera.