Has your organization considered upgrading from Hortonworks DataFlow (HDF) to Cloudera Flow Management (CFM), but worried that the migration would be too disruptive to your mission-critical dataflows? In practice, many NiFi dataflows can be migrated from HDF to CFM quickly and easily, with no data loss and no service interruption. Here we explore three common use cases in which a CFM cluster can take over an HDF cluster’s dataflows with minimal to no downtime. Now is the time to move to the latest NiFi without disrupting your business.

Use Case 1: NiFi pulling data from Kafka and pushing it to a file system (like HDFS)

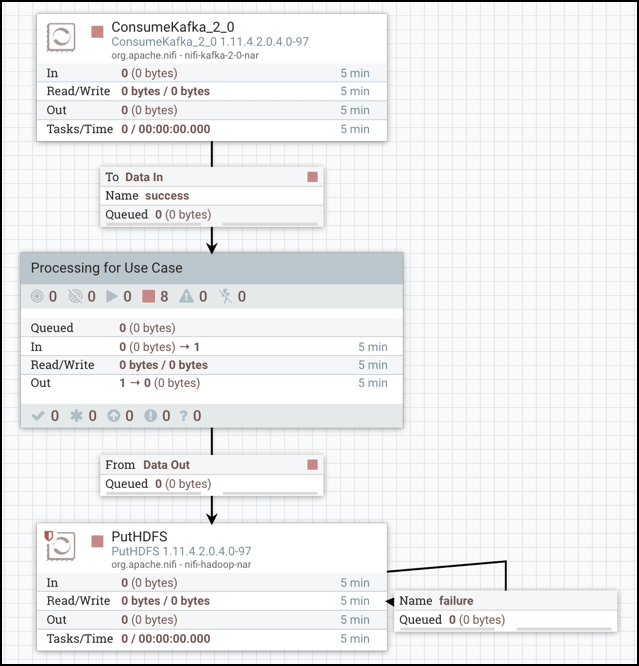

An example of this use case is a flow that utilizes the ConsumeKafka and PutHDFS processors.

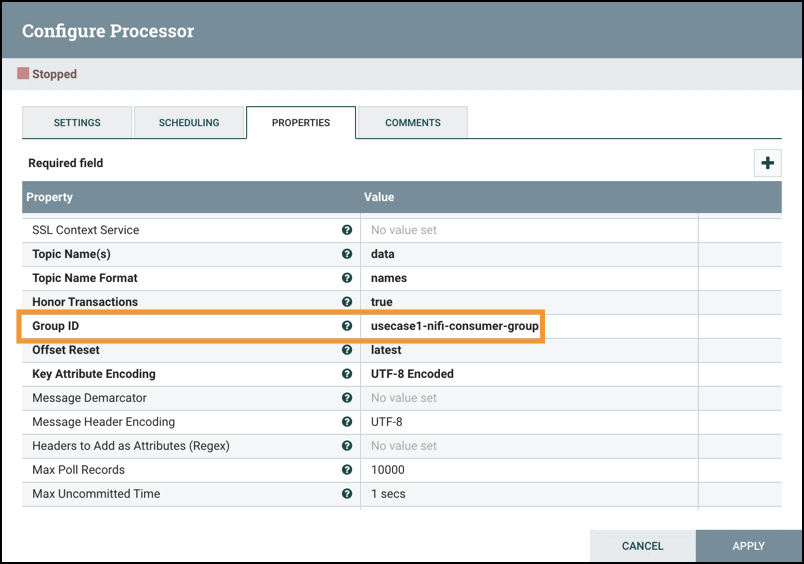

With the flow running in HDF, you can set up the same flow in the CFM cluster, making sure to use the same “Group ID” in each ConsumeKafka processor configuration.

Start the flow in the CFM cluster. The Kafka group coordinator for that consumer group will rebalance the topic’s partitions across the consumers in both the HDF and CFM clusters.

When ready to migrate, stop the ConsumeKafka processor in the HDF flow. Kafka then reassigns the partitions to the remaining CFM consumers, so new data is ingested only by CFM. Once the downstream PutHDFS processor in HDF has processed all of its queued data, the HDF cluster can be shut down, because CFM has taken over the flow’s responsibilities.

Thanks to the Kafka protocol and how consumers (from the same Group ID) are assigned to Kafka partitions for a given topic, it is really easy to migrate flows where Kafka is the source.
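To make the rebalancing behavior concrete, here is a minimal sketch in plain Python that simulates how partitions might be spread across the members of one consumer group during the three phases of the migration. It is an illustration only: the node names and the six-partition topic are hypothetical, and the simple round-robin logic stands in for whichever partition assignment strategy your Kafka consumers are actually configured to use.

```python
from collections import defaultdict


def assign_round_robin(partitions, consumers):
    """Spread partitions across the sorted members of one consumer group.

    Simplified stand-in for a Kafka partition assignor (assumption: the real
    assignment depends on the configured strategy).
    """
    assignment = defaultdict(list)
    members = sorted(consumers)
    for i, partition in enumerate(sorted(partitions)):
        assignment[members[i % len(members)]].append(partition)
    return dict(assignment)


partitions = list(range(6))  # hypothetical topic with 6 partitions
hdf_nodes = ["hdf-node-1", "hdf-node-2"]  # hypothetical node names
cfm_nodes = ["cfm-node-1", "cfm-node-2"]  # hypothetical node names

# Phase 1: only the HDF flow is running.
hdf_only = assign_round_robin(partitions, hdf_nodes)

# Phase 2: the CFM flow starts with the same Group ID, so both clusters share the topic.
both = assign_round_robin(partitions, hdf_nodes + cfm_nodes)

# Phase 3: ConsumeKafka is stopped in HDF, so CFM owns every partition.
cfm_only = assign_round_robin(partitions, cfm_nodes)

for phase, result in [("HDF only", hdf_only), ("HDF + CFM", both), ("CFM only", cfm_only)]:
    print(phase, result)
```

In every phase each partition has exactly one owner within the group, which is why no record is skipped or consumed twice by the group while ownership shifts from HDF to CFM.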

Use Case 2: NiFi pulling data from a database and sending it to a data store (like Kudu)

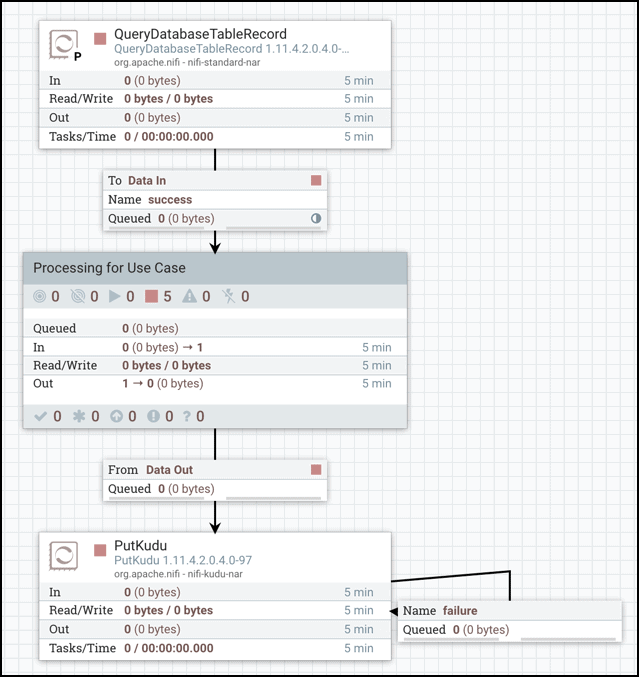

An example of this use case is a flow that utilizes the QueryDatabaseTableRecord and PutKudu processors.

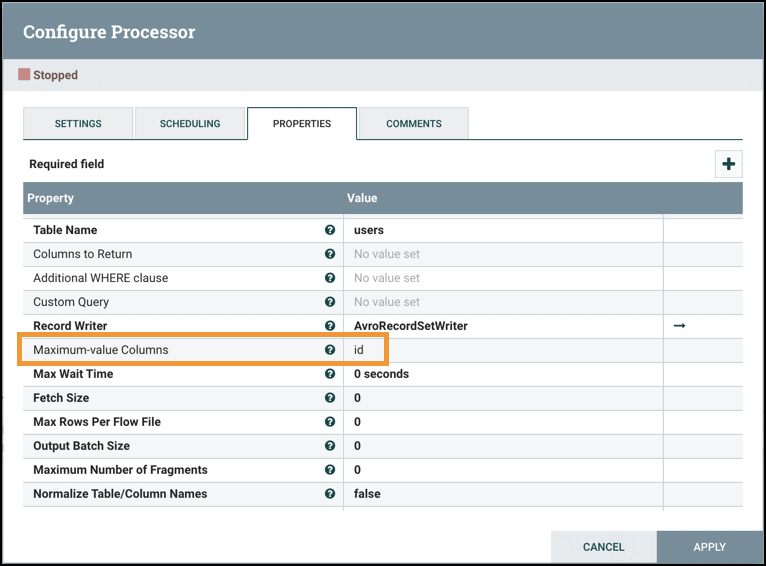

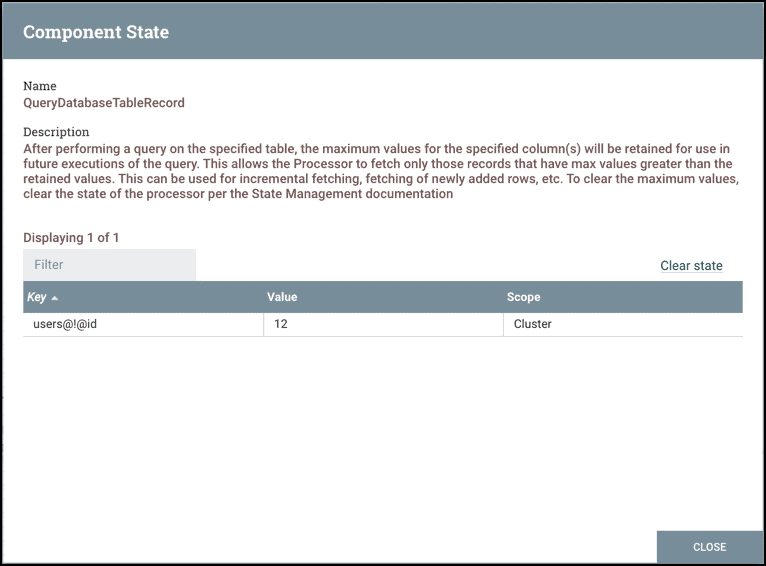

Have the flow running in HDF with the QueryDatabaseTableRecord processor configured to use the “Maximum-value Columns” property.

In this example, the QueryDatabaseTableRecord processor will keep track of the maximum value for the “id” column in the “users” table since the processor started running.

Set up the same flow in the CFM cluster but do not start it yet.

When ready to migrate from HDF to CFM, stop the QueryDatabaseTableRecord processor in HDF and allow the remaining data to be processed by the downstream PutKudu. Then right-click on the QueryDatabaseTableRecord processor and select “View State” to note the “id” value at which the processor stopped retrieving.

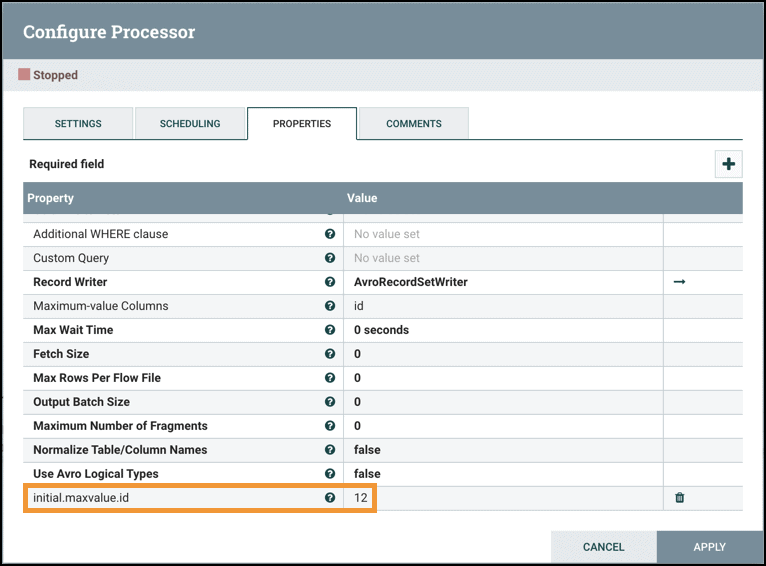

In CFM, add the initial.maxvalue.<max_value_column> dynamic property to the QueryDatabaseTableRecord processor. Set the value of the dynamic property to the “id” value noted from the HDF processor state.

Start the CFM flow, and it will begin pulling rows from the database table right where HDF left off. After confirming the flow is working as expected in CFM, the HDF cluster can be shut down.
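The handoff of the maximum value can be sketched in a few lines of Python. This is a conceptual illustration, not NiFi code: the helper functions are hypothetical, the value 48213 is a made-up example of what “View State” might show, and the SQL is only an approximation of the incremental query the processor issues (the exact statement depends on the database adapter).

```python
def initial_maxvalue_property(column, value):
    """Build the dynamic property name and value for one max-value column."""
    return {f"initial.maxvalue.{column}": str(value)}


def incremental_query(table, column, last_seen):
    """Approximate form of a query that fetches only rows newer than last_seen."""
    return f"SELECT * FROM {table} WHERE {column} > {last_seen}"


# Hypothetical "id" value noted from the HDF processor's state.
hdf_last_id = 48213

# Dynamic property to add to QueryDatabaseTableRecord in CFM.
props = initial_maxvalue_property("id", hdf_last_id)
print(props)

# Conceptually, the first CFM run then asks only for rows HDF never saw.
query = incremental_query("users", "id", hdf_last_id)
print(query)
```

Because the CFM processor starts from the value at which HDF stopped, rows already delivered by HDF are not fetched again and no new rows are skipped.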

Use Case 3: NiFi listening for incoming HTTP data and sending it to a file system (like HDFS)

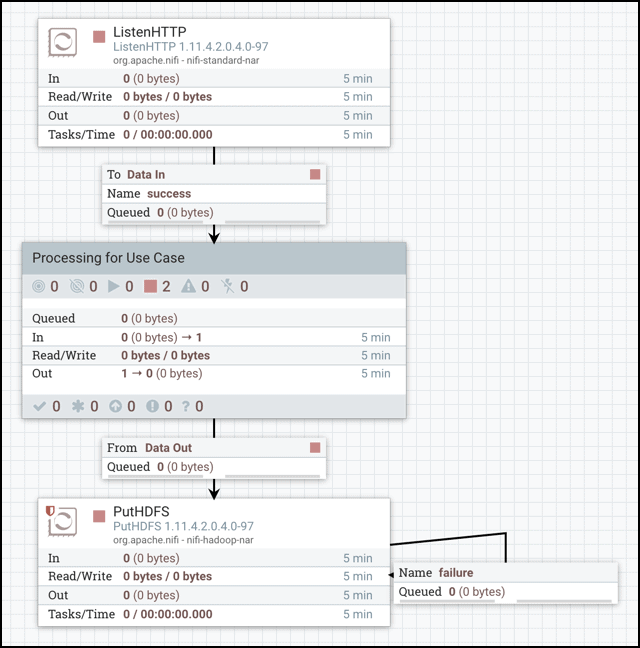

An example of this use case is a flow that utilizes the ListenHTTP and PutHDFS processors.

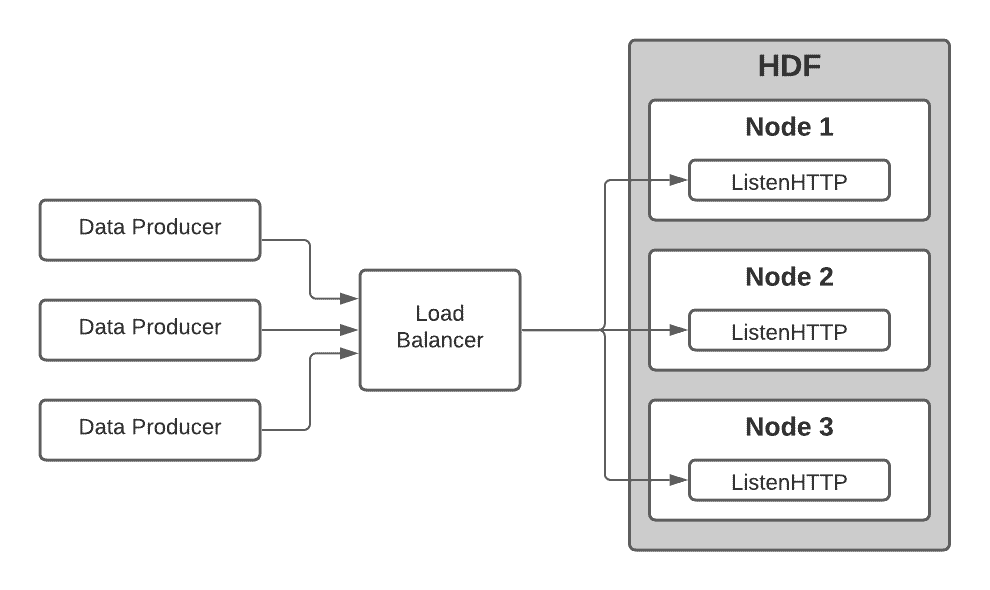

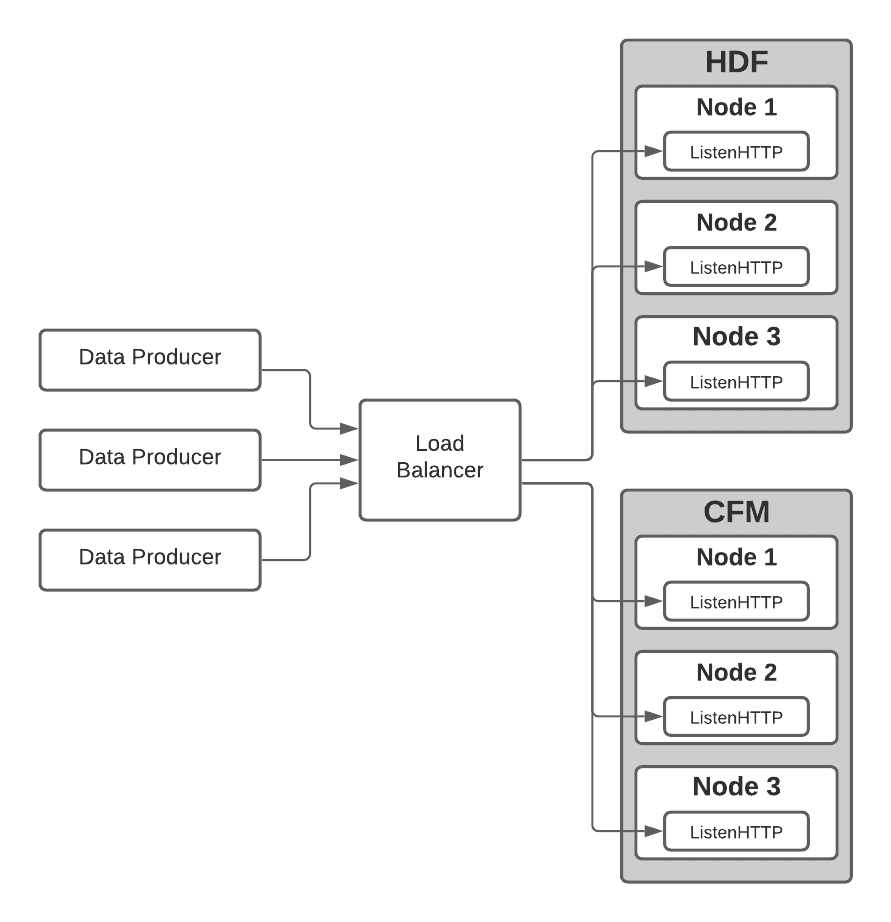

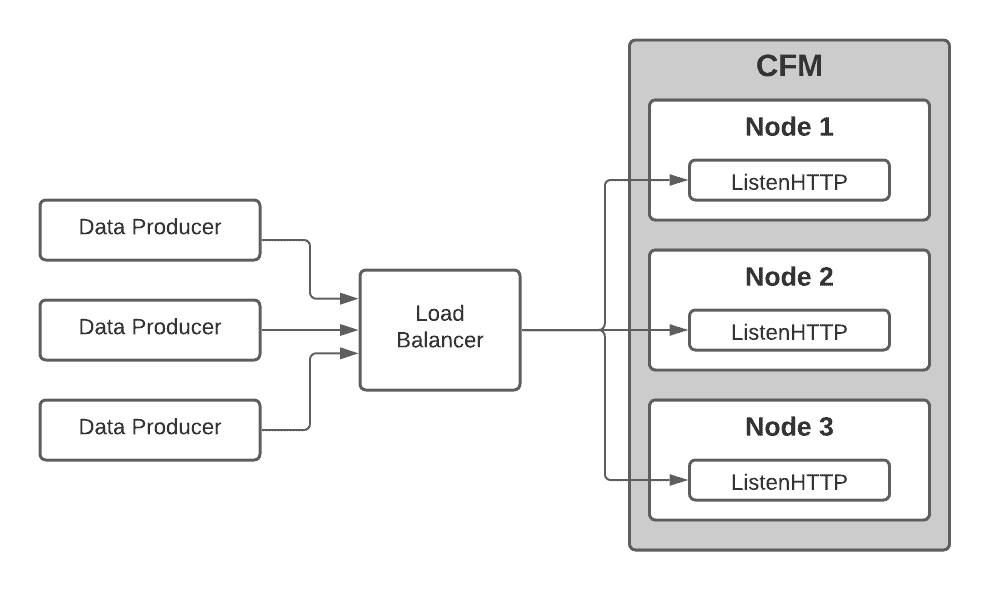

When NiFi exposes an HTTP endpoint (or any other type of endpoint, such as Syslog, TCP, or UDP), a load balancer is typically set up in front of NiFi. Initially, the load balancer is configured to route incoming data only to the HDF nodes.

With the HDF flow running, you can set up the CFM cluster and run the same flow. Because the load balancer does not yet know about the CFM nodes, they will not ingest any data. When ready to migrate, add the CFM nodes to the load balancer’s node group so that the CFM nodes also ingest data.

Next, remove the HDF nodes from the load balancer configuration.

With the HDF nodes no longer ingesting data, let the HDF flow drain out. The CFM flow is now ingesting data as expected and the HDF cluster can be shut down.
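The cutover sequence can be illustrated with a small Python simulation of a round-robin backend pool. Everything here is hypothetical (the `BackendPool` class, the node names, and the round-robin policy); a real load balancer is reconfigured through its own configuration or API, but the order of operations is the same.

```python
class BackendPool:
    """Toy round-robin pool standing in for a load balancer's node group."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self._next = 0

    def add(self, node):
        if node not in self.nodes:
            self.nodes.append(node)

    def remove(self, node):
        self.nodes.remove(node)

    def route(self):
        """Return the node that receives the next request."""
        node = self.nodes[self._next % len(self.nodes)]
        self._next += 1
        return node


# Step 1: only HDF nodes are registered, so only HDF ingests data.
pool = BackendPool(["hdf-1", "hdf-2"])
before = [pool.route() for _ in range(4)]

# Step 2: add the CFM nodes; both clusters now receive requests.
pool.add("cfm-1")
pool.add("cfm-2")
during = [pool.route() for _ in range(4)]

# Step 3: remove the HDF nodes; only CFM ingests from here on.
pool.remove("hdf-1")
pool.remove("hdf-2")
after = [pool.route() for _ in range(4)]

print("before:", before)
print("during:", during)
print("after: ", after)
```

Adding the CFM nodes before removing the HDF nodes means the pool is never empty, so clients sending data to the endpoint see no interruption.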

Additional Benefits & Next Steps

As demonstrated, many common NiFi use cases can be migrated from HDF to CFM with minimal to no downtime. Furthermore, migrating with separate HDF and CFM clusters provides the following additional advantages:

- The ability to roll back to the HDF flow if the CFM flow requires debugging.

- The hardware requirements for an additional NiFi cluster are small compared to those of Hadoop clusters.

- The ability to start with a fresh cluster on RHEL8 when supported by the Cloudera Data Platform during Q1/Q2 in 2021.

Are you excited to learn more? Contact your Cloudera account representative to talk directly with our experts about your NiFi dataflows to chart a successful migration path from HDF to CFM.