Engineers from across the Apache Hadoop community are collaborating to establish Arrow as a de facto standard for columnar in-memory processing and interchange. Here’s how it works.

Apache Arrow is an in-memory data structure specification for use by engineers building data systems. It has several key benefits:

- A columnar memory layout permitting O(1) random access. The layout is highly cache-efficient in analytics workloads and permits SIMD optimizations on modern processors. Developers can create very fast algorithms that process Arrow data structures.

- Efficient and fast data interchange between systems without the serialization costs associated with other systems like Thrift, Avro, and Protocol Buffers.

- A flexible structured data model supporting complex types that handles flat tables as well as real-world JSON-like data engineering workloads.
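To make the first point concrete, here is a minimal sketch in plain Python (not Arrow's API) contrasting a row-oriented layout with a column-oriented one. The variable names and the `age_at` helper are hypothetical, chosen only for illustration; the standard-library `array` type stands in for a fixed-width contiguous buffer.

```python
from array import array

# Row-oriented: one tuple per record, so the ages are scattered across objects.
rows = [("mary", 30), ("mark", 33)]

# Column-oriented: each field lives in its own buffer. The ages are stored as
# contiguous 64-bit signed integers, 8 bytes per value.
names = ["mary", "mark"]
ages = array("q", [30, 33])


def age_at(i):
    """Constant-time lookup: the value sits at byte offset i * ages.itemsize."""
    return ages[i]


# A scan over one column touches only that column's bytes.
total_age = sum(ages)
```

Because every value in the `ages` column has the same width, finding the i-th value is a single offset computation, and a scan reads one contiguous run of memory.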

Arrow isn’t a standalone piece of software but rather a component used to accelerate analytics within a particular system and to allow Arrow-enabled systems to exchange data with low overhead. It is sufficiently flexible to support most complex data models.

For the Python and R communities, Arrow is extremely important, as data interoperability has been one of the biggest roadblocks to tighter integration with big data systems (which largely run on the JVM).

In this post, we’ll explain some of the motivations for Arrow, how Cloudera will use it in its projects, and how the open source community can get involved to create open source analytical software that is both faster and more interoperable.

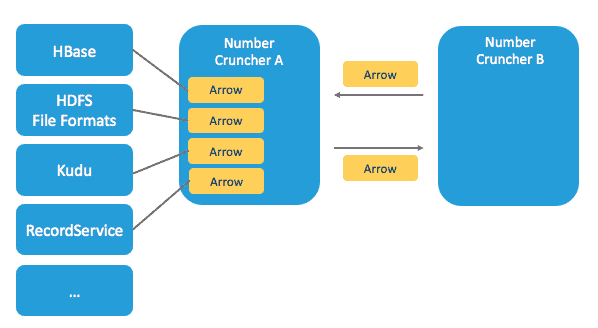

Moving Data Efficiently Between Systems

In the Apache Hadoop ecosystem, data is often stored in one of the following ways:

- In HDFS, in a binary format like Apache Parquet or sequence files, or in a text format such as CSV/TSV

- In an online storage system for structured data like Apache Cassandra, Apache HBase, or Apache Kudu (incubating)

Computation engines (such as Apache Impala [incubating] or Apache Spark) request data from these systems and convert it, as it arrives, into their own native in-memory data structures in order to perform analytics or data transformations. Generally, the in-memory data structures within each computation system are specific to that system, and adapter code converts to and from file formats and marshals data to and from the wire-protocol formats of the online Hadoop storage systems.

Arrow improves the performance of data movement within a cluster in these ways:

- Two processes utilizing Arrow as their in-memory data representation can “relocate” the data from one process to the other without serialization or deserialization. For example, Spark could send Arrow data to a Python process for evaluating a user-defined function.

- Arrow data can be received from Arrow-enabled database-like systems without costly deserialization on receipt. For example, Kudu could send Arrow data to Impala for analytics purposes.
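The difference between the two paths can be sketched in plain Python. This is an illustration under stated assumptions, not Arrow's API: a single process stands in for both the producer and the consumer, JSON stands in for a generic serialization format, and a `memoryview` stands in for a buffer that both sides interpret in place.

```python
import json
from array import array

# Hypothetical producer: a column of ages as contiguous 64-bit integers.
ages = array("q", [30, 33])

# Serialization path: encode the values, then parse them back into new objects.
payload = json.dumps(list(ages))
decoded = json.loads(payload)

# Shared-layout path: the consumer receives a view over the producer's bytes.
# No encoding or parsing step runs, and no values are copied.
shared = memoryview(ages)

# Demonstrate that the view is not a copy: a later write by the producer is
# visible through the view, but not in the decoded (copied) list.
ages[0] = 31
```

After the last line, `shared[0]` reflects the new value while `decoded[0]` still holds the old one, which shows that only the serialization path made a copy. When both sides agree on the memory layout, as Arrow-enabled systems would, the consumer can use the bytes as they are.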

How Arrow’s In-Memory Columnar Layout Enables Better Performance

Arrow’s design is optimized for analytical performance on nested structured data, such as that found in Impala or Spark DataFrames. Let’s look at a concrete example to see what the Arrow array will look like.

    people = [
      {
        name: 'mary', age: 30,
        places_lived: [
          {city: 'Akron', state: 'OH'},
          {city: 'Bath', state: 'OH'}
        ]
      },
      {
        name: 'mark', age: 33,
        places_lived: [
          {city: 'Lodi', state: 'OH'},
          {city: 'Ada', state: 'OH'},
          {city: 'Akron', state: 'OH'}
        ]
      }
    ]

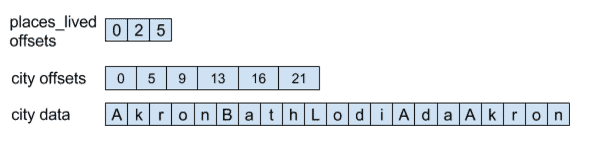

Now, let’s consider just the people.places_lived.city values. In Arrow, the array data enabling you to interpret these values looks like this:

This has immediate benefits:

- You can locate any value of interest in constant (O(1)) time by “walking” the variable-length dimension offsets.

- The city data itself is stored contiguously in memory, so scanning the values for analytics purposes is highly cache-efficient and amenable to SIMD operations (provided the memory is suitably aligned).
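The offset “walking” described above can be sketched in plain Python. The buffers below are an assumption derived from the example records, not output from an Arrow library, and the names `places_lived_offsets`, `city_offsets`, `city_data`, and `city` are hypothetical. Details of the real format such as null tracking are left out.

```python
# One offsets buffer per variable-length dimension, plus one values buffer.
# Person i owns the places in [places_lived_offsets[i], places_lived_offsets[i + 1]).
places_lived_offsets = [0, 2, 5]
# City j owns the bytes in [city_offsets[j], city_offsets[j + 1]).
city_offsets = [0, 5, 9, 13, 16, 21]
# All city names stored back to back in one contiguous buffer.
city_data = b"AkronBathLodiAdaAkron"


def city(person, place):
    """Return the place-th city for a person using two offset lookups."""
    start = places_lived_offsets[person]
    end = places_lived_offsets[person + 1]
    j = start + place
    if not start <= j < end:
        raise IndexError("place index out of range for this person")
    return city_data[city_offsets[j]:city_offsets[j + 1]].decode("ascii")


# The second city mary lived in, and the third city mark lived in.
mary_second = city(0, 1)
mark_third = city(1, 2)
```

Each lookup reads a fixed number of offsets regardless of how many people or cities precede the requested value, and a scan over every city name simply reads `city_data` from start to end.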

We won’t go into further details here, but encourage you to explore the initial format specification and code on http://arrow.apache.org and get involved on the ASF mailing list.

Arrow and Columnar Data Formats Like Apache Parquet

Apache Parquet is a compact, efficient columnar storage format designed for storing large amounts of data in HDFS. Arrow is an ideal in-memory “container” for data that has been deserialized from a Parquet file, and similarly, in-memory Arrow data can be serialized to Parquet and written out to a filesystem like HDFS or Amazon S3. Arrow and Parquet are thus companion projects.

As an example, we have recently been working on Parquet’s C++ implementation to provide an Arrow-Parquet toolchain for native code consumers like Python and R. Within a short time frame, Python programmers will be able to read and write Parquet files natively for the first time ever.

What’s Next and How to Learn More

Initial code (in Java and C++) and work-in-progress specification documents for Arrow can be found here.

We’re really interested in opportunities to use Arrow in Spark, Impala, Kudu, Parquet, and Python projects like Pandas and Ibis. For an overview of Cloudera’s Python-on-Hadoop efforts generally, read this post.

Marcel Kornacker is the founder of Impala.

Todd Lipcon is a Software Engineer at Cloudera, and the founder of the Kudu project. He is also a committer and PMC Member on the Apache Arrow, Apache HBase, and Apache Hadoop projects.

Wes McKinney is a Software Engineer at Cloudera, the founder of the pandas and Ibis projects, and an Apache Arrow committer. Before joining Cloudera, Wes was co-founder of DataPad, and CTO and co-founder of Lambda Foundry, Inc. Wes is the author of the O’Reilly book Python for Data Analysis.

Hi, thanks for the post.

The things I understood are:

1. Arrow is columnar storage.

2. Arrow is in-memory storage.

3. Arrow is not a database.

Please correct me here:

a. Arrow does not have a built-in query engine?

b. Do I have to use Spark or Impala to query it?

c. Can multiple processes connect to and access Arrow like a database?

d. Is there any coding tutorial you would recommend?

Hi,

Thanks for the post.

1. Are there any APIs for connecting to Arrow from Java, or how do I interact with Arrow from Java to store data in memory?

2. What are the prerequisites for working with Arrow from Java?

3. Please provide a coding tutorial.