Learn how to use Cloudera Director, Microsoft Active Directory, and Centrify Express to deploy a secure EDH cluster for workloads in the public cloud.

In Part 1 of this series, you learned about configuring Microsoft Active Directory and Centrify Express for optimal security across your Cloudera-powered EDH, whether for on-premise or public-cloud deployments. In this concluding installment, you’ll learn the cloud-specific pieces in this process, including some AWS fundamentals and in-depth details about cluster provisioning using Cloudera Director.

Basic Concepts: Instance Types, AMIs, VPCs, and Subnets

(Note: AWS veterans can skip this section.)

The basic building block of the AWS Elastic Compute Cloud service (EC2) is the instance type, which is basically a virtual server with certain hardware specifications. Different instance types are appropriate for different purposes; for example, an instance type that is suitable for use as a worker node would have more local storage than an instance type used to run Cloudera Manager.

When requesting an instance type, a user must also specify an Amazon Machine Image, or AMI. An AMI specifies an operating system build on which to deploy the instance. There are many AMIs available from which to choose, and it is possible to create your own.

Beyond standing up individual EC2 instances, you can group them logically to ease administration, network configuration, and security setup. This grouping is done using a virtual private cloud, or VPC. A VPC helps with security because hosts can use private networking rather than being exposed to the public Internet.

Within a VPC there can be multiple network subnets. These subnets are custom defined, and can be used to further segment the VPC. For example, it may be desirable to set up a specific subnet for one project’s cluster(s) separate from other projects.

While provisioning EC2 instances inside the same VPC subnet is a good practice, that step by itself is not enough to allow network traffic between them. When provisioning EC2 instances, you must specify one or more security groups in addition to the VPC and subnet to which the instance belongs. A security group acts as a virtual firewall that defines which traffic is allowed. When defining security group policies, be mindful of the ports used by the various EDH components.

A common practice is to allow all traffic in the same security group, and add a few specific ports to be opened up from outside the security group for things like web UIs.
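Once security groups are in place, it can help to confirm from a cluster host that the ports you expect are actually reachable. The following is a minimal sketch rather than part of the original setup: the function names `port_open` and `check_ports` are hypothetical, and the port list in the usage comment (DNS 53, Kerberos 88, LDAP 389, LDAPS 636) is an assumption about what an Active Directory domain controller typically exposes.

```shell
#!/bin/bash
# Sketch: check TCP reachability using bash's built-in /dev/tcp redirection,
# so no extra packages are required (only coreutils' timeout).

# port_open HOST PORT [TIMEOUT_SECONDS]
# Returns 0 if a TCP connection succeeds within the timeout, non-zero otherwise.
port_open() {
  local host="$1" port="$2" wait="${3:-3}"
  timeout "${wait}" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "${host}" "${port}" 2>/dev/null
}

# check_ports HOST PORT...
# Prints one line per port and returns non-zero if any port is unreachable.
check_ports() {
  local host="$1" rc=0 port
  shift
  for port in "$@"; do
    if port_open "${host}" "${port}"; then
      echo "${host}:${port} open"
    else
      echo "${host}:${port} unreachable"
      rc=1
    fi
  done
  return "${rc}"
}

# Example (hypothetical host and port list; adjust to your environment):
#   check_ports ben-ad-2.cloudera.local 53 88 389 636
```

A failed check does not say which layer blocked the traffic (security group, host firewall, or a service that is simply not running), so treat it as a starting point for troubleshooting.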

Now that we’ve covered some AWS fundamentals for those who need them, let’s proceed to cluster provisioning. All the client configuration files and scripts used for this post are available here.

Deploying Active Directory on AWS

There are several options for deploying Microsoft Active Directory to support an EDH environment on AWS. Customers often ask if the cluster on AWS can use an on-premise Active Directory infrastructure. While this is certainly possible, it is not recommended, mainly because of the latency inherent between on-premise and AWS networks.

A better option is to provision domain controllers on AWS itself inside the same VPC where the cluster resides. There are several ways to use Active Directory on AWS; for example, Amazon recently introduced a Directory Service offering, which is similar to its database offering, RDS.

Directory Service has three flavors: Simple AD, Enterprise, and AD Connector. Unfortunately, none of these offerings are suitable for an EDH environment. Simple AD and Enterprise do not support LDAPS (aka secure LDAP), and AD Connector is only a proxy for specific web applications on AWS, not a general-purpose AD with Kerberos and LDAP integration points. Supporting LDAPS for Simple AD and Enterprise is on the AWS roadmap, but there is no timeline on when this might be implemented.

With that in mind, the remaining option is to use an EC2 instance (or instances, for redundancy) to stand up a Windows Server and configure a new Active Directory environment. It is recommended that the Windows Server instance(s) be in the same VPC as the cluster, to simplify the architecture and for better security. For the example in this post, Windows Server 2012 R2 was used.

Using Cloudera Director

Cloudera Director enables the easy provisioning of EDH clusters in the cloud. A rapidly evolving product, Cloudera Director has become a powerful tool to stand up clusters with security controls enabled by default, getting you closer to using your most sensitive data right away. Cloudera Director 2.0, the current version as of this writing, provides the ability to provision clusters on AWS and Google Cloud Platform, with support for Microsoft Azure on the near-term roadmap.

The general workflow when Director spins up a cluster is as follows (assuming a completely blank slate):

- A new environment is created. An environment contains details such as credentials, VPC, security groups, etc.

- Instances are requested for Cloudera Manager and all the cluster hosts.

- In parallel, all instances are set up, including formatting and mounting disks, installing base packages, and executing a custom bootstrap script (discussed later).

- Install Cloudera Manager server, agent, and management services.

- Install Cloudera Manager agents and CDH parcels on cluster hosts.

- Install and configure the cluster.

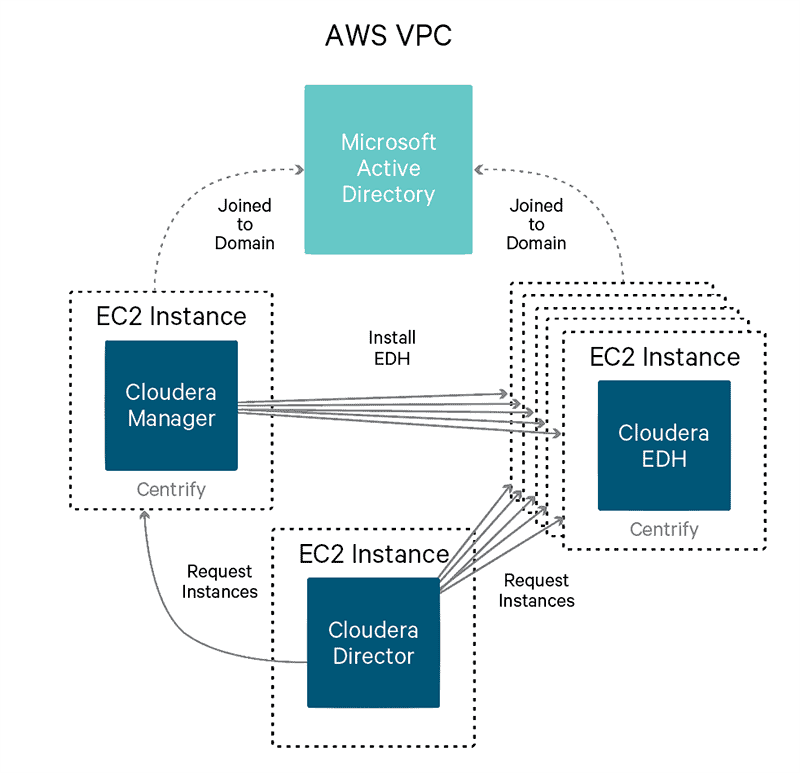

With our example here, all the instances that are provisioned by Director will also have Centrify Express installed and configured (as described in Part 1) so that the instances can be integrated with Active Directory. The high-level system architecture diagram is below.

Client Configuration Files

Cloudera Director offers two different ways to provision clusters: with the web UI, or with a client configuration file. The web UI provides a friendly interface to set up and configure clusters, and has a similar look and feel to Cloudera Manager. In this post, we will focus on using a client configuration file to provision a cluster.

The client configuration file provides an easier way to set up a cluster when extensive configuration tuning is needed. Another benefit to using a client configuration file is that it does not require setting up the Cloudera Director server; rather, it can be used to provision a cluster using only the Director client libraries.

After installing the Cloudera Director client, sample configuration files are available. On a 64-bit instance on AWS, the files aws.simple.conf and aws.reference.conf are located in /usr/lib64/cloudera-director/client. The former is a basic configuration file with the bare bones needed to set up a cluster, while the latter is a more advanced configuration file that is more in line with what is covered here.

Note: In all examples shown in this post, the same password is used everywhere for simplicity. In production environments, passwords should never all be the same, especially for keystore and truststore passwords.
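For a production deployment, one way to follow this advice is to generate a distinct random value for each password once, on an administrative workstation, and then substitute the values into both the bootstrap script and the client configuration file. The sketch below is illustrative only: the helper name `random_password` is hypothetical, and the variable names simply mirror those used in the bootstrap script later in this post. The values must be generated once rather than per host, because the cluster configuration carries a single value per setting for all hosts.

```shell
#!/bin/bash
# random_password [LENGTH]
# Prints LENGTH (default 24) random alphanumeric characters read from
# /dev/urandom. Intended for lengths well under about 200 characters.
random_password() {
  local length="${1:-24}" raw
  raw="$(head -c 1024 /dev/urandom | LC_ALL=C tr -dc 'A-Za-z0-9')"
  printf '%s\n' "${raw:0:length}"
}

# One distinct value per secret, instead of one shared password.
PRIVATE_KEY_PASSWORD="$(random_password)"
KEYSTORE_PASSWORD="$(random_password)"
TRUSTSTORE_PASSWORD="$(random_password)"
```

Restricting the alphabet to letters and digits avoids characters that need quoting in shell scripts and configuration files, at the cost of needing a somewhat longer password for the same strength.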

Bootstrap Script

Cloudera Director has a feature within a cluster configuration to allow for a custom bootstrap script. This script is run on every node (for a specific node template), and the script executes as root. This approach provides a flexible way to do additional configuration and setup that fall outside what Director does itself. The most common usage of these bootstrap scripts is to install additional software that might be needed.

For the security use case we are demonstrating here, the bootstrap script will perform the following actions:

- Configure DNS to point to the Active Directory domain controller.

- Install dependency packages (e.g. openldap-clients, krb5-workstation, etc.).

- Remove unnecessary Java installations (e.g. OpenJDK).

- Install Oracle JDK 1.8u60.

- Install Java Cryptographic Extensions (JCE).

- Install and configure rng-tools, which is useful for increasing entropy.

- Install and configure Centrify Express.

- Join the hosts to the Active Directory domain, using adjoin.

- Obtain host certificates from ADCS, using adcert.

- Prepare certificates for use by EDH components (PEM and JKS artifacts).
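One detail of the domain-join step deserves a note: the pre-Windows 2000 computer name that a host registers in Active Directory is limited to 15 characters. The sketch below assumes default EC2 internal hostnames of the form ip-172-31-53-203.ec2.internal (other regions may use a different suffix) and a hypothetical helper name, `prewin2k_name`. Stripping the "ip-" prefix and the domain suffix leaves at most 15 characters for any IPv4 address, which is presumably why the name is shortened this way.

```shell
#!/bin/bash
# prewin2k_name FQDN
# Derives a short computer name from an EC2-style internal hostname and
# fails if the result exceeds the 15-character pre-Windows 2000 limit.
prewin2k_name() {
  local fqdn="$1" name
  name="${fqdn#ip-}"              # drop the leading "ip-" if present
  name="${name%.ec2.internal}"    # drop the assumed domain suffix if present
  if [ "${#name}" -gt 15 ]; then
    echo "computer name '${name}' exceeds 15 characters" >&2
    return 1
  fi
  printf '%s\n' "${name}"
}

# Example:
#   prewin2k_name "$(hostname -f)"
```

Failing early on an over-long name is a design choice for this sketch; it surfaces the problem before the domain join is attempted.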

For this example, the AMI used is based on RHEL 7.2. The complete bootstrap script used is as follows:

#!/bin/bash

#### Configurations ####

# DNS server, probably AD domain controller

NAMESERVER="172.31.53.203"

# Centrify adjoin parameters

PREWIN2K_HOSTNAME=$(hostname -f | sed -e 's/^ip-//' | sed -e 's/\.ec2\.internal//')

DOMAIN="CLOUDERA.LOCAL"

COMPUTER_OU="ou=servers,ou=prod,ou=clusters,ou=cloudera"

ADJOIN_USER="centrify"

ADJOIN_PASSWORD='Cloudera!'

# Centrify adcert parameters

WINDOWS_CA="cloudera-BEN-AD-2-CA"

WINDOWS_CA_SERVER="ben-ad-2.cloudera.local"

CERT_TEMPLATE="Centrify"

# Cloudera base directory

CLOUDERA_BASE_DIR="/opt/cloudera/security"

# Centrify base cert directory - shouldn't need to be changed

CENTRIFY_BASE_DIR="/var/centrify/net/certs"

# Passwords for certificate objects - should make trust store password different

PRIVATE_KEY_PASSWORD='Cloudera!'

KEYSTORE_PASSWORD='Cloudera!'

TRUSTSTORE_PASSWORD='Cloudera!'

#### SCRIPT START ####

# Set SELinux to permissive

setenforce 0

# Update DNS settings to point to the AD domain controller

sed -e 's/PEERDNS\=\"yes\"/PEERDNS\=\"no\"/' -i /etc/sysconfig/network-scripts/ifcfg-eth0

chattr -i /etc/resolv.conf

sed -e "s/nameserver .*/nameserver ${NAMESERVER}/" -i /etc/resolv.conf

chattr +i /etc/resolv.conf

# Install base packages

yum install -y perl wget unzip krb5-workstation openldap-clients rng-tools

# Enable and start rngd

cp -f /usr/lib/systemd/system/rngd.service /etc/systemd/system

sed -e "s/.*ExecStart.*/ExecStart=\/sbin\/rngd -f -r \/dev\/urandom/" -i /etc/systemd/system/rngd.service

systemctl daemon-reload

systemctl enable rngd

systemctl restart rngd

# Remove OpenJDK and install the Oracle JDK

yum erase -y java-1.6.0-openjdk java-1.7.0-openjdk

wget --no-cookies --no-check-certificate --header "Cookie: oraclelicense=accept-securebackup-cookie" "http://download.oracle.com/otn-pub/java/jdk/8u60-b27/jdk-8u60-linux-x64.rpm" -O jdk-8-linux-x64.rpm

rpm -i jdk-8-linux-x64.rpm

export JAVA_HOME=/usr/java/jdk1.8.0_60

export PATH=$JAVA_HOME/bin:$PATH

rm -f jdk-8-linux-x64.rpm

# Download the JCE and install it

wget --no-check-certificate --no-cookies --header "Cookie: oraclelicense=accept-securebackup-cookie" "http://download.oracle.com/otn-pub/java/jce/8/jce_policy-8.zip" -O UnlimitedJCEPolicyJDK8.zip

unzip UnlimitedJCEPolicyJDK8.zip

cp -f UnlimitedJCEPolicyJDK8/*.jar /usr/java/jdk1.8.0_60/jre/lib/security/

rm -rf UnlimitedJCEPolicyJDK8*

# Download and install the Centrify bits

wget http://edge.centrify.com/products/centrify-suite/2016/installers/20160315/centrify-suite-2016-rhel4-x86_64.tgz

tar xzf centrify-suite-2016-rhel4-x86_64.tgz

rpm -i ./*.rpm

# If the Centrify packages didn't install, abort

if ! rpm -qa | grep -i centrify; then

exit 1;

fi

# Configure Centrify so it doesn't create the http principal when it joins the domain

sed -e 's/.*adclient\.krb5\.service\.principals.*/adclient\.krb5\.service\.principals\: ftp cifs nfs/' -i /etc/centrifydc/centrifydc.conf

# Configure Centrify to turn off automatic clock syncing to AD since the hosts use ntpd already

sed -e 's/.*adclient\.sntp\.enabled.*/adclient\.sntp\.enabled\: false/' -i /etc/centrifydc/centrifydc.conf

# Join the AD domain

adjoin -u "${ADJOIN_USER}" -p "${ADJOIN_PASSWORD}" -c "${COMPUTER_OU}" -w "${DOMAIN}" --prewin2k "${PREWIN2K_HOSTNAME}"

# Check if the domain join was successful

if ! adinfo | grep -qi "joined as"; then

exit 1;

fi

# Check if the Centrify certificates directory exists, if not then create it

if [ ! -d ${CENTRIFY_BASE_DIR} ]; then

mkdir -p ${CENTRIFY_BASE_DIR}

fi

# Create the server certificates

/usr/share/centrifydc/sbin/adcert -e -n ${WINDOWS_CA} -s ${WINDOWS_CA_SERVER} -t ${CERT_TEMPLATE}

# Check if the certificates were successfully created

if [[ ! -f ${CENTRIFY_BASE_DIR}/cert.chain || ! -f ${CENTRIFY_BASE_DIR}/cert.key || ! -f ${CENTRIFY_BASE_DIR}/cert.cert ]]; then

exit 1;

fi

# Create the directory that will contain the certificate objects

mkdir -p ${CLOUDERA_BASE_DIR}

mkdir ${CLOUDERA_BASE_DIR}/x509

mkdir ${CLOUDERA_BASE_DIR}/jks

chmod -R 755 ${CLOUDERA_BASE_DIR}

# Create the trust stores in PEM and JKS format

openssl pkcs7 -print_certs -in $CENTRIFY_BASE_DIR/cert.chain -out ${CLOUDERA_BASE_DIR}/x509/truststore.pem

chmod 644 "${CLOUDERA_BASE_DIR}/x509/truststore.pem"

keytool -importcert -trustcacerts -alias root-ca -file ${CLOUDERA_BASE_DIR}/x509/truststore.pem -keystore ${CLOUDERA_BASE_DIR}/jks/truststore.jks -storepass ${TRUSTSTORE_PASSWORD} -noprompt

chmod 644 "${CLOUDERA_BASE_DIR}/jks/truststore.jks"

# Obtain the full hostname

HOST_NAME=$(hostname -f)

# Create the key stores in PEM and JKS format

openssl rsa -in "${CENTRIFY_BASE_DIR}/cert.key" -out "${CLOUDERA_BASE_DIR}/x509/${HOST_NAME}.key" -aes128 -passout pass:${PRIVATE_KEY_PASSWORD}

chmod 644 "${CLOUDERA_BASE_DIR}/x509/${HOST_NAME}.key"

cp "${CENTRIFY_BASE_DIR}/cert.cert" "${CLOUDERA_BASE_DIR}/x509/${HOST_NAME}.pem"

chmod 644 "${CLOUDERA_BASE_DIR}/x509/${HOST_NAME}.pem"

chmod 644 "${CLOUDERA_BASE_DIR}/x509/${HOST_NAME}.key"

openssl pkcs12 -export -in "${CLOUDERA_BASE_DIR}/x509/${HOST_NAME}.pem" -inkey "${CENTRIFY_BASE_DIR}/cert.key" -out "${CLOUDERA_BASE_DIR}/x509/${HOST_NAME}.pfx" -passout pass:${KEYSTORE_PASSWORD}

keytool -importkeystore -srcstoretype PKCS12 -srckeystore "${CLOUDERA_BASE_DIR}/x509/${HOST_NAME}.pfx" -srcstorepass ${KEYSTORE_PASSWORD} -destkeystore "${CLOUDERA_BASE_DIR}/jks/${HOST_NAME}.jks" -deststorepass ${KEYSTORE_PASSWORD}

chmod 644 "${CLOUDERA_BASE_DIR}/jks/${HOST_NAME}.jks"

echo ${KEYSTORE_PASSWORD} > ${CLOUDERA_BASE_DIR}/passphrase.txt

chmod 400 ${CLOUDERA_BASE_DIR}/passphrase.txt

# Setup JSSE cacerts

cp ${JAVA_HOME}/jre/lib/security/cacerts ${JAVA_HOME}/jre/lib/security/jssecacerts

chmod 644 ${JAVA_HOME}/jre/lib/security/jssecacerts

keytool -importcert -trustcacerts -alias root-ca -file ${CLOUDERA_BASE_DIR}/x509/truststore.pem -keystore ${JAVA_HOME}/jre/lib/security/jssecacerts -storepass changeit -noprompt

# Setup symlinks

ln -s "${CLOUDERA_BASE_DIR}/x509/${HOST_NAME}.key" "${CLOUDERA_BASE_DIR}/x509/server.key"

ln -s "${CLOUDERA_BASE_DIR}/x509/${HOST_NAME}.pem" "${CLOUDERA_BASE_DIR}/x509/server.pem"

ln -s "${CLOUDERA_BASE_DIR}/jks/${HOST_NAME}.jks" "${CLOUDERA_BASE_DIR}/jks/server.jks"

exit 0

(Note: Using a different Red Hat-based AMI may require changes to the bootstrap script.)
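Because the configurations later in this post refer to fixed paths under /opt/cloudera/security, a quick check that the bootstrap script actually produced them can save debugging time. The following is a sketch under that assumption: `verify_artifacts` is a hypothetical helper, and its file list is taken from the paths the script above creates.

```shell
#!/bin/bash
# verify_artifacts [BASE_DIR]
# Confirms that each certificate artifact exists and is non-empty.
# The -s test follows symlinks, so a dangling server.* link is also reported.
verify_artifacts() {
  local base="${1:-/opt/cloudera/security}" rc=0 path
  for path in \
      x509/truststore.pem jks/truststore.jks \
      x509/server.key x509/server.pem jks/server.jks \
      passphrase.txt; do
    if [ ! -s "${base}/${path}" ]; then
      echo "missing or empty: ${base}/${path}" >&2
      rc=1
    fi
  done
  return "${rc}"
}

# Example, run as root on a provisioned host:
#   verify_artifacts || echo "certificate setup incomplete"
```

This only checks presence, not validity; inspecting the certificates with openssl or keytool would be a natural next step.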

Cloudera Manager Configurations

A key step in configuring Cloudera Manager is to set up the cluster appropriately for Kerberos. The requirements for doing that in the Cloudera Director cluster configuration file are described in detail here. As mentioned in Part 1, Cloudera Director utilizes the Cloudera Manager Kerberos wizard.

The configuration snippet is below:

unlimitedJce: true

krbAdminUsername: "prod_cm@CLOUDERA.LOCAL"

krbAdminPassword: "Cloudera!"

CLOUDERA_MANAGER {

KDC_TYPE: "Active Directory"

KDC_HOST: "cloudera.local"

SECURITY_REALM: "CLOUDERA.LOCAL"

KRB_MANAGE_KRB5_CONF: true

KRB_ENC_TYPES: "aes256-cts aes128-cts rc4-hmac"

AD_ACCOUNT_PREFIX: "cdh_"

AD_KDC_DOMAIN: "ou=serviceaccounts,ou=prod,ou=clusters,ou=cloudera,dc=cloudera,dc=local"

}
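Several values in this snippet are different spellings of the same domain: the realm CLOUDERA.LOCAL, the DNS name cloudera.local, and the dc=cloudera,dc=local suffix of the distinguished name. As an illustrative sketch (the helper `realm_to_base_dn` is hypothetical and not part of Cloudera Director), the suffix can be derived from the realm to reduce the chance of a typo when adapting the configuration to another domain.

```shell
#!/bin/bash
# realm_to_base_dn REALM
# Converts a Kerberos realm or DNS domain such as CLOUDERA.LOCAL into the
# corresponding LDAP base DN, dc=cloudera,dc=local.
realm_to_base_dn() {
  local domain
  domain="$(printf '%s' "$1" | tr 'A-Z' 'a-z')"
  printf 'dc=%s\n' "${domain//./,dc=}"
}

# Example: build the AD_KDC_DOMAIN value used in this post.
SECURITY_REALM="CLOUDERA.LOCAL"
AD_KDC_DOMAIN="ou=serviceaccounts,ou=prod,ou=clusters,ou=cloudera,$(realm_to_base_dn "${SECURITY_REALM}")"
echo "${AD_KDC_DOMAIN}"
```

The OU path itself is specific to the directory layout from Part 1 and still has to be supplied by hand.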

Cloudera Management Services Configurations

The Cloudera Management Services include Cloudera Navigator, as well as all the monitoring services. From a security perspective, the configurations needed here are for TLS and also Active Directory integration for the Cloudera Navigator web UI.

Service-Wide Configurations

The only service-wide security configuration needed is a trust store that the services use to validate certificates during TLS communication. The configuration snippet is as follows:

CLOUDERA_MANAGEMENT_SERVICE {

ssl_client_truststore_location: "/opt/cloudera/security/jks/truststore.jks"

ssl_client_truststore_password: "Cloudera!"

}

Role Configurations

Role configurations needed for security are centered around the Navigator Metadata Server, which serves the Cloudera Navigator web UI. This includes enabling HTTPS for the web console, enabling Active Directory authentication, and setting up LDAP group lookups that are used for fine-grained RBAC. The configuration snippet is as follows:

NAVIGATORMETASERVER {

# HTTPS/TLS configurations

ssl_enabled: true

ssl_server_keystore_location: "/opt/cloudera/security/jks/server.jks"

ssl_server_keystore_password: "Cloudera!"

ssl_server_keystore_keypassword: "Cloudera!"

# Active Directory authentication and group lookups

auth_backend_order: "CM_THEN_EXTERNAL"

external_auth_type: "ACTIVE_DIRECTORY"

nav_ldap_bind_dn: "prod_binduser"

nav_ldap_bind_pw: "Cloudera!"

nav_ldap_group_search_base: "ou=groups,ou=prod,ou=clusters,ou=cloudera,dc=cloudera,dc=local"

nav_ldap_url: "ldaps://cloudera.local"

nav_ldap_user_search_base: "dc=cloudera,dc=local"

nav_nt_domain: "CLOUDERA.LOCAL"

}

CDH Configurations

There are numerous security configurations necessary across the CDH stack. They run the gamut from enabling wire encryption with TLS and SASL, authentication with LDAP and SPNEGO, and spill encryption to setting up proper groups for authorization.

Service-Wide Configurations

The service-wide security configurations needed for relevant components are shown below, including comments about their purpose.

For HDFS:

HDFS {

# TLS/SSL configurations

hdfs_hadoop_ssl_enabled: true

ssl_client_truststore_location: "/opt/cloudera/security/jks/truststore.jks"

ssl_client_truststore_password: "Cloudera!"

ssl_server_keystore_location: "/opt/cloudera/security/jks/server.jks"

ssl_server_keystore_keypassword: "Cloudera!"

ssl_server_keystore_password: "Cloudera!"

# Sentry configurations

hdfs_sentry_sync_enable: true

dfs_namenode_acls_enabled: true

# Web UI authentication

hadoop_secure_web_ui: true

hadoop_http_auth_cookie_domain: "CLOUDERA.LOCAL"

# RPC encryption

hadoop_rpc_protection: "privacy"

# Users/groups for authorization controls

dfs_permissions_supergroup: "prod_cdh_admins"

hadoop_authorized_admin_groups: "prod_cdh_admins"

hadoop_authorized_admin_users: "hdfs,yarn"

hadoop_authorized_groups: "prod_cdh_users"

hadoop_authorized_users: "hdfs,yarn,mapred,hive,impala,oozie,hue,zookeeper,sentry,spark,sqoop,kms,httpfs,hbase,sqoop2,flume,solr,kafka,llama"

}

For YARN:

YARN {

# Web UI authentication

hadoop_secure_web_ui: true

# TLS/SSL configurations

ssl_server_keystore_location: "/opt/cloudera/security/jks/server.jks"

ssl_server_keystore_keypassword: "Cloudera!"

ssl_server_keystore_password: "Cloudera!"

# YARN admin authorization

yarn_admin_acl: "yarn prod_cdh_admins"

}

For Apache Hive:

HIVE {

# TLS/SSL configurations (CM/CDH 5.5+)

hiveserver2_enable_ssl: true

hiveserver2_keystore_path: "/opt/cloudera/security/jks/server.jks"

hiveserver2_keystore_password: "Cloudera!"

hiveserver2_truststore_file: "/opt/cloudera/security/jks/truststore.jks"

hiveserver2_truststore_password: "Cloudera!"

}

For Apache Impala (incubating):

IMPALA {

# TLS/SSL configurations

client_services_ssl_enabled: true

ssl_private_key: "/opt/cloudera/security/x509/server.key"

ssl_private_key_password: "Cloudera!"

ssl_server_certificate: "/opt/cloudera/security/x509/server.pem"

ssl_client_ca_certificate: "/opt/cloudera/security/x509/truststore.pem"

# LDAP authentication configurations

enable_ldap_auth: true

impala_ldap_uri: "ldaps://ben-ad-2.cloudera.local"

ldap_domain: "CLOUDERA.LOCAL"

}

For Apache Sentry:

SENTRY {

# Sentry admin authorization

sentry_service_admin_group: "hive,impala,hue,prod_sentry_admins"

}

For Apache Oozie:

OOZIE {

# Enable TLS/SSL

oozie_use_ssl: true

}

For Hue:

HUE {

# LDAP authentication

auth_backend: "desktop.auth.backend.LdapBackend"

base_dn: "dc=cloudera,dc=local"

bind_dn: "cn=prod_binduser,ou=users,ou=prod,ou=clusters,ou=cloudera,dc=cloudera,dc=local"

bind_password: "Cloudera!"

group_filter: "(&(objectClass=group)(|(sAMAccountName=prod_hue_admins)(sAMAccountName=prod_hue_users)))"

ldap_cert: "/opt/cloudera/security/x509/truststore.pem"

ldap_url: "ldaps://ben-ad-2.cloudera.local"

nt_domain: "CLOUDERA.LOCAL"

search_bind_authentication: true

use_start_tls: false

user_filter: "(&(objectClass=user)(|(memberOf=cn=prod_hue_admins,ou=groups,ou=prod,ou=clusters,ou=cloudera,dc=cloudera,dc=local)(memberOf=cn=prod_hue_users,ou=groups,ou=prod,ou=clusters,ou=cloudera,dc=cloudera,dc=local)))"

}
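The Hue group_filter and user_filter values are long LDAP filter strings in which a single misplaced parenthesis is easy to miss. As a sketch only, the hypothetical helpers below (`hue_group_filter` and `hue_user_filter`) assemble the same strings from a list of group names, assuming the groups all live under one base DN as they do in this example.

```shell
#!/bin/bash
# hue_group_filter GROUP...
# Builds an LDAP filter matching AD group objects with the given names.
hue_group_filter() {
  local clauses="" g
  for g in "$@"; do
    clauses+="(sAMAccountName=${g})"
  done
  printf '(&(objectClass=group)(|%s))\n' "${clauses}"
}

# hue_user_filter GROUPS_BASE_DN GROUP...
# Builds an LDAP filter matching users who are members of any given group.
hue_user_filter() {
  local base="$1" clauses="" g
  shift
  for g in "$@"; do
    clauses+="(memberOf=cn=${g},${base})"
  done
  printf '(&(objectClass=user)(|%s))\n' "${clauses}"
}

# Example reproducing the values used in this post:
GROUPS_DN="ou=groups,ou=prod,ou=clusters,ou=cloudera,dc=cloudera,dc=local"
hue_group_filter prod_hue_admins prod_hue_users
hue_user_filter "${GROUPS_DN}" prod_hue_admins prod_hue_users
```

Group names containing LDAP special characters such as parentheses or asterisks would need escaping, which this sketch does not attempt.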

Role Configurations

In addition to the many service-wide configurations, there are role-specific configurations needed for the various security settings.

For HDFS:

HDFS {

HTTPFS {

# TLS/SSL configurations

httpfs_use_ssl: true

httpfs_https_keystore_file: "/opt/cloudera/security/jks/server.jks"

httpfs_https_keystore_password: "Cloudera!"

httpfs_https_truststore_file: "/opt/cloudera/security/jks/truststore.jks"

httpfs_https_truststore_password: "Cloudera!"

}

}

For Impala:

IMPALA {

STATESTORE {

# TLS/SSL configurations

webserver_certificate_file: "/opt/cloudera/security/x509/server.pem"

webserver_private_key_file: "/opt/cloudera/security/x509/server.key"

webserver_private_key_password_cmd: "Cloudera!"

# Web UI authentication

webserver_htpassword_user: "impala"

webserver_htpassword_password: "Cloudera!"

}

CATALOGSERVER {

# TLS/SSL configurations

webserver_certificate_file: "/opt/cloudera/security/x509/server.pem"

webserver_private_key_file: "/opt/cloudera/security/x509/server.key"

webserver_private_key_password_cmd: "Cloudera!"

# Web UI authentication

webserver_htpassword_user: "impala"

webserver_htpassword_password: "Cloudera!"

}

IMPALAD {

# Spill file encryption

disk_spill_encryption: true

# TLS/SSL configurations

webserver_certificate_file: "/opt/cloudera/security/x509/server.pem"

webserver_private_key_file: "/opt/cloudera/security/x509/server.key"

webserver_private_key_password_cmd: "Cloudera!"

impalad_ldap_ca_certificate: "/opt/cloudera/security/x509/truststore.pem"

# Web UI authentication

webserver_htpassword_user: "impala"

webserver_htpassword_password: "Cloudera!"

}

}

For Hive:

HIVE {

HIVESERVER2 {

# TLS/SSL configurations for HiveServer2 web UI (CM/CDH 5.7+)

ssl_enabled: true

ssl_server_keystore_location: "/opt/cloudera/security/jks/server.jks"

ssl_server_keystore_password: "Cloudera!"

}

}

For Oozie:

OOZIE {

OOZIE_SERVER {

# TLS/SSL configurations

oozie_https_keystore_file: "/opt/cloudera/security/jks/server.jks"

oozie_https_keystore_password: "Cloudera!"

oozie_https_truststore_file: "/opt/cloudera/security/jks/truststore.jks"

oozie_https_truststore_password: "Cloudera!"

}

}

For Hue:

HUE {

HUE_SERVER {

# TLS/SSL configurations

ssl_enable: true

ssl_certificate: "/opt/cloudera/security/x509/server.pem"

ssl_private_key: "/opt/cloudera/security/x509/server.key"

ssl_private_key_password: "Cloudera!"

ssl_cacerts: "/opt/cloudera/security/x509/truststore.pem"

}

}

Bootstrapping A Secure Cluster

After completing all the configurations and setting up the bootstrap script, you are ready to have Cloudera Director bootstrap the secure cluster. There are two different options using the client: local mode or remote mode. In local mode, the client does all the heavy lifting of provisioning the cluster. In remote mode, the client sends the configuration to the Cloudera Director server, and the server does all the heavy lifting. The main practical difference between the two is whether you wish to further monitor and maintain the cluster using the web UI. See the docs for more information about the Cloudera Director client CLI syntax.

For our example, which interacts with a Cloudera Director server, the syntax is:

cloudera-director bootstrap-remote prod.aws.conf --lp.remote.username=admin --lp.remote.password=admin
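If you would rather not type the Director password literally on the command line, a small wrapper can prompt for it instead. This is a sketch under assumptions: the function name `bootstrap_remote` is hypothetical, and it uses only the bootstrap-remote command and the two --lp.remote options shown in this post. Note that the password is still passed as a process argument, so this keeps it out of shell history but not out of the process list.

```shell
#!/bin/bash
# bootstrap_remote CONF_FILE USERNAME
# Checks that the configuration file is readable, prompts for the Director
# password without echoing it, then runs cloudera-director in remote mode.
bootstrap_remote() {
  local conf="$1" user="$2" password
  if [ ! -r "${conf}" ]; then
    echo "cannot read configuration file: ${conf}" >&2
    return 2
  fi
  read -r -s -p "Director password for ${user}: " password
  echo >&2
  cloudera-director bootstrap-remote "${conf}" \
    --lp.remote.username="${user}" \
    --lp.remote.password="${password}"
}

# Example:
#   bootstrap_remote prod.aws.conf admin
```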

Future Work

As you can tell from this post, numerous security configurations are possible via Cloudera Director. However, there is still work to be done. Some important security components and configurations that are not available as of Cloudera Director 2.0 include:

- Provisioning encryption components (Navigator Key Trustee Server and KMS)

- Setting up Cloudera Manager web console with HTTPS

- Setting up Cloudera Manager server and agents with TLS (Level 1, 2, and 3)

- Setting up external authentication (e.g. Active Directory) for Cloudera Manager

- Enabling Data Transfer Encryption for HDFS

- Not relying on Cloudera Manager to maintain Kerberos client configuration (krb5.conf), which is better maintained by the Centrify agent

It may be possible to fill these gaps using Cloudera Director post-creation scripts, which allow for a script or scripts to be run after a cluster is fully provisioned.

Conclusion

You should now have a good understanding of what’s involved in provisioning secure EDH clusters on AWS. Along the way, you’ve seen that many of the security integrations familiar from on-premise deployments, such as integrating with Active Directory, are also valid for public-cloud deployments.