We are entering the golden age of machine learning, and it’s all about the data. As the quantity of data grows and the costs of compute and storage continue to drop, the opportunity to solve the world’s biggest problems has never been greater. Our customers already use advanced machine learning to build self-driving cars, provide better care to newborns in the hospital, stop financial crimes, and combat cyber threats. But this is clearly just the beginning.

At Cloudera, we’re constantly working to help customers push the boundaries of what’s possible with data. Today, we’re excited to introduce Cloudera Data Science Workbench, which enables fast, easy, and secure self-service data science for the enterprise. It dramatically accelerates the ability of teams to build, scale, and deploy machine learning and advanced analytics solutions using the most powerful technologies.

In this post, we’ll summarize our motivations for building Data Science Workbench, currently in private beta, and provide an overview of its capabilities.

Data Scientists: A Thousand Questions, A Thousand Tools

Over the past few years, enterprises have adopted big data solutions for a huge variety of business problems. At the same time, though, data scientists struggle to build and test new analytics projects as fast as they’d like, particularly at large scale in secure environments.

On the one hand, this is not surprising. Most analytics problems are not cookie-cutter. Data in the enterprise is complex. The questions data scientists ask often require advanced models and methods. Building a sustained competitive advantage or having a transformational impact using data requires experimentation, innovation, and hard work.

Yet it should only be as hard as the problem, and no harder. Too often, technical and organizational constraints limit the ability of data scientists to innovate. Why is this?

To begin, we need to understand who data scientists really are. According to typical big data industry marketing, a data scientist is equal parts an expert in statistics, machine learning, and software engineering, with solid business domain expertise to match. That’s a vanishingly rare combination of skills.

It turns out, though, that many organizations already employ statisticians, quantitative researchers, actuaries, and analysts. These real-world data scientists often aren’t software engineers, but are quite comfortable with mathematics and the business domain. Rather than program Apache Hadoop and Apache Spark using Java or Scala, they typically work with small-to-medium data on their desktops, increasingly relying on open data science tools like Python and R, along with each language’s vast ecosystem of libraries and frameworks for data cleansing, analysis, and predictive modeling.

This presents several challenges, including:

- Every team, user, and project may require a different language, library, framework, or algorithm (e.g., Python vs. R, or Python 2.7 vs. 3.5). Meanwhile, collaboration and compliance depend on reproducibility, which is doubly hard with so many combinations.

- What works on a single machine may not scale across a cluster, so most data scientists work on samples and extracts.

- Secured clusters are challenging for data scientists. Not many statisticians are familiar with Kerberos authentication.

The result is that, for both technical and organizational reasons, data scientists are caught in a bind. They require flexibility and simplicity to be innovative and productive, but scale and security to impact the business.

IT: Adoption vs. Compliance

This puts IT in a tough spot. Data scientists are among the most strategic users in the organization. Their insights drive the business forward. Indeed, a common motivation for building an enterprise data hub is to support advanced analytics use cases. Since the business may depend on the results data scientists provide, IT teams are under tremendous pressure to make them productive.

IT is responsible for compliance with corporate directives like security and governance. This is hard enough when every user is accessing your environment through a common interface, such as SQL. It becomes much harder when every team, user, and project uses a different set of open source tools. Managing so many environment permutations against a secured cluster is an unenviable, if not impossible, task. Caught between enterprise data security and the benefits of data science, IT is often forced to lock the data up and the data scientists out.

As a result, data science teams are cut off from one of the enterprise’s most strategic assets. They remain on their desktops or adopt “shadow IT” cloud infrastructure where they can use their preferred tools, albeit on limited data sets. This usability gap limits innovation and accuracy for the data science team and increases cost and risk from fragmented data silos for IT.

Introducing Cloudera Data Science Workbench

A year ago, Cloudera acquired a startup, Sense.io, to help dramatically improve the experience of data scientists on Cloudera’s enterprise platform for machine learning and advanced analytics. The result of this acquisition and subsequent development is today’s announcement of Cloudera Data Science Workbench.

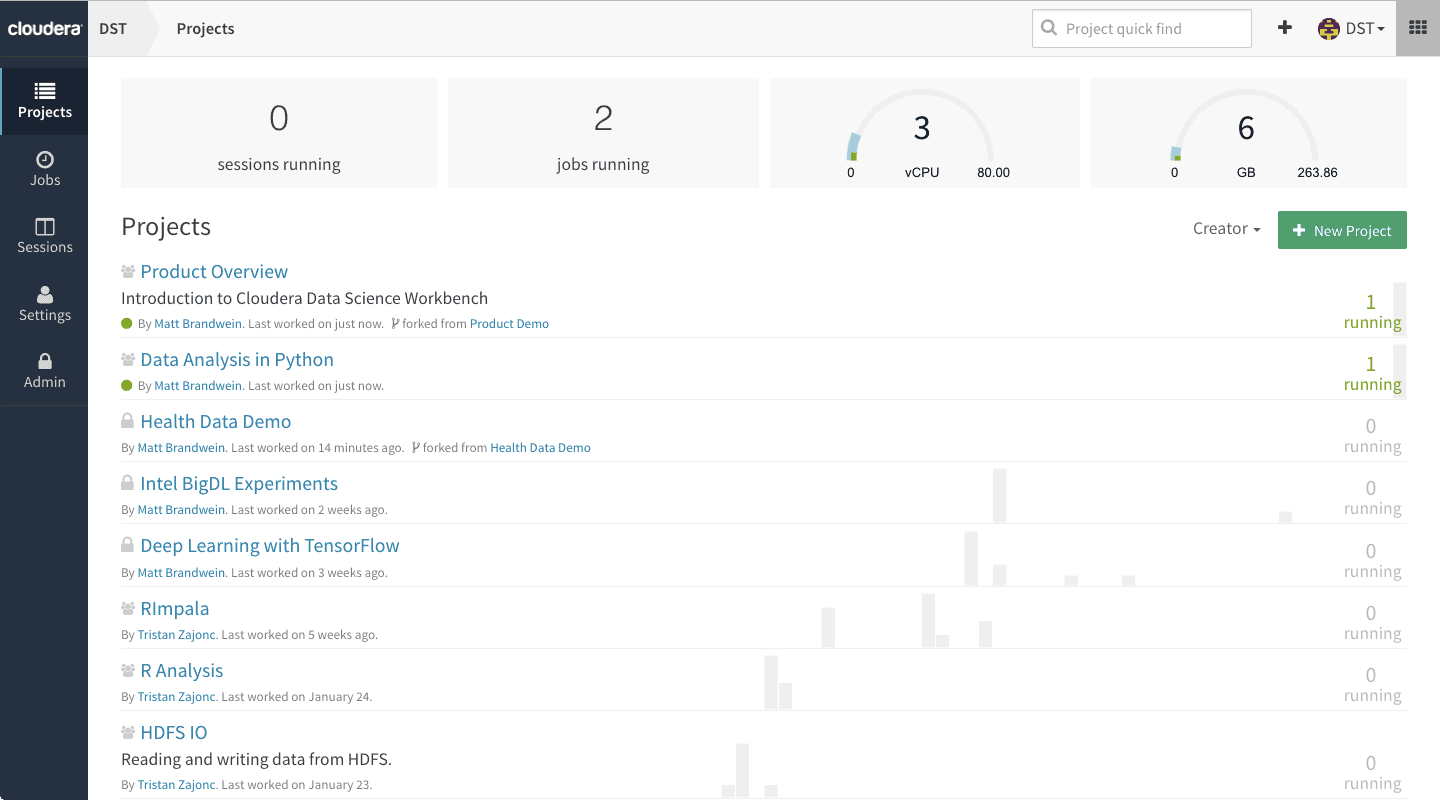

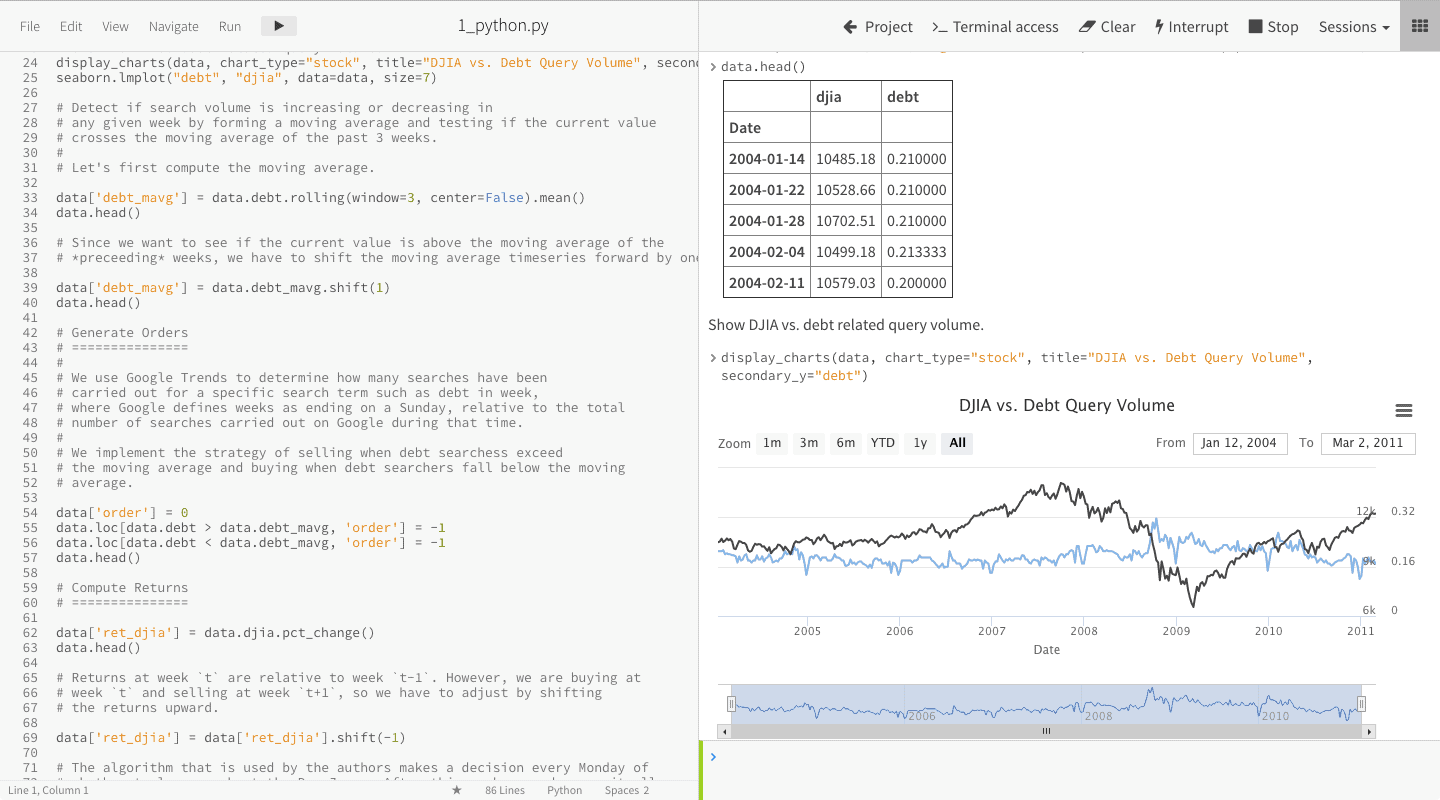

Cloudera Data Science Workbench is a web application that allows data scientists to use their favorite open source libraries and languages — including R, Python, and Scala — directly in secure environments, accelerating analytics projects from exploration to production.

Built using container technology, Cloudera Data Science Workbench offers data science teams per-project isolation and reproducibility, in addition to easier collaboration. It supports full authentication and access controls against data in the cluster, including complete, zero-effort Kerberos integration. Add it to an existing cluster, and it just works.

With Cloudera Data Science Workbench, data scientists can:

- Use R, Python, or Scala on the cluster from a web browser, with no desktop footprint.

- Install any library or framework within isolated project environments.

- Directly access data in secure clusters with Spark and Impala.

- Share insights with their team for reproducible, collaborative research.

- Automate and monitor data pipelines using built-in job scheduling.

Meanwhile, IT professionals can:

- Give their data science team the freedom to work how they want, when they want.

- Stay compliant with out-of-the-box support for full platform security, especially Kerberos.

- Run on-premises or in the cloud, wherever data is managed.

We’re thrilled to announce Cloudera Data Science Workbench and look forward to sharing more information in the coming weeks.

Learn More

To learn more about Cloudera Data Science Workbench, come see our session, “Making Self-service Data Science a Reality,” on Thursday, March 16, 2017, at Strata + Hadoop World San Jose.

Can’t make it to the conference? Sign up for the webinar series starting April 13.

I am currently installing CDSW by following some blogs on the Internet, but unfortunately I have run into some problems. Are there detailed installation steps for CDSW available? Detailed instructions would be much appreciated. Thanks, and good luck.