In modern enterprises, the exponential growth of data means organizational knowledge is distributed across multiple formats, from structured stores such as data warehouses to multi-format stores such as data lakes. Information is often duplicated, and analysis frequently requires combining data across formats, including written documents, streaming data feeds, audio and video. This makes gathering information for decision-making a challenge: employees cannot quickly and efficiently search for the information they need or collate results across formats. A “Knowledge Management System” (KMS) allows businesses to collate this information in one place, but not necessarily to search through it accurately.

Meanwhile, ChatGPT has sparked a surge of interest in using Generative AI (GenAI) to address this problem. Customizing Large Language Models (LLMs) is an effective way for businesses to put AI to work, because a customized LLM can help both the business and its employees put organizational knowledge into context.

However, training models requires large hardware resources, significant budgets and specialist teams. A number of technology vendors offer API-based services instead, but these raise doubts about security and transparency, along with concerns about ethics, user experience and data privacy.

Open LLMs, i.e. models whose weights, and in some cases code and training data, have been shared with the community, have been a game changer in enabling enterprises to adapt LLMs. However, pre-trained LLMs tend to perform poorly when searching enterprise-specific information. Organizations also want to evaluate the performance of these LLMs so they can improve them over time. These two needs have led to an ecosystem of tools for managing LLM interactions (e.g. Langchain) and evaluating LLMs (e.g. Trulens), but this ecosystem can be much more complex to manage at enterprise level.

The Solution

The Cloudera platform provides enterprise-grade machine learning and, in combination with Ollama, an open source tool for running LLMs locally, offers an easy path to building a customized KMS with the familiar ChatGPT style of querying. The resulting interface supports accurate, business-wide querying that is quick and easy to scale, with access to datasets provided through Cloudera’s platform.

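The enterprise context for this KMS can be provided through Retrieval-Augmented Generation (RAG), which contextualizes an LLM to a specific domain: relevant passages are retrieved from the organization’s own data and added to the prompt before the LLM generates its answer. This keeps the KMS’s responses specific and reduces hallucinations, i.e. fluent, confident-sounding answers that are not supported by the underlying data.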
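To make the retrieve-then-augment idea concrete, here is a minimal sketch of the retrieval and prompt-augmentation steps of RAG. It assumes a tiny in-memory list of hypothetical documents and uses bag-of-words cosine similarity as a stand-in for the embedding model and vector database (such as ChromaDB or Pinecone) a production KMS would use. The final generation step, sending the prompt to an LLM, is left out, and every name here is illustrative rather than part of any library.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents; in a real KMS these would be chunks
# loaded from the enterprise's data stores and indexed in a vector database.
DOCUMENTS = [
    "Expense claims must be submitted within 30 days of purchase.",
    "The finance team closes the books on the fifth working day of each month.",
    "Remote employees can request a laptop through the IT service portal.",
]


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the question (the 'retrieval' step)."""
    q = Counter(tokenize(question))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(question: str, docs: list[str], k: int = 1) -> str:
    """Augment the question with retrieved context before it is sent to the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs, k))
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("How many days do I have to submit an expense claim?", DOCUMENTS))
```

The instruction to answer only from the supplied context is what steers the model toward domain-specific answers; swapping `cosine` and `tokenize` for real embeddings changes the retrieval quality but not the overall shape of the pipeline.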

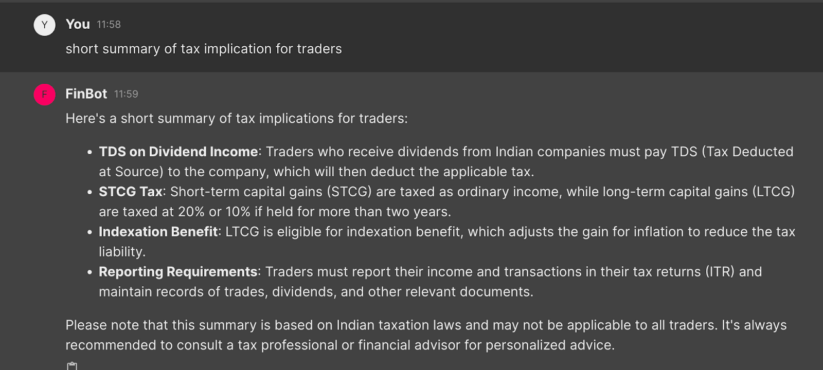

The image above shows a KMS built using Meta’s llama3 model and contextualized to finance in India. In the image, the KMS explains that its summary is based on Indian taxation laws, even though the user has not explicitly asked about India. This contextualization is possible thanks to RAG.

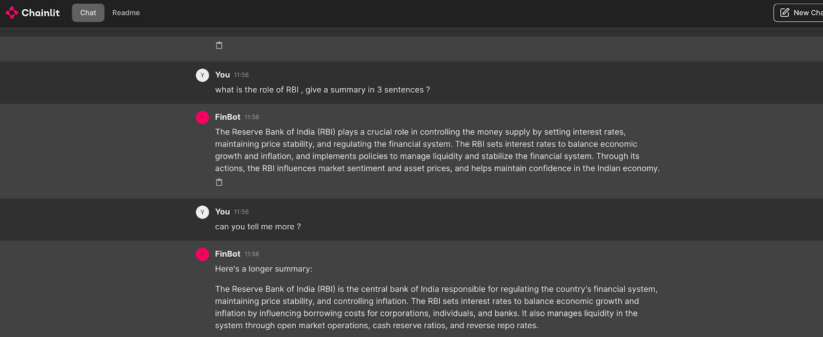

Ollama makes it easy to set up private, self-hosted LLMs with optimized performance and extensibility, thereby addressing enterprise security and privacy needs. With just a few lines of code, developers can serve a model and then integrate other frameworks from the GenAI ecosystem: Langchain and LlamaIndex for prompt framing, vector databases such as ChromaDB or Pinecone, and evaluation frameworks such as Trulens. GenAI-specific frameworks such as Chainlit also make such applications “smart” by retaining memory between questions.
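As an illustration of the “few lines of code”, the sketch below queries a locally running Ollama server through its REST endpoint `/api/generate`, using only the Python standard library. It assumes Ollama is listening on its default port (11434) and that the `llama3` model has already been pulled; the helper names `build_request` and `generate` are hypothetical.

```python
import json
import urllib.request

# Assumes a local Ollama server on its default port, with the model already
# pulled (for example via `ollama pull llama3`).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3") -> str:
    """Send the prompt to the local model and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        # With streaming disabled, Ollama returns one JSON object whose
        # "response" field holds the full completion.
        return json.loads(resp.read())["response"]
```

With the server running, `generate("Summarize our expense policy in one sentence.")` returns the model’s answer as a string. Setting `"stream": False` makes Ollama return a single JSON object instead of a stream of partial responses, which keeps the sketch short; an interactive chat UI would normally stream.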

In the picture above, the application first produces a summary and then correctly interprets the follow-up question “can you tell me more”, because it remembers what it answered earlier.
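One common way to get this kind of memory is to resend the whole conversation with every turn, so the model can resolve a vague follow-up against its earlier answer. The sketch below shows that idea, assuming Ollama’s `/api/chat` endpoint on its default local port and the `llama3` model; it is not how Chainlit itself implements memory, and `ChatSession`, `ollama_chat` and the `send` parameter are hypothetical names.

```python
import json
import urllib.request
from typing import Callable

Message = dict[str, str]

# Assumes a local Ollama server on its default port.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def ollama_chat(messages: list[Message], model: str = "llama3") -> str:
    """Send the full message history to Ollama's /api/chat endpoint and return the reply."""
    payload = {"model": model, "messages": messages, "stream": False}
    req = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.loads(resp.read())["message"]["content"]


class ChatSession:
    """Keeps the conversation so far, so follow-up questions have context."""

    def __init__(self, send: Callable[[list[Message]], str] = ollama_chat) -> None:
        self.send = send  # swappable backend, e.g. a fake in tests
        self.history: list[Message] = []

    def ask(self, question: str) -> str:
        # Record the user's turn, then send the *entire* history rather than
        # only the latest question; this is what lets "tell me more" make sense.
        self.history.append({"role": "user", "content": question})
        answer = self.send(self.history)
        self.history.append({"role": "assistant", "content": answer})
        return answer
```

A session would be used as `s = ChatSession()`, then `s.ask("Summarize the tax rules for salaried employees.")` followed by `s.ask("Can you tell me more?")`. Injecting `send` keeps the memory logic independent of the model backend. Note that the history grows without bound here; a real application would typically truncate or summarize older turns to stay within the model’s context window.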

However, the question remains: how do we evaluate the performance of our GenAI application and keep hallucinated responses under control?

Traditionally, models are evaluated by comparing their predictions with reality, also called “ground truth”. For example, if a weather model predicts rain today and it does rain, a human can confirm that the prediction matched the ground truth. For GenAI models operating in private environments and at scale, such human evaluation is impractical: free-text answers rarely have a single correct label, and there are far too many of them to review by hand.

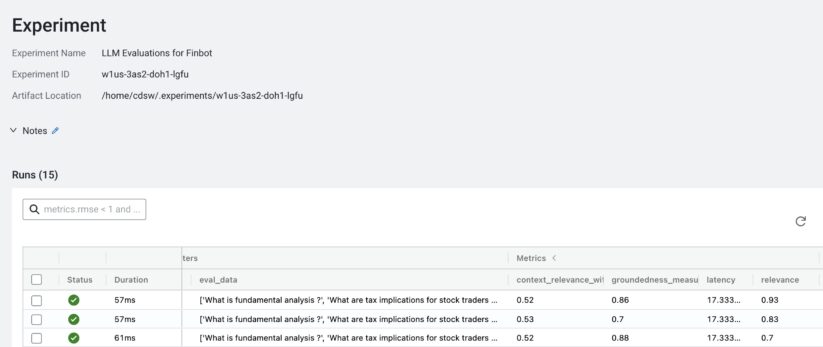

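Open source evaluation frameworks such as Trulens provide metrics for evaluating LLM applications. For each question asked, the GenAI application is scored on answer relevance (does the answer address the question?), context relevance (are the retrieved passages relevant to the question?) and groundedness (is the answer supported by the retrieved passages?). Trulens therefore gives teams a way to apply these metrics automatically, and to use the scores to evaluate and improve a KMS.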
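To show what a groundedness score measures, here is a deliberately simplified sketch. Trulens typically uses an LLM or another model as the judge; this version swaps in a crude lexical proxy, counting an answer sentence as supported when at least half of its content words also appear in the retrieved context. The function names, the stop-word list and the 0.5 threshold are all hypothetical choices for illustration.

```python
import re

# Small hypothetical stop-word list so that function words do not count as evidence.
STOP_WORDS = {"a", "an", "and", "are", "be", "by", "for", "in", "is", "it", "of", "on", "or", "the", "to"}


def content_tokens(text: str) -> set[str]:
    """Lower-cased alphanumeric tokens, minus common stop words."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOP_WORDS}


def groundedness(answer: str, context: str, threshold: float = 0.5) -> float:
    """Fraction of answer sentences whose content tokens mostly appear in the context.

    A lexical stand-in for an LLM-judged groundedness metric: each sentence is
    'supported' when at least `threshold` of its content tokens occur in the
    retrieved context. Returns a score between 0.0 and 1.0.
    """
    context_tokens = content_tokens(context)
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not sentences:
        return 0.0
    supported = 0
    for sentence in sentences:
        tokens = content_tokens(sentence)
        if tokens and len(tokens & context_tokens) / len(tokens) >= threshold:
            supported += 1
    return supported / len(sentences)
```

For the context “Expense claims must be submitted within 30 days of purchase.” and the answer “Expense claims must be submitted within 30 days. Late claims are paid double.”, the first sentence is fully supported and the second is not, so the score is 0.5. A low score flags an answer that goes beyond its retrieved evidence, which is exactly the hallucination signal the metric is meant to surface.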

The picture above shows these metrics being saved in the Cloudera platform for LLM performance evaluation.

With the Cloudera platform, businesses can build AI applications powered by the open source LLMs of their choice. The Cloudera platform also provides scalability, allowing teams to progress from proof of concept to deployment for a wide variety of users and datasets. It also democratizes AI through cross-functional user access: robust machine learning on hybrid platforms can be accessed securely by many people across the business.

Ultimately, Ollama and Cloudera provide enterprise-grade access to locally hosted LLMs, making it possible to scale GenAI applications and build robust knowledge management systems.

Find out more about Cloudera and Ollama on GitHub, or sign up for Cloudera’s limited-time “Fast Start” package.