Enterprises see embracing AI as a strategic imperative that will enable them to stay relevant in increasingly competitive markets. However, building these capabilities quickly remains difficult, because the talent and resources needed to get started on the AI journey are hard to find.

Cloudera recently signed a strategic collaboration agreement with Amazon Web Services (AWS), reinforcing our relationship and our commitment to accelerating and scaling cloud-native data management and data analytics on AWS. Our vision is to make it easier, more economical, and safer for our customers to maximize the value they get from AI. In this post, we share that vision and describe the integrations between Cloudera Data Platform (CDP) and generative AI on AWS that we are making available to our customers. Generative AI offerings on AWS include Amazon Bedrock, Amazon SageMaker JumpStart, AWS Trainium, AWS Inferentia, Amazon CodeWhisperer, AWS HealthScribe, and Generative BI in Amazon QuickSight.

Our vision: building AI with CDP on AWS

Cloudera’s AI vision, shared with AWS, is to enable customers to use the 25 exabytes of data managed in Cloudera to build differentiated AI for their specific industries. Our vision is built on two pillars:

- Build AI with Cloudera, powered by generative AI on AWS: Enable customers to build AI applications rapidly and cost-effectively by developing capabilities and integrations between Cloudera Machine Learning (CML) and generative AI on AWS.

- Build AI in Cloudera, powered by generative AI on AWS: Enable AI-powered productivity for data practitioners using Cloudera Data Platform (CDP) by building generative AI features into CDP.

Let’s look at what AWS and Cloudera are doing under each of these pillars.

Building AI with Cloudera, powered by Amazon Bedrock

We are building generative AI capabilities in Cloudera using Amazon Bedrock, a fully managed, serverless service that provides access to foundation models through an API. With these new features, customers can quickly and easily build generative AI applications in Cloudera.

CML text summarization AMP built using Amazon Bedrock

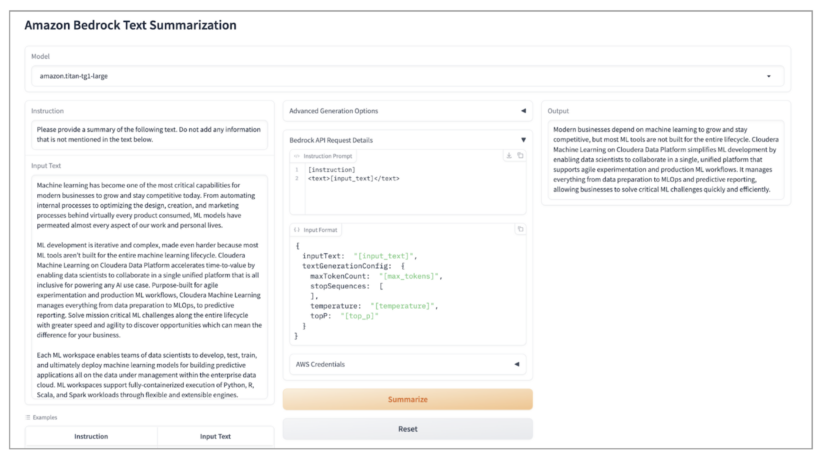

With the general availability of Amazon Bedrock, Cloudera is releasing its latest applied ML prototype (AMP) built in Cloudera Machine Learning: CML Text Summarization AMP built using Amazon Bedrock. With this AMP, customers can use foundation models available in Amazon Bedrock to summarize data managed both in Cloudera Public Cloud on AWS and in Cloudera Private Cloud on premises.

LLM Text Summarization AMP showcases how our customers can quickly build and deploy AI applications leveraging foundation models available in Amazon Bedrock to perform automated text summarization. This allows enterprises to distill lengthy documents, articles, or communications into concise and coherent summaries, facilitating quick decision-making and enhancing productivity. By harnessing the capabilities of Amazon Bedrock and our AMP, organizations can streamline their data analysis processes, extract crucial information, and gain a competitive edge.

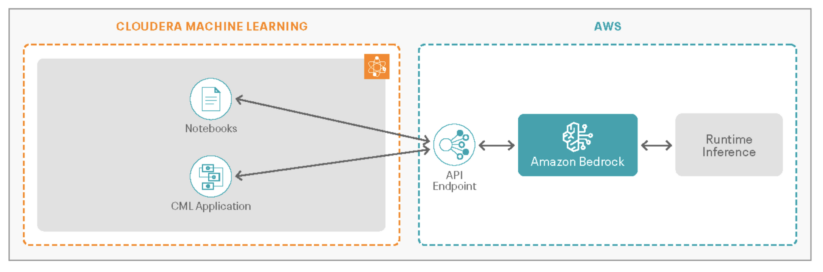

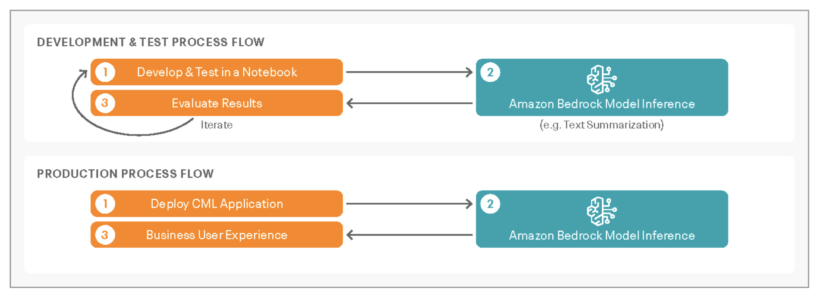

Below is a high-level architecture and process flow for Cloudera’s Text Summarization AMP built using Amazon Bedrock:

Cloudera’s research and development team evaluated Amazon Bedrock for this AMP and chose it for the following reasons:

- With Amazon Bedrock, customers can interact via a single API and select from a wide range of industry-leading foundation models.

- As a fully managed service, there is no need to set up or manage any infrastructure, allowing customers to get started on building their application immediately.

- Customers can fine-tune supported Amazon Bedrock models with their own labeled data to create an accurate, customized model for a specific problem.

- Amazon Bedrock is integrated with AWS security capabilities that customers already know, which helped them avoid a new infosec review, another major time saver.

- Customers use the AWS tools and capabilities they are familiar with to deploy reliable, secure, and scalable generative AI applications.

For this use case, we selected Amazon’s Titan Text model for its strong track record in text summarization and because it was built following responsible AI best practices.

Here’s an example of Cloudera’s AMP in action, showing the Amazon Bedrock API request code that the application generates automatically from the input text. This AMP can be used on any Cloudera system running on premises or in any public cloud, and it integrates directly with the Amazon Bedrock APIs.
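The exact request code the AMP generates isn’t reproduced in this post. As a minimal sketch, assuming the boto3 SDK, the `amazon.titan-text-express-v1` model ID, and a hypothetical prompt template and generation settings, a summarization call through Bedrock’s `InvokeModel` API might look like this:

```python
import json

# Assumed model ID for Amazon Titan Text; check which models are enabled in your account and Region.
TITAN_MODEL_ID = "amazon.titan-text-express-v1"


def build_titan_summary_request(text: str, max_tokens: int = 512) -> str:
    """Build a JSON request body in the Titan Text InvokeModel format.

    The prompt wording and generation settings are illustrative assumptions,
    not the AMP's actual template.
    """
    prompt = (
        "Summarize the following text in a few concise sentences.\n\n"
        f"Text: {text}\n\nSummary:"
    )
    body = {
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": max_tokens,
            "temperature": 0.2,  # low temperature for more consistent summaries
            "topP": 0.9,
        },
    }
    return json.dumps(body)


def summarize(text: str, region: str = "us-east-1") -> str:
    """Send the request to Amazon Bedrock and return the generated summary.

    Requires boto3 and AWS credentials with Bedrock access; boto3 is imported
    lazily so the request builder above works without it.
    """
    import boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=TITAN_MODEL_ID,
        body=build_titan_summary_request(text),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    # Titan Text returns a list of results; take the first completion.
    return payload["results"][0]["outputText"]
```

Because every Bedrock model is called through the same `invoke_model` operation, switching to a different foundation model mainly means changing the model ID and the provider-specific request body.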

CML AWS Inferentia and AWS Trainium planned integrations

The LLM Text Summarization AMP is just the beginning of the benefits our customers will gain from Cloudera and AWS generative AI product integrations. Cloudera is working on integrating AWS Inferentia and AWS Trainium–powered Amazon EC2 instances into the Cloudera Machine Learning service (CML). This will let CML customers spin up isolated compute sessions on these powerful, efficient accelerators purpose-built for AI workloads.

AWS Trainium–powered Amazon EC2 instance support will bring efficiency improvements to the training phase of machine learning models within CML. Amazon EC2 Trn1 instances deliver faster time to train while offering up to 50 percent cost-to-train savings over comparable Amazon EC2 instances.

With AWS Inferentia, CML customers can leverage custom-designed inference chips, enabling faster and more cost-effective inference for their self-hosted machine learning models. Amazon EC2 Inf2 instances deliver up to nine times higher throughput and up to 80 percent lower cost per inference than comparable Amazon EC2 instances.

Customers can also use the AWS Neuron SDK to train and deploy models on Amazon EC2 Trn1 and Amazon EC2 Inf2 instances, which are available as On-Demand Instances, Reserved Instances, and Spot Instances, or as part of a Savings Plan, in the following AWS Regions: US East (Northern Virginia), US West (Oregon), and US East (Ohio).

Building AI in Cloudera, powered by Amazon Bedrock

We are building generative AI capabilities directly into Cloudera services and applications, so customers can interact with them easily and reach outcomes faster.

CDP’s SQL code AI assistant

We couldn’t be more excited about building generative AI capabilities into CDP to power data practitioner productivity.

CDP’s SQL code AI assistant powered by Amazon Bedrock is already under development. This generative AI tool lets analysts generate and edit SQL queries using natural language statements. It can also optimize SQL queries to make them run more efficiently, explain in plain English what a SQL query is doing, and automatically find and fix errors in queries that won’t run. We are using the Claude v2 foundation model from Anthropic, available in Amazon Bedrock, for this text-to-SQL generation feature.
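The assistant’s prompts are not published here. As a minimal sketch, assuming the boto3 SDK, the `anthropic.claude-v2` model ID, Claude v2’s text-completions request format on Bedrock, and a hypothetical prompt and plain-text schema description, a text-to-SQL request might be built and sent like this:

```python
import json

# Model ID for Anthropic Claude v2 on Amazon Bedrock (assumed to be enabled in the account).
CLAUDE_MODEL_ID = "anthropic.claude-v2"


def build_text_to_sql_request(question: str, schema: str, max_tokens: int = 500) -> str:
    """Build a Claude v2 text-completions request body for text-to-SQL.

    `schema` is a hypothetical plain-text description of candidate tables;
    the prompt wording is an illustrative assumption, not CDP's template.
    """
    prompt = (
        "\n\nHuman: You are a SQL assistant. Using only the tables below, "
        "write a SQL query that answers the question, then explain its logic.\n\n"
        f"<schema>\n{schema}\n</schema>\n\n"
        f"<question>{question}</question>"
        "\n\nAssistant:"
    )
    body = {
        "prompt": prompt,  # Claude v2 expects the Human/Assistant turn format
        "max_tokens_to_sample": max_tokens,
        "temperature": 0.0,  # favor deterministic SQL output
        "stop_sequences": ["\n\nHuman:"],
    }
    return json.dumps(body)


def generate_sql(question: str, schema: str, region: str = "us-east-1") -> str:
    """Call Claude v2 through Amazon Bedrock and return the raw completion text."""
    import boto3  # requires boto3 and AWS credentials with Bedrock access

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=CLAUDE_MODEL_ID,
        body=build_text_to_sql_request(question, schema),
        contentType="application/json",
        accept="application/json",
    )
    return json.loads(response["body"].read())["completion"]
```

The same request shape could plausibly serve the optimize, explain, and fix tasks by changing only the instruction text in the prompt.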

We expect this tool alone to transform how analysts get work done, letting them spend more time creating business value and less time writing code.

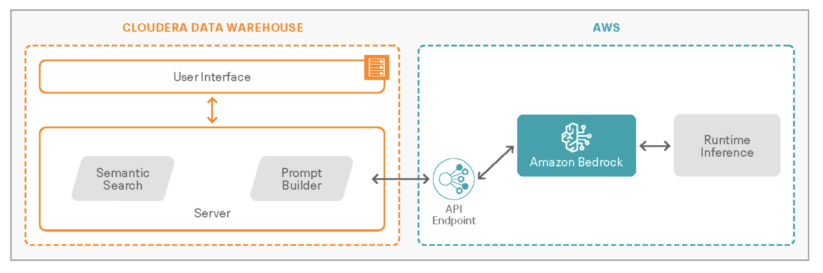

Below is the high-level architecture for CDP’s SQL code AI assistant:

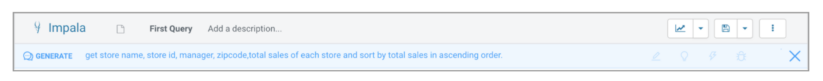

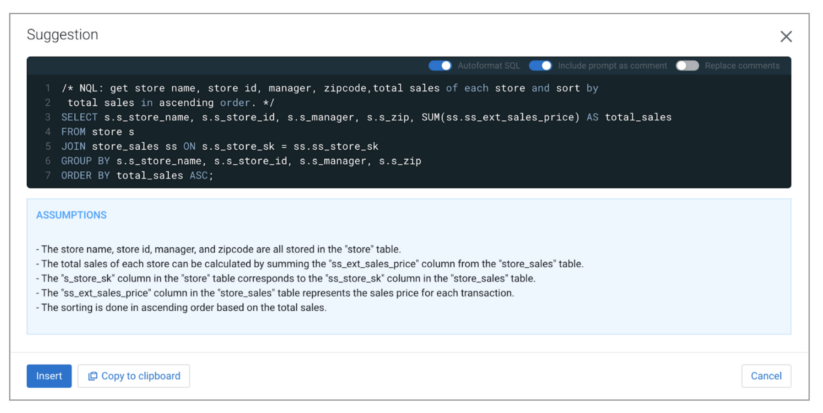

Say we want to analyze sales by store. We click the Generate button in HUE (our standard SQL editor UI), describe the data points we want in natural language, and click Go.

The AI assistant identifies the relevant tables and, within seconds, writes the SQL query along with a detailed explanation of its logic. All we have to do is review it, click Insert, and run it.
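How the assistant identifies the relevant tables is not described here. As a deliberately simple stand-in, the sketch below ranks tables in a hypothetical catalog (`CATALOG`) by keyword overlap with the request; a production assistant would more likely rely on richer metadata or embeddings, so treat this as an illustrative assumption rather than the CDP implementation.

```python
import re

# Hypothetical table catalog: table name -> column names.
CATALOG = {
    "sales": ["sale_id", "store_id", "product_id", "amount", "sale_date"],
    "stores": ["store_id", "store_name", "region"],
    "employees": ["employee_id", "name", "hire_date"],
}


def tokenize(text: str) -> set[str]:
    """Lowercase words, splitting identifiers such as store_id into 'store' and 'id'."""
    return set(re.findall(r"[a-z]+", text.lower()))


def rank_tables(question: str, catalog: dict[str, list[str]], top_k: int = 2) -> list[str]:
    """Return up to top_k tables whose name or columns share words with the question."""
    words = tokenize(question)
    scores = {}
    for table, columns in catalog.items():
        vocab = tokenize(table + " " + " ".join(columns))
        scores[table] = len(words & vocab)
    # Highest overlap first; ties broken alphabetically for stable output.
    ranked = sorted(scores, key=lambda t: (-scores[t], t))
    return [t for t in ranked[:top_k] if scores[t] > 0]


def describe_tables(tables: list[str], catalog: dict[str, list[str]]) -> str:
    """Render the selected tables as compact schema lines for a model prompt."""
    return "\n".join(f"{t}({', '.join(catalog[t])})" for t in tables)


selected = rank_tables("Show total sales by store", CATALOG)
print(describe_tables(selected, CATALOG))
```

Sending only the selected tables to the model keeps the prompt short even when the underlying catalog holds many tables.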

What’s next?

Even with these integrations in our development pipeline, we are just scratching the surface of what we will build using CDP and AWS AI services. Follow our What’s New product feed for updates as we bring our vision to life. We’re more committed than ever to making it easier, more economical, and safer for our customers to maximize the value they get from AI.

Resources to build generative AI with CDP on AWS

To learn more, check out the new generative AI features on the Cloudera Machine Learning page. Sign up for the 60-day CDP Public Cloud trial and start learning to build solutions with CDP on AWS. Learn about generative AI on AWS with AWS Training Resources and the Amazon Bedrock Workshop.