This blog post was published on Hortonworks.com before the merger with Cloudera. Some links, resources, or references may no longer be accurate.

We have already discussed how data marketplaces are emerging in today’s explosive data economy. We also shared a vision for how Blockchains are emerging as an ideal solution to some of the key issues facing data marketplaces. In this third and final part of the blog series, let us roll up our sleeves and talk about the technology behind data marketplaces. There is more than one way to skin a cat (thankfully, I am a dog person!) when it comes to designing a reference architecture for such a complex use case. So, feel free to take the best of the design below and adapt it to your vision or needs.

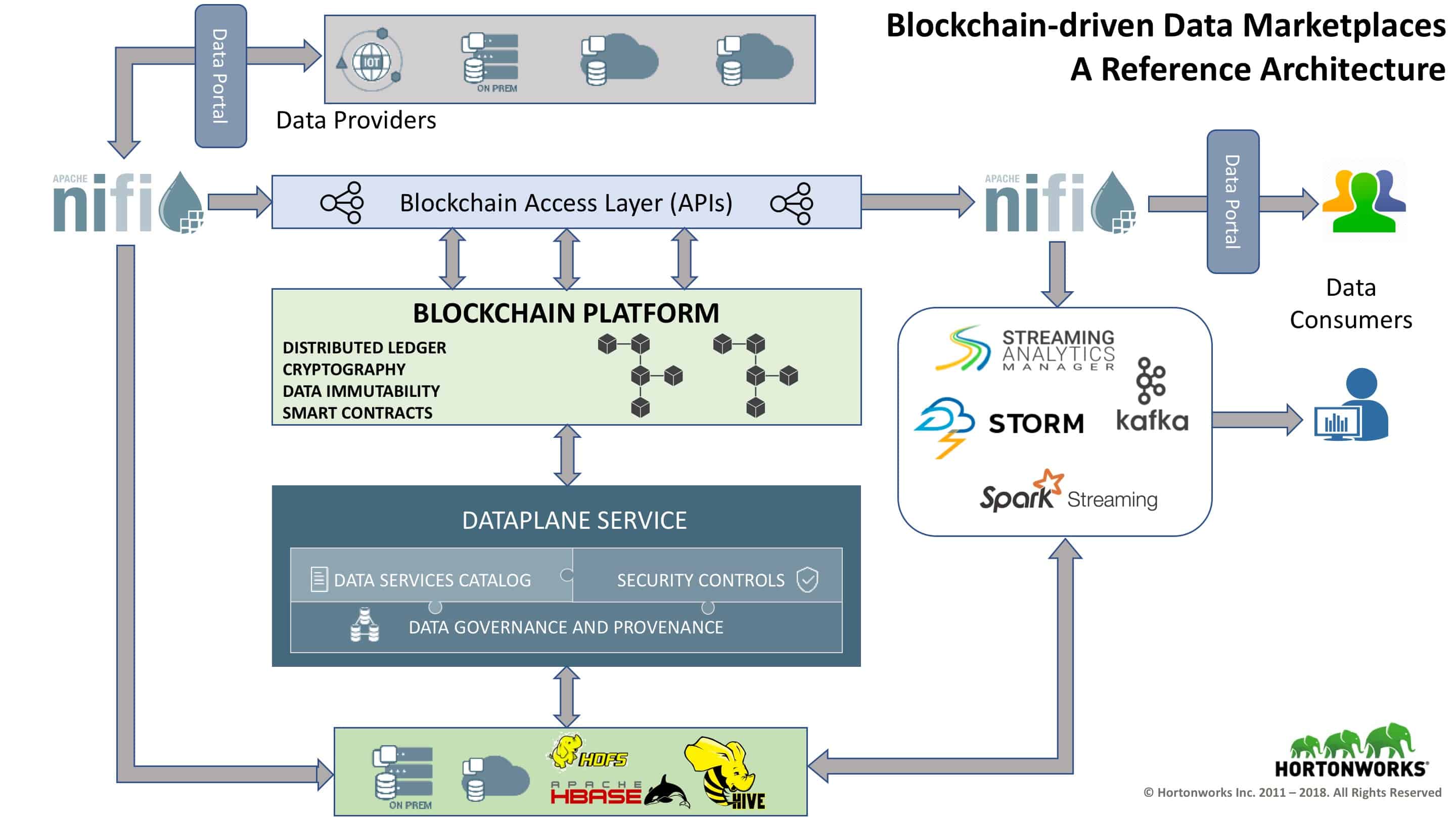

Broadly speaking, the key components of a data marketplace are as follows –

- Data Portal – This is the marketplace portal that data providers and data consumers will use to either put up data for sale or check out data for purchase. Given that user experience is going to be the most critical factor in this portal's success, it can be assumed to be a custom website or a third-party portal customized with the right set of security and access controls built in.

- Blockchain Platform – Given that we have already discussed why we need a Blockchain in this design, let us make sure that we select a platform that supports the key capabilities we expect in a Blockchain implementation: a distributed ledger, support for cryptography, immutability assurance, smart contracts, oracles and so on. Depending on the ecosystem, its participants and the sensitivity of the data, you may end up choosing either a permissioned or a permissionless blockchain.

- Data Storage – Given that we are talking about potentially petabytes of information being exchanged here, we need to consider a few things when it comes to storage: Volume, Variety, Location of data and possibly Sensitivity. Given the potential latency within Blockchains, we may not want to store all of the actual data on-chain. So, a significant amount of data may live off-chain, that is, in external storage outside the Blockchain. Big data storage options like Apache HBase and Apache HDFS, paired with SQL layers such as Apache Hive or Apache Phoenix, should be leveraged to handle such massive volumes. Of course, if the data has to reside in the cloud, you may end up using cloud storage options like Amazon S3 too. The “Variety” factor can also determine which storage option you choose, since not all data on the marketplace will be structured. A significant portion of it might be unstructured.

- Marketplace Engine – For the marketplace to function effectively, the glue between the on-chain data and the off-chain data will be a set of services that offer unified security, governance, management and visibility across all data sets. Together they form a seamless, unified data plane that both data providers and consumers can use easily. The services will include a data catalog that makes data easy to discover and search across multiple data sources. Given that we are dealing with potentially sensitive data sets, depending on the ecosystem, these data plane services also enforce unified security across data sets, ensuring that the right people have access to the right data sets within the right context. Finally, one cannot emphasize enough the need for complete end-to-end data governance in this design. We live in the world of GDPR and other such compliance needs. It is essential to know who accessed what, who changed what and when something was edited.

- Blockchain Access Layer – There are multiple protocols or mechanisms through which a Blockchain platform can be accessed. Standardizing on a common access layer built on something as familiar as APIs makes it easy for the developer community around this initiative to work together. APIs provide an abstraction that enables a fair amount of future-proofing, which is needed when you consider that Blockchain technology is still evolving. You might end up replacing the platform or upgrading to a better one 12-18 months from now. That shouldn’t impact the other components accessing the platform.
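
To make the on-chain/off-chain split and the access layer a little more concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: `LedgerClient`, `InMemoryLedger`, `publish_dataset` and `verify_dataset` are hypothetical names, the "ledger" is just an append-only list and the off-chain store is a dictionary. The idea it shows is one common pattern, not the only one: keep the bulky payload off-chain, record only its SHA-256 digest through a narrow interface, and let other components depend on that interface rather than on a specific platform.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Protocol


class LedgerClient(Protocol):
    """Hypothetical access-layer interface; a real platform would sit behind it."""

    def record(self, dataset_id: str, digest: str, owner: str) -> int: ...

    def lookup(self, dataset_id: str) -> str: ...


@dataclass
class InMemoryLedger:
    """Stand-in for a real blockchain platform: an append-only list of entries."""

    entries: List[dict] = field(default_factory=list)

    def record(self, dataset_id: str, digest: str, owner: str) -> int:
        self.entries.append(
            {"dataset_id": dataset_id, "digest": digest, "owner": owner}
        )
        return len(self.entries) - 1  # position of the new entry

    def lookup(self, dataset_id: str) -> str:
        # The most recent entry wins; earlier entries are never modified.
        for entry in reversed(self.entries):
            if entry["dataset_id"] == dataset_id:
                return entry["digest"]
        raise KeyError(dataset_id)


def publish_dataset(
    ledger: LedgerClient,
    store: Dict[str, bytes],
    dataset_id: str,
    payload: bytes,
    owner: str,
) -> str:
    """Keep the payload off-chain and record only its digest on the ledger."""
    store[dataset_id] = payload
    digest = hashlib.sha256(payload).hexdigest()
    ledger.record(dataset_id, digest, owner)
    return digest


def verify_dataset(
    ledger: LedgerClient, store: Dict[str, bytes], dataset_id: str
) -> bool:
    """Check that the off-chain payload still matches the recorded digest."""
    current = hashlib.sha256(store[dataset_id]).hexdigest()
    return current == ledger.lookup(dataset_id)


ledger = InMemoryLedger()
off_chain: Dict[str, bytes] = {}
publish_dataset(ledger, off_chain, "sensor-feed-01", b"temp,21.5\n", "provider-a")
print(verify_dataset(ledger, off_chain, "sensor-feed-01"))  # True while the payload is unchanged
```

Because `publish_dataset` and `verify_dataset` only know about the `LedgerClient` interface, swapping `InMemoryLedger` for a client of an actual platform would not require changes to the callers, which is the kind of future-proofing the access layer is meant to provide.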

Now that we have covered all the elements of the architecture, what remains is the question of how data gets in and out of it. We know that we are dealing with large volumes of data as well as potentially streaming sources such as IoT. Traditional integration tools do not meet such demands, particularly at scale. So, with that premise, here are some ideal technologies for data ingestion and advanced analytics –

- Data Ingestion – Apache NiFi is an integrated data logistics platform for automating the movement of data between disparate systems. It provides real-time control that makes it easy to manage the movement of data between any source and any destination. It is data source agnostic, supporting disparate and distributed sources of differing formats, schemas, protocols, speeds and sizes, such as machines, geolocation devices, click streams, files, social feeds, log files, videos and more. This is perfect for the data marketplace architecture, where different providers have different types of data to offer.

- Stream Processing/Analytics – In the open source world, there are several powerful options available for comprehensive stream processing and analytics. Apache Kafka is known for moving data at rates of millions of messages per second. Apache Storm, Apache Spark Streaming and other similar projects offer complex event processing and predictive analytics capabilities. Some consumers in this data marketplace architecture may just purchase the data and do the analysis offline themselves. Others may want to run detailed analytics on the data directly through the marketplace.
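
As a rough illustration of why a format-agnostic ingestion layer matters here, the sketch below routes incoming records to a storage tier based on their declared format. It is plain Python and does not use the API of NiFi or any other tool mentioned above; the function names, the set of "structured" formats and the tier names (`structured-store`, `object-store`) are all assumptions chosen for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical classification of payload formats; a real marketplace would
# define its own list and its own storage targets.
STRUCTURED_FORMATS = {"csv", "json", "avro", "parquet"}


def choose_destination(fmt: str) -> str:
    """Pick a placeholder storage tier from the declared payload format."""
    if fmt.lower() in STRUCTURED_FORMATS:
        return "structured-store"
    return "object-store"


def route(
    records: Iterable[Tuple[str, str, bytes]]
) -> Dict[str, List[Tuple[str, bytes]]]:
    """Group (provider, format, payload) records by destination tier."""
    routed: Dict[str, List[Tuple[str, bytes]]] = defaultdict(list)
    for provider, fmt, payload in records:
        routed[choose_destination(fmt)].append((provider, payload))
    return dict(routed)


incoming = [
    ("provider-a", "csv", b"id,value\n1,42\n"),
    ("provider-b", "mp4", b"\x00\x00\x00\x18ftyp"),
    ("provider-c", "JSON", b'{"id": 2}'),
]
for destination, items in route(incoming).items():
    print(destination, [provider for provider, _ in items])
```

In a real deployment this decision would more likely be expressed as configuration in the ingestion tool than as hand-written code; the sketch only shows the shape of the decision.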

Summing up, this architecture is well suited to a data marketplace powered by Blockchain. However, the details may vary based on the specific industry vertical in which it is adopted. It may also change as Blockchain itself evolves as a technology. Lastly, remember that data privacy and compliance regulations will largely determine whether such a model is adopted or held back.