Introduction

Cloudera Data Platform (CDP) unifies the technologies from Cloudera Enterprise Data Hub (CDH) and Hortonworks Data Platform (HDP). As part of that unification process, Cloudera merged the YARN Scheduler functionality from the legacy platforms, creating a Capacity Scheduler that better serves all customers. In merging this scheduler functionality, Cloudera significantly reduced the time and effort needed to migrate from CDH and HDP. Enabling this combined functionality allows customers to minimize expensive testing and manual conversion operations in the migration, and reduces the overall risk that can occur when switching from one methodology to another.

In the first part of this blog series, we described the fine-tuning of Capacity Scheduler deployed in “relative mode” in CDP Private Cloud Base to mimic some of the Fair Scheduler behavior from before the upgrade. In this part, we will discuss the fine-tuning of Capacity Scheduler in the new “weight mode” that was introduced in CDP Private Cloud Base 7.1.6. This mode will be most familiar to CDH users, and was created to help ease their transition to CDP.

As mentioned previously, Cloudera provides the fs2cs conversion utility, which makes the transition from Fair Scheduler to Capacity Scheduler much easier. With CDP Private Cloud Base 7.1.6, the default mode of conversion from Fair Scheduler to Capacity Scheduler when using the fs2cs utility is the new “weight mode.” Even with the addition of this new mode in Capacity Scheduler, the fs2cs conversion utility cannot convert every Fair Scheduler configuration into a corresponding Capacity Scheduler configuration. Some manual fine-tuning is therefore required to ensure that the resulting scheduling configuration fits your organization’s internal resource allocation goals and workload SLAs. In this blog we will discuss the fine-tuning of Capacity Scheduler in weight mode to mimic some of the Fair Scheduler behavior from prior to the CDP upgrade.

Weight mode in Capacity Scheduler in CDP

Prior to CDP Private Cloud Base 7.1.6, Capacity Scheduler had two modes of defining queue resource allocation: using percentage values (relative mode), or using absolute resource vectors (absolute mode). Both of these modes are rigid and enforce strict rules on resource allocation when creating queues. For example, for each parent queue the child queue capacities must add up to 100% (in relative mode) or to the exact resource value defined in the parent (in absolute mode). So when adding a new queue under a parent, the capacities of some or all of the existing child queues might have to be adjusted so that the sum does not exceed the total capacity of the parent.
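The relative mode rule described above can be illustrated with a minimal Python sketch. This is not Capacity Scheduler code; the function name and the queue names (`root.dev`, `root.prod`, `root.adhoc`) are hypothetical and exist only to show why adding a sibling queue forces a rebalance.

```python
def capacities_are_valid(children: dict[str, float], tolerance: float = 1e-6) -> bool:
    """Return True when sibling capacities (in percent) add up to 100."""
    return abs(sum(children.values()) - 100.0) <= tolerance


# Hypothetical sibling queues under one parent, in relative mode.
siblings = {"root.dev": 50.0, "root.prod": 50.0}
valid_before = capacities_are_valid(siblings)

# Adding a queue without touching its siblings breaks the 100% rule.
siblings["root.adhoc"] = 10.0
valid_after_add = capacities_are_valid(siblings)

# The existing queues must be adjusted to make room for the new one.
siblings.update({"root.dev": 45.0, "root.prod": 45.0})
valid_after_rebalance = capacities_are_valid(siblings)

print(valid_before, valid_after_add, valid_after_rebalance)
```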

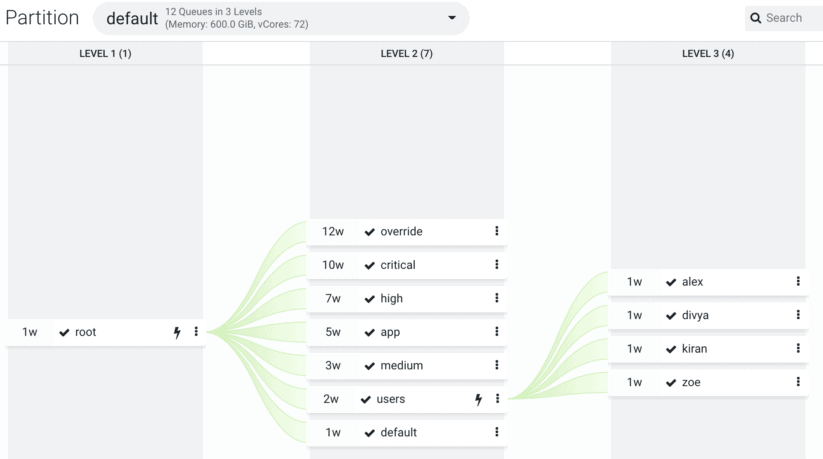

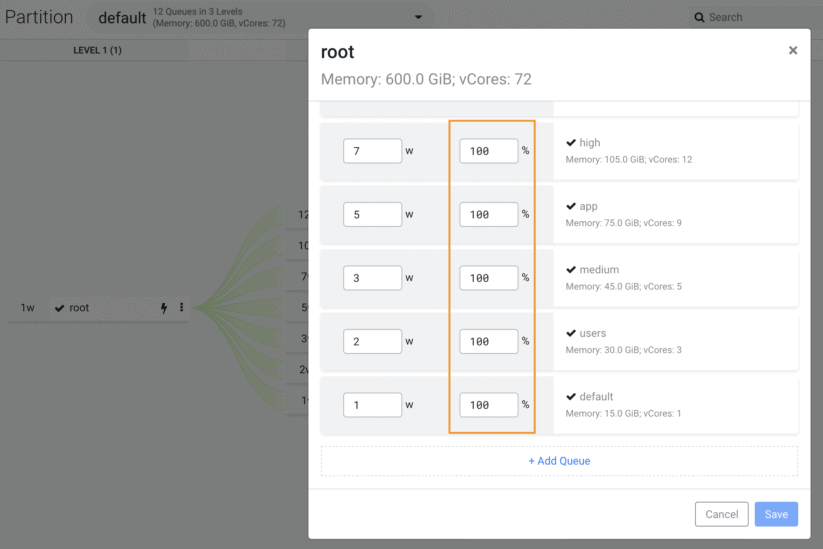

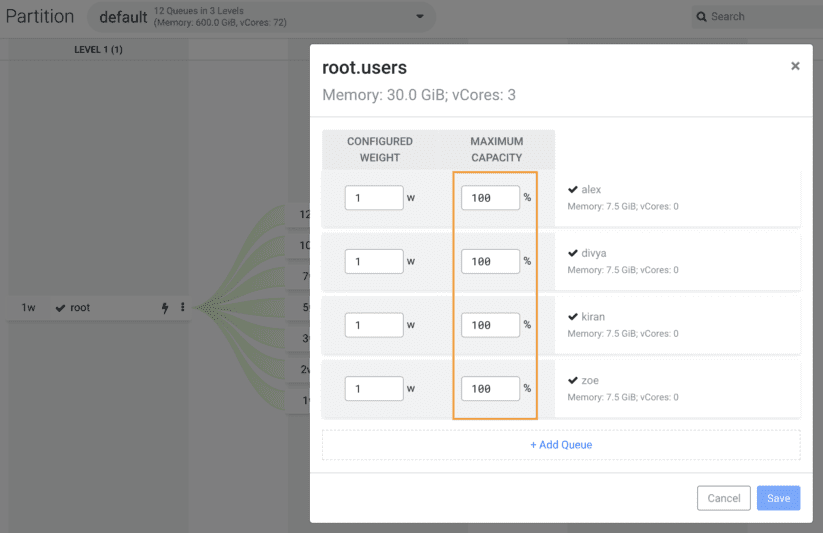

CDP Private Cloud Base 7.1.6 added a new weight mode for resource allocation to queues. In this mode, the capacity value for each queue is specified as a fraction of the total resources available within a parent queue, called a weight. This new mode of resource allocation in Capacity Scheduler is very similar to the weighted queues in CDH Fair Scheduler. Since weights determine the resources relative to the sibling queues under a parent, any number of extra queues can be added freely under a parent without having to adjust any capacities. Each time a new queue is added, the capacities of the existing sibling queues change automatically. Note that the maximum capacity for each queue in weight mode is still defined as a percentage value. This is required to provide maximum elasticity in the Capacity Scheduler while adding new queues.
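As a rough illustration of how weights translate into shares, the sketch below computes each queue's share of its parent from the sibling weights, then adds a queue to show the existing shares shifting on their own. The function and the queue names are hypothetical, and the rounding is an assumption made for readability rather than the scheduler's internal behavior.

```python
def effective_capacities(weights: dict[str, float]) -> dict[str, float]:
    """Share of the parent (in percent) that each sibling receives from its weight."""
    total = sum(weights.values())
    return {queue: round(weight / total * 100, 2) for queue, weight in weights.items()}


# Hypothetical sibling queues and weights under one parent.
weights = {"root.default": 1.0, "root.etl": 3.0}
before = effective_capacities(weights)

# A new sibling is added; no existing weight has to be edited.
weights["root.adhoc"] = 1.0
after = effective_capacities(weights)

print(before)
print(after)
```

With these sample weights, `root.etl` moves from 75% to 60% of the parent once the new queue appears, without any change to its configured weight.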

Example: using the fs2cs conversion utility in weight mode

You can use the fs2cs conversion utility to automatically convert certain Fair Scheduler configurations to Capacity Scheduler configurations as a part of the Upgrade Cluster Wizard in Cloudera Manager. Refer to the official Cloudera documentation for usage details of fs2cs. This tool can also be used to generate a Capacity Scheduler configuration during a CDH to CDP side-car migration. Starting with CDP Private Cloud Base 7.1.6, a Capacity Scheduler configuration created during an upgrade using the fs2cs conversion tool defaults to weight mode. Relative mode remains the default configuration for any new clusters built directly on CDP.

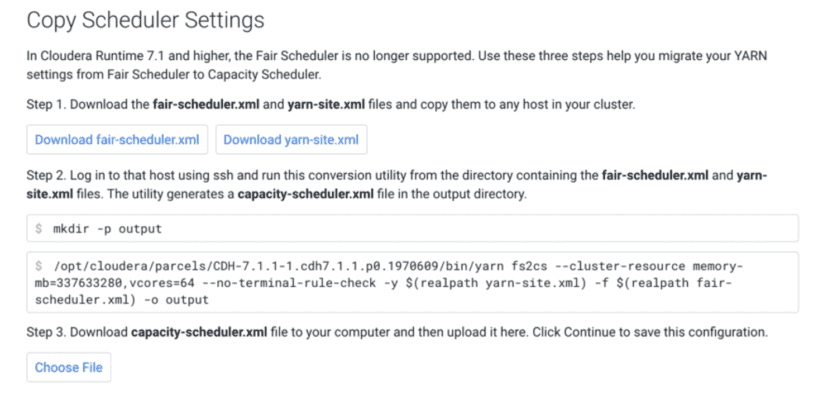

- Download the Fair Scheduler configuration files from the Cloudera Manager.

- Use the fs2cs conversion utility to auto convert the structure of resource pools.

- Upload the generated Capacity Scheduler configuration files to save the configuration in Cloudera Manager.

Fair Scheduler configurations from CDH: before upgrade

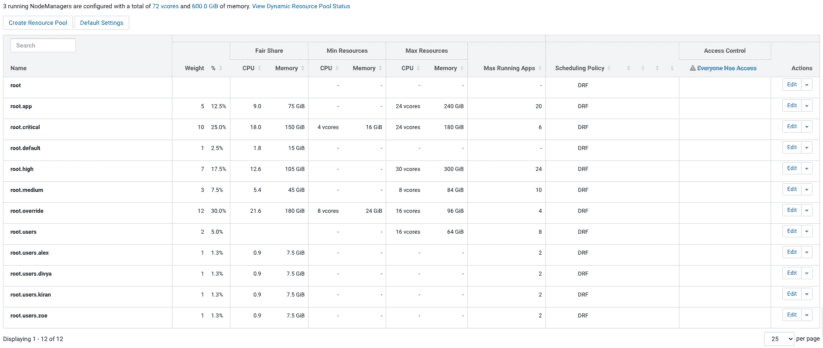

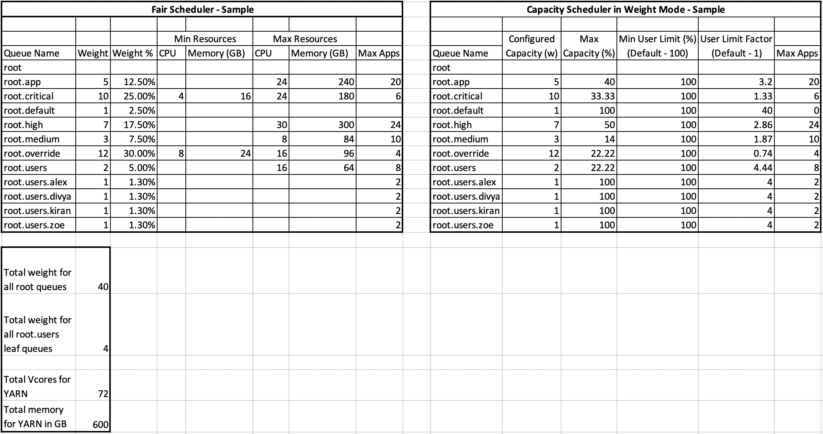

As an example, let us consider the following dynamic resource pools configuration defined for Fair Scheduler in CDH.

Capacity Scheduler in weight mode from CDP: after upgrade

As part of the upgrade to CDP, the fs2cs conversion utility converts the Fair Scheduler configurations to the corresponding weight mode in Capacity Scheduler. The following screenshots show the resulting weight mode Capacity Scheduler configurations in YARN Queue Manager.

Observations (in weight mode for CS)

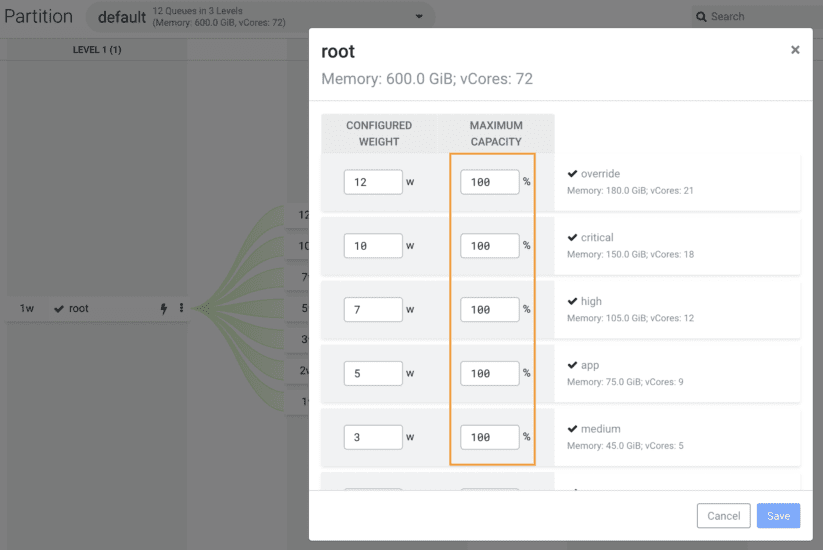

- All queues have their max capacity configured as 100% after the conversion using the fs2cs conversion utility.

- In FS, some of the queues had max resources configured using absolute values and those were hard limits.

- So hard limits for queues based on “max resources” that were present in FS in CDH need some fine-tuning after migration to CS in CDP.

- In CS the maximum capacity is based on the parent’s queue, while in FS “max resources” is configured as a global limit.

- All queues have the user limit factor set to 1 (which is the default) after the conversion using the fs2cs conversion utility.

- Setting this value to 1 means that one user can only use up to the configured capacity of the queue.

- If a single user needs to go beyond the configured capacity and utilize up to its maximum capacity, then this value needs to be adjusted.

- In CDH, many applications would have been using a single tenant (user ID) to run their jobs on the cluster. In those cases, the default setting of 1 for user limit factor could mean that jobs go into a pending state even if the cluster has available capacity.

- One option to disable the user-limit-factor is to set its value to -1.

- Ordering policies within a specific queue.

- Capacity Scheduler supports two job ordering policies within a specific queue: FIFO (first in, first out) or fair. Ordering policies are configured on a per-queue basis. The default ordering policy in Capacity Scheduler is FIFO for any newly added queue. For queues converted using fs2cs, however, the ordering policy is set to “fair” if DRF was being used as the scheduling policy in the corresponding Fair Scheduler configuration. To switch the ordering policy for a queue to fair, edit the queue properties in YARN Queue Manager and update the value for “yarn.scheduler.capacity.<queue-path>.ordering-policy”.

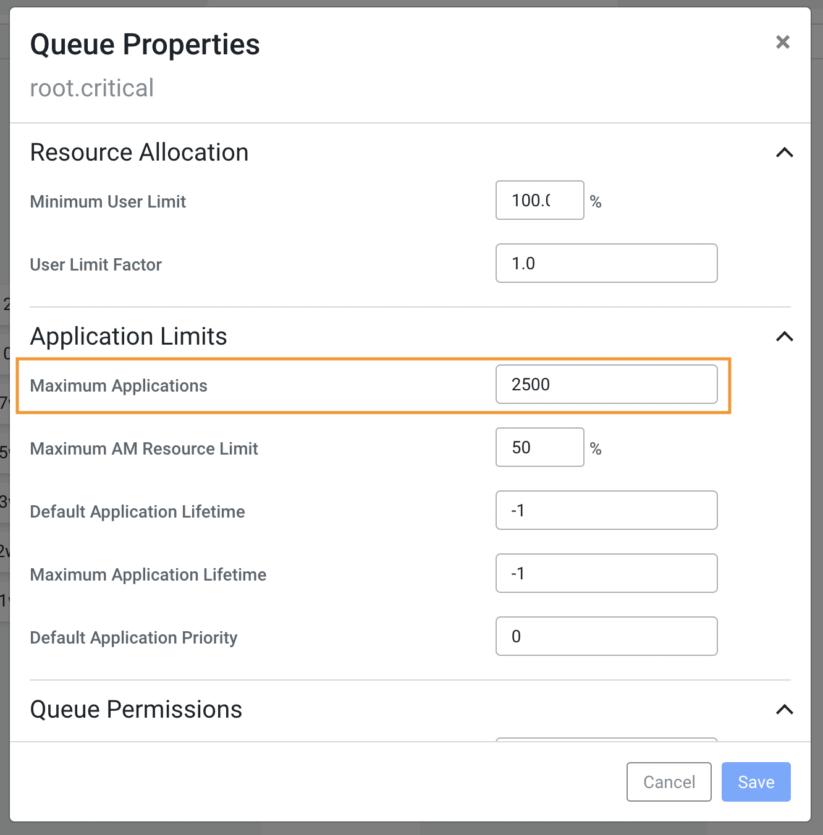

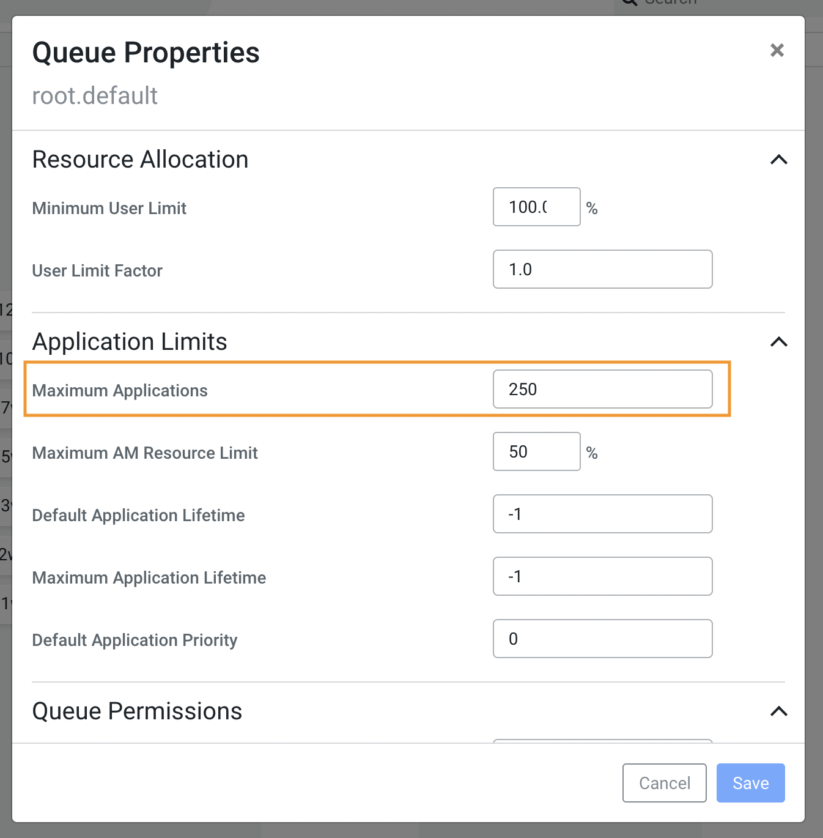

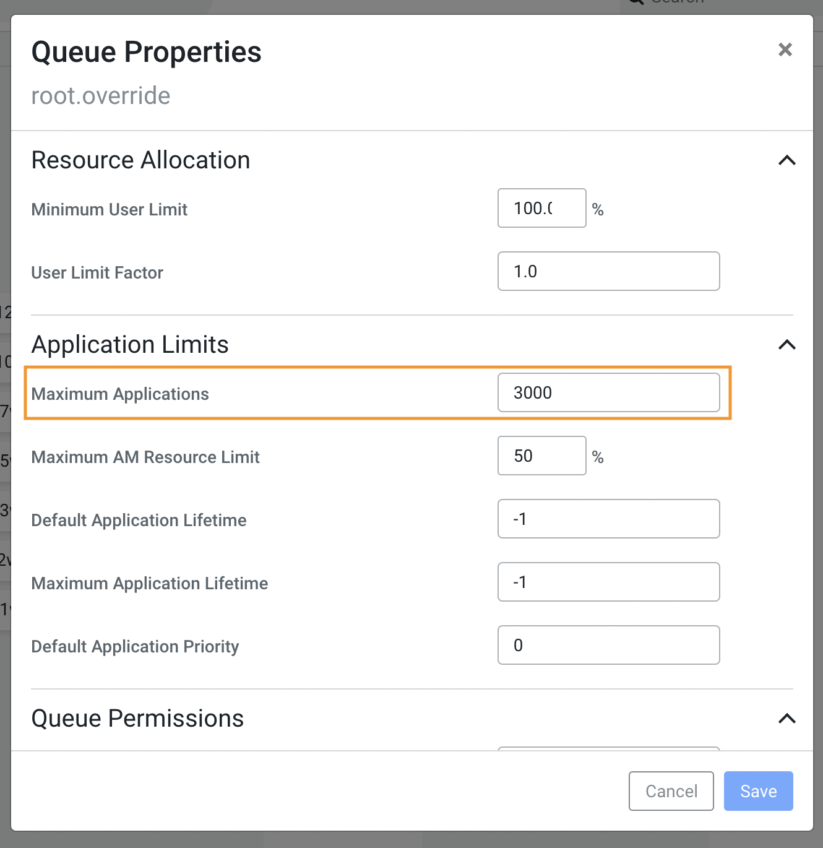

- With the introduction of dynamic queues in CS in CDP Private Cloud Base 7.1.6, the default “maximum applications” in a dynamic queue is 10,000. Rather than carrying over the “max running apps” value from CDH, this value in the YARN Queue Manager UI is now calculated based on the weight of the queue. In the example shown above, all the sibling queue weights under the root queue add up to 40, so the factor for max applications for each queue is 10,000 / 40 = 250. Each queue is therefore given 250 x (weight of the queue) as its value for max applications. For the queue override, the weight is 12, so max applications is set to 250 x 12 = 3000. This change in behavior while migrating from FS to CS is currently under investigation.
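The max applications arithmetic described above can be reproduced with a short Python sketch. Only the override queue's weight of 12 and the sibling total of 40 come from the example; the other queue names and weights are hypothetical placeholders chosen to reach that total, and the rounding is an assumption.

```python
DYNAMIC_QUEUE_MAX_APPS = 10_000  # default "maximum applications" for a dynamic queue


def max_applications(weights: dict[str, float], limit: int = DYNAMIC_QUEUE_MAX_APPS) -> dict[str, int]:
    """Split the application limit across siblings in proportion to their weights."""
    total = sum(weights.values())
    return {queue: round(limit * weight / total) for queue, weight in weights.items()}


# root.override (weight 12) is from the example; the other two queues are
# hypothetical and only serve to bring the sibling total to 40.
weights = {"root.override": 12, "root.queue_a": 8, "root.queue_b": 20}
apps = max_applications(weights)

print(apps["root.override"])
```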

Manual fine-tuning (in weight mode for CS)

As mentioned previously, there is no one-to-one mapping for all the Fair Scheduler and Capacity Scheduler configurations. A few manual configuration changes should be made in CDP Capacity Scheduler to simulate some of the CDH Fair Scheduler settings. For example, we can fine-tune the maximum capacity in the CDP Capacity Scheduler to set up some of the hard limits previously defined in CDH Fair Scheduler using max resources. Also, in CDH there was no option to restrict resource consumption by individual users within a queue; one user could consume all of the resources within a queue. To reproduce that behavior, the user limit factor in CDP Capacity Scheduler must be tuned to allow individual users to go beyond the configured capacity and up to the maximum capacity of the queue.

To meet these requirements, we need to convert the weight specified for each queue into its corresponding configured capacity. This is calculated as the weight of the queue expressed as a percentage of the sum of the weights of all its sibling queues. The resulting configured capacity is then used to calculate the user limit factor of the queue.

We can use the calculations listed below as a starting point to fine-tune the CDP Capacity Scheduler in weight mode. This creates an environment with similar capacity limits for users that were previously defined in Fair Scheduler.

The calculations are done using the settings defined in YARN as well as in CDH Fair Scheduler.

- Configured capacity

- Configured capacity = Round([{configured weight for this queue in Capacity Scheduler} / {total of all weights for all sibling queues} * 100]) to two digits

- Max capacity – If maximum resources are defined as absolute values for vCores and memory in Fair Scheduler

- Max capacity = Round(max([{max vCores configured for this queue in Fair Scheduler} / {total vCores for YARN} * 100], [{max memory configured for this queue in Fair Scheduler} / {total memory for YARN} * 100])) to two digits

- Max capacity – If maximum resources are defined as a common percentage for vCores and memory in Fair Scheduler

- Max capacity = common percentage defined for max resources for this queue in Fair Scheduler

- Max capacity – If maximum resources are defined as separate percentages for vCores and memory in Fair Scheduler

- Max capacity = Max(percentage defined for max resources for vCores in Fair Scheduler for this queue, percentage defined for max resources for memory in Fair Scheduler for this queue)

- User limit factor

- User limit factor = Round({calculated max capacity for this queue in Capacity Scheduler} / {configured capacity for this queue in Capacity Scheduler}) to two digits

- Maximum applications

- For each queue, copy over any defined value in Fair Scheduler for “max running apps” to the corresponding Capacity Scheduler property, “dynamic queue maximum applications”
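The calculations listed above could be scripted along the following lines. This is a minimal sketch, not a Cloudera tool: the function names are hypothetical, and the cluster totals and Fair Scheduler limits in the usage example are made-up numbers. Only the weight of 12 out of a sibling total of 40 is taken from the earlier example.

```python
def configured_capacity(weight: float, sibling_weight_total: float) -> float:
    """Weight of the queue as a percentage of all sibling weights, to two digits."""
    return round(weight / sibling_weight_total * 100, 2)


def max_capacity_from_absolute(max_vcores: float, max_memory_mb: float,
                               yarn_vcores: float, yarn_memory_mb: float) -> float:
    """Max capacity when Fair Scheduler max resources are absolute values."""
    vcores_pct = max_vcores / yarn_vcores * 100
    memory_pct = max_memory_mb / yarn_memory_mb * 100
    return round(max(vcores_pct, memory_pct), 2)


def max_capacity_from_percentages(vcores_pct: float, memory_pct: float) -> float:
    """Max capacity when Fair Scheduler max resources are separate percentages."""
    return max(vcores_pct, memory_pct)


def user_limit_factor(max_capacity: float, capacity: float) -> float:
    """Factor that lets a single user grow from configured to maximum capacity."""
    return round(max_capacity / capacity, 2)


# Weight 12 of 40 comes from the example; everything below is hypothetical:
# a cluster with 100 vCores and 409600 MB for YARN, and a Fair Scheduler
# queue limited to 50 vCores and 163840 MB.
capacity = configured_capacity(weight=12, sibling_weight_total=40)
max_cap = max_capacity_from_absolute(50, 163840, 100, 409600)
ulf = user_limit_factor(max_cap, capacity)

print(capacity, max_cap, ulf)
```

With these sample inputs the queue gets a configured capacity of 30%, a maximum capacity of 50%, and a user limit factor of 1.67. Because maximum capacity in Capacity Scheduler is relative to the parent queue, the values for nested queues may need further adjustment.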

Fine-tuned scheduler comparison (in weight mode for CS)

After upgrading to CDP, we can use the calculations suggested above along with the configurations previously present in CDH Fair Scheduler to fine-tune the CDP Capacity Scheduler. This fine-tuning effort simulates some of the previous CDH Fair Scheduler settings within the CDP Capacity Scheduler. If such a simulation is not required for your environment and use cases, you can skip this fine-tuning exercise. In that case, an upgraded CDP environment with a new Capacity Scheduler is a good opportunity to revisit and adjust some of the YARN queue resource allocations from scratch.

A side-by-side comparison of the CDH Fair Scheduler and fine-tuned CDP Capacity Scheduler used in the above example is provided below.

Summary

Capacity Scheduler is the default and supported YARN scheduler in CDP Private Cloud Base. When upgrading or migrating from CDH to CDP Private Cloud Base, the migration from Fair Scheduler to Capacity Scheduler is done automatically using the fs2cs conversion utility. From CDP Private Cloud Base 7.1.6 onwards, the fs2cs conversion utility converts into the new weight mode in Capacity Scheduler. In prior versions of CDP Private Cloud Base, the fs2cs utility converts to the relative mode in Capacity Scheduler. Because of the feature differences between Fair Scheduler and Capacity Scheduler, a direct one-to-one mapping of all configurations is not possible. In this blog, we presented some calculations that can be used as a starting point for the manual fine-tuning required to match CDP Capacity Scheduler settings in weight mode to some of the previously set thresholds in the Fair Scheduler.

To learn more about Capacity Scheduler in CDP, here are some helpful resources:

Comparison of Fair Scheduler with Capacity Scheduler
