HDFS is a core part of any Hadoop deployment, so to ensure that data is protected on the Hadoop platform, security needs to be built into the HDFS layer. HDFS is protected using Kerberos for authentication, and using POSIX-style permissions, HDFS ACLs, or Apache Ranger for authorization.

Apache Ranger (https://hwxjojo.staging.wpengine.com/hadoop/ranger/) is a centralized security administration solution for Hadoop that enables administrators to create and enforce security policies for HDFS and other Hadoop platform components.

How do Ranger policies work for HDFS?

To ensure security in HDP environments, we recommend that all customers implement Kerberos, Apache Knox, and Apache Ranger.

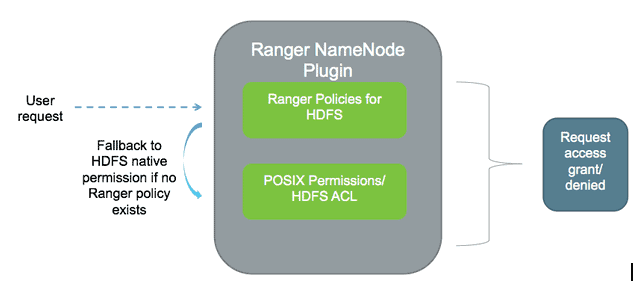

Apache Ranger offers a federated authorization model for HDFS. The Ranger plugin for HDFS first checks Ranger policies, and if a policy grants the requested access, the user is allowed. If no Ranger policy grants access, Ranger falls back to the native HDFS permission model (POSIX permissions or HDFS ACLs). This federated model applies to the HDFS and YARN services in Ranger.

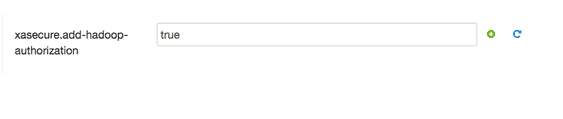

For other services, such as Hive or HBase, Ranger operates as the sole authorizer, which means only Ranger policies are in effect. For HDFS, the fallback behavior is controlled by a property under Ambari → Ranger → HDFS config → Advanced ranger-hdfs-security.

The federated authorization model lets customers safely introduce Ranger into an existing cluster without affecting jobs that rely on POSIX permissions. We recommend enabling this option as the default model for all deployments.
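To make the decision order concrete, here is a minimal Python sketch of the federated model: Ranger policies are checked first, and only if none grants the access does the native owner/group/other check decide. The classes and field names (RangerPolicy, Inode, fallback_enabled) are hypothetical simplifications, not Ranger's plugin API. HDFS ACLs, deny policies and the HDFS superuser are deliberately not modeled. In the HDP releases we are aware of, the fallback toggle corresponds to the xasecure.add-hadoop-authorization property in ranger-hdfs-security, but verify this against your version.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Maps an HDFS action to its POSIX permission bit (r=4, w=2, x=1).
ACTION_BITS = {"read": 4, "write": 2, "execute": 1}


@dataclass
class RangerPolicy:
    """Hypothetical, simplified allow policy: a recursive path plus per-user actions."""
    path: str
    allowed: dict[str, set[str]] = field(default_factory=dict)

    def grants(self, user: str, path: str, action: str) -> bool:
        base = self.path.rstrip("/")
        covers = path == base or path.startswith(base + "/")
        return covers and action in self.allowed.get(user, set())


@dataclass
class Inode:
    owner: str
    group: str
    mode: int  # POSIX permission bits, e.g. 0o755


def posix_allows(inode: Inode, user: str, groups: set[str], action: str) -> bool:
    """Native owner/group/other check (HDFS ACLs and the superuser are not modeled)."""
    bit = ACTION_BITS[action]
    if user == inode.owner:
        shift = 6
    elif inode.group in groups:
        shift = 3
    else:
        shift = 0
    return bool((inode.mode >> shift) & bit)


def authorize(policies, inode, path, user, groups, action, fallback_enabled=True):
    """Return (allowed, enforcer): Ranger policies first, then optional native fallback."""
    if any(p.grants(user, path, action) for p in policies):
        return True, "ranger-acl"  # a Ranger policy granted access
    if fallback_enabled:
        return posix_allows(inode, user, groups, action), "hadoop-acl"
    return False, "ranger-acl"  # Ranger is the sole authorizer and nothing matched


policies = [RangerPolicy("/apps/hive", {"hive": {"read", "write", "execute"}})]
warehouse = Inode(owner="hdfs", group="hdfs", mode=0o000)
print(authorize(policies, warehouse, "/apps/hive/warehouse", "hive", {"hadoop"}, "write"))
# (True, 'ranger-acl')
print(authorize(policies, warehouse, "/apps/hive/warehouse", "bob", {"users"}, "read"))
# (False, 'hadoop-acl')
```

Turning fallback_enabled off reproduces the "sole authorizer" behavior described for Hive and HBase: anything without a matching Ranger policy is denied.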

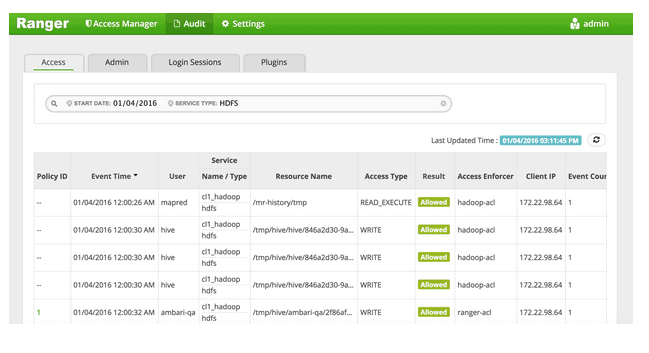

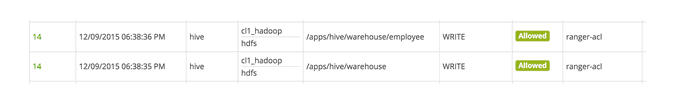

Ranger’s user interface makes it easy for administrators to find which permission (a Ranger policy or a native HDFS permission) gave a user access. Navigate to Ranger → Audit and check the Access Enforcer column of the audit data. If the value is “ranger-acl”, a Ranger policy granted access to the user. If the value is “hadoop-acl”, access was granted by a native HDFS ACL or POSIX permission.
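If audit records are exported for offline review, the same check can be scripted. The minimal sketch below assumes each record is a dict with hypothetical keys (user, resource, enforcer, result); these are illustrative and not Ranger's exact export schema. It counts events per Access Enforcer and lists the paths that were allowed through native permissions, which are the paths still relying on the fallback.

```python
from collections import Counter


def enforcer_summary(events):
    """Count audit events by Access Enforcer and list paths allowed via native permissions."""
    events = list(events)  # accept any iterable; it is traversed twice below
    counts = Counter(e["enforcer"].lower() for e in events)
    native_paths = sorted({
        e["resource"]
        for e in events
        if e["enforcer"].lower() == "hadoop-acl" and e["result"] == "allowed"
    })
    return counts, native_paths


sample = [  # illustrative records, not real audit data
    {"user": "hive", "resource": "/apps/hive/warehouse", "enforcer": "ranger-acl", "result": "allowed"},
    {"user": "bob", "resource": "/tmp/bob", "enforcer": "hadoop-acl", "result": "allowed"},
]
counts, native = enforcer_summary(sample)
print(dict(counts), native)
# {'ranger-acl': 1, 'hadoop-acl': 1} ['/tmp/bob']
```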

Best practices for HDFS authorization

A federated authorization model can make it harder for security administrators to plan a security model for HDFS, because access may be granted either by a Ranger policy or by native HDFS permissions.

After Apache Ranger and Hadoop have been installed, we recommend that administrators take the following steps:

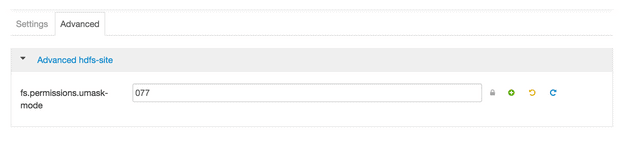

- Change HDFS umask to 077

- Identify directories that can be managed by Ranger policies

- Identify directories that need to be managed by HDFS native permissions

- Enable a Ranger policy that audits all records

Here are the steps again in detail.

- Change the HDFS umask from 022 to 077. This prevents newly created files and folders from being accessed by anyone other than the owner.

Administrators can change this setting, the fs.permissions.umask-mode property, via Ambari.

The default umask in HDFS is 022, which grants all users read permission on newly created files and folders (and traverse permission on folders). You can see the effect by running the following command on a freshly installed cluster:

$ hdfs dfs -ls /apps

Found 3 items

drwxrwxrwx - falcon hdfs 0 2015-11-30 08:02 /apps/falcon

drwxr-xr-x - hdfs hdfs 0 2015-11-30 07:56 /apps/hbase

drwxr-xr-x - hdfs hdfs 0 2015-11-30 08:01 /apps/hive
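The umask arithmetic behind this step can be checked with a short Python sketch. It assumes the standard HDFS behavior of starting new directories at 0777 and new files at 0666 and clearing the umask bits; the helper names are illustrative only.

```python
def effective_mode(umask: int, is_dir: bool) -> int:
    """Start from 0o777 (directories) or 0o666 (files) and clear the umask bits."""
    base = 0o777 if is_dir else 0o666
    return base & ~umask & 0o777


def symbolic(mode: int, is_dir: bool) -> str:
    """Render permission bits the way hdfs dfs -ls prints them, e.g. drwxr-xr-x."""
    out = "d" if is_dir else "-"
    for shift in (6, 3, 0):  # owner, group, other
        bits = (mode >> shift) & 0o7
        out += "".join(ch if bits & mask else "-" for ch, mask in zip("rwx", (4, 2, 1)))
    return out


for umask in (0o022, 0o077):
    print(f"umask {umask:03o}: dir {symbolic(effective_mode(umask, True), True)}, "
          f"file {symbolic(effective_mode(umask, False), False)}")
# umask 022: dir drwxr-xr-x, file -rw-r--r--
# umask 077: dir drwx------, file -rw-------
```

With 022, group members and other users keep read access to new data. With 077, only the owner does, so any wider access has to come from an explicit Ranger policy or an explicit chmod.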

- Identify the directories that can be managed by Ranger policies

We recommend that permissions for application data folders (/apps/hive, /apps/hbase), as well as any custom data folders, be managed through Apache Ranger. The HDFS native permissions for these directories need to be restrictive. You can enforce this by changing their permissions with chmod (and ownership with chown, which requires the HDFS superuser).

Example:

$ hdfs dfs -chmod -R 000 /apps/hive

$ hdfs dfs -chown -R hdfs:hdfs /apps/hive

$ hdfs dfs -ls /apps/hive

Found 1 items

d--------- - hdfs hdfs 0 2015-11-30 08:01 /apps/hive/warehouse

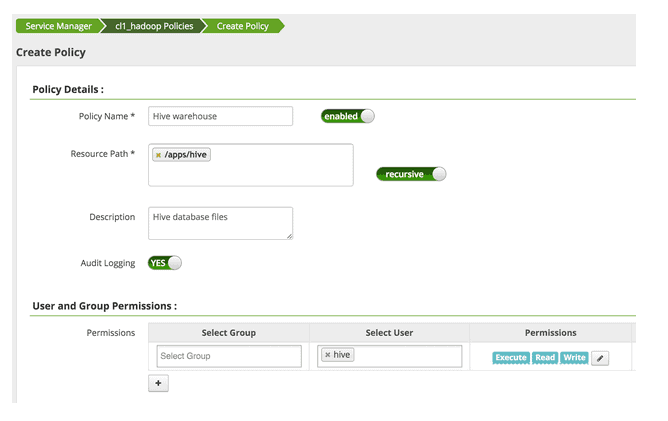

Then navigate to Ranger admin and give explicit permission to users as needed. For example:

Administrators should follow the same process for other data folders as well. You can validate whether your changes are in effect by doing the following:

- Connect to HiveServer2 using beeline

- Create a table, for example: create table employee (id int, name string, ssn string);

- Go to Ranger and check the HDFS access audit. The Access Enforcer should be “ranger-acl”.

- Identify directories that need to be managed by HDFS native permissions

We recommend letting HDFS manage the permissions for the /tmp and /user folders. These are used by applications and jobs that create user-level directories.

Here, you should also set the permissions of the existing home directories under /user to 700, as in the example below:

$ hdfs dfs -ls /user

Found 4 items

drwxrwx--- - ambari-qa hdfs 0 2015-11-30 07:56 /user/ambari-qa

drwxr-xr-x - hcat hdfs 0 2015-11-30 08:01 /user/hcat

drwxr-xr-x - hive hdfs 0 2015-11-30 08:01 /user/hive

drwxrwxr-x - oozie hdfs 0 2015-11-30 08:02 /user/oozie

$ hdfs dfs -chmod -R 700 '/user/*'

$ hdfs dfs -ls /user

Found 4 items

drwx------ - ambari-qa hdfs 0 2015-11-30 07:56 /user/ambari-qa

drwx------ - hcat hdfs 0 2015-11-30 08:01 /user/hcat

drwx------ - hive hdfs 0 2015-11-30 08:01 /user/hive

drwx------ - oozie hdfs 0 2015-11-30 08:02 /user/oozie
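To double-check a listing like the one above, a small Python sketch can flag entries that still grant any access to group members or other users. It assumes hdfs dfs -ls prints the eight columns seen in this post (permissions, replication, owner, group, size, date, time, path); the function name is hypothetical. HDFS ACL entries, marked by a trailing "+" on the permission string, are not inspected, so an ACL could still grant access that this check does not see.

```python
from __future__ import annotations


def group_or_other_accessible(ls_output: str) -> list[str]:
    """Return paths whose permission bits grant any access to group or others."""
    flagged = []
    for line in ls_output.splitlines():
        fields = line.split(None, 7)  # keep spaces inside the path column intact
        if len(fields) < 8 or fields[0][0] not in "d-":
            continue  # skip "Found N items" and blank lines
        perms = fields[0][:10]  # drop a trailing "+" that marks an HDFS ACL
        if perms[4:10] != "------":
            flagged.append(fields[7])
    return flagged


before = """Found 2 items
drwxrwx--- - ambari-qa hdfs 0 2015-11-30 07:56 /user/ambari-qa
drwx------ - hive hdfs 0 2015-11-30 08:01 /user/hive"""
print(group_or_other_accessible(before))
# ['/user/ambari-qa']
```

Feeding it the output captured before and after the chmod should show the flagged list shrinking to empty.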

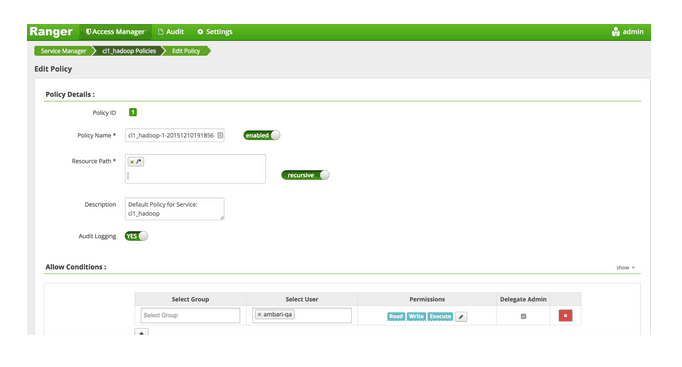

- Ensure auditing for all HDFS data.

Auditing in Apache Ranger is controlled per policy. When Apache Ranger is installed through Ambari, a default policy is created that covers all files and directories in HDFS and has auditing enabled. This policy is also used by the Ambari smoke-test user “ambari-qa” to verify the HDFS service through Ambari. If administrators disable this default policy, they need to create a similar policy that enables auditing across all files and folders.
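The consequence of disabling the default policy can be illustrated with a coverage check: a path is audited only if some enabled policy with auditing turned on matches it. The sketch below uses hypothetical policy dicts (the keys name, paths, recursive, enabled and audit are made up for illustration and are not Ranger's policy export format), and fnmatch stands in for Ranger's own wildcard matching.

```python
from __future__ import annotations

from fnmatch import fnmatch


def _matches(path: str, resource: str, recursive: bool) -> bool:
    # Wildcard match (fnmatch's "*" also crosses "/"), or a recursive directory match.
    if fnmatch(path, resource):
        return True
    base = resource.rstrip("/")
    return recursive and (path == base or path.startswith(base + "/"))


def audit_covered(path: str, policies: list[dict]) -> bool:
    """True if at least one enabled, audit-enabled policy covers the path."""
    return any(
        p["enabled"] and p["audit"]
        and any(_matches(path, r, p["recursive"]) for r in p["paths"])
        for p in policies
    )


default = {"name": "default-all-paths", "paths": ["/*"], "recursive": True,
           "enabled": True, "audit": True}
hive = {"name": "hive-warehouse", "paths": ["/apps/hive"], "recursive": True,
        "enabled": True, "audit": False}
print(audit_covered("/apps/hive/warehouse", [default, hive]))                     # True
print(audit_covered("/apps/hive/warehouse", [dict(default, enabled=False), hive]))  # False
```

Once the catch-all policy is disabled, nothing covers the warehouse path with auditing on, which is why a replacement policy is needed.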

Summary

Securing HDFS files through permissions is a starting point for securing Hadoop. Ranger provides a centralized interface for managing security policies for HDFS. We recommend that security administrators use a combination of HDFS native permissions and Ranger policies to cover all potential use cases. By following the best practices outlined in this blog, administrators can simplify the access control policies for administrative and user directories and files in HDFS.
