This blog post was published on Hortonworks.com before the merger with Cloudera. Some links, resources, or references may no longer be accurate.

Apache Hive is the de facto standard for SQL in Hadoop, with more enterprises relying on this open source project than on any alternative. Stinger.next, a community-based effort, is delivering true enterprise SQL at Hadoop scale and speed.

With Hive’s prominence in the enterprise, security within Hive has come under greater focus from enterprise users, who now expect fine-grained access control and auditing within Hive. Apache Ranger provides centralized security administration for Hadoop and enables fine-grained access control and deep auditing for Apache components such as Hive, HBase, HDFS, Storm and Knox.

This post covers best practices for configuring security for Hive with Apache Ranger, focusing on data analysts accessing Hive in three scenarios:

- Data analysts accessing only HiveServer2, with limited access to HDFS files

- Data analysts accessing both HiveServer2 and HDFS files through Pig/MR jobs

- Data analysts accessing the Hive CLI

For each scenario, we illustrate how to configure Hive and Ranger and discuss how security is handled. You can use either deployment: the HDP 2.2 Sandbox or an HDP 2.2 cluster installed with Apache Ambari. Note the prerequisites below.

Prerequisites


- HDP 2.2 Sandbox: If you are using the HDP 2.2 Sandbox, disable the global “allow policies” in Ranger before configuring any security policies. The sandbox enables these global “allow” policies by default so that users can access Hive and HDFS without any permission checks.

OR

- HDP 2.2 cluster: Ranger Admin and the Ranger plugins for HDFS and Hive installed manually (see the Ranger installation documentation).
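Why the global allow policy has to be disabled can be seen in a toy model. The sketch below assumes allow-only policy evaluation, where access is granted as soon as any enabled policy matches; the `Policy` class, the `is_granted` helper and the policy names are hypothetical and are not Ranger's data model, API or policy engine. Under that assumption, a catch-all policy matches every request, so narrower policies never get a chance to restrict anything.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Policy:
    """Hypothetical allow-only policy (not Ranger's real data model)."""
    name: str
    resource: str        # "*" matches every resource
    users: frozenset     # a "*" entry matches every user
    enabled: bool = True


def is_granted(policies, user, resource):
    """Assumed allow-only semantics: grant if ANY enabled policy matches."""
    for p in policies:
        if not p.enabled:
            continue
        resource_ok = p.resource in ("*", resource)
        user_ok = "*" in p.users or user in p.users
        if resource_ok and user_ok:
            return True
    return False


# Hypothetical policies mirroring the sandbox situation described above.
global_allow = Policy("global-allow", "*", frozenset({"*"}))
marketing_only = Policy("marketing-customer-details",
                        "xademo.customer_details", frozenset({"mktg1"}))

if __name__ == "__main__":
    # Global allow enabled: an unrelated user ("it1") is still let in.
    print(is_granted([global_allow, marketing_only], "it1",
                     "xademo.customer_details"))  # True
    # Global allow disabled: only the specific policy applies.
    disabled = replace(global_allow, enabled=False)
    print(is_granted([disabled, marketing_only], "it1",
                     "xademo.customer_details"))  # False
```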

Scenario 1 – HiveServer2 access with limited HDFS access

In this scenario, analysts access data through HiveServer2, while specific administrators may also have direct access to HDFS files.

Column-level access control over Hive data is a key requirement. You can enable column-level access control with the following steps:

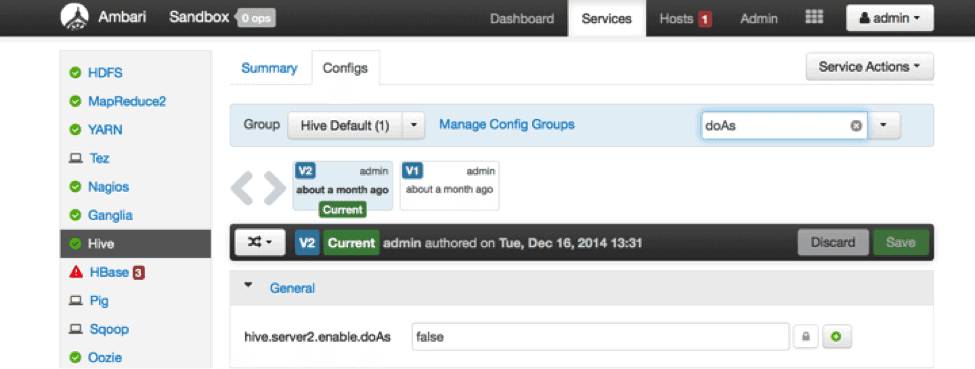

Step 1. Hive Configuration

In Ambari → Hive → Config, ensure that hive.server2.enable.doAs is set to “false”. This means HiveServer2 runs MR jobs and accesses HDFS as the “hive” user rather than as the connected analyst. HDFS permissions on Hive-related files can therefore be granted to the “hive” user only, and no analyst can access those HDFS files directly.
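The effect of hive.server2.enable.doAs on which identity reaches HDFS can be captured in a tiny model. This is a hypothetical helper written for illustration, not Hive code, and it assumes HiveServer2 runs under a service account named “hive”, as in this post.

```python
# Assumption: HiveServer2 runs under the "hive" service account.
HIVE_SERVICE_USER = "hive"


def hdfs_user(session_user: str, do_as: bool) -> str:
    """Hypothetical helper: the user HDFS sees for a HiveServer2 session.

    do_as mirrors hive.server2.enable.doAs:
      True  -> MR jobs and HDFS access run as the connected end user
      False -> MR jobs and HDFS access run as the HiveServer2 service user
    """
    return session_user if do_as else HIVE_SERVICE_USER


if __name__ == "__main__":
    # doAs=false (Scenario 1): HDFS only ever sees the service user.
    print(hdfs_user("mktg1", do_as=False))  # hive
    # doAs=true (Scenario 2): HDFS sees the analyst's own identity.
    print(hdfs_user("mktg1", do_as=True))   # mktg1
```

With doAs disabled, HDFS permissions only need to admit one identity, which is why the analysts can be kept away from the files entirely.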

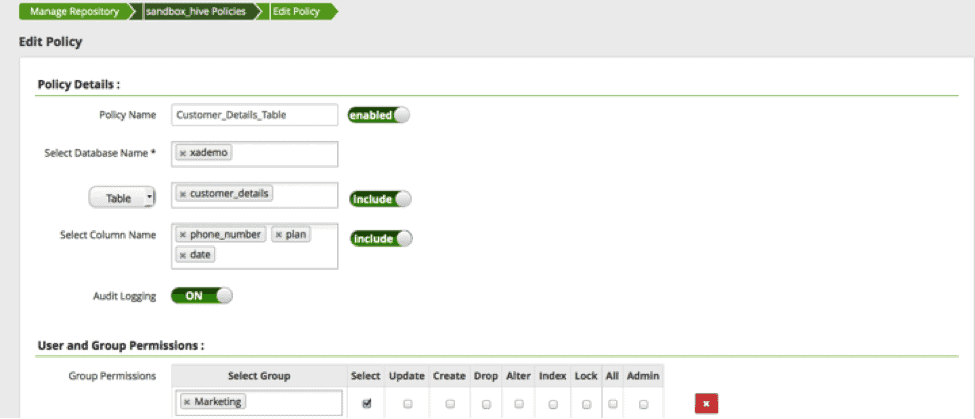

Step 2. Ranger configuration

With Ranger installed, you can configure a column-level policy as shown below.

In this example, the marketing group has access only to the “phone number”, “plan” and “date” columns in the “customer_details” table.
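Conceptually, a column-level grant like this one admits a query only when the user belongs to a permitted group and every column the query reads is in the allowed set. The sketch below models that check; the `ColumnPolicy` class, the `is_allowed` helper, the database name `xademo` (taken from the Scenario 3 session), the group membership of “mktg1” and the column name `address` are all illustrative assumptions, and this is not Ranger's policy engine or API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ColumnPolicy:
    """Hypothetical, simplified stand-in for a Ranger Hive column policy."""
    database: str
    table: str
    columns: frozenset
    groups: frozenset
    accesses: frozenset = field(default_factory=lambda: frozenset({"select"}))


# Policy from the example: marketing may read only three columns.
MARKETING_POLICY = ColumnPolicy(
    database="xademo",
    table="customer_details",
    columns=frozenset({"phone_number", "plan", "date"}),
    groups=frozenset({"marketing"}),
)


def is_allowed(policy, user_groups, database, table, columns, access="select"):
    """Allow only if the policy covers the table, the access type, one of
    the user's groups, and every requested column."""
    return (
        policy.database == database
        and policy.table == table
        and access in policy.accesses
        and bool(policy.groups & set(user_groups))
        and set(columns) <= policy.columns
    )


if __name__ == "__main__":
    mktg1_groups = {"marketing"}  # assumption: mktg1 is in "marketing"
    print(is_allowed(MARKETING_POLICY, mktg1_groups, "xademo",
                     "customer_details", ["phone_number", "plan"]))     # True
    print(is_allowed(MARKETING_POLICY, mktg1_groups, "xademo",
                     "customer_details", ["phone_number", "address"]))  # False
```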

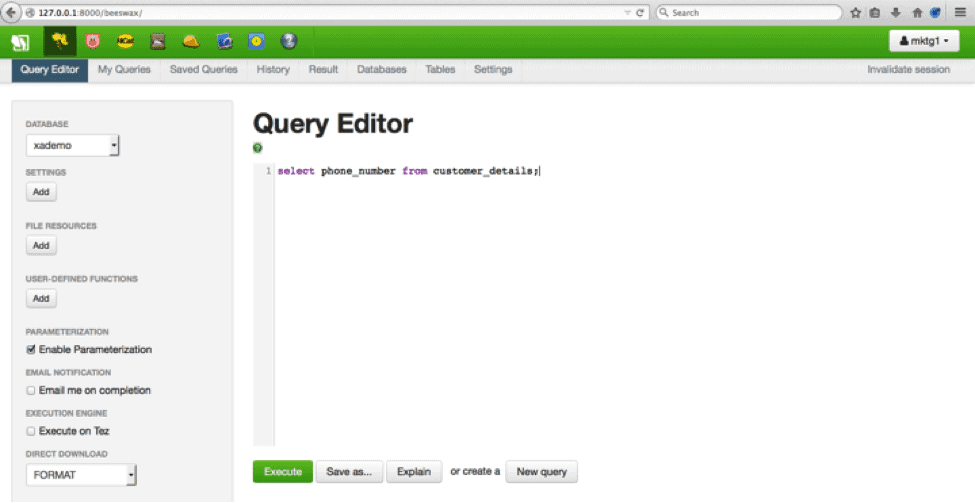

Step 3. Run a query

You can use Hue or Beeline to run a query against this table. In this sandbox example, we ran the query as the user “mktg1”.

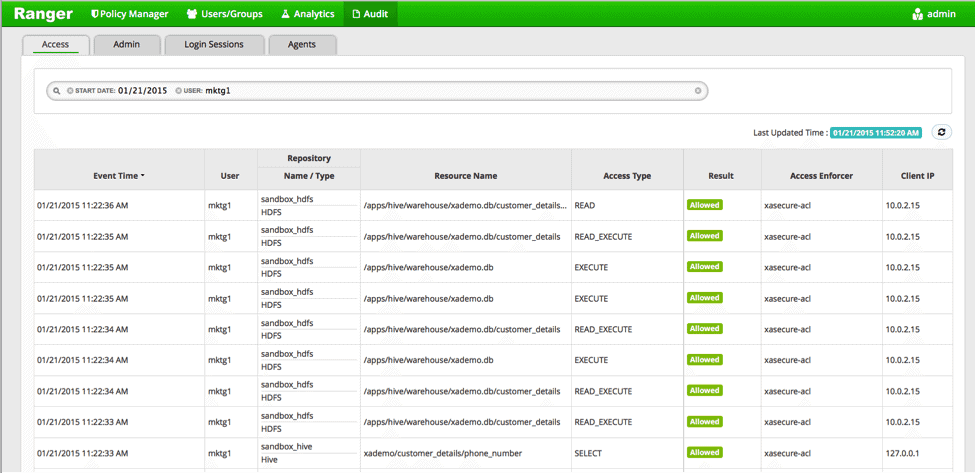

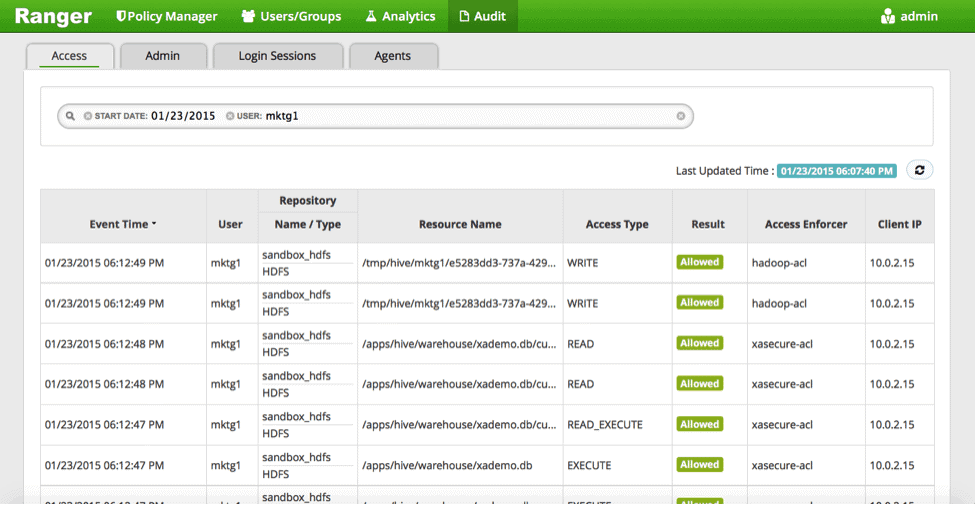

After the query succeeds, check the audit logs in Ranger.

The audit entries show the query running in Hive as the original user (“mktg1” in this case), while the related HDFS access is performed as the “hive” user.

With this setup, the only way data analysts can view data is through Hive, and access in Hive is controlled by Ranger at the column level. Administrators who need HDFS-level access can be granted permissions through Ranger HDFS policies or HDFS ACLs.

Scenario 2 – HiveServer2 and HDFS access

In this scenario, analysts use HiveServer2 to run SQL queries and also run Pig/MR jobs directly against HDFS data. In this case, permissions must be enabled in both Hive and HDFS.

As in the previous scenario, ensure that Hive and Ranger are installed and Ambari is up and running. If you are using the sandbox, ensure that any global policies in Ranger have been disabled.

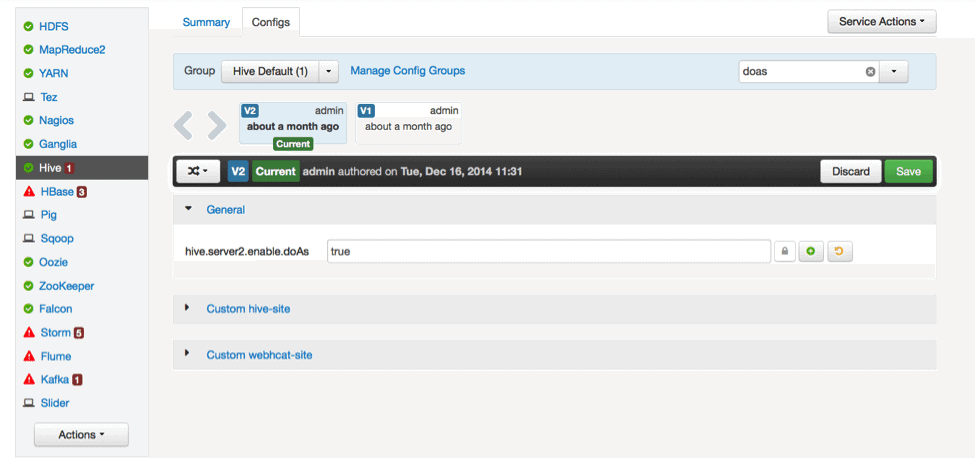

Step 1. Configuration changes: hive-site.xml or Ambari → Hive → Config

In Ambari → Hive → Config, ensure that hive.server2.enable.doAs is set to “true”. This means HiveServer2 runs MR jobs and accesses HDFS as the original (connected) user.

Restart the Hive service in Ambari after changing any configuration.

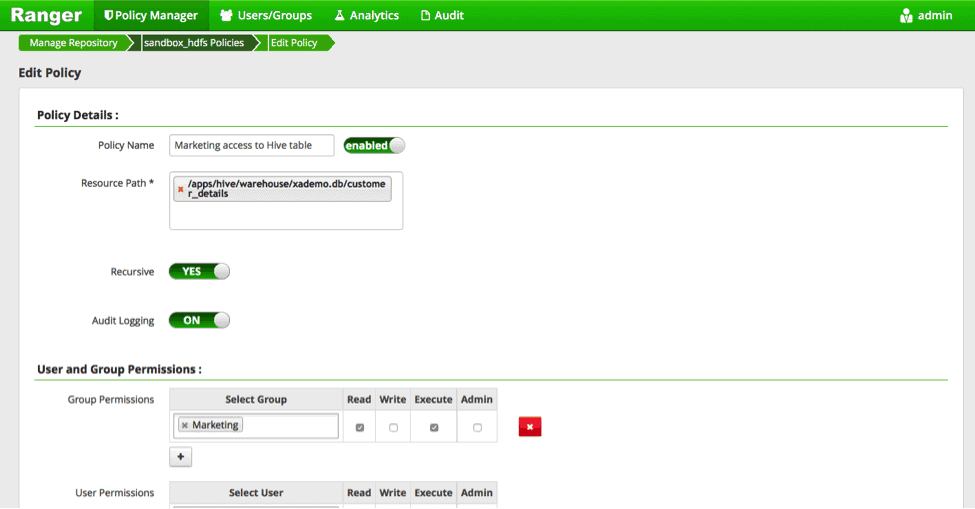

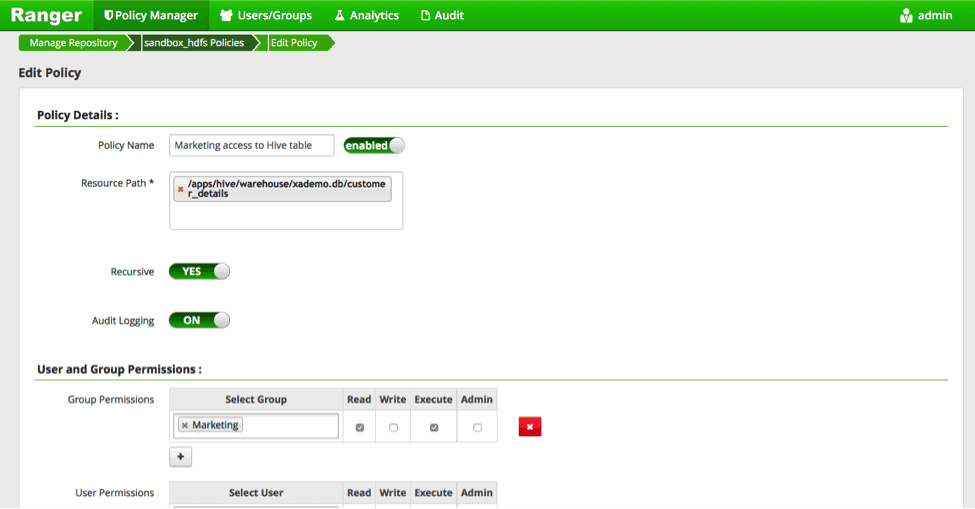

Step 2. In Ranger, create HDFS policies for the files that back Hive tables

In the example below, we give the marketing group “read” permission on the files corresponding to the Hive table “customer_details”.

Users in that group can then access the data through HDFS commands as well.
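An HDFS policy of this kind pairs a resource path (optionally applied recursively) with groups and permissions. The sketch below is a hypothetical, simplified model of that matching, not Ranger's implementation: it ignores deny handling and any fallback to native HDFS permissions, and it assumes “customer_details” is a managed table in the `xademo` database, stored under the warehouse directory used in this post. The file name `part-00000` is illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HdfsPolicy:
    """Hypothetical, simplified model of a Ranger HDFS policy."""
    path: str
    recursive: bool
    groups: frozenset
    permissions: frozenset  # subset of {"read", "write", "execute"}


def covers(policy, path):
    """True if the policy's resource path covers the requested path."""
    base = policy.path.rstrip("/")
    if path.rstrip("/") == base:
        return True
    # In this sketch a recursive policy covers everything below the
    # directory, but not siblings that merely share a name prefix.
    return policy.recursive and path.startswith(base + "/")


def allowed(policy, user_groups, path, permission):
    return (covers(policy, path)
            and permission in policy.permissions
            and bool(policy.groups & set(user_groups)))


# Assumption: customer_details is a managed table in the xademo database.
TABLE_DIR = "/apps/hive/warehouse/xademo.db/customer_details"
MARKETING_READ = HdfsPolicy(TABLE_DIR, True, frozenset({"marketing"}),
                            frozenset({"read"}))

if __name__ == "__main__":
    part_file = TABLE_DIR + "/part-00000"  # hypothetical data file name
    print(allowed(MARKETING_READ, {"marketing"}, part_file, "read"))   # True
    print(allowed(MARKETING_READ, {"marketing"}, part_file, "write"))  # False
    print(allowed(MARKETING_READ, {"marketing"}, TABLE_DIR + "_old", "read"))  # False
```

Granting on the table directory with the recursive flag means new data files written into the table are covered without further policy changes.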

Step 3. Check the audit logs in Ranger

You will see audit entries in both Hive and HDFS with the original user’s ID.

Scenario 3 – Hive CLI access

If analysts use the Hive CLI as their predominant method for running queries, security needs to be configured differently.

The Hive CLI loads the Hive configuration into the client and reads data directly from HDFS or through MapReduce/Tez tasks, without going through HiveServer2. The best way to protect Hive CLI access is therefore to set permissions on the HDFS files and folders mapped to the Hive databases and tables. To secure the metastore, we also recommend turning on storage-based authorization.
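To find which HDFS folders to protect, it helps to know how Hive lays out managed tables under the warehouse directory by default: tables in the default database sit directly under it, and tables in other databases sit under a `<database>.db` subdirectory. The sketch below assumes the warehouse root /apps/hive/warehouse used in this post (the value of hive.metastore.warehouse.dir) and managed tables created without a LOCATION clause; for external tables or tables with an explicit location, look up the path instead, for example with DESCRIBE FORMATTED. The `table_dir` function is a hypothetical helper.

```python
import posixpath

# Assumption: hive.metastore.warehouse.dir is /apps/hive/warehouse, and the
# table is a managed table created without a LOCATION clause.
WAREHOUSE_DIR = "/apps/hive/warehouse"


def table_dir(database: str, table: str, warehouse: str = WAREHOUSE_DIR) -> str:
    """Hypothetical helper: default HDFS directory of a managed Hive table."""
    # Hive identifiers are case-insensitive and stored in lower case.
    db, tbl = database.lower(), table.lower()
    if db == "default":
        # Tables in the default database live directly under the warehouse root.
        return posixpath.join(warehouse, tbl)
    # Tables in other databases live under <database>.db/.
    return posixpath.join(warehouse, f"{db}.db", tbl)


if __name__ == "__main__":
    print(table_dir("xademo", "customer_details"))
    # /apps/hive/warehouse/xademo.db/customer_details
```

The printed path matches the inode reported in the Hive CLI permission error later in this scenario.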

Note that the Ranger Hive plugin applies only to HiveServer2. Hive CLI access should be protected with permissions at the HDFS folder/file level, using Ranger or HDFS ACLs.


- First, identify the files corresponding to the Hive tables by looking through the directory /apps/hive/warehouse.

- Set permissions for these folders in Ranger → HDFS Policies.

- Run queries through the Hive CLI:

```
[root@sandbox ~]# su - mktg1
[mktg1@sandbox ~]$ hive
hive> use xademo;
OK
Time taken: 9.855 seconds
hive> select phone_number from customer_details;
OK
PHONE_NUM
5553947406
7622112093
5092111043
9392254909
7783343634
```

- Check the audit entries in Ranger.

- Run a DDL command through the Hive CLI. In the example below, user “it1” tries to drop the table:

```
[root@sandbox ~]# su - it1
[it1@sandbox ~]$ hive
hive> use xademo;
OK
Time taken: 12.175 seconds
hive> drop table customer_details;
FAILED: SemanticException Unable to fetch table customer_details. java.security.AccessControlException: Permission denied: user=it1, access=READ, inode="/apps/hive/warehouse/xademo.db/customer_details":hive:hdfs:drwx------
```

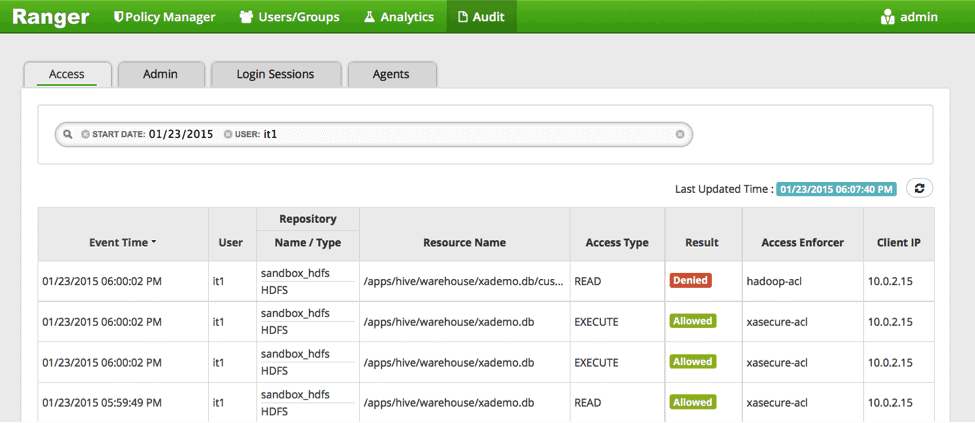

The action drop table is denied due to lack of permission at the HDFS level. It can be verified in the Ranger audit logs:
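The error message carries everything needed to see why HDFS refused the request: the table directory is owned by user hive and group hdfs with mode drwx------, so only the owner has any access, and the request needed READ. The sketch below applies a basic POSIX-style owner/group/other check to that inode. It is a deliberate simplification written for illustration: it ignores HDFS ACLs, the HDFS superuser and Ranger HDFS policies (which can grant access beyond these bits), and the group memberships of “it1” and “hive” are assumptions.

```python
# Position of each access type within an "rwx" permission triplet.
ACCESS_INDEX = {"READ": 0, "WRITE": 1, "EXECUTE": 2}


def check_access(user, user_groups, owner, group, mode, access):
    """Basic POSIX-style check of `access` against an ls-style mode string.

    mode looks like "drwx------": one file-type character followed by the
    owner, group and other permission triplets. ACLs, the HDFS superuser
    and Ranger policies are deliberately ignored in this sketch.
    """
    perms = mode[1:]  # drop the file-type character ('d' or '-')
    if user == owner:
        triplet = perms[0:3]   # owner class
    elif group in user_groups:
        triplet = perms[3:6]   # group class
    else:
        triplet = perms[6:9]   # everyone else
    i = ACCESS_INDEX[access]
    return triplet[i] == "rwx"[i]


if __name__ == "__main__":
    # Values from the error: inode=".../customer_details":hive:hdfs:drwx------
    inode = dict(owner="hive", group="hdfs", mode="drwx------")
    # Assumed group memberships, for illustration only.
    print(check_access("it1", {"it1"}, access="READ", **inode))      # False
    print(check_access("hive", {"hadoop"}, access="READ", **inode))  # True
```

Granting “it1” access would therefore require either a Ranger HDFS policy on this path or a change to the HDFS permissions or ACLs, as described earlier in this scenario.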

Summary

Hive will continue to evolve as the predominant application for accessing data within Hadoop. With Apache Ranger, you can configure policies that provide fine-grained access control in Hive and HDFS and secure your data from unauthorized access. Use this post as a guide to configure the security policies that best support your data access needs and use cases.