Cloudera is trusted by regulated industries and Government organisations around the world to store and analyze petabytes of highly sensitive or confidential information about people, healthcare data, financial data or just proprietary information sensitive to the customer itself.

Anyone storing customer, healthcare, financial or sensitive proprietary information needs to take steps to protect that data, including detecting and preventing inadvertent or malicious access. According to research by The Ponemon Institute, the average global cost of insider threats rose by 31% in two years to $11.45 million, and the frequency of incidents rose by 47% over the same period. A 2019 report found that companies are more worried about inadvertent insider breaches (71%), negligent data breaches (65%), and malicious intent by bad actors (60%) than they are about compromised accounts or machines (9%).

Regulations such as the GDPR, CCPA, HIPAA, PCI DSS, and FIPS-200 all require that organizations take appropriate measures to protect sensitive information, which can include the three pillars of:

- Encryption – at rest and on the wire – ensuring that unauthenticated actors cannot get access to the data

- Access control (strong authentication and authorization) – ensuring that users are who they say they are (authentication) and that they can only get access to what they are allowed to access (authorization)

- Audit and Accounting – knowing who accessed what, and when, and who changed permissions or access control settings and potentially alerting to data breaches as they are occurring rather than after the fact.

In the Cloudera Data Platform, we excel at providing end-to-end security through the Cloudera Shared Data Experience (SDX). In CDP:

- All wire protocols can be encrypted using TLS or SASL based encryption

- All data at rest can be encrypted using HDFS Transparent Data Encryption (Private Cloud) or object store encryption (Public Cloud)

- All user accesses are authenticated via Kerberos/SPNEGO or SAML in both Public and Private Cloud.

- All data access is authorized via Attribute-Based Access Control or Role-Based Access Control with Apache Ranger as part of SDX.

- All data access and data access controls are audited, again with Apache Ranger.

Protective Monitoring

Through an effective Protective Monitoring program companies can ensure that they have visibility of who is accessing, or trying to access, what data and from what devices across their entire IT estate. This can be accomplished via:

- Compliance and Reporting – after-the-fact reports on who has accessed specific data assets

- Digital forensics and incident response – responding to regulators or Information Commissioners after a breach has been identified

- Advanced threat detection – real-time monitoring of access events to identify changes in behavior on a user level, data asset level, or across systems. Some SIEM platforms such as Securonix include these types of capabilities.

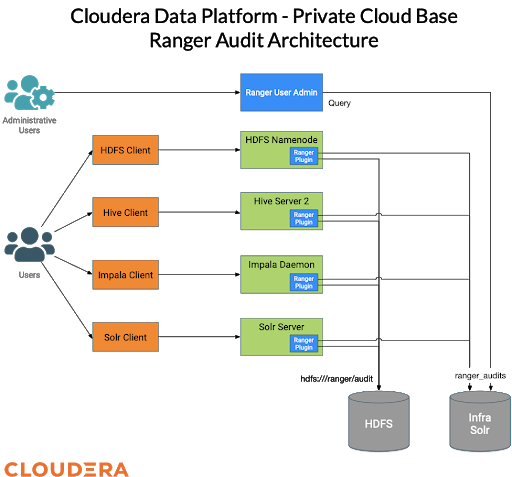

Auditing in the Cloudera Data Platform

All of the data access components in CDP send audit events to Apache Ranger, where they are stored and can be searched up to a configurable retention period.

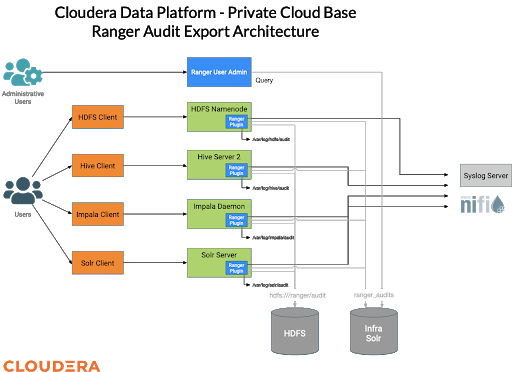

In this blog we will demonstrate how these audit events can be streamed to a third-party SIEM platform via syslog, or written to a local file where an existing SIEM agent can pick them up. In this architecture we configure the Ranger plugin on each service to export its audit events to a remote syslog server and to write them to local disk.

An example of a remote syslog server capable of performing complex filtering and routing logic is a Cloudera Flow NiFi Server running the ListenSyslog processor as demonstrated here.
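To make the receiving side concrete, here is a minimal sketch of a UDP syslog listener in Python. It is only a stand-in for checking that events arrive, not a replacement for NiFi or a SIEM collector. The function names `open_listener` and `receive_datagrams`, and the unprivileged port 5514 in the usage note, are our own assumptions.

```python
import socket
from typing import List


def open_listener(host: str = "0.0.0.0", port: int = 514) -> socket.socket:
    """Bind a UDP socket on which syslog datagrams can be received.

    Port 514 normally requires elevated privileges; pass a higher port
    (for example 5514) when testing as an ordinary user.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock


def receive_datagrams(sock: socket.socket, count: int) -> List[str]:
    """Block until `count` datagrams arrive and return them as text lines."""
    messages = []
    for _ in range(count):
        data, _sender = sock.recvfrom(65535)  # one syslog message per datagram
        messages.append(data.decode("utf-8", errors="replace").rstrip("\n"))
    return messages
```

For a quick check, one could call `open_listener(port=5514)`, point the syslog appender of a service at that host and port, and print whatever `receive_datagrams(listener, 1)` returns in a loop.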

We will do this by configuring the Ranger plugin to write its events to log4j, and then configuring the log4j settings on each service to add file and syslog appenders.

HDFS

HDFS audits all of the file interactions by all services. Using Cloudera Manager we will set the following settings:

| HDFS Service Advanced Configuration Snippet (Safety Valve) for ranger-hdfs-audit.xml |
| --- |
| Name: `xasecure.audit.destination.log4j` Value: `true` |
| Name: `xasecure.audit.destination.log4j.logger` Value: `ranger.audit` |

| NameNode Logging Advanced Configuration Snippet (Safety Valve) |
| --- |
| `log4j.appender.RANGER_AUDIT=org.apache.log4j.DailyRollingFileAppender` |
| `log4j.appender.RANGER_AUDIT.File=/var/log/hadoop-hdfs/ranger-hdfs-audit.log` |
| `log4j.appender.RANGER_AUDIT.layout=org.apache.log4j.PatternLayout` |
| `log4j.appender.RANGER_AUDIT.layout.ConversionPattern=%m%n` |
| `log4j.logger.ranger.audit=INFO,RANGER_AUDIT,SYSAUDIT` |
| `log4j.appender.SYSAUDIT=org.apache.log4j.net.SyslogAppender` |
| `log4j.appender.SYSAUDIT.threshold=INFO` |
| `log4j.appender.SYSAUDIT.syslogHost=<sysloghost>` |
| `log4j.appender.SYSAUDIT.layout=org.apache.log4j.PatternLayout` |
| `log4j.appender.SYSAUDIT.layout.conversionPattern=%d{MMM dd HH:mm:ss} ${hostName}HDFS: %m%n` |
| `log4j.appender.SYSAUDIT.filter.a=org.apache.log4j.varia.LevelRangeFilter` |
| `log4j.appender.SYSAUDIT.filter.a.LevelMin=INFO` |
| `log4j.appender.SYSAUDIT.filter.a.LevelMax=INFO` |

HiveServer 2

All SQL submitted to HiveServer2 will be audited by this plugin. The configuration for HiveServer2 uses a different syntax from the HDFS configuration above because HiveServer2 uses Log4j2. Using Cloudera Manager we will set the following settings on the Hive on Tez service:

| Hive Service Advanced Configuration Snippet (Safety Valve) for ranger-hive-audit.xml |
| --- |
| Name: `xasecure.audit.destination.log4j` Value: `true` |
| Name: `xasecure.audit.destination.log4j.logger` Value: `ranger.audit` |

| HiveServer2 Logging Advanced Configuration Snippet (Safety Valve) |
| --- |
| `appenders=console, DRFA, redactorForRootLogger, RANGERAUDIT, SYSAUDIT` |
| `loggers = Ranger` |
| `logger.Ranger.name = ranger.audit` |
| `logger.Ranger.level = INFO` |
| `logger.Ranger.appenderRefs = SYSAUDIT, RANGERAUDIT` |
| `logger.Ranger.appenderRef.RANGERAUDIT.ref = RANGERAUDIT` |
| `logger.Ranger.appenderRef.SYSAUDIT.ref = SYSAUDIT` |
| `appender.RANGERAUDIT.type=file` |
| `appender.RANGERAUDIT.name=RANGERAUDIT` |
| `appender.RANGERAUDIT.fileName=/var/log/hive/ranger-audit.log` |
| `appender.RANGERAUDIT.filePermissions=rwx------` |
| `appender.RANGERAUDIT.layout.type=PatternLayout` |
| `appender.RANGERAUDIT.layout.pattern=%d{ISO8601} %q %5p [%t] %c{2} (%F:%M(%L)) - %m%n` |
| `appender.SYSAUDIT.type=Syslog` |
| `appender.SYSAUDIT.name=SYSAUDIT` |
| `appender.SYSAUDIT.host = <sysloghost>` |
| `appender.SYSAUDIT.port = 514` |
| `appender.SYSAUDIT.protocol = UDP` |
| `appender.SYSAUDIT.layout.type=PatternLayout` |
| `appender.SYSAUDIT.layout.pattern=%d{MMM dd HH:mm:ss} ${hostName} Hive: %m%n` |

Impala

Impala Daemons will log all Impala SQL statements. Again, this will be configured via Cloudera Manager:

| Impala Service Advanced Configuration Snippet (Safety Valve) for ranger-impala-audit.xml |
| --- |
| Name: `xasecure.audit.destination.log4j` Value: `true` |
| Name: `xasecure.audit.destination.log4j.logger` Value: `ranger.audit` |

| Impala Daemon Logging Advanced Configuration Snippet (Safety Valve) |
| --- |
| `log4j.appender.RANGER_AUDIT=org.apache.log4j.DailyRollingFileAppender` |
| `log4j.appender.RANGER_AUDIT.File=/var/log/impalad/ranger-impala-audit.log` |
| `log4j.appender.RANGER_AUDIT.layout=org.apache.log4j.PatternLayout` |
| `log4j.appender.RANGER_AUDIT.layout.ConversionPattern=%m%n` |
| `log4j.logger.ranger.audit=INFO,RANGER_AUDIT,SYSAUDIT` |
| `log4j.appender.SYSAUDIT=org.apache.log4j.net.SyslogAppender` |
| `log4j.appender.SYSAUDIT.threshold=INFO` |
| `log4j.appender.SYSAUDIT.syslogHost=<sysloghost>` |
| `log4j.appender.SYSAUDIT.layout=org.apache.log4j.PatternLayout` |
| `log4j.appender.SYSAUDIT.layout.conversionPattern=%d{MMM dd HH:mm:ss} ${hostName}Impala: %m%n` |
| `log4j.appender.SYSAUDIT.filter.a=org.apache.log4j.varia.LevelRangeFilter` |
| `log4j.appender.SYSAUDIT.filter.a.LevelMin=INFO` |
| `log4j.appender.SYSAUDIT.filter.a.LevelMax=INFO` |

Solr

The Solr server will log all queries submitted to the Solr API. Again, this will be configured via Cloudera Manager:

| Solr Service Advanced Configuration Snippet (Safety Valve) for ranger-solr-audit.xml |
| --- |
| Name: `xasecure.audit.destination.log4j` Value: `true` |
| Name: `xasecure.audit.destination.log4j.logger` Value: `ranger.audit` |

| Solr Server Logging Advanced Configuration Snippet (Safety Valve) |
| --- |
| `appenders=console, DRFA, redactorForRootLogger, RANGERAUDIT, SYSAUDIT` |
| `loggers = Ranger` |
| `logger.Ranger.name = ranger.audit` |
| `logger.Ranger.level = INFO` |
| `logger.Ranger.appenderRefs = SYSAUDIT, RANGERAUDIT` |
| `logger.Ranger.appenderRef.RANGERAUDIT.ref = RANGERAUDIT` |
| `logger.Ranger.appenderRef.SYSAUDIT.ref = SYSAUDIT` |
| `appender.RANGERAUDIT.type=file` |
| `appender.RANGERAUDIT.name=RANGERAUDIT` |
| `appender.RANGERAUDIT.fileName=/var/log/solr/ranger-solr.log` |
| `appender.RANGERAUDIT.filePermissions=rwx------` |
| `appender.RANGERAUDIT.layout.type=PatternLayout` |
| `appender.RANGERAUDIT.layout.pattern=%d{ISO8601} %q %5p [%t] %c{2} (%F:%M(%L)) - %m%n` |
| `appender.SYSAUDIT.type=Syslog` |
| `appender.SYSAUDIT.name=SYSAUDIT` |
| `appender.SYSAUDIT.host = <sysloghost>` |
| `appender.SYSAUDIT.port = 514` |
| `appender.SYSAUDIT.protocol = UDP` |
| `appender.SYSAUDIT.layout.type=PatternLayout` |
| `appender.SYSAUDIT.layout.pattern=%d{MMM dd HH:mm:ss} ${hostName} Solr: %m%n` |

Hue

Hue is not currently integrated with Ranger; however, it can write audit events to a file, including user logon events and events recording when users download the results of queries. This can be enabled via Cloudera Manager:

| Hue Service Advanced Configuration Snippet (Safety Valve) for hue_safety_valve.ini |
| --- |
| `[desktop]` |
| `audit_event_log_dir=/var/log/hue/audit/hue-audit.log` |

Example Outputs

Once these settings have been configured we can test to see that the events are being sent correctly.

The events below were recorded by an Rsyslog server running on a remote server with a custom configuration:

HDFS

2021-05-04T03:25:36-07:00 host1.example.com HDFS: {"repoType":1,"repo":"cm_hdfs","reqUser":"teststd","evtTime":"2021-05-04 03:25:35.069","access":"open","resource":"/tstest/testfile2","resType":"path","action":"read","result":1,"agent":"hdfs","policy":-1,"reason":"/tstest/testfile2","enforcer":"hadoop-acl","cliIP":"172.27.172.2","reqData":"open/CLI","agentHost":"host1.example.com","logType":"RangerAudit","id":"41a20548-c55d-4169-ac80-09c1cca8265e-0","seq_num":1,"event_count":1,"event_dur_ms":1,"tags":[],"additional_info":"{\"remote-ip-address\":172.27.172.2, \"forwarded-ip-addresses\":[], \"accessTypes\":[read]","cluster_name":"CDP PvC Base Single-node Cluster"}

2021-05-04T03:29:27-07:00 host1.example.com HDFS: {"repoType":1,"repo":"cm_hdfs","reqUser":"teststd","evtTime":"2021-05-04 03:29:22.375","access":"open","resource":"/tstest/testfile3","resType":"path","action":"read","result":0,"agent":"hdfs","policy":-1,"reason":"/tstest/testfile3","enforcer":"hadoop-acl","cliIP":"172.27.172.2","reqData":"open/CLI","agentHost":"host1.example.com","logType":"RangerAudit","id":"e6806644-1b66-4066-ae0d-7f9d0023fbbb-0","seq_num":1,"event_count":1,"event_dur_ms":1,"tags":[],"additional_info":"{\"remote-ip-address\":172.27.172.2, \"forwarded-ip-addresses\":[], \"accessTypes\":[read]","cluster_name":"CDP PvC Base Single-node Cluster"}

In the example above, the first access was allowed ("result":1) and the second access was denied ("result":0).
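As a rough illustration of how a downstream consumer might act on these events, the sketch below splits a forwarded line into its syslog prefix and Ranger JSON payload, then flags denied accesses. It assumes the prefix layout shown in the HDFS sample (timestamp, host, service tag), which comes from our custom Rsyslog configuration and may differ in yours. The helper names `parse_audit_line` and `is_denied` are hypothetical, and the sample line is trimmed to a few fields for readability.

```python
import json
from typing import Optional, Tuple

# Trimmed copy of the denied HDFS event shown above (most fields omitted).
SAMPLE_LINE = (
    '2021-05-04T03:29:27-07:00 host1.example.com HDFS: '
    '{"repo":"cm_hdfs","reqUser":"teststd","access":"open",'
    '"resource":"/tstest/testfile3","result":0,"cliIP":"172.27.172.2"}'
)


def parse_audit_line(line: str) -> Tuple[Optional[str], dict]:
    """Return (service_tag, event) for one forwarded audit line.

    The JSON payload is taken to start at the first "{"; anything before
    it is treated as the syslog prefix, whose last token is the service
    tag (for example "HDFS:"). Lines without a prefix yield a tag of None.
    """
    brace = line.index("{")
    prefix_tokens = line[:brace].split()
    service = prefix_tokens[-1].rstrip(":") if prefix_tokens else None
    return service, json.loads(line[brace:])


def is_denied(event: dict) -> bool:
    """A Ranger audit event with "result": 0 records a denied access."""
    return event.get("result") == 0


service, event = parse_audit_line(SAMPLE_LINE)
if is_denied(event):
    print(f"{service}: {event['reqUser']} was denied {event['access']} "
          f"on {event['resource']}")
```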

Hive

2021-05-04T03:35:25-07:00 host1.example.com Hive: {"repoType":3,"repo":"cm_hive","reqUser":"admin","evtTime":"2021-05-04 03:35:23.220","access":"SELECT","resource":"default/sample_07/description,salary","resType":"@column","action":"select","result":1,"agent":"hiveServer2","policy":8,"enforcer":"ranger-acl","sess":"303bbfbe-3538-4ebe-ab48-c52c80f23a35","cliType":"HIVESERVER2","cliIP":"172.27.172.2","reqData":"SELECT sample_07.description, sample_07.salary\r\nFROM\r\n sample_07\r\nWHERE\r\n( sample_07.salary \u003e 100000)\r\nORDER BY sample_07.salary DESC\r\nLIMIT 1000","agentHost":"host1.example.com","logType":"RangerAudit","id":"b6903fd2-49bd-4c8e-bad6-667ae406f301-0","seq_num":1,"event_count":1,"event_dur_ms":1,"tags":[],"additional_info":"{\"remote-ip-address\":172.27.172.2, \"forwarded-ip-addresses\":[]","cluster_name":"CDP PvC Base Single-node Cluster","policy_version":1}

Impala

2021-05-04T03:32:01-07:00 host1.example.com Impala: {"repoType":3,"repo":"cm_hive","reqUser":"admin","evtTime":"2021-05-04 03:31:54.666","access":"select","resource":"default/sample_07","resType":"@table","action":"select","result":1,"agent":"impala","policy":8,"enforcer":"ranger-acl","cliIP":"::ffff:172.27.172.2","reqData":"SELECT s07.description, s07.salary, s08.salary,\r s08.salary - s07.salary\r FROM\r sample_07 s07 JOIN sample_08 s08\r ON ( s07.code \u003d s08.code)\r WHERE\r s07.salary \u003c s08.salary\r ORDER BY s08.salary-s07.salary DESC\r LIMIT 1000","agentHost":"host1.example.com","logType":"RangerAudit","id":"f995bc52-dbdf-4617-96f6-61a176f6a727-0","seq_num":0,"event_count":1,"event_dur_ms":1,"tags":[],"cluster_name":"CDP PvC Base Single-node Cluster","policy_version":1}
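Note that the Impala event reports the client address as `::ffff:172.27.172.2` (an IPv4-mapped IPv6 address), while the Hive event for the same client shows `172.27.172.2`. If you correlate events across services by `cliIP`, you may want to normalize the two forms first. A minimal sketch using Python's standard `ipaddress` module follows; the function name `normalize_client_ip` is our own.

```python
import ipaddress


def normalize_client_ip(cli_ip: str) -> str:
    """Collapse IPv4-mapped IPv6 addresses to their plain IPv4 form."""
    addr = ipaddress.ip_address(cli_ip)
    # ipv4_mapped is only defined on IPv6 addresses; it is None unless
    # the address is of the form ::ffff:a.b.c.d.
    if addr.version == 6 and addr.ipv4_mapped is not None:
        return str(addr.ipv4_mapped)
    return str(addr)
```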

Solr

In the Solr audits, by default only the fact that the query happened is audited:

{"repoType":8,"repo":"cm_solr","reqUser":"admin","evtTime":"2021-05-04 02:33:22.916","access":"query","resource":"twitter_demo","resType":"collection","action":"query","result":1,"agent":"solr","policy":39,"enforcer":"ranger-acl","cliIP":"172.27.172.2","agentHost":"host1.example.com","logType":"RangerAudit","id":"951c7dea-8ae7-49a5-8539-8c993651f75c-0","seq_num":1,"event_count":2,"event_dur_ms":199,"tags":[],"cluster_name":"CDP PvC Base Single-node Cluster","policy_version":2}

However, if document-level authorization is enabled in Solr, the audit event also includes the query text:

2021-05-04T06:23:00-07:00 host1.example.com Solr: {"repoType":8,"repo":"cm_solr","reqUser":"admin","evtTime":"2021-05-04 06:22:55.366","access":"query","resource":"testcollection","resType":"collection","action":"others","result":0,"agent":"solr","policy":-1,"enforcer":"ranger-acl","cliIP":"172.27.172.2","reqData":"{! q\u003dtext:mysearchstring doAs\u003dadmin df\u003d_text_ echoParams\u003dexplicit start\u003d0 rows\u003d100 wt\u003djson}","agentHost":"host1.example.com","logType":"RangerAudit","id":"6b14c79f-e30d-4635-bd07-a5d116ee4d0f-0","seq_num":1,"event_count":1,"event_dur_ms":1,"tags":[],"cluster_name":"CDP PvC Base Single-node Cluster"}
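In this event the query arrives in `reqData` as a single `{! key=value ...}` string, with `=` escaped as `\u003d` in the raw JSON. The sketch below shows one way to turn it into a dictionary after JSON decoding. It is a simplification that assumes no parameter value contains whitespace, and the helper name `parse_solr_req_data` and the trimmed sample are hypothetical.

```python
import json

# Trimmed fragment of the Solr event above; the raw string keeps the
# \u003d escapes exactly as they appear in the audit log.
RAW_EVENT = (
    r'{"reqUser":"admin","resource":"testcollection",'
    r'"reqData":"{! q\u003dtext:mysearchstring doAs\u003dadmin '
    r'rows\u003d100 wt\u003djson}"}'
)


def parse_solr_req_data(req_data: str) -> dict:
    """Split a decoded '{! k=v k=v}' string into a dict of parameters."""
    body = req_data.strip()
    if body.startswith("{!"):
        body = body[2:]
    if body.endswith("}"):
        body = body[:-1]
    params = {}
    for token in body.split():
        key, sep, value = token.partition("=")
        if sep:  # ignore tokens that are not key=value pairs
            params[key] = value
    return params


event = json.loads(RAW_EVENT)  # json.loads turns \u003d back into "="
params = parse_solr_req_data(event["reqData"])
print(params["q"])
```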

Hue

These lines are recorded directly from the Hue audit log file.

{"username": "admin", "impersonator": "hue", "eventTime": 1620124241293, "operationText": "Successful login for user: admin", "service": "hue", "url": "/hue/accounts/login", "allowed": true, "operation": "USER_LOGIN", "ipAddress": "10.96.85.63"}

{"username": "admin", "impersonator": "hue", "eventTime": 1620131105118, "operationText": "User admin downloaded results from query-impala-46 as xls", "service": "notebook", "url": "/notebook/download", "allowed": true, "operation": "DOWNLOAD", "ipAddress": "10.96.85.63"}
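Unlike the Ranger events, the Hue events carry `eventTime` as a number rather than a formatted string. The values shown here are consistent with milliseconds since the Unix epoch, and the sketch below converts them on that assumption so they can be lined up with the Ranger timestamps. The function name `hue_event_time` is our own.

```python
from datetime import datetime, timedelta, timezone


def hue_event_time(event_time_ms: int) -> datetime:
    """Convert a Hue eventTime (assumed epoch milliseconds) to UTC.

    Integer arithmetic is used so the millisecond part is not subject
    to floating-point rounding.
    """
    seconds, millis = divmod(event_time_ms, 1000)
    return (datetime.fromtimestamp(seconds, tz=timezone.utc)
            + timedelta(milliseconds=millis))


# The login event above: 1620124241293 is 2021-05-04 10:30:41.293 UTC,
# which is 03:30:41 at the -07:00 offset used in the syslog samples.
print(hue_event_time(1620124241293).isoformat())
```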

Summary

Auditing and accounting is a security control, often required by regulation, for organizations that store and process customer, healthcare, financial or proprietary information. It helps counter the growing threat of insider actions, both unintentional and malicious.

In this blog, we have discussed ways of forwarding audit events from CDP to an external SIEM using both file-based and syslog-based audit generation.

For further information on configuring and using Apache Ranger please review the CDP documentation.

For the Hive on Tez section, I believe you’re missing

xasecure.audit.log4j.is.enabled: true

Do we have one for the HBase service? I tried to reproduce this using the above config as a reference. The audit file is created, but there are no entries in it.