Evaluating which streaming architectural pattern is the best match to your use case is a precondition for a successful production deployment.

The Apache Hadoop ecosystem has become a preferred platform for enterprises seeking to process and understand large-scale data in real time. Technologies like Apache Kafka, Apache Flume, Apache Spark, Apache Storm, and Apache Samza are increasingly pushing the envelope on what is possible. It is often tempting to bucket large-scale streaming use cases together, but in reality they tend to break down into a few different architectural patterns, with different components of the ecosystem better suited to different problems.

In this post, I will outline the four major streaming patterns that we have encountered with customers running enterprise data hubs in production, and explain how to implement those patterns architecturally on Hadoop.

Streaming Patterns

The four basic streaming patterns (often used in tandem) are:

- Stream ingestion: Involves low-latency persisting of events to HDFS, Apache HBase, and Apache Solr.

- Near Real-Time (NRT) Event Processing with External Context: Takes actions like alerting, flagging, transforming, and filtering of events as they arrive. Actions might be taken based on sophisticated criteria, such as anomaly detection models. Common use cases, such as NRT fraud detection and recommendation, often demand low latencies under 100 milliseconds.

- NRT Partitioned Event Processing: Similar to NRT event processing, but deriving benefits from partitioning the data, such as being able to keep more of the relevant external information in memory. This pattern also requires processing latencies under 100 milliseconds.

- Complex Topology for Aggregations or ML: The holy grail of stream processing: gets real-time answers from data with a complex and flexible set of operations. Here, because results often depend on windowed computations and require more active data, the focus shifts from ultra-low latency to functionality and accuracy.

In the following sections, we’ll get into recommended ways for implementing such patterns in a tested, proven, and maintainable way.

Streaming Ingestion

Traditionally, Flume has been the recommended system for streaming ingestion. Its large library of sources and sinks covers all the bases of what to consume and where to write. (For details about how to configure and manage Flume, Using Flume, the O’Reilly Media book by Cloudera Software Engineer/Flume PMC member Hari Shreedharan, is a great resource.)

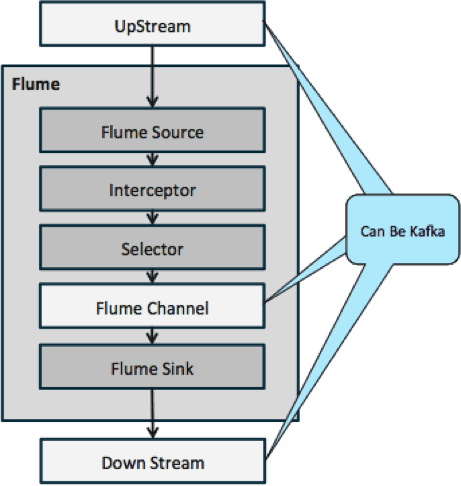

Within the last year, Kafka has also become popular because of powerful features such as playback and replication. Because of the overlap between Flume’s and Kafka’s goals, their relationship is often confusing. How do they fit together? The answer is simple: Kafka is a pipe similar to Flume’s Channel abstraction, albeit a better pipe because of its support for the features mentioned above. One common approach is to use Flume for the source and sink, and Kafka for the pipe between them.

The diagram below illustrates how Kafka can serve as the upstream source of data for Flume, the downstream destination of Flume, or the Flume Channel.

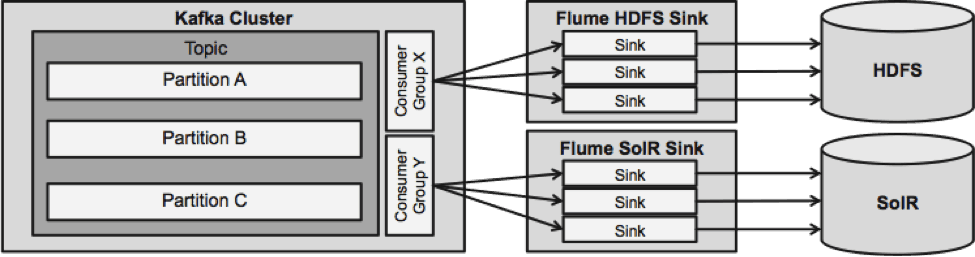

The design illustrated below is massively scalable, battle hardened, centrally monitored through Cloudera Manager, fault tolerant, and supports replay.

One thing to note before we go to the next streaming architecture is how this design gracefully handles failure. The Flume Sinks pull from a Kafka consumer group. The consumer group tracks the topic’s offset with help from Apache ZooKeeper. If a Flume Sink is lost, the consumer group will redistribute the load to the remaining sinks. When the Flume Sink comes back up, the consumer group will redistribute again.
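The redistribution described above can be pictured with a small toy model. This is a minimal sketch in plain Python, not Kafka's or Flume's actual assignment logic: the function `assign_partitions`, the sink names, and the round-robin strategy are all assumptions made for illustration.

```python
def assign_partitions(partitions, live_sinks):
    """Spread topic partitions round-robin across the sinks that are alive.

    Toy stand-in for a consumer-group rebalance; real Kafka uses its own
    assignment strategies and tracks committed offsets per partition.
    """
    if not live_sinks:
        raise ValueError("at least one live sink is required")
    ordered = sorted(live_sinks)
    assignment = {sink: [] for sink in ordered}
    for i, partition in enumerate(sorted(partitions)):
        assignment[ordered[i % len(ordered)]].append(partition)
    return assignment


partitions = range(6)

# All three (hypothetical) sinks are healthy.
before = assign_partitions(partitions, ["sink-a", "sink-b", "sink-c"])

# sink-b is lost: its partitions are spread over the survivors.
after_failure = assign_partitions(partitions, ["sink-a", "sink-c"])

# sink-b comes back: the group rebalances again.
after_recovery = assign_partitions(partitions, ["sink-a", "sink-b", "sink-c"])

print(before)
print(after_failure)
print(after_recovery)
```

Because offsets are tracked per partition rather than per sink, whichever sink takes over a partition can resume from the last recorded position, which is what makes the hand-off safe.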

NRT Event Processing with External Context

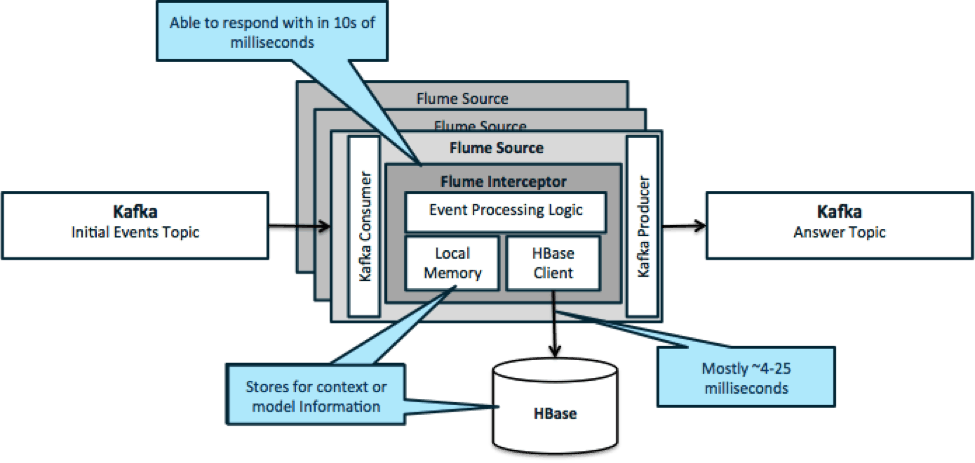

To reiterate, a common use case for this pattern is to look at events streaming in and make immediate decisions, either to transform the data or to take some sort of external action. The decision logic often depends on external profiles or metadata. An easy and scalable way to implement this approach is to add a Source or Sink Flume interceptor to your Kafka/Flume architecture. With modest tuning, it’s not difficult to achieve latencies in the low milliseconds.

Flume Interceptors take events or batches of events and allow user code to modify or take actions based on them. The user code can interact with local memory or an external storage system like HBase to get profile information needed for decisions. HBase usually can give us our information in around 4-25 milliseconds depending on network, schema design, and configuration. You can also set up HBase in a way that it is never down or interrupted, even in the case of failure.
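The interceptor idea can be sketched as follows. Real Flume interceptors are written in Java against Flume's own interface; this is only a plain-Python illustration of the logic. The class `ProfileInterceptor`, the `fetch_profile` callback (standing in for an HBase lookup), the event fields, and the flagging rule are all hypothetical.

```python
class ProfileInterceptor:
    """Flags events using profile data, checking local memory first."""

    def __init__(self, fetch_profile, threshold_ratio=3.0):
        # fetch_profile is a hypothetical callback standing in for a
        # lookup against an external store such as HBase.
        self._fetch_profile = fetch_profile
        self._threshold_ratio = threshold_ratio
        self._cache = {}
        self.remote_lookups = 0

    def _profile_for(self, user_id):
        # Only go to the external store when local memory misses.
        if user_id not in self._cache:
            self.remote_lookups += 1
            self._cache[user_id] = self._fetch_profile(user_id)
        return self._cache[user_id]

    def intercept(self, event):
        """Return a copy of the event with a 'flagged' decision attached."""
        profile = self._profile_for(event["user_id"])
        limit = self._threshold_ratio * profile["avg_amount"]
        return {**event, "flagged": event["amount"] > limit}

    def intercept_batch(self, events):
        return [self.intercept(event) for event in events]


# A dict plays the role of the external profile store in this sketch.
store = {"u1": {"avg_amount": 10.0}, "u2": {"avg_amount": 100.0}}
interceptor = ProfileInterceptor(store.__getitem__)

results = interceptor.intercept_batch([
    {"user_id": "u1", "amount": 50.0},
    {"user_id": "u1", "amount": 12.0},
    {"user_id": "u2", "amount": 120.0},
])
print([event["flagged"] for event in results])
print(interceptor.remote_lookups)
```

The second event for `u1` is answered from local memory, so only two external lookups are made for three events. An unbounded dictionary is used here for brevity; a real deployment would likely bound and expire the cache.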

Implementation requires almost no coding beyond the application-specific logic in the interceptor. Cloudera Manager offers an intuitive UI for deploying this logic through parcels as well as hooking up, configuring, and monitoring the services.

NRT Partitioned Event Processing with External Context

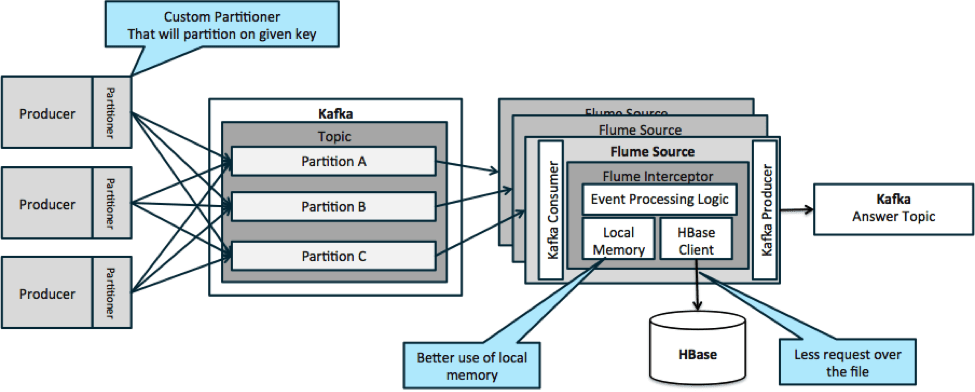

In the architecture illustrated below (unpartitioned solution), you would need to call out frequently to HBase because external context relevant to particular events does not fit in local memory on the Flume interceptors.

However, if you define a key to partition your data, you can match incoming data to the subset of the context data that is relevant to it. If you partition the data 10 times, then you only need to hold 1/10th of the profiles in memory. HBase is fast, but local memory is faster. Kafka enables you to define a custom partitioner that it uses to split up your data.
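To make the "1/10th of the profiles" arithmetic concrete, here is a minimal sketch of key-based partitioning in plain Python. It does not use Kafka's partitioner API: `partition_for` and `profiles_for_partition` are hypothetical helpers, and CRC32 is just a convenient stable hash chosen for the example.

```python
import zlib

NUM_PARTITIONS = 10


def partition_for(key, num_partitions=NUM_PARTITIONS):
    """Map a key to a partition; the same key always lands in the same one."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def profiles_for_partition(profiles, partition, num_partitions=NUM_PARTITIONS):
    """Select only the profiles a given partition's consumer must hold."""
    return {
        key: value
        for key, value in profiles.items()
        if partition_for(key, num_partitions) == partition
    }


# Hypothetical profile data keyed by user ID.
profiles = {f"user-{i}": {"avg_amount": float(i)} for i in range(1000)}

# The consumer of partition 3 keeps only its own slice in memory.
local_profiles = profiles_for_partition(profiles, 3)

# An incoming event is routed by the same key, so its profile is local.
event = {"user_id": next(iter(local_profiles)), "amount": 42.0}
assert partition_for(event["user_id"]) == 3

print(len(local_profiles), "of", len(profiles), "profiles held locally")
```

The important property is that events and context are split by the same function of the same key, so a consumer never sees an event whose profile lives on a different partition.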

Note that Flume is not strictly necessary here; the root solution is just a Kafka consumer. So, you could use just a consumer in YARN or a map-only MapReduce application.

Complex Topology for Aggregations or ML

Up to this point, we have been exploring event-level operations. However, sometimes you need more complex operations like counts, averages, sessionization, or machine-learning model building that operate on batches of data. In this case, Spark Streaming is the ideal tool for several reasons:

- It’s easy to develop compared to other tools. Spark’s rich and concise APIs make building out complex topologies easy.

- Similar code for streaming and batch processing. With a few changes, the code for small batches in real time can be used for enormous batches offline. In addition to reducing code size, this approach reduces the time needed for testing and integration.

- There’s one engine to know. There is a cost that goes into training staff on the quirks and internals of distributed processing engines. Standardizing on Spark consolidates this cost for both streaming and batch.

- Micro-batching helps you scale reliably. Acknowledging at a batch level allows for more throughput and allows for solutions without the fear of a double-send. Micro-batching also helps with sending changes to HDFS or HBase in terms of performance at scale.

- Hadoop ecosystem integration is baked in. Spark has deep integration with HDFS, HBase, and Kafka.

- No risk of data loss. Thanks to the WAL and Kafka, Spark Streaming avoids data loss in case of failure.

- It’s easy to debug and run. You can debug and step through your Spark Streaming code in a local IDE without a cluster. Plus, the code looks like normal functional programming code, so it doesn’t take much time for a Java or Scala developer to make the jump. (Python is also supported.)

- Streaming is natively stateful. In Spark Streaming, state is a first-class citizen, meaning that it’s easy to write stateful streaming applications that are resilient to node failures.

- As the de facto standard, Spark is getting long-term investment from across the ecosystem. At the time of this writing, there were approximately 700 commits to Spark as a whole in the last 30 days, compared to other streaming frameworks such as Storm, with 15 commits during the same time.

- You have access to ML libraries. Spark’s MLlib is becoming hugely popular and its functionality will only increase.

- You can use SQL where needed. With Spark SQL, you can add SQL logic to your streaming application to reduce code complexity.
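The micro-batching and state points above can be illustrated without a cluster. The following is a minimal sketch in plain Python and deliberately does not use the Spark Streaming API; `micro_batches`, `count_batch`, and `run_stream` are hypothetical names, and the events are made-up `(timestamp_seconds, key)` pairs.

```python
from collections import Counter


def micro_batches(events, batch_seconds):
    """Group (timestamp, key) events into consecutive fixed-length batches."""
    batches = {}
    for timestamp, key in events:
        batches.setdefault(int(timestamp // batch_seconds), []).append(key)
    # Intervals with no events are simply skipped in this sketch.
    return [batches[index] for index in sorted(batches)]


def count_batch(keys):
    """Per-batch logic; the same function works on one huge offline batch."""
    return Counter(keys)


def run_stream(events, batch_seconds):
    """Fold each micro-batch into running state and record a snapshot."""
    state = Counter()
    snapshots = []
    for batch in micro_batches(events, batch_seconds):
        state.update(count_batch(batch))  # state is updated once per batch
        snapshots.append(dict(state))
    return snapshots


events = [
    (0.2, "login"), (0.7, "click"),
    (1.1, "click"), (1.9, "click"),
    (2.5, "login"),
]

snapshots = run_stream(events, batch_seconds=1)
for snapshot in snapshots:
    print(snapshot)

# The same counting logic applied offline to everything at once.
offline_total = dict(count_batch(key for _, key in events))
print(offline_total)
```

The final streaming snapshot matches the offline total, which is the practical meaning of sharing code between streaming and batch: the per-batch function does not care how large its batch is.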

Conclusion

There is a lot of power in streaming and several possible patterns, but as you have learned in this post, you can do really powerful things with minimal coding if you know which pattern matches up with your use case best.

Ted Malaska is a Solutions Architect at Cloudera, a contributor to Spark, Flume, and HBase, and a co-author of the O’Reilly book, Hadoop Applications Architecture.