Get an overview of the available mechanisms for backing up data stored in Apache HBase, and how to restore that data in the event of various data recovery/failover scenarios.

With increased adoption and integration of HBase into critical business systems, many enterprises need to protect this important business asset by building out robust backup and disaster recovery (BDR) strategies for their HBase clusters. As daunting as it may sound to quickly and easily back up and restore potentially petabytes of data, HBase and the Apache Hadoop ecosystem provide many built-in mechanisms to accomplish just that.

In this post, you will get a high-level overview of the available mechanisms for backing up data stored in HBase, and how to restore that data in the event of various data recovery/failover scenarios. After reading this post, you should be able to make an educated decision on which BDR strategy is best for your business needs. You should also understand the pros, cons, and performance implications of each mechanism. (The details herein apply to CDH 4.3.0/HBase 0.94.6 and later.)

Note: At the time of this writing, Cloudera Enterprise 4 offers production-ready backup and disaster recovery functionality for HDFS and the Hive Metastore via Cloudera BDR 1.0 as an individually licensed feature. HBase is not included in that GA release; therefore, the various mechanisms described in this blog are required. (Cloudera Enterprise 5, currently in beta, offers HBase snapshot management via Cloudera BDR.)

Backup

HBase is a log-structured merge-tree distributed data store with complex internal mechanisms to ensure data accuracy, consistency, versioning, and so on. So how in the world can you get a consistent backup copy of data that resides in a combination of HFiles and write-ahead logs (WALs) on HDFS and in memory on dozens of region servers?

Let’s start with the least disruptive, smallest data footprint, least performance-impactful mechanism and work our way up to the most disruptive, forklift-style tool:

- Snapshots

- Replication

- Export

- CopyTable

- HTable API

- Offline backup of HDFS data

The following table provides an overview for quickly comparing these approaches, which I’ll describe in detail below.

| Mechanism | Performance Impact | Data Footprint | Downtime | Incremental Backups | Ease of Implementation | Mean Time To Recovery (MTTR) |
| --- | --- | --- | --- | --- | --- | --- |
| Snapshots | Minimal | Tiny | Brief (Only on Restore) | No | Easy | Seconds |
| Replication | Minimal | Large | None | Intrinsic | Medium | Seconds |
| Export | High | Large | None | Yes | Easy | High |
| CopyTable | High | Large | None | Yes | Easy | High |
| API | Medium | Large | None | Yes | Difficult | Up to you |
| Manual | N/A | Large | Long | No | Medium | High |

Snapshots

As of CDH 4.3.0, HBase snapshots are fully functional, feature rich, and require no cluster downtime during their creation. My colleague Matteo Bertozzi covered snapshots very well in his blog entry and subsequent deep dive. Here I will provide only a high-level overview.

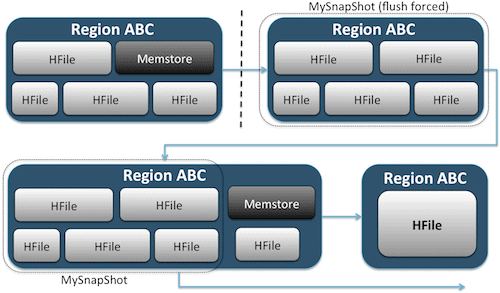

Snapshots simply capture a moment in time for your table by creating the equivalent of UNIX hard links to your table’s storage files on HDFS (Figure 1). These snapshots complete within seconds, place almost no performance overhead on the cluster, and create a minuscule data footprint. Your data is not duplicated at all but merely cataloged in small metadata files, which allows the system to roll back to that moment in time should you need to restore that snapshot.

Creating a snapshot of a table is as simple as running this command from the HBase shell:

hbase(main):001:0> snapshot 'myTable', 'MySnapShot'

After issuing this command, you’ll find some small data files located in /hbase/.snapshot/myTable (CDH4) or /hbase/.hbase-snapshots (Apache 0.94.6.1) in HDFS that comprise the necessary information to restore your snapshot. Restoring is as simple as issuing these commands from the shell:

hbase(main):002:0> disable 'myTable'

hbase(main):003:0> restore_snapshot 'MySnapShot'

hbase(main):004:0> enable 'myTable'

Note: As you can see, restoring a snapshot requires a brief outage as the table must be offline. Any data added/updated after the restored snapshot was taken will be lost.
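Because a restore discards every edit made after the snapshot was taken, it can help to capture the table's current state first. The following is a minimal sketch of that idea, assuming the `hbase` launcher is on the PATH and that `hbase shell` is fed its commands on standard input. The function name `restore_with_safety_net` and the `_pre_restore` suffix are hypothetical, chosen only for illustration.

```shell
#!/usr/bin/env bash
# Sketch: restore a snapshot, but first snapshot the table's current state
# so that edits made after the original snapshot can still be recovered.
# Assumes `hbase` is on the PATH. The function name and the "_pre_restore"
# suffix are illustrative, not HBase conventions.
restore_with_safety_net() {
  local table="$1" snapshot="$2"
  local safety="${snapshot}_pre_restore"
  # The shell commands below are the same ones shown interactively above,
  # passed to `hbase shell` on stdin.
  hbase shell <<EOF
snapshot '${table}', '${safety}'
disable '${table}'
restore_snapshot '${snapshot}'
enable '${table}'
EOF
}
```

Calling `restore_with_safety_net myTable MySnapShot` would leave a second snapshot, `MySnapShot_pre_restore`, that holds the pre-restore state. If the table cannot go offline at all, the shell's `clone_snapshot` command can instead materialize the snapshot as a new table (for example `clone_snapshot 'MySnapShot', 'myTableCopy'`, where the target table name is a placeholder).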

If your business requirements are such that you must have an offsite backup of your data, you can use the ExportSnapshot tool to duplicate a table’s data into your local HDFS cluster or a remote HDFS cluster of your choosing.
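As a rough sketch of such an offsite copy, the wrapper below invokes the `org.apache.hadoop.hbase.snapshot.ExportSnapshot` MapReduce tool with its `-snapshot`, `-copy-to`, and `-mappers` options. The function name, the default of 16 mappers, and the destination URL used in the usage note are assumptions for illustration only.

```shell
#!/usr/bin/env bash
# Sketch: copy a snapshot (its metadata plus the HFiles it references) to
# another HDFS location with the ExportSnapshot MapReduce tool.
# Assumes `hbase` is on the PATH; the function name is illustrative.
export_snapshot() {
  local snapshot="$1"        # name of an existing snapshot, e.g. MySnapShot
  local dest="$2"            # HBase root directory on the target cluster
  local mappers="${3:-16}"   # number of map tasks used for the copy
  hbase org.apache.hadoop.hbase.snapshot.ExportSnapshot \
    -snapshot "$snapshot" \
    -copy-to "$dest" \
    -mappers "$mappers"
}
```

A call might look like `export_snapshot MySnapShot hdfs://backup-nn:8020/hbase 16`, where `backup-nn` stands in for the remote NameNode. Raising the mapper count speeds up the copy at the cost of more load on both clusters.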

Snapshots are a full image of your table each time; no incremental snapshot functionality is currently available.

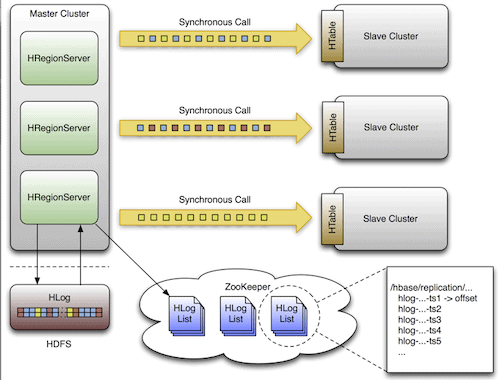

HBase replication is another very low overhead backup tool. (My colleague Himanshu Vashishtha covers replication in detail in this blog post.) In summary, replication can be defined at the column-family level, works in the background, and keeps all edits in sync between clusters in the replication chain.

Replication has three modes: master->slave, master<->master, and cyclic. This approach gives you flexibility to ingest data from any data center and ensures that it gets replicated across all copies of that table in other data centers. In the event of a catastrophic outage in one data center, client applications can be redirected to an alternate location for the data utilizing DNS tools.

Replication is a robust, fault-tolerant process that provides “eventual consistency,” meaning that at any moment in time, recent edits to a table may not be available in all replicas of that table but are guaranteed to eventually get there.

Note: For existing tables, you are required to first manually copy the source table to the destination table via one of the other means described in this post. Replication only acts on new writes/edits after you enable it.

(The replication diagram above is from Apache’s Replication page.)
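To make the setup concrete, here is a hedged sketch of enabling replication for one column family from the source cluster. It assumes HBase 0.94-era behavior: `hbase.replication` is already set to `true` in hbase-site.xml on both clusters, the destination table already exists with the same column families, and the table must be disabled while its schema is altered. The function name and all argument values are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: register a peer cluster and turn on replication for one column
# family. Assumes `hbase` is on the PATH and hbase.replication=true is set
# on both clusters. The function name is illustrative.
enable_replication() {
  local peer_id="$1"      # short id for the peer, e.g. 1
  local cluster_key="$2"  # <zk quorum>:<zk client port>:<znode parent>
  local table="$3"
  local family="$4"
  hbase shell <<EOF
add_peer '${peer_id}', "${cluster_key}"
disable '${table}'
alter '${table}', {NAME => '${family}', REPLICATION_SCOPE => '1'}
enable '${table}'
EOF
}
```

For example, `enable_replication 1 "zk1.example.com:2181:/hbase" myTable cf` would start shipping new edits in family `cf` to the cluster behind that ZooKeeper quorum. Rows written before this point still have to be copied with one of the other mechanisms.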

Export

HBase’s Export tool is a built-in HBase utility that exports data from an HBase table to plain SequenceFiles in an HDFS directory. It creates a MapReduce job that makes a series of HBase API calls to your cluster, reads each row of the specified table one by one, and writes that data to your specified HDFS directory. This tool is more performance-intensive for your cluster because it uses MapReduce and the HBase client API, but it is feature rich and supports filtering data by version or date range, thereby enabling incremental backups.

Here is a sample of the command in its simplest form:

hbase org.apache.hadoop.hbase.mapreduce.Export <tablename> <outputdir>

Once your table is exported, you can copy the resulting data files anywhere you’d like (such as offsite/off-cluster storage). You can also specify a remote HDFS cluster/directory as the output location of the command, and Export will directly write the contents to the remote cluster. Please note that this approach will introduce a network element into the write path of the export, so you should confirm your network connection to the remote cluster is reliable and fast.
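The date-range filtering mentioned earlier is what makes incremental backups possible. Export accepts optional positional arguments after the output directory: the number of versions, then a start time and an end time. The sketch below assumes cell timestamps are the default milliseconds since the epoch; the function name and the paths in the usage note are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: incremental backup with Export, limited to cells whose timestamps
# fall inside a time window. Assumes `hbase` is on the PATH and that the
# table uses default (millisecond) cell timestamps.
export_window() {
  local table="$1" outdir="$2"
  local start_s="$3" end_s="$4"   # window bounds, seconds since the epoch
  local versions="${5:-1}"        # how many versions of each cell to export
  # Export expects: <tablename> <outputdir> [<versions> [<starttime> [<endtime>]]]
  hbase org.apache.hadoop.hbase.mapreduce.Export \
    "$table" "$outdir" "$versions" \
    "$((start_s * 1000))" "$((end_s * 1000))"
}
```

A nightly job could compute `now=$(date +%s)` and call `export_window myTable "/backups/myTable/$now" "$((now - 86400))" "$now"` to capture roughly the last 24 hours of writes into a fresh directory.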

CopyTable

The CopyTable utility is covered well in Jon Hsieh’s blog entry, but I will summarize the basics here. Similar to Export, CopyTable creates a MapReduce job that uses the HBase API to read from a source table. The key difference is that CopyTable writes its output directly to a destination table in HBase, which can be local to your source cluster or on a remote cluster.

An example of the simplest form of the command is:

hbase org.apache.hadoop.hbase.mapreduce.CopyTable --new.name=testCopy test

This command will copy the contents of a table named “test” to a table in the same cluster named “testCopy.”

Note that there is a significant performance overhead to CopyTable in that it uses individual “puts” to write the data, row-by-row, into the destination table. If your table is very large, CopyTable could cause memstore on the destination region servers to fill up, requiring memstore flushes that will eventually lead to compactions, garbage collection, and so on.

In addition, you must take into account the performance implications of running MapReduce over HBase. With large data sets, that approach might not be ideal.
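One way to limit that overhead is to copy only a time window rather than the whole table. The sketch below uses CopyTable's `--starttime`, `--endtime`, and `--peer.adr` options to push a window of edits to a remote cluster. It assumes the destination table already exists there under the same name; the function name and the example values are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: copy only the cells written inside a time window to a table of the
# same name on a remote cluster. Assumes `hbase` is on the PATH and that the
# destination table already exists. The function name is illustrative.
copy_table_window() {
  local table="$1"
  local peer="$2"       # <zk quorum>:<zk client port>:<znode parent>
  local start_ms="$3"   # window start, milliseconds since the epoch
  local end_ms="$4"     # window end, milliseconds since the epoch
  hbase org.apache.hadoop.hbase.mapreduce.CopyTable \
    --starttime="$start_ms" \
    --endtime="$end_ms" \
    --peer.adr="$peer" \
    "$table"
}
```

For instance, `copy_table_window test zk1.example.com:2181:/hbase 1700000000000 1700086400000` would copy one day of edits from `test` to the cluster addressed by that ZooKeeper quorum.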

HTable API (such as a custom Java application)

As is always the case with Hadoop, you can write your own custom application that uses the public API and queries the table directly. You can do this through MapReduce jobs to take advantage of that framework’s distributed batch processing, or through any other means of your own design. However, this approach requires a deep understanding of Hadoop development, of the APIs involved, and of the performance implications of using them in your production cluster.

Offline Backup of Raw HDFS Data

The most brute-force backup mechanism — also the most disruptive one — involves the largest data footprint. You can cleanly shut down your HBase cluster and manually copy all the data and directory structures residing in /hbase in your HDFS cluster. Since HBase is down, that will ensure that all data has been persisted to HFiles in HDFS and you will get an accurate copy of the data. However, incremental backups will be nearly impossible to obtain as you will not be able to ascertain what data has changed or been added when attempting future backups.

It is also important to note that restoring your data would require an offline meta repair because the .META. table would contain potentially invalid information at the time of restore. This approach also requires a fast, reliable network to transfer the data offsite and restore it later if needed.

For these reasons, Cloudera highly discourages this approach to HBase backups.

Disaster Recovery

HBase is designed to be an extremely fault-tolerant distributed system with native redundancy, assuming hardware will fail frequently. Disaster recovery in HBase usually comes in several forms:

- Catastrophic failure at the data center level, requiring failover to a backup location

- Needing to restore a previous copy of your data due to user error or accidental deletion

- The ability to restore a point-in-time copy of your data for auditing purposes

As with any disaster recovery plan, business requirements will drive how the plan is architected and how much money to invest in it. Once you’ve established the backups of your choice, restoring takes on different forms depending on the type of recovery required:

- Failover to backup cluster

- Import Table/Restore a snapshot

- Point HBase root directory to backup location

Failover to Backup Cluster

If your backup strategy is such that you’ve replicated your HBase data to a backup cluster in a different data center, failing over is as easy as pointing your end-user applications to the backup cluster with DNS techniques.

Keep in mind, however, that if you plan to allow data to be written to your backup cluster during the outage period, you will need to make sure that data gets back to the primary cluster when the outage is over. Master-to-master or cyclic replication will handle this process automatically for you, but a master-slave replication scheme will leave your master cluster out of sync, requiring manual intervention after the outage.

Import Table/Restore a Snapshot

Along with the Export feature described previously, there is a corresponding Import tool that can take the data previously backed up by Export and restore it to an HBase table. The same performance implications that applied to Export are in play with Import as well. If your backup scheme involved taking snapshots, reverting to a previous copy of your data is as simple as restoring that snapshot.
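As an illustration of the Import side, the sketch below replays one or more Export output directories (for example a full export followed by incremental ones) into a table by running `org.apache.hadoop.hbase.mapreduce.Import` once per directory. It assumes the target table already exists with the same column families; the function name and paths are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: load one or more Export output directories back into a table.
# Assumes `hbase` is on the PATH and that the target table already exists.
# The function name is illustrative.
import_backups() {
  local table="$1"
  shift
  local dir
  # Run one Import job per directory, in the order given, and stop at the
  # first failure so a broken restore is noticed immediately.
  for dir in "$@"; do
    hbase org.apache.hadoop.hbase.mapreduce.Import "$table" "$dir" || return 1
  done
}
```

A restore of a full backup plus one increment might then be `import_backups myTable /backups/myTable/full /backups/myTable/inc1`.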

Point HBase Root Directory to Backup Location

You can also recover from a disaster by modifying the hbase.rootdir property in hbase-site.xml and pointing it to a backup copy of your /hbase directory, if you made the brute-force offline copy of the HDFS data structures. However, this is the least desirable restore option: it requires an extended outage while you copy the entire data structure back to your production cluster and, as previously mentioned, .META. could be out of sync.

Conclusion

In summary, recovering data after some form of loss or outage requires a well-designed BDR plan. I highly recommend that you thoroughly understand your business requirements for uptime, data accuracy/availability, and disaster recovery. Armed with detailed knowledge of your business requirements, you can carefully choose the tools that best meet those needs.

Selecting the tools is only the beginning, however. You should run large-scale tests of your BDR strategy to ensure that it works in your infrastructure, that it meets your business needs, and that your operations teams are thoroughly familiar with the required steps before an outage happens. Otherwise, you may find out the hard way that your BDR plan does not work.

If you’d like to comment on or discuss this topic further, use our community forum for HBase.

Further reading:

- Jon Hsieh’s Strata + Hadoop World 2012 presentation

- HBase: The Definitive Guide (Lars George)

- HBase In Action (Nick Dimiduk/Amandeep Khurana)