Introduction

This blog is intended to serve as an ethics sheet for the task of AI-assisted comic book art generation, inspired by “Ethics Sheets for AI Tasks.” AI-assisted comic book art generation is a task I proposed in a blog post I authored on behalf of my employer, Cloudera. I’m a research engineer by trade and have been involved in software creation in some way or another for most of my professional life. I’m also an amateur artist, and frankly, not a very skilled one. It’s my belief that AI practitioners like me and my colleagues have a responsibility to carefully and critically consider the implications of their work and research. Therefore, in conjunction with my blog post, I felt the need to author an ethics statement in the form of this ethics sheet.

This blog is not intended to be comprehensive, but rather intentionally brief. Indeed, one shortcoming of that brevity is that, apart from my colleagues, I didn’t engage other stakeholders, such as artists and AI ethicists, in its creation. In my mind, it addresses a critical deficiency in the proposal of a new AI task, but a more comprehensive and representative analysis would be better, especially if the task is developed further. My hope is that this document spurs a conversation among a diverse group, and that the results of that conversation may be incorporated into a document yet to be authored.

Moreover, while I endeavor to take a critical view of AI-assisted comic book art generation, I believe it’s impossible for me to write with complete objectivity. Like everyone, my socialization and lived experiences leave me with biases and blind spots. Therefore, in the interest of full disclosure, I want to briefly articulate my personal feelings on the task, so that readers have some context for the conclusions I draw, and even for which questions I choose to examine.

In short, I’m very much for AI art systems, while recognizing that not all such systems are ethical. I’m for them because I believe they have the potential to make creating art more accessible. In particular, I selfishly want creating art to be more accessible to me, but I also want to make it more accessible for others. However, if such a system creates inordinate suffering, for example by suddenly putting lots of professional artists out of work, that is clearly ethically problematic. But it is not a foregone conclusion that AI art systems will create such suffering—the devil is in the details.

Scope, motivation, and benefits

A “comic book” in this context is a story told visually through a series of images, and optionally (though often) in conjunction with written language, e.g., in speech bubbles or as captions. A comic book is also a work of art, and so considerations about systems for creating art generally also apply to systems for creating comic books.

For the purpose of this document, “comic book” should be read as covering graphic novels, comic strips, webcomics, and perhaps more. Examples include classic superhero comic books, contemporary works like Prince of Cats, and webcomics like Dinosaur Comics.

The task of AI-assisted comic book art generation is to create a series of images that will be used, perhaps with further modification, as the basis for a comic book. The benefit of this task is to lower the labor and skill burden in generating images of a desired quality. Creating images is of course only one aspect of making a comic book—typically they are made collaboratively by a writer and an illustrator. Here the focus is narrowly on the aspect of creating images.

Ethical considerations

Why automate? Who benefits?

Developing the artistic skills to render an image in a desired quality is not a trivial undertaking. It can take a long time and a lot of practice. If you take classes, attend an art school, or otherwise engage in a program of formal study in order to meet your artistic goals, it could be a significant financial burden as well. Even once a desired skill level is achieved, the act of creating an individual image may represent a significant amount of labor. Automation can potentially ease the labor burden by rendering images more quickly than an unaided human can alone, and similarly ease the skill development burden by rendering images in a desired quality that an artist may not be able to easily achieve on their own.

Moreover, creating even digital art is a physical act that some people may be capable of performing only with great effort compared to others. Automation that eases the physical burden of creating art levels the playing field. People who aspire to be professional artists but who do not meet a certain standard of productivity expected in a professional setting with conventional tools and techniques may benefit from automation that improves their productivity. This leveling is not unique to AI, but true of many productivity-enhancing technologies. The term “computer,” for example, used to refer to a person who performed mathematical calculations by hand. But the calculating potential of a room full of computers would be blown out of the water by the smartphone in your pocket, enabling people or organizations who can’t sustain a significantly sized workforce to solve the kinds of scientific and engineering problems that would have been out of their purview in the early 20th century.

Under a capitalist system of values, businesses and individuals that are consumers of art also benefit from increased productivity. Automation may provide a certain quantity of art at a lower cost, compared to producing it conventionally. For laborers, this may be threatening to their job security as the market may only sustain a certain level of production. Conscientious creators of AI systems for producing art should try to predict and assess the actual impact of their systems on relevant labor markets in order to avoid or mitigate harmful outcomes. For example, it may be preferable to create automated systems that incorporate human labor in order to produce higher quality results, as opposed to systems that dispense with human labor but produce lower quality results.

Aside from productivity, I feel there is an inherent urgency to storytelling. Stories can affect discourse, as well as inspire, educate, and otherwise move people. A storyteller may have a social agenda that is time-sensitive. Comic books are a storytelling medium, so automation that enables them to be produced more quickly allows storytellers to pursue their agenda more effectively.

Access—who can use it?

The state of the art in AI systems for artistic tasks almost universally uses deep-learning models, which presuppose a significant amount of compute resources both to create them and, once created, to keep using them to produce images. Access to these resources is decidedly not equitable, with significant disparities geographically, along class lines, and along racial and ethnic lines. Creators of systems for AI-assisted comic book art generation who wish for equitable access to their systems should make an effort to address such disparities, for example by making extra effort to reach affected populations.

Data—where does it come from?

Deep learning models are data hungry, and state-of-the-art systems like DALL·E 2 are trained with massive data sets of images scraped from the internet. The content of these data sets may introduce problematic bias into a model’s results. Moreover, collecting large data sets from the internet raises a host of ethical issues centered around privacy, consent, acknowledgement, and remuneration.

Because the data set used to train deep learning models plays such a crucial role in the quality of their performance, it is tempting for creators of AI systems that wish to create highly skilled systems to disregard ethical concerns around assembling a data set—especially if they perceive that more data will produce better results, or if they perceive an ethically problematic source to have high-quality data.

A conscientious AI system designer should pay special attention to how they collect their data. To discuss this aspect in detail is beyond the scope of this document, but perhaps a good place to start is to explore ways of assembling large, high-quality data sets other than scraping them from the internet. For AI systems for creating art, this could include commissioning data sets.

It’s also typical for artists to acknowledge their artistic influences. In extreme cases, failure to acknowledge an influence may be considered plagiarism. Most AI systems today lack the facility to indicate which elements of their training set influenced a result. Designers of AI systems for art should identify which images in their training sets strongly influenced a result using metrics of image similarity, in order to both credit the influence appropriately (perhaps monetarily) and facilitate avoiding plagiarism.
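As a rough illustration of what such attribution could look like, here is a minimal Python sketch that ranks training images by their similarity to a generated image. It assumes each image has already been reduced to a numeric feature vector by some perceptual feature extractor, which is not shown. The function names, the image identifiers, the toy vectors, and the 0.9 threshold are all hypothetical choices for illustration, and cosine similarity stands in for whatever similarity metric a real system would use.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an all-zero vector is treated as similar to nothing
    return dot / (norm_a * norm_b)


def likely_influences(
    generated: Sequence[float],
    training_features: Dict[str, Sequence[float]],
    threshold: float = 0.9,  # illustrative cutoff, not a recommended value
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Return up to top_k training images whose similarity to the
    generated image meets the threshold, most similar first."""
    scored = [
        (image_id, cosine_similarity(generated, features))
        for image_id, features in training_features.items()
    ]
    strong = [pair for pair in scored if pair[1] >= threshold]
    strong.sort(key=lambda pair: pair[1], reverse=True)
    return strong[:top_k]


# Toy data: hypothetical identifiers and made-up 3-dimensional features.
toy_training = {
    "artist_a/panel_01": [1.0, 0.0, 0.0],
    "artist_b/panel_07": [0.0, 1.0, 0.0],
    "artist_c/cover_02": [0.9, 0.1, 0.0],
}
toy_generated = [1.0, 0.05, 0.0]

for image_id, score in likely_influences(toy_generated, toy_training):
    print(f"{image_id}: similarity {score:.3f}")
```

A list like this could feed a credits page or a remuneration scheme, or flag outputs that sit suspiciously close to a single source. Similarity in feature space is only a proxy for influence, so a result like this is a starting point for review rather than proof of derivation.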

Misuse—what could go wrong?

It’s impossible to anticipate every risk or mode of misuse, but those below were suggested in my readings and by my colleagues. These risks don’t admit easy answers, but it’s important to have a clear-eyed perspective on how technology could be misused.

An end to human creativity?

AI systems for art in general promise to reduce the labor burden of creating art, and if the labor burden becomes sufficiently small, AI systems could come to dominate the production of art. Humans can envision things they have never seen before and render those novel images as art, but it’s not known to what extent deep learning systems can analogously render images that are not representative of their training set. If humans and AI are fundamentally capable of producing distinct sets of art, then one concern is that an overreliance on AI will diminish the body of possible creative works by excluding those that humans are capable of making and AI is not.

Let’s take a moment to examine the assumptions of this concern. For one, it presumes that an artwork is improved in value by a small labor burden. But the value of an artwork encompasses many different subjective and potentially incomparable modalities—monetary value, aesthetic value, and sentimental value to name a few. AI promises to impact the value significantly in some contexts—for example, a newsroom with a tight deadline that wants to include original art for an article—but not all valuations of art place a high importance on a small labor burden. Indeed the opposite may be true. On that basis alone it’s hard to imagine AI completely replacing human artists using non-AI techniques for the production of art—there will always be a population that places high value on more labor-intensive artworks even as AI devalues labor in other contexts.

As for what is possible for AI systems to produce, it is hard to answer this question precisely without reference to a specific method. Certainly machine learning systems are far more likely to produce a certain class of images by design, but I am not aware of any effort to map out that class of images from a theoretical standpoint. So it’s an open question as to whether the bodies of work that AI and humans can produce in principle are even distinct.

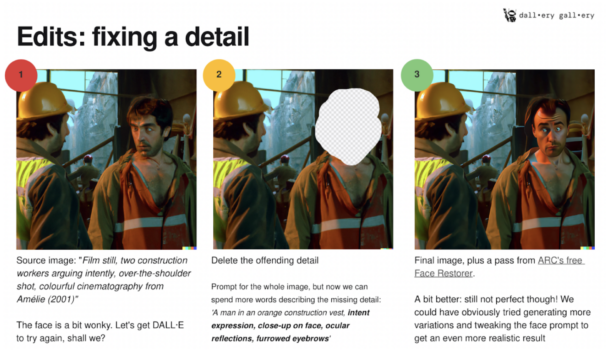

So what should a conscientious system designer take from this? Supposing that AI systems do come to dominate art production, and that AI alone is incapable of producing some class of artworks, then one potential solution is to incorporate human input into the AI system in order to expand the range of possible outputs. This mode of AI-assisted art creation is already suggested by systems like DALL·E. Check out this example from The DALL·E 2 Prompt Book:

In the above example, the user removed an undesired detail and asked DALL·E to fill it in with something else. But if an AI system proved incapable, then it’s always possible for a human to do it themselves, producing a novel artwork that synthesizes AI and human skill. A conscientious system designer should therefore anticipate the need for human input into art-producing systems.

Disinformation and propaganda

Some storytellers may have a malicious social agenda, for example to spread disinformation or inculcate harmful ideas in society. If AI-assisted systems for creating comic books help storytellers realize their agenda more easily, then they also help malicious storytellers. Of all the concerns, these are the most difficult to address, since the very things that make a system useful for non-malicious purposes generally also make it useful for malicious purposes.

The risk of being able to pass off false information as true using AI systems is not unique to comic books, but exists already in the form of deepfakes and natural language processing (NLP) systems that can create entire “news” articles with misleading information. Therefore conscientious system designers should look to the ethical discussions around deepfakes and other instances where AI is misused for guidance.

AI systems may also be used to produce malicious propaganda that does not strictly rely on being falsely considered “real,” but employs other techniques of manipulation. For example, one may produce a narrative that engenders sympathy for violent ideologies, with the goal of radicalizing readers. One possible way to mitigate this risk, one that is already employed in existing systems, is to filter the inputs and outputs of the system to preclude objectionable material. This is largely a reactive measure, though, since it’s likely that real systems will admit unknown exploits, and the notion of what counts as “objectionable” is itself not fixed.
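To make the filtering idea concrete, here is a minimal Python sketch of a wrapper that checks a prompt before generation and checks the result afterwards. Everything in it is a hypothetical stand-in: `make_prompt_filter` and `guarded_generate` are names invented for this example, the blocked terms are placeholders, and the image generator and output check are passed in as plain callables because I am not assuming any particular system’s API. Real systems likely use learned classifiers rather than a word list.

```python
import re
from typing import Callable, Iterable, Optional, TypeVar

Image = TypeVar("Image")


def make_prompt_filter(blocked_terms: Iterable[str]) -> Callable[[str], bool]:
    """Build a predicate that rejects prompts containing any blocked term
    as a whole word, ignoring case."""
    patterns = [
        re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)
        for term in blocked_terms
    ]

    def is_allowed(prompt: str) -> bool:
        return not any(pattern.search(prompt) for pattern in patterns)

    return is_allowed


def guarded_generate(
    prompt: str,
    generate: Callable[[str], Image],
    prompt_allowed: Callable[[str], bool],
    output_allowed: Callable[[Image], bool],
) -> Optional[Image]:
    """Run the generator only if the prompt passes the input filter, and
    return the result only if it passes the output filter."""
    if not prompt_allowed(prompt):
        return None  # rejected before any image is produced
    image = generate(prompt)
    if not output_allowed(image):
        return None  # produced, but withheld from the user
    return image


# Stand-ins for illustration: a fake generator that returns a string
# description, and an output check that looks for a placeholder label.
prompt_ok = make_prompt_filter(["blockedterm", "another blocked phrase"])
fake_generate = lambda prompt: "image of " + prompt
fake_output_ok = lambda image: "withheld" not in image

print(guarded_generate("a cat reading a comic", fake_generate, prompt_ok, fake_output_ok))
print(guarded_generate("a BlockedTerm poster", fake_generate, prompt_ok, fake_output_ok))
```

The word list in this sketch has to be written in advance, so anything its authors did not anticipate, including a simple misspelling, passes straight through.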

Conclusion

It’s clear that even for a lighthearted task like comic book art generation, there are many ethical considerations that a conscientious system designer needs to engage with, beyond “merely” producing a system that is highly skilled. I look forward to researching and learning more about the ethical considerations around the task of AI-assisted comic book art generation, and around AI systems for art more generally.

If you enjoyed this blog and would like to learn more about the various research projects that Cloudera Fast Forward Labs is pursuing, go check out the CFFL blog and/or sign up for the CFFL Newsletter.

1 Mohammad, Saif M. “Ethics Sheets for AI Tasks.” arXiv, March 19, 2022. https://doi.org/10.48550/arXiv.2107.01183.

2 I am not the first, although I believe my formulation of the task is novel. See Yang, Xin, Zongliang Ma, Letian Yu, Ying Cao, Baocai Yin, Xiaopeng Wei, Qiang Zhang, and Rynson W. H. Lau. “Automatic Comic Generation with Stylistic Multi-Page Layouts and Emotion-Driven Text Balloon Generation.” arXiv, January 26, 2021. https://doi.org/10.48550/arXiv.2101.11111. See also https://bleedingcool.com/comics/lungflower-graphic-novel-drawn-by-a-i-algorithm-is-first-to-publish/.

3 Not least so that people actually read it.

4 Unless your desired quality is “unskilled,” I guess.

5 Maybe it sounds counterintuitive, but the fact is that humans are still better than state-of-the-art AI systems at many tasks. For such a complex task as creating art, it is not far-fetched that superior results will be achieved when a system admits human intervention for tasks that AI struggles with. See Korteling, J. E. (Hans), G. C. van de Boer-Visschedijk, R. A. M. Blankendaal, R. C. Boonekamp, and A. R. Eikelboom. “Human- versus Artificial Intelligence.” Frontiers in Artificial Intelligence 4 (2021). https://www.frontiersin.org/articles/10.3389/frai.2021.622364.

6 Srinuan, Chalita, and Erik Bohlin. “Understanding the Digital Divide: A Literature Survey and Ways Forward.” 22nd European Regional ITS Conference, Budapest 2011: Innovative ICT Applications – Emerging Regulatory, Economic and Policy Issues. International Telecommunications Society (ITS), 2011. https://ideas.repec.org/p/zbw/itse11/52191.html.

7 IEEE Spectrum. “DALL·E 2’s Failures Are the Most Interesting Thing About It,” July 14, 2022. https://spectrum.ieee.org/openai-dall-e-2.

8 https://www.engadget.com/dall-e-generative-ai-tracking-data-privacy-160034656.html

9 Perceptual similarity is a good candidate. See Johnson, Justin, Alexandre Alahi, and Li Fei-Fei. “Perceptual Losses for Real-Time Style Transfer and Super-Resolution.” In Computer Vision – ECCV 2016, edited by Bastian Leibe, Jiri Matas, Nicu Sebe, and Max Welling, 694–711. Lecture Notes in Computer Science. Cham: Springer International Publishing, 2016. https://doi.org/10.1007/978-3-319-46475-6_43.

10 For example, when I see an oil painting I like, part of my appreciation for the artwork stems from my recognition of the skill and time it took to produce. It’s not cheapened by the fact that it may be possible to render a similar digital image with less labor.

11 However, an empirical effort has emerged in the form of prompt engineering for systems like DALL·E. See https://dallery.gallery/the-dalle-2-prompt-book/.