This is part 1 of a three-part (Part 2, Part 3) series of doing Ultra Fast OLAP Analytics with Apache Hive and Druid.

Unlock Sub-Second SQL Analytics over Terabytes of Data with Hive and Druid

Modern corporations are increasingly looking for near real time analytics and insights to make actionable decisions. To fuel this, this blog introduces Ultra fast analytics with Apache Hive and Druid.

We’ll show how the Hive/Druid integration delivers ultra-fast SQL analytics that can be consumed from your favorite BI tool to get accelerated business results. And we will show benchmark results of BI queries running in just milliseconds over a 1TB dataset.

What is Druid?

Druid is a high-performance, column-oriented, distributed data store, which is well suited for user-facing analytic applications and real-time architectures. Druid is included as a technical preview in HDP 2.6 and you can read more about Druid on our project page, or at the project website.

What Makes Druid great for Low Latency Analytics?

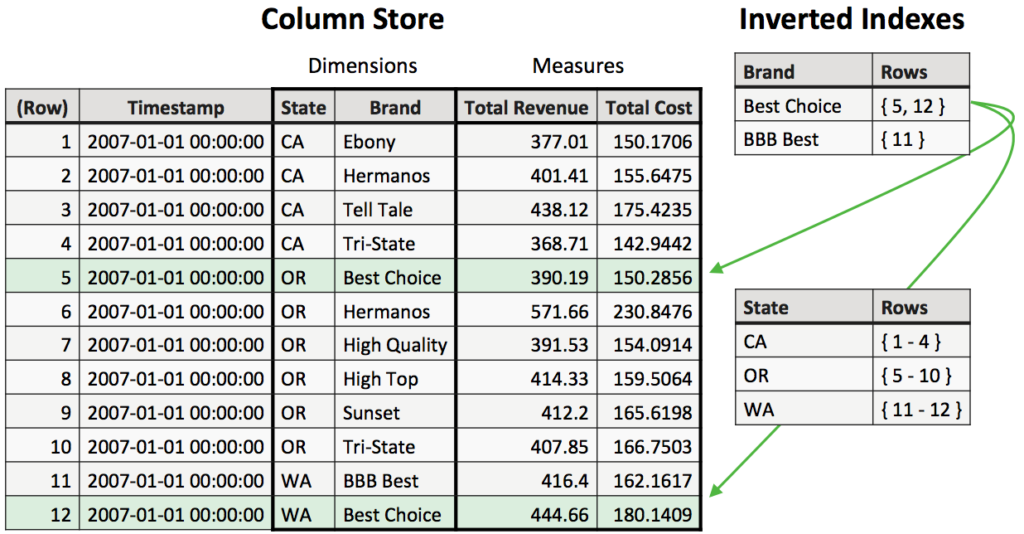

Druid is great for low latency analytics because it combines the best qualities of a column store and inverted indexing. The benefits of column stores are well known, because they minimize I/O costs for analytical queries.

Many analytical applications require drilling down to extremely fine levels of detail, e.g. “How much did we sell of Brand ‘Best Choice’ on this particular date, broken down by State”. A query like this needs 5 or 10 rows out of potentially billions of total rows. An inverted index makes it possible to load only those specific rows you need to satisfy the query.

Figure 1: Druid combines the best qualities of a column store and inverted indexing

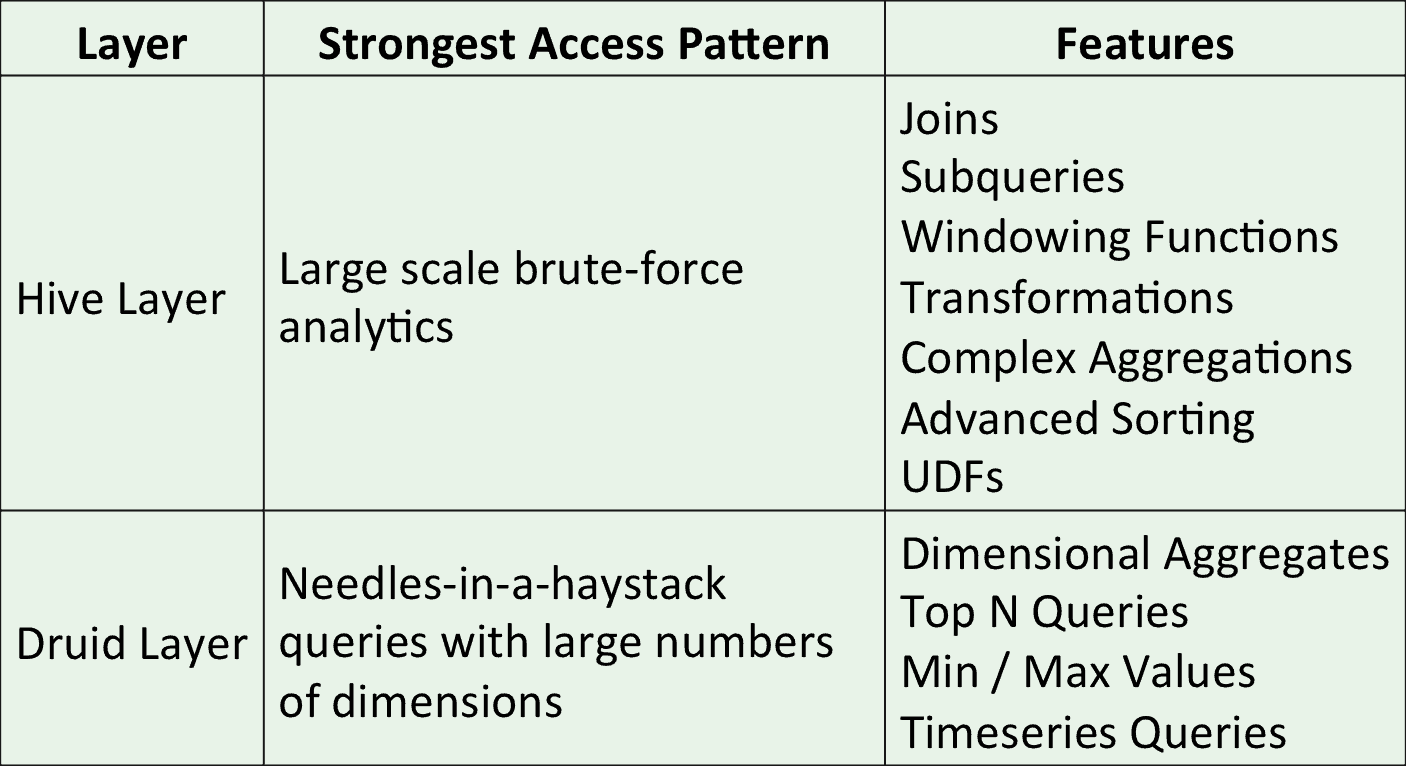

This is a huge departure from the existing crop of SQL-on-Hadoop solutions like Apache Hive or SparkSQL which rely on columnar storage to provide high-throughput aggregation, but do not deal well with finding the “needles in the haystack”. Druid was built from the ground up to be great at this: As more dimensions are added, fewer rows are needed and Druid queries run even faster.

Druid Sounds Great! Should I Use It For Everything?

Druid’s strong points are very compelling but there are many important problems that Druid does not attempt to solve. Most important of these is joins. Druid has some basic capabilities to join its internal data against small dimension tables loaded from external systems (this is called query-time lookup in Druid terms.) This ability aside, the Druid authors make it clear that large-scale join support is not a priority.

Second, Druid’s SQL native implementation is extremely new. Though progress is being made, SQL is a huge and complex language and it is unlikely that we will see comprehensive SQL analytical capabilities such as set operators and windowing functions any time soon. In addition, we can expect that Druid’s SQL implementation will not support heavy-duty joins.

Integrating Apache Hive and Druid

Although scalable joins and comprehensive SQL are not core project goals for Druid, they are core project goals for Apache Hive. Integrating these projects blends their capabilities nicely. More importantly perhaps, querying through Hive means that any analytics tool can now query Druid using mature and high-quality ODBC/JDBC drivers that Hortonworks provides.

Apache Hive supports federation between native and external data sources through its StorageHandler interfaces while Apache Calcite provides a flexible way to optimize and re-write queries to divide work among different engines based on their strengths. Substantial progress has been made, with Read Path Integration allowing you to query arbitrary Druid tables from Hive and Write Path Integration allowing you to create and update Druid tables from Hive.

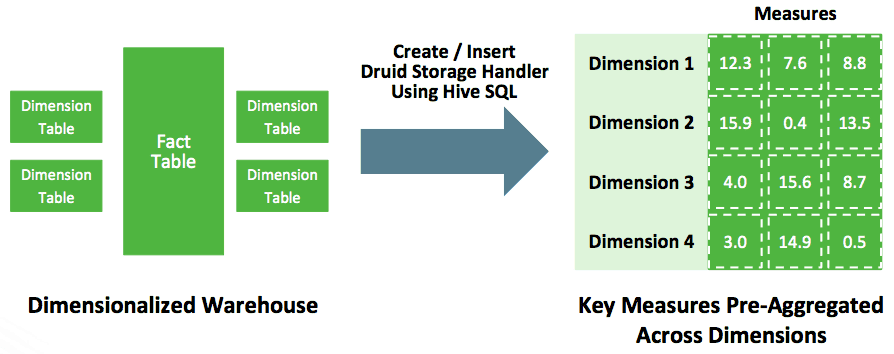

In the detailed example below we will use the Write Path Integration to create OLAP cubes based on data in Hive. After doing that, the OLAP cube appears as an ordinary Hive table and can be queried with any Hive SQL expression, thanks to the Read Path Integration.

Figure 2: Pre-Aggregate into Druid using Hive SQL

The result is that Druid provides essentially an OLAP Index over data in Hive, providing pre-aggregation and fast access to the needles-in-a-haystack, thanks to Druid’s inverted indexing.

Fast or Deep

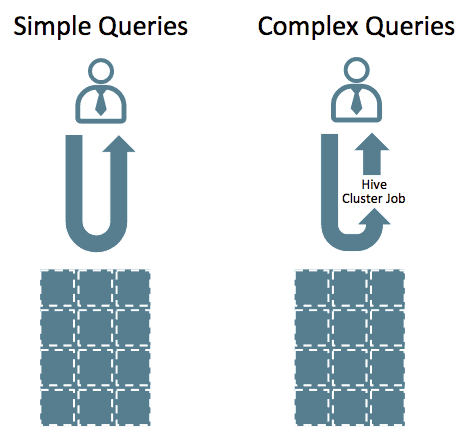

We mentioned how Apache Calcite divides work according to the engine’s strengths. Simple queries can be answered directly from Druid and benefit from Druid’s extensive OLAP optimizations. More complex operations will push work down into Druid when it can, then run the remaining bits of the query in Hive within the YARN cluster.

Figure 3: Simple queries are pushed directly to Druid

What does “simple” mean in this context? Druid is well suited for things like Top N queries, time-series queries and for simple aggregations grouped by defined dimensions. Thanks to the Calcite integration, Hive is able to cement in any gaps in the SQL-like capabilities Druid exposes.

Figure 4: Types of Analytics

Future Directions

Hive / Druid integration remains an active area. Some improvements you can expect include:

- Materialized View Navigation: You noticed above that we re-wrote the original query to target the OLAP index. This is good enough for some use cases, feel free to hook Tableau or other BI tools up to these tables because they act like regular Hive tables. This doesn’t work for transparent acceleration. In the future, we will use the Calcite layer to transparently re-write queries to use the OLAP index when possible, you can track this work in CALCITE-1731.

- Indexing Speed: Building the OLAP index from Hive is bottlenecked because there is a 1-1 relationship between segments and reducers. If you use coarse-grained segments like monthly you will experience long load times. For example, building the index for this benchmark at 1 TB takes about 3 hours or so. In the future we will support parallel writes to segments, which should reduce build times 5x or more.

- Seamless Lambda Architectures: Our goal is fast, flexible analytics capable of combining real-time and historical data. HDP 2.6 lays a solid foundation by unlocking deep SQL over data streamed to Druid, while at the same time simplifying historical data maintenance with ACID MERGE. In the future, look for better ability to perform joins between real-time and historical data, and simplified ingestion / retention into the historical layer.

Hortonworks makes Druid Easy

Druid is available as a Technical Preview in HDP 2.6. In addition to the Hive/Druid integration, Hortonworks has made it easy to deploy, configure and monitor Druid using Apache Ambari, making it easy to get started. With these simplifications, a knowledgeable Hadoop user should be able to reproduce anything in this document within a few hours with the help of the materials on GitHub. We encourage you to try Druid, in HDP or on Hortonworks Data Cloud and give us your feedback in the Hortonworks Community.