Impala’s speed now beats the fastest SQL-on-Hadoop alternatives. Test for yourself!

Since the initial beta release of Cloudera Impala more than one year ago (October 2012), we’ve been committed to regularly updating you about its evolution into the standard for running interactive SQL queries across data in Apache Hadoop and Hadoop-based enterprise data hubs. To briefly recap where we are today:

- Impala is being widely adopted.

Impala has been downloaded by people in more than 5,000 unique organizations, and Cloudera Enterprise customers from multiple industries and Fortune 100 companies have deployed it as supported infrastructure, integrated with their existing BI tools, in business-critical environments. Furthermore, MapR and Amazon Web Services (via its Elastic MapReduce service) recently announced support for Impala in their own platforms.

- Impala has demonstrated production readiness.

Impala has proved out its superior performance for interactive queries in a concurrent-workloads environment, while providing reliable resource sharing compared to batch-processing alternatives like Apache Hive.

- Impala sports an ever-increasing list of enterprise features.

New enterprise features are arriving rapidly and on schedule, including fine-grained, role-based authorization, user-defined functions (UDFs), cost-based optimization, and pre-built analytic functions.

In this update, you’ll read about some exciting performance results (based on an open decision-support benchmark derived from TPC-DS) that not only confirm Impala’s continuing superiority over the latest release of Hive (0.12) for interactive queries, but also show query performance across open Hadoop data that is faster than that of a leading proprietary analytic DBMS across data in its native columnar data store. (Yes, you read that right!)

We consider this milestone the best evidence yet that Impala is unique in its ability to surpass the performance of all SQL-on-Hadoop alternatives while delivering the value propositions of Hadoop (fractional cost, unlimited scalability, unmatched flexibility), a combination that the creators of those alternatives (including remote-query approaches and siloed DBMS/Hadoop hybrids) can never hope to achieve.

First, let’s take a look at the latest results compared to those of Hive 0.12 (aka “Stinger”).

Impala versus Hive

The results below show that Impala continues to outperform all the latest publicly available releases of Hive (the most current of which runs on YARN/MR2).

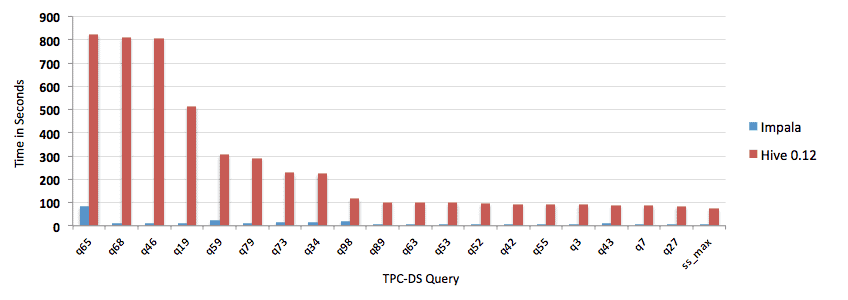

For this analysis, we ran Hive 0.12 on an ORCFile data set, versus Impala 1.1.1 running against the same data set in Parquet (the general-purpose, open source columnar storage format for Hadoop). To obtain the best possible results from Hive, we converted the TPC-DS queries into SQL-92 style joins, manually optimized the join order, and added an explicit partition predicate. (To ensure a direct comparison, the same modified queries were run against Impala.)
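As a rough illustration of what such a rewrite looks like, the Python sketch below holds one query in both forms. It is a simplified example in the style of TPC-DS, not one of the queries used in the benchmark. The table and column names are assumed from the public TPC-DS schema, `ss_sold_date_sk` is assumed to be the partition column of the fact table, and the function name and date-key bounds are hypothetical.

```python
# Illustrative only: a simplified TPC-DS-style query, not a benchmark query.
# Table and column names are assumed from the public TPC-DS schema.

# Original style: comma-separated FROM list, join conditions in WHERE.
IMPLICIT_JOIN = """
SELECT d.d_year, i.i_brand, SUM(ss.ss_ext_sales_price) AS sum_agg
FROM date_dim d, store_sales ss, item i
WHERE d.d_date_sk = ss.ss_sold_date_sk
  AND ss.ss_item_sk = i.i_item_sk
  AND d.d_moy = 12
GROUP BY d.d_year, i.i_brand
"""


def rewrite_with_partition_filter(start_sk: int, end_sk: int) -> str:
    """Return the SQL-92 form of the query above.

    Joins are written as explicit JOIN ... ON clauses (so the join order is
    fixed by hand), and an explicit range predicate is added on the column
    assumed to partition the fact table.
    """
    return f"""
SELECT d.d_year, i.i_brand, SUM(ss.ss_ext_sales_price) AS sum_agg
FROM store_sales ss
JOIN date_dim d ON ss.ss_sold_date_sk = d.d_date_sk
JOIN item i ON ss.ss_item_sk = i.i_item_sk
WHERE d.d_moy = 12
  AND ss.ss_sold_date_sk BETWEEN {start_sk} AND {end_sk}
GROUP BY d.d_year, i.i_brand
"""
```

Calling `rewrite_with_partition_filter(100, 130)` yields a query whose `WHERE` clause restricts the scan to the partitions in that (placeholder) key range, which is the point of the explicit partition predicate: the engine can skip partitions without first evaluating the join against the date dimension.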

The data set in question comprised 3TB (TPC-DS scale factor 3,000) across five typical Hadoop DataNodes (dual-socket, 8-core, 16-thread CPU; 96GB memory; 1Gbps Ethernet; 12 x 2TB disk drives). As in our previous analysis, the query set was intentionally diverse in order to ensure unbiased results: it included a variety of fairly standard joins (from one to seven in number) and aggregations, as well as complex multi-level aggregations and inline views, and was categorized into Interactive Exploration, Reports, and Deep Analytics buckets. Five runs were done and aggregated into the numbers you see below.

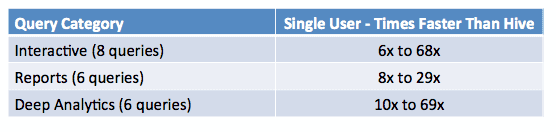

As you’ll see in the chart below, Impala outperformed Hive on every query, and by very considerable margins:

Impala versus Hive 0.12/Stinger

(Lower bars are better)

In summary, Impala outperformed Hive by 6x to 69x (and by an average of 24x) depending on the category involved:

Clearly, Impala’s performance continues to lead that of Hive. Now, let’s move on to the even more exciting results!

Impala versus DBMS-Y

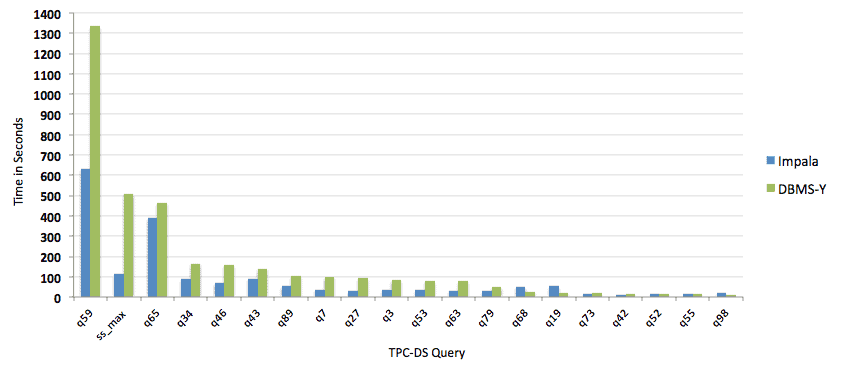

For this milestone, we tested a popular commercial analytic DBMS (referred to here as “DBMS-Y” due to a restrictive proprietary licensing agreement) against Impala 1.2.2 on a 30TB set of TPC-DS data (scale factor 30,000) — maintaining the same query complexity and unbiased pre-selection characteristics as in the Impala versus Hive analysis above. The queries were not customized for optimal Impala join order; rather, we used the same format as provided in the official TPC-DS kit.

In this experiment, we:

- Pre-selected a diverse set of 20 TPC-DS queries before obtaining any results, to ensure an unbiased comparison.

- Installed DBMS-Y (with OS filesystem) and Impala (with CDH) on precisely the same cluster (20 nodes with 96GB memory per node).

- Loaded all the data into the DBMS-Y proprietary columnar data store and compared it to data in Parquet.

- Ran the set of 20 queries on each of the two systems individually (with the other one completely shut down to prevent any interference).

- Validated that execution plans from both systems were common-sense and routine — to avoid comparing a “garbage” plan to a “good” plan.

- Removed currently unsupported analytic functions and added an explicit predicate to the WHERE clauses that expresses a partition filter on the fact table. (Window functions and dynamic partition pruning are both on the Impala near-term roadmap.)

To obtain the best possible results from DBMS-Y, we modified the original TPC-DS queries to one-month boundaries to avoid penalizing DBMS-Y for its inability to handle daily partitions on the 30TB data set. We ran the queries against DBMS-Y both with and without an explicit partition filter and chose the best result. (In a few cases, excluding the partition filter led to better DBMS-Y performance.)

As you can see from the results below, Impala outperformed DBMS-Y by up to 4.5x (and by an average of 2x), with only three queries performing more slowly on Impala:

Impala versus DBMS-Y

(Lower bars are better)

To repeat: on 17 of 20 examples, Impala queries on open Hadoop data were faster than DBMS-Y queries on its native, proprietary data – with some being nearly 5x as fast.
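For readers repeating the comparison on their own clusters, the summary figures quoted above (number of queries won, maximum speedup, average speedup) can be derived from per-query response times along the following lines. This is a minimal sketch: the function name is hypothetical, the timings are made-up placeholders rather than the measured results, and the arithmetic mean of per-query ratios is only one assumed way of computing the "average".

```python
from statistics import mean


def summarize_speedups(impala_secs, other_secs):
    """Compare per-query response times (same query order in both lists).

    A speedup above 1.0 means Impala finished that query faster.
    """
    if len(impala_secs) != len(other_secs):
        raise ValueError("both systems must report the same queries")
    speedups = [other / impala for impala, other in zip(impala_secs, other_secs)]
    return {
        "faster_on": sum(1 for s in speedups if s > 1.0),
        "total": len(speedups),
        "max_speedup": max(speedups),
        "mean_speedup": mean(speedups),
    }


# Hypothetical timings in seconds, NOT the measured benchmark results.
impala = [10.0, 20.0, 40.0, 30.0]
dbms_y = [45.0, 40.0, 20.0, 60.0]
summary = summarize_speedups(impala, dbms_y)
print(summary)
```

With these placeholder inputs, Impala is faster on three of the four queries. A geometric mean would be a reasonable alternative to the arithmetic mean when a few very large ratios dominate.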

Multiple Impala customers have reported results similar to those above against DBMS-Y and other popular commercial DBMS products. We’ve published the benchmarking tests for you to confirm for yourself here!

Experiments with Linear Scalability

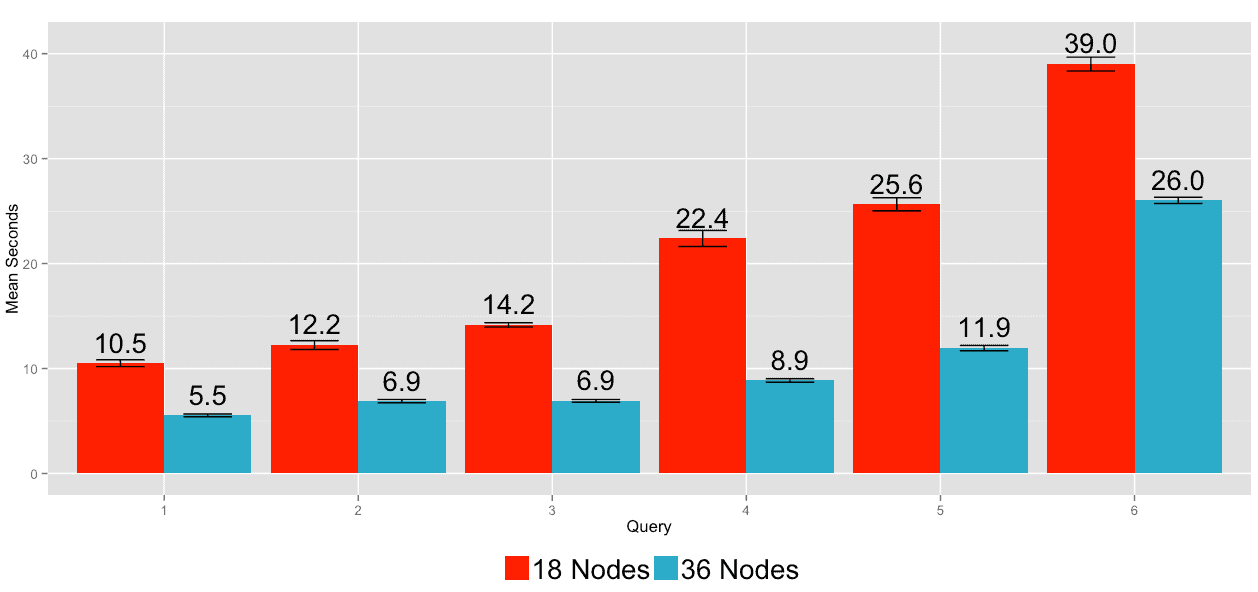

With that extremely impressive outcome, we could have called it a day — but we didn’t stop there. Next, we wanted to document what Impala users have reported with respect to Impala’s linear scalability in response time, concurrency, and data scale. For this set of tests, we used:

- Two clusters of the same hardware: one of 18 nodes and one of 36 nodes

- 15TB-scale and 30TB-scale TPC-DS data sets

- A multi-user workload of TPC-DS queries selected from the “Interactive” bucket described previously

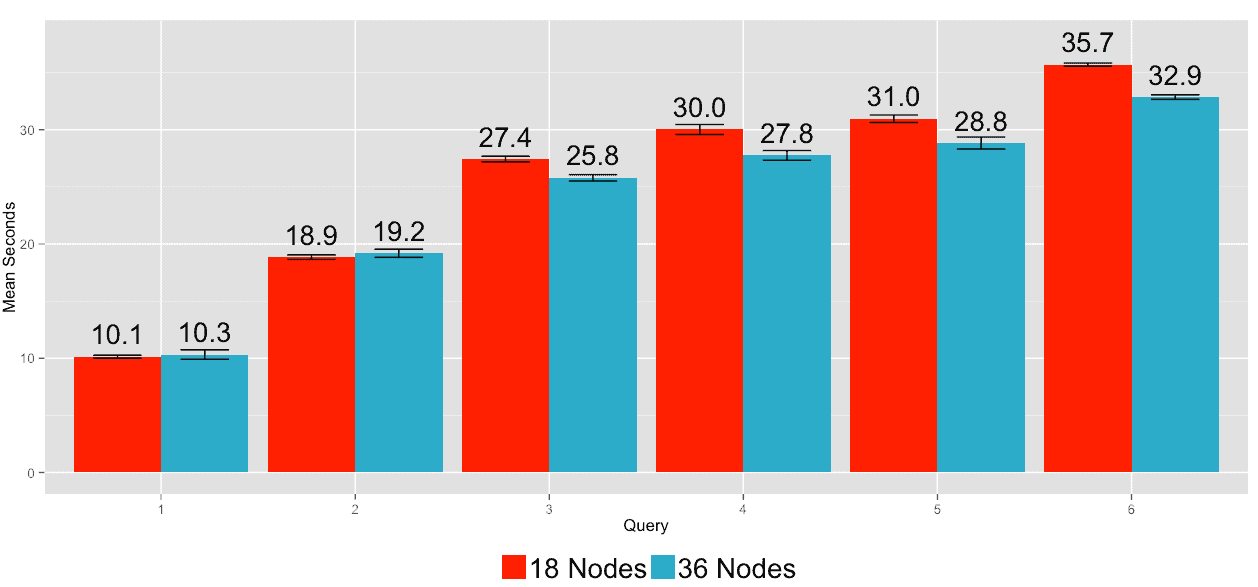

The first set of results below demonstrates that you can simply add machines to Impala to achieve the desired latency. For example, if you compare a set of queries on 15TB with 18 nodes to the same queries running against 15TB with 36 nodes, you’ll find that the response time is approximately 2x better with 2x the number of nodes:

2x the Hardware (Expectation: Cut response times in half)

Take-away: When the number of nodes in the cluster is doubled, expect the response time for queries to be reduced by half.

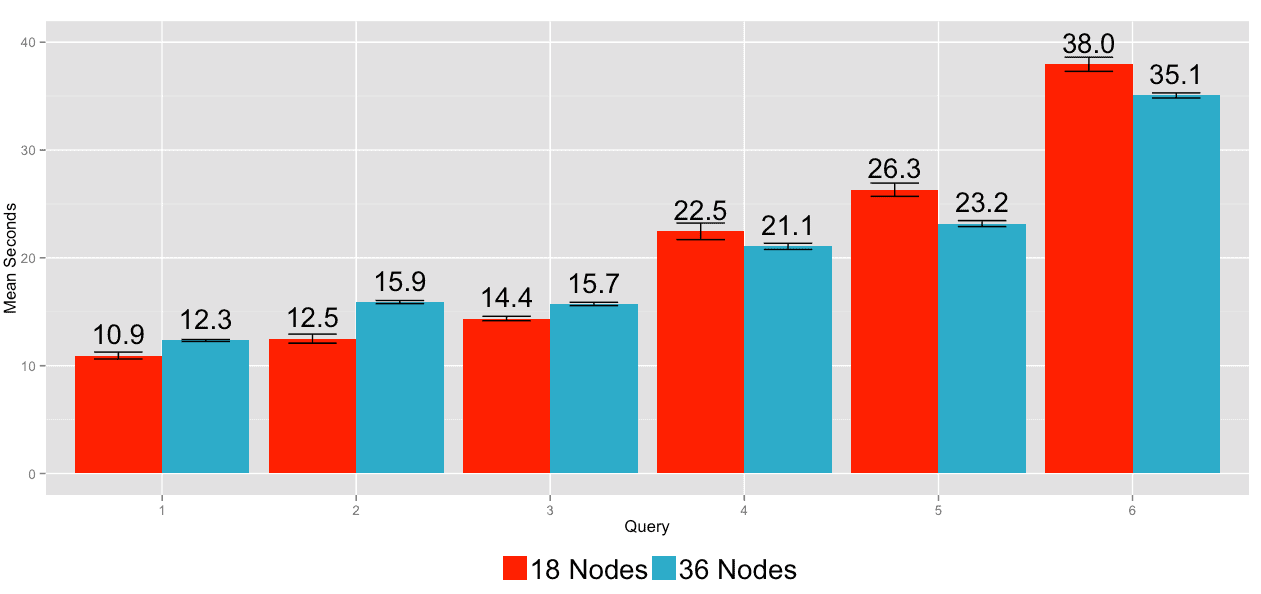

The next results demonstrate that you can simply add more machines to the cluster to scale up the number of concurrent users while maintaining latency. Here we ran the same set of queries and 15TB data set against both the 18-node and 36-node clusters, but we doubled the level of concurrency with the 36-node cluster:

2x the Users, 2x the Hardware (Expectation: Constant response times)

Take-away: Assuming constant data, expect that doubling the number of users and hardware will result in constant response time.

The final results below demonstrate that you can simply add new machines to your cluster as your Big Data sets grow and expect to achieve the same response times. In this experiment, we ran the same queries on the 18-node and 36-node clusters, but this time we ran against a 15TB data set with the 18-node cluster and a 30TB data set with the 36-node cluster:

2x the Data, 2x the Hardware (Expectation: Constant response times)

Take-away: When both the data and the number of nodes in the cluster are doubled, expect the response time for queries to remain constant.
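The three expectations can be expressed as one idealized model, sketched below in Python. It assumes that total work grows in proportion to data volume and number of concurrent users and is divided evenly across nodes. The function name and the 60-second baseline are hypothetical, and real clusters will deviate from this ideal.

```python
def expected_response_time(base_secs, nodes_ratio=1.0, data_ratio=1.0, users_ratio=1.0):
    """Idealized linear-scaling model.

    Each ratio is relative to the baseline cluster (for example, 2.0 means
    doubled). Work scales with data and users and is split across nodes.
    """
    if nodes_ratio <= 0:
        raise ValueError("nodes_ratio must be positive")
    return base_secs * data_ratio * users_ratio / nodes_ratio


baseline = 60.0  # hypothetical response time on the 18-node cluster, in seconds

# 2x the hardware: response time is expected to be cut in half.
twice_hardware = expected_response_time(baseline, nodes_ratio=2.0)

# 2x the users, 2x the hardware: response time is expected to stay constant.
twice_users = expected_response_time(baseline, nodes_ratio=2.0, users_ratio=2.0)

# 2x the data, 2x the hardware: response time is expected to stay constant.
twice_data = expected_response_time(baseline, nodes_ratio=2.0, data_ratio=2.0)

print(twice_hardware, twice_users, twice_data)
```

Comparing measured response times against this model is a quick way to check how close a given cluster comes to linear scaling.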

Based on the above, you can safely conclude that Impala’s linear scalability is well established.

Conclusion

To briefly summarize, based on our latest performance analysis:

- Impala handily outperformed the latest public release of Hive (0.12 – aka Stinger).

- On average, the performance of Impala (on the open Parquet file format for Hadoop) substantially surpassed that of DBMS-Y (on its proprietary columnar data store).

- Impala scaled linearly and predictably as users, data, or hardware increased.

These results are a milestone in an already exciting year for Impala users. Up until now, users could count on superior performance for BI-style, interactive queries over batch-processing alternatives, even when running multiple workloads simultaneously. Today, with Impala 1.2.x and Parquet, they can also count on performance similar to that of an analytic DBMS on proprietary data, while retaining all the well-known advantages of Hadoop. There’s no longer any need to compromise. Clearly, we’re entering a “golden age” for BI users and customers!

Although we’re proud of these results, we encourage you to do your own testing — whether utilizing the methodology described above, or your own approach. (It’s unfortunate that this goal is made more difficult to reach by the common use of restrictive licensing agreements that make direct benchmarking impossible.) We welcome any and all outcomes, because in the end, they can only make your Impala experience better.

When Impala 2.0 arrives in the first half of 2014, you’ll see further performance improvements, as well as the addition of popular SQL functionality like analytic window functions. Impala is well on its way to becoming one of the main access points to the Enterprise Data Hub.

The authors would like to thank Arun Singla (Engineering Manager, Performance at Cloudera) for his contributions to this project.

Justin Erickson is a director of product management at Cloudera.

Marcel Kornacker is the creator/tech lead of Impala at Cloudera.

Greg Rahn and Yanpei Chen are performance engineers at Cloudera.