The rise of Big Data has been pushing search engines to handle ever-increasing amounts of data. While building Cloudera Search, one of the things we considered in Cloudera Engineering was how we would incorporate Apache Solr with Apache Hadoop in a way that would enable near-real-time indexing and searching on really big data.

Eventually, we built Cloudera Search on Solr and Apache Lucene, both of which have been adding features at an ever-faster pace to aid in handling more and more data. However, there is no silver bullet for dealing with extremely large-scale data. A common answer in the world of search is “it depends,” and that answer applies in large-scale search as well. The right architecture for your use case depends on many things, and your choice will generally be guided by the requirements and resources for your particular project.

We wanted to make sure that one simple scaling strategy that has been commonly used in the past for large amounts of time-series data would be fairly simple to set up with Cloudera Search. By “time-series data,” I mean logs, tweets, news articles, market data, and so on — data that is continuously being generated and is easily associated with a current timestamp.

One of the keys to this strategy is a feature that Cloudera recently contributed to Solr: collection aliasing. The approach involves using collection aliases to juggle collections in a very scalable little “dance.” The architecture has some limitations, but for the right use cases, it’s an extremely scalable option. I also think there are some areas of the dance that we can still add value to, but you can already do quite a bit with the current functionality.

Inside Collection Aliasing

Collection aliasing allows you to setup a virtual collection that actually points to one or more real collections. For example, you could have an alias called “articles” that when queried actually searched “magazines” and “blogs”. Aliases meant for read operations can refer to one or more real collections. Aliases meant for updates should only map to one real collection. You may use a collection alias in any place that you would normally specify a real collection name.

One of the main benefits to aliases is that you can use the aliases from any search client applications and then switch which actual collections those aliases refer to on the fly without requiring updates to the clients.

Collection aliases are only available when using SolrCloud mode and are manipulated via a simple HTTP API:

Create

http://localhost:8983/solr/admin/collections?action=CREATEALIAS&name=AliasName&collection=ListOfCollectionsDelete

http://localhost:8983/solr/admin/collections?action=DELETEALIAS&name=AliasNameList

To be done: SOLR-4968

A Collection Composed of Collections

One way to take advantage of Collections Aliases is to make a virtual collection out of multiple real SolrCloud collections. Each real collection will act as a logical shard of the full virtual collection. As you will be putting time-series data into this virtual collection, this approach allows you to treat the collection for different time ranges differently. It also allows you to control the size of the single collection that will be accepting new data and providing near-real-time search. A smaller near-real-time collection will perform better and independently of the total size of the virtual collection.

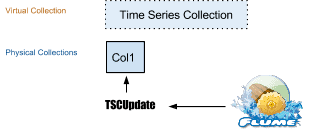

Initially, there will be just one collection. You can use a variety of naming patterns for the real collection names. For illustrative purposes, I’ve simply chosen to use ColN as the collection names.

Each of these collections should use the same set of configurations files – they are in fact, many collections acting as a single collection, so this makes sense. Using a single set of config files will ensure you can search across the collections and lets you update settings for the virtual collection in a single place.

The first collection is the one that will collect all the incoming time series data. You will likely be using something like Apache Flume with Cloudera Morphlines to load in the data, but the following technique is not specific to any particular data loading method.

You will want at least two aliases to control access to your large virtual collection: an update alias and a read alias.

Note: This technique is meant for cases where the data is coming in live – not for bulk loading existing data. You can bulk load and use this architecture with further effort, but it is beyond the scope of this post.

Update Alias

Create alias TSCUpdate -> Col1

http://localhost:8983/solr/admin/collections?action=CREATEALIAS&name=TSCUpdate&collection=Col1

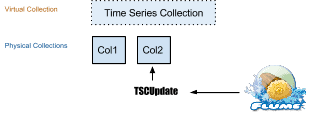

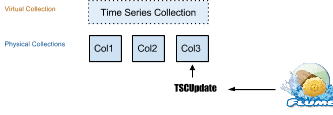

Whatever the manner you are loading in the data, you will want to create a single update alias to use as the configured collection name to send updates to. In the diagram below, I have chosen TSCUpdate as the name of the update alias.

You can use this update alias to seamlessly switch which physical collection actually receives the incoming updates. In the above diagram, Col1 is the only physical collection and so that is what the TSCUpdate points to.

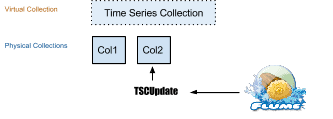

As the data comes in, it will be directed at the update alias and fill the Col1 collection for X amount of time. At time X, Col2 will be created and the update alias set to it. Col1 is now a static index with older data and Col2 accepts all the incoming data. One of the simplifying features of this strategy is that data updates are not generally expected. Rather than update existing documents, new documents just keep flowing in.

Note: To update an alias, you simply use CREATEALIAS again on an existing alias.

http://localhost:8983/solr/admin/collections?action=CREATEALIAS&name=TSCUpdate&collection=Col2

After another interval of X, Col3 will be created and the update alias TSCUpdate directed to it.

http://localhost:8983/solr/admin/collections?action=CREATEALIAS&name=TSCUpdate&collection=Col3

The newest data that is coming in will often also be the most searched data. With this strategy, you can keep the most recent data in a relatively small collection while older data is kept in static collections that can be packed onto fewer machines and/or merged together. Static data is very friendly to caching. The collections with more recent data might have higher replication than older data to support a higher throughput. You could remove extra replicas for older collections. In general, you can tune different time regions by collection for your use case. You can also explicitly and flexibly leave large time regions out of queries.

Note: It may take a while to create and delete collections when it’s time to update the update collection alias. You might want to schedule the heavy lifting to happen before your time interval is up, and then simply do the alias updating (which is very fast) on the interval.

Read Alias

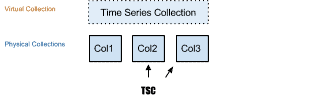

Create Alias - TSC -> Col1.

http://localhost:8983/solr/admin/collections?action=CREATEALIAS&name=TSC&collection=Col1

You will also want to use read side aliases. With read size aliases, you can explicitly leave large sections of the total data out of your queries as well as search all of the data using a single virtual collection name. You might set up a few of these aliases to allow clients to search over different time windows of the data.

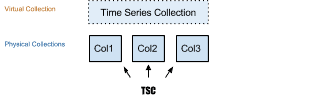

To search Time Series Collection in the figure below, you would have to search over Col1, Col2, and Col3. With our read side alias, you could easily do this by simply specifying a collection of TSC.

http://localhost:8983/solr/admin/collections?action=CREATEALIAS&name=TSC&collection=Col2,Col3

Because Col1, Col2, and Col3 will hold essentially ordered time series data, you can also search a smaller index by ignoring older data. For example, you could only search over Col2 and Col3, ignoring the data that first filled up Col1 for most queries. If you think about something like Twitter or a large news aggregator, you can imagine that most inquires will be directed at newer data, and so most searches might not need to touch data from 2005.

For queries that must go back further, you can explicitly search over Col1, Col2, and Col3.

http://localhost:8983/solr/admin/collections?action=CREATEALIAS&name=TSC&collection=Col1,Col2,Col3

The most common read side alias would probably be a rolling window that was always updated to reference the most recent N collections.

Note: If your use case is very sensitive to the boundary of the time intervals of your collections, you may have to include another collection on either end of your search window and use range queries against the timestamp field to narrow in on the small number of updates that may have missed the boundary window.

Merging

Because all collections but the current one are static, merging collections becomes relatively straightforward. You might do this so that older data is hosted on less hardware or so that you won’t have to search across so many collections. Older data might not see the same load as more recent data, and a slightly worse response time for older data might be reasonable in any case. For example, you might have a single collection for the most recent day, then weekly collections for the past year, then monthly or yearly collections for data older than that. To turn the single day collections into collections that span multiple days, you will have to periodically merge indexes in the background. You can use static indexes and collection aliasing to do this.

How to Merge

Solr has a feature for merging each shard in a collection via an HTTP API call. Typically, the merge has to use local indexes. With Cloudera Search, the command is more powerful because collections live in HDFS, and so merges can easily be done with remote collections.

You can easily move collections by creating an empty matching collection and merging into it, and you can easily combine collections by merging two into a single new matching empty collection.

We are currently missing some sugar in that you will have to manually merge each shard in a collection. I’m sure Solr and Cloudera Search will eventually offer the ability to merge by collection name and automatically figure out and execute the correct shard merges.

Some useful Java example code to look at is our GoLive feature in the Solr-MR contrib, which Cloudera is contributing to Solr. It looks up the right shard urls for a collection and individually merges each shard. MapReduceIndexerTool is one such informative class to explore.

- https://github.com/cloudera/search/blob/master/search-mr/src/main/java/org/apache/solr/hadoop/MapReduceIndexerTool.java and ZooKeeperInspector

- https://github.com/cloudera/search/blob/master/search-mr/src/main/java/org/apache/solr/hadoop/ZooKeeperInspector.java

Optimizing

Collections perform better when each shard of the collection is made up of as few index segments as possible. When you add data to a Solr index, new segments are created and merged together over time. If you have a static index, it often makes a lot of sense to merge each shard down to one segment. This is what an optimize does.

One strategy to handle this transparently is to merge the collection into a new collection that is located on separate machines and then optimize that new collection while still serving requests against the first collection. You can optimize in place, but it can be a fairly resource intensive operation. When the optimize is done, update the read alias to reference the new collection and remove the first collection.

Re-indexing

Collection aliases are also useful for re-indexing – especially when dealing with static indices. You can re-index in a new collection while serving from the existing collection. Once the re-index is complete, you simply swap in the new collection and then remove the first collection using your read side aliases.

Scheduling

This strategy begs for you to schedule collection creation and deletion, collection alias updates, and possibly an optimize and/or merging step. The scheduled logic that is kicked off can range from very simple to very complicated, but in either case, it’s probably best to contain it in it a small driver program or two. The driver(s) can be either very simple or very sophisticated depending on your needs. You may want to write the driver(s) in Java as you can reuse Solr’s Java code to read from ZooKeeper about the cluster. However, for the simplest setups, anything will do: a shell script, python, perl, whatever you have that can execute basic http requests against your cluster.

You can then use your favorite scheduler to kick off “intervals” of your driver – cron is a reliable and popular option for scheduling on UNIX/Linux, and there’s always Apache Oozie.

Conclusion

Here you have learned a simple but very effective architecture for handling huge amounts of some near-real-time data. The techniques are not necessarily specific to time series data either; that is just one of the simpler applications.

Keep an eye out for enhanced support for this strategy in the future – perhaps even the ability to accomplish something similar within a single collection using custom sharding. Finally, for someone willing to dig in a little, these techniques can also be used in a wide range of use cases beyond those described above.

Mark Miller (@heismark) is a Software Engineer at Cloudera and a Committer/PMC Member of the Solr project.