In Part 1 of this series about Apache HBase snapshots, you learned how to use the new Snapshots feature and a bit of theory behind the implementation. Now, it’s time to dive into the technical details a bit more deeply.

What is a Table?

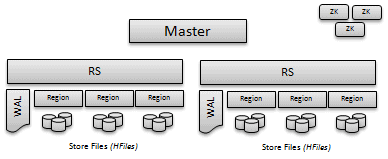

An HBase table comprises a set of metadata and a set of key/value pairs:

- Table Info: A manifest file that describes the table “settings”, like column families, compression and encoding codecs, bloom filter types, and so on.

- Regions: The table “partitions” are called regions. Each region is responsible for handling a contiguous set of key/values, and they are defined by a start key and end key.

- WALs/MemStore: Before data is written to disk, puts are written to the Write Ahead Log (WAL) and then held in memory until memory pressure triggers a flush to disk. The WAL provides an easy way to recover puts that had not been flushed to disk when a failure occurred.

- HFiles: At some point all the data is flushed to disk; an HFile is the HBase format that contains the stored key/values. HFiles are immutable but can be deleted on compaction or region deletion.

(Note: To learn more about the HBase Write Path, take a look at the HBase Write Path blog post.)
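
To make the relationship between the WAL, the MemStore, and HFiles concrete, here is a minimal toy model in Python. It is only a sketch: the `Region` class, the `flush_threshold` parameter, and the in-memory lists are assumptions made for illustration and do not mirror HBase's real classes (for example, the real WAL is not cleared this simply).

```python
class Region:
    """Toy model of one region: WAL + MemStore + immutable HFiles."""

    def __init__(self, flush_threshold=3):
        self.wal = []          # append-only log of puts not yet flushed
        self.memstore = {}     # in-memory key/value buffer
        self.hfiles = []       # each flush adds one immutable, sorted file
        self.flush_threshold = flush_threshold  # stand-in for memory pressure

    def put(self, key, value):
        self.wal.append((key, value))  # 1. log first, so the put can be recovered
        self.memstore[key] = value     # 2. then buffer it in memory
        if len(self.memstore) >= self.flush_threshold:
            self.flush()

    def flush(self):
        if not self.memstore:
            return
        # An HFile is modelled as an immutable, key-sorted tuple.
        self.hfiles.append(tuple(sorted(self.memstore.items())))
        self.memstore.clear()
        self.wal.clear()  # simplification: flushed edits need no replay

    def recover(self):
        """Rebuild the MemStore from the WAL after a simulated crash."""
        self.memstore = dict(self.wal)


region = Region(flush_threshold=3)
for k in ("row3", "row1", "row2", "row4"):
    region.put(k, "v-" + k)
```

In this sketch the first three puts trigger a flush into one sorted HFile, while the fourth put lives only in the WAL and the MemStore; calling `recover()` after losing the MemStore would rebuild it from the WAL.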

What is a Snapshot?

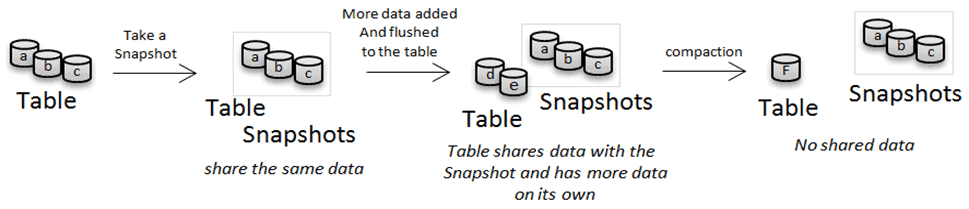

A snapshot is a set of metadata that allows the admin to get back to a previous state of the table it was taken on. A snapshot is not a copy of the table; the simplest way to think about it is as a set of operations that keep track of the metadata (table info and regions) and the data (HFiles, MemStore, WALs). No data is copied during the snapshot operation.

- Offline Snapshots: The simplest case for taking a snapshot is when a table is disabled. Disabling a table means that all the data is flushed to disk, and no writes or reads are accepted. In this case, taking a snapshot is just a matter of going through the table metadata and the HFiles on disk and keeping a reference to them. The master performs this operation, and the time required is determined mainly by the time the HDFS NameNode needs to provide the list of files.

- Online Snapshots: In most situations, however, tables are enabled and each region server is handling put and get requests. In this case the master receives the snapshot request and asks each region server to take a snapshot of the regions for which it is responsible.
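
The offline case can be sketched in a few lines of Python. This is an illustrative model only: the dictionary layout, the file names, and `take_offline_snapshot` are hypothetical and stand in for the table metadata and HFile listing that the master would read from HDFS.

```python
import copy


def take_offline_snapshot(table):
    """Record table metadata plus references (names only) to its HFiles."""
    if table["enabled"]:
        raise RuntimeError("this sketch only covers disabled tables")
    return {
        "table_info": copy.deepcopy(table["table_info"]),
        "regions": {
            name: {
                "start": r["start"],
                "end": r["end"],
                # Only the file names are kept; no file contents are read.
                "hfile_refs": tuple(r["hfiles"]),
            }
            for name, r in table["regions"].items()
        },
    }


table = {
    "enabled": False,
    "table_info": {"families": ["cf"], "compression": "NONE"},
    "regions": {
        "r1": {"start": "", "end": "m", "hfiles": ["r1/cf/f1", "r1/cf/f2"]},
        "r2": {"start": "m", "end": "", "hfiles": ["r2/cf/f3"]},
    },
}
snap = take_offline_snapshot(table)
```

Because the snapshot stores only names, its cost in this model depends on how many files must be listed, not on how much data they hold.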

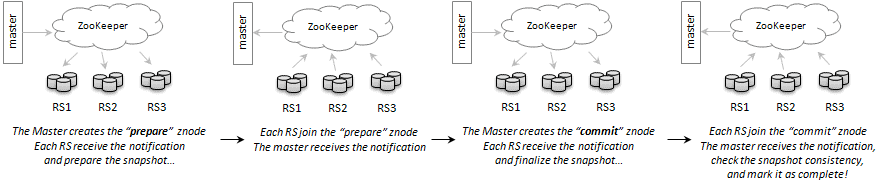

The communication between the master and region servers is done via Apache ZooKeeper using a two-phase commit-like transaction. The master creates a znode that means “prepare the snapshot”. Each region server will process the request and prepare the snapshot for the regions from the table for which it is responsible. Once they are done, they add a sub-node to the prepare-request znode with the meaning, “I’m done”.

Once all the region servers have reported back their status, the master creates another znode that means “Commit snapshot”; each region server will finalize the snapshot and report its status as before, by adding a sub-node to that znode. Once all the region servers have reported back, the master will finalize the snapshot and mark the operation as complete. If a region server reports a failure, the master creates a new znode that is used to broadcast the abort message.
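
The prepare/commit/abort flow can be sketched as follows. This is a simplified, sequential simulation: a plain dictionary stands in for ZooKeeper, the znode names (`prepare`, `commit`, `abort`) are made up for the example, and the real coordination is asynchronous.

```python
def run_snapshot(servers, znodes):
    """Coordinate a snapshot through a dict standing in for ZooKeeper.

    `servers` maps a region server name to a callable(phase) -> bool that
    reports whether that server succeeded in the given phase.
    Returns "complete" or "aborted".
    """
    for phase in ("prepare", "commit"):
        znodes[phase] = {}  # the master creates the znode for this phase
        for name, handler in servers.items():
            if handler(phase):
                znodes[phase][name] = "done"  # the server adds its sub-node
            else:
                # The master broadcasts the abort through a new znode.
                znodes["abort"] = {"failed": name}
                return "aborted"
        # Reaching this point means every server reported back for the phase.
    return "complete"


healthy = {"rs1": lambda phase: True, "rs2": lambda phase: True}
failing = {"rs1": lambda phase: True, "rs2": lambda phase: phase != "commit"}

zk_ok, zk_bad = {}, {}
result_ok = run_snapshot(healthy, zk_ok)
result_bad = run_snapshot(failing, zk_bad)
```

In the second run, `rs2` fails during the commit phase, so the sketch records an `abort` entry instead of completing the snapshot.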

Since the region server is continuously processing new requests, different use cases may require different consistency models. For example, someone may be interested in a sloppy snapshot without the new data in the MemStore, someone else may want a fully consistent snapshot that requires locking writes for a while, and so on.

For this reason, the procedure to take a snapshot on the region server is pluggable. Currently, the only implementation present is “Flush Snapshot,” which performs a flush before taking a snapshot and guarantees only row-consistency. Other procedures with different consistency policies may be implemented in the future.
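
One way to picture a pluggable procedure is a small strategy interface. The sketch below is an assumption about the shape of such a design, not HBase's actual classes: `RegionSnapshotProcedure`, `ToyRegion`, and the “sloppy” `NoFlushSnapshot` policy are hypothetical names, and only the flush-first behaviour corresponds to the “Flush Snapshot” described above.

```python
from abc import ABC, abstractmethod


class ToyRegion:
    """Minimal region: a MemStore plus named, immutable HFiles."""

    def __init__(self):
        self.memstore = {}
        self.hfiles = {}  # file name -> frozen contents

    def put(self, key, value):
        self.memstore[key] = value

    def flush(self):
        if self.memstore:
            name = "hfile-%d" % len(self.hfiles)
            self.hfiles[name] = tuple(sorted(self.memstore.items()))
            self.memstore = {}


class RegionSnapshotProcedure(ABC):
    @abstractmethod
    def snapshot(self, region):
        """Return the HFile names the snapshot should reference."""


class FlushSnapshot(RegionSnapshotProcedure):
    def snapshot(self, region):
        region.flush()  # push MemStore contents to disk first
        return tuple(sorted(region.hfiles))


class NoFlushSnapshot(RegionSnapshotProcedure):
    """Hypothetical sloppy policy: ignore whatever is still in the MemStore."""

    def snapshot(self, region):
        return tuple(sorted(region.hfiles))


region = ToyRegion()
region.put("row1", "a")
sloppy_refs = NoFlushSnapshot().snapshot(region)
flushed_refs = FlushSnapshot().snapshot(region)
```

Here the sloppy policy sees no files because the put is still in memory, while the flush-based policy references the newly written file.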

In the online case, the time required to take a snapshot is bounded by the time required by the slowest region server to perform the snapshot operation and report success back to the master. This operation is usually on the order of a few seconds.

Archiving

As we have seen, HFiles are immutable. This lets us avoid copying data during snapshot or clone operations, but HFiles are still removed during compaction and replaced by a compacted version. If a snapshot or a cloned table references one of those files, the file is moved to an “archive” location instead of being deleted. When you delete a snapshot, any files it referenced that no one else references are deleted.
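
The archiving rule can be modelled with plain sets. This is a sketch under simplifying assumptions: files are just names, `compact` and `delete_snapshot` are hypothetical helpers, and reference tracking is reduced to set membership.

```python
def compact(store, archive, referenced, old_files, new_file):
    """Replace old_files with new_file, archiving files still referenced."""
    for f in old_files:
        store.remove(f)
        if f in referenced:
            archive.add(f)  # moved to the archive instead of being deleted
    store.add(new_file)


def delete_snapshot(snapshots, archive, name, live_refs=()):
    """Drop a snapshot, then delete archived files nobody references."""
    del snapshots[name]
    still_referenced = set(live_refs).union(*snapshots.values())
    archive.intersection_update(still_referenced)


store = {"f1", "f2", "f3"}
archive = set()
snapshots = {"snap1": {"f1", "f2"}}

referenced = set().union(*snapshots.values())
compact(store, archive, referenced, ["f1", "f2", "f3"], "f4")
archive_after_compaction = set(archive)  # copy kept for inspection

delete_snapshot(snapshots, archive, "snap1")
```

After the compaction, `f1` and `f2` survive in the archive because `snap1` references them, while `f3` is gone; once `snap1` is deleted, the archive is emptied.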

Cloning and Restoring Tables

Snapshots can be seen as a backup solution: you can use them to restore or recover a table after a user or application error. The snapshot feature allows much more than simple backup-and-restore, however. After cloning a table from a snapshot, you can write a MapReduce job or a simple application to selectively merge the differences, or whatever you think is important, into production. Another use case is testing schema changes or updates to the data without having to wait hours for a table copy and without ending up with lots of data duplicated on disk.

Clone a Table from a Snapshot

When an administrator performs a clone operation, a new table is created with the table schema stored in the snapshot, pre-split using the start/end keys from the snapshot’s region info. Once the table metadata is created, instead of copying the data in, the same trick as with the snapshot is used. Since the HFiles are immutable, only a reference to each source file is created; this avoids data copies and allows the clone to be edited without impacting the source table or the snapshot. The clone operation is performed by the master.
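
A clone can be sketched as building new table metadata whose regions point at the snapshot's files. The data layout and the `clone_table` helper below are hypothetical; the `("ref", name)` and `("own", name)` tuples are just one way to tell references apart from files the clone writes itself.

```python
def clone_table(snapshot, new_name):
    """Build a new table from a snapshot without copying any HFile."""
    return {
        "name": new_name,
        "table_info": dict(snapshot["table_info"]),
        "regions": [
            {
                # Pre-split: reuse the snapshot's region boundaries.
                "start": r["start"],
                "end": r["end"],
                # References to the snapshot's files, not copies of them.
                "files": [("ref", f) for f in r["hfiles"]],
            }
            for r in snapshot["regions"]
        ],
    }


snapshot = {
    "table_info": {"families": ["cf"]},
    "regions": [
        {"start": "", "end": "m", "hfiles": ["f1", "f2"]},
        {"start": "m", "end": "", "hfiles": ["f3"]},
    ],
}

clone = clone_table(snapshot, "clone_of_t1")
# A later flush in the clone adds a file that belongs to the clone only.
clone["regions"][0]["files"].append(("own", "f10"))
```

Writes to the clone add new files on the clone's side only, so the snapshot's file list stays exactly as it was.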

Restore a Table from a Snapshot

The restore operation is similar to the clone operation; you can think about it as deleting the table and cloning it from the snapshot. The restore brings back the old data present in the snapshot, removes any data from the table that is not also in the snapshot, and reverts the table schema to that of the snapshot. Under the hood, the restore is implemented as a diff between the current table state and the snapshot: files that are not present in the snapshot are removed, and references are added for files that are in the snapshot but missing from the current state. The table descriptor is also modified to reflect the table “schema” at the moment of the snapshot. The restore operation is performed by the master, and the table must be disabled.
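
The diff at the heart of the restore maps naturally onto set operations. The following is a minimal sketch with hypothetical names (`plan_restore`, `restore`, and the table dictionary layout); files are again reduced to names.

```python
def plan_restore(current_files, snapshot_files):
    """Diff the current table state against the snapshot."""
    current, wanted = set(current_files), set(snapshot_files)
    return {
        "remove": sorted(current - wanted),         # not in the snapshot
        "add_reference": sorted(wanted - current),  # only in the snapshot
        "keep": sorted(current & wanted),
    }


def restore(table, snapshot):
    """Apply the plan and revert the schema; the table must be disabled."""
    if table["enabled"]:
        raise RuntimeError("table must be disabled before a restore")
    plan = plan_restore(table["files"], snapshot["files"])
    table["files"] = sorted(snapshot["files"])
    table["schema"] = dict(snapshot["schema"])
    return plan


table = {
    "enabled": False,
    "schema": {"families": ["cf", "cf2"]},
    "files": ["f1", "f2", "f5"],
}
snapshot = {"schema": {"families": ["cf"]}, "files": ["f1", "f2", "f3"]}

plan = restore(table, snapshot)
```

In this example `f5` is removed, a reference to `f3` is added, `f1` and `f2` are left untouched, and the schema returns to the single column family recorded in the snapshot.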

Futures

Currently, the snapshot implementation includes all the basic required functionality. As we have seen, new consistency policies for online snapshots can provide more flexibility, consistency, or performance improvements. Better file management can reduce the load on the HDFS NameNode and improve disk space management. Furthermore, metrics, Web UI (Hue), and more are on the to-do list.

Conclusion

HBase snapshots add new functionality, such as the “procedure coordination” used by online snapshots and the copy-on-write snapshot, restore, and clone operations.

Snapshots provide a faster and better alternative to handmade “backup” and “cloning” solutions based on distcp or CopyTable. All the snapshot operations (snapshot, restore, clone) don’t involve data copies, resulting in quicker snapshots of the table and savings in disk space.

For more information about how to enable and use snapshots, please refer to the HBase operational management doc.

Matteo Bertozzi is a Software Engineer on the Platform team and an HBase Committer.