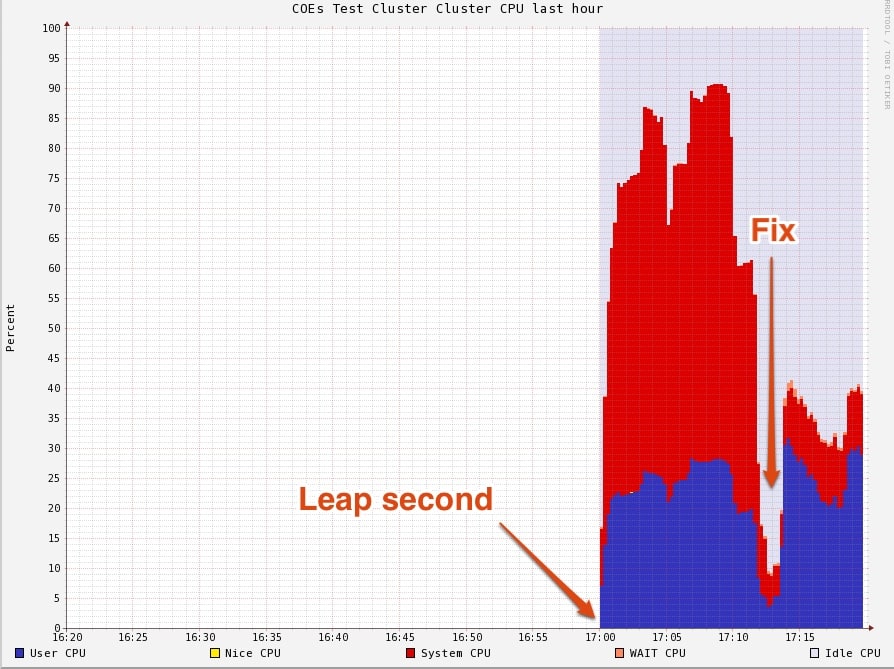

At 5 pm PDT on June 30, a leap second was added to Coordinated Universal Time (UTC). Within an hour, Cloudera Support started receiving reports of systems running at 100% CPU utilization. The Support Team worked quickly to understand and diagnose the problem and soon published a solution. Bugs due to the leap second, coupled with the Amazon Web Services outage, would make this Cloudera’s busiest support weekend to date.

Since Hadoop is written in Java and closely interoperates with the underlying OS, Cloudera Support troubleshoots not only all 17 components in the Hadoop ecosystem, but also any underlying Linux and Java bugs. Last weekend many of our customers were affected by the now infamous “leap second” bugs. Initially, many assumed that Java and Linux would process the leap second gracefully. However, we soon discovered that this wasn’t the case, and we observed several distinct issues depending on the version of Linux in use.

Background

Leap seconds are added to UTC to correct for Earth’s slowing rotation. The latest leap second was added last Saturday (6/30) at 23:59:60 UTC (5 pm PDT). Due to a missed function call in the Linux timekeeping code, the leap second was not accounted for properly. As a result, after the leap second, timers expired one second earlier than requested. Many applications use a recurring timer of 1 second or less; such timers expired immediately, causing the application to immediately try to set another timer, ad infinitum. This infinite loop led to CPU load spikes that generated 21 separate support tickets.
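To make the spin concrete, here is a toy model in shell. It is not kernel code: the function name `wakeups` and its parameters are made up for illustration, and it only counts how often a loop would wake up if every timer fired a fixed number of seconds early.

```shell
#!/bin/sh
# Toy model (hypothetical helper, not kernel code): count loop wakeups in a
# simulated time window when every timer fires "skew" seconds early.
wakeups() {
    interval=$1   # requested timer length, in seconds
    skew=$2       # seconds by which the timer fires early (0 = healthy)
    window=$3     # simulated seconds to observe
    max=$4        # safety cap so the spinning case terminates
    now=0
    count=0
    while [ "$now" -lt "$window" ] && [ "$count" -lt "$max" ]; do
        wait=$((interval - skew))
        if [ "$wait" -lt 0 ]; then
            wait=0
        fi
        now=$((now + wait))      # simulated time only advances by the real wait
        count=$((count + 1))     # one wakeup per timer expiry
    done
    echo "$count"
}

wakeups 1 0 10 1000   # healthy 1-second timer: 10 wakeups in 10 seconds
wakeups 1 1 10 1000   # 1-second timer firing 1 second early: hits the cap
wakeups 5 1 10 1000   # 5-second timer: fires early but does not spin
```

In this model, only timers of one second or less collapse to a zero wait, which is why the loop never advances in time and burns CPU until something stops it.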

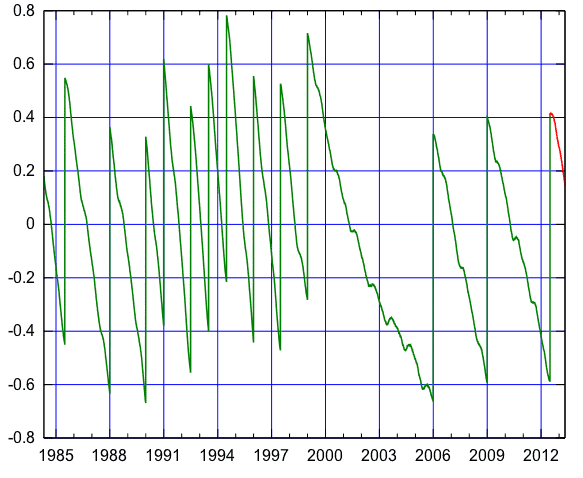

Plot showing the difference UT1−UTC in seconds. Vertical segments correspond to leap seconds. Red part of graph was prediction (future values) at the time the file was made. From http://en.wikipedia.org/wiki/Leap_second

Diagnosis

While the tickets streamed in with varied symptoms, the underlying theme was high CPU load, resulting in responsive but slow machines. Because the symptoms were so vague, we focused on accurate diagnosis in the form of three verification checks:

- If the cluster is managed by Cloudera Manager (CM), Activity Monitoring will clearly indicate a load average close to 100%.

- Check the kernel message buffer (run dmesg) and look for output confirming the leap second injection:

Clock: inserting leap second 23:59:60 UTC

- Check CPU utilization through OS reporting mechanisms:

# ps -C java -o pid,pcpu,euser,comm --sort pcpu

If the Java processes are using 100% of the CPU, the system is likely experiencing the leap second problem.
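The second and third checks can be combined into a small script. The sketch below is only one way to do it: the helper names `saw_leap_second` and `hot_pids` and the 90% threshold are assumptions chosen for illustration, not part of any Cloudera tool. Both helpers read from standard input so they can be tried on saved output before being pointed at a live host.

```shell
#!/bin/sh
# Hypothetical helper: reads kernel log text on stdin and succeeds if the
# leap second insertion message is present.
saw_leap_second() {
    grep -q 'inserting leap second'
}

# Hypothetical helper: reads "pid pcpu euser comm" rows (with a header line)
# on stdin and prints the PIDs at or above a CPU threshold (default 90).
hot_pids() {
    awk -v limit="${1:-90}" 'NR > 1 && $2 + 0 >= limit { print $1 }'
}

# On a live host the two helpers could be wired up like this:
#   dmesg | saw_leap_second && ps -C java -o pid,pcpu,euser,comm | hot_pids 90
```

A host where the first helper succeeds and the second prints one or more PIDs matches the pattern described above. Neither check is conclusive on its own.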

Remedy

The workarounds suggested in various official bug reports were just as varied as the symptoms. Weighing the time to test each workaround against the urgency of reviving our customers’ production clusters, we decided to first pilot several workarounds on our internal Hadoop clusters, rather than on our customers’. Unfortunately, we weren’t able to confirm that the workarounds repaired the issue in all cases. In internal testing, CPU usage climbed back up after we restarted applications that used external components such as MySQL or the ntpd daemon. Based on its observed effectiveness in our test environments, we opted to recommend issuing

date -s "$(date)"

to correctly set the real time clock, which immediately lowered the CPU load, followed by a rolling restart on all nodes in the Hadoop cluster in order to avoid any potential problems with other processes or kernel modules that could be affected.
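For clusters with many nodes, the clock reset can be scripted. The following is a minimal sketch under stated assumptions: the function name `reset_clocks`, the `DRY_RUN` switch, root SSH access, and the host names are all hypothetical. It defaults to printing the commands instead of running them, and it leaves the rolling restart to whatever tooling normally manages the cluster.

```shell
#!/bin/sh
# Sketch only: apply the clock reset to a list of nodes, one at a time.
# DRY_RUN defaults to 1, so nothing is changed unless it is set to 0.

# Single quotes keep $(date) unexpanded here, so it runs on the remote host.
reset_cmd='date -s "$(date)"'

reset_clocks() {
    for host in "$@"; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            # Print the command that would be run on this node.
            echo "ssh root@$host '$reset_cmd'"
        else
            # Assumes root SSH access to each node (an assumption, not a given).
            ssh "root@$host" "$reset_cmd" </dev/null
        fi
    done
}

# Example: reset_clocks node1 node2    (hypothetical host names)
```

Reviewing the dry-run output first is a cheap safeguard, since `date -s` changes system time on every node it touches.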

Summary

With both diagnosis and remedy in hand, the Support Team executed swiftly, sharing knowledge internally and leveraging internal engineering and QA teams. Rapidly disseminating information, the Support Team spoke globally, 24/7, and with one voice. Once the team had determined a reliable diagnosis and repair procedure, resolution time for customers dropped dramatically: while the first customers waited nearly three hours for a full fix, this quickly decreased to under ten minutes.