Apache HBase Replication is a way of copying data from one HBase cluster to a different and possibly distant HBase cluster. It works on the principle that the transactions from the originating cluster are pushed to another cluster. In HBase jargon, the cluster doing the push is called the master, and the one receiving the transactions is called the slave. This push of transactions is done asynchronously, and these transactions are batched in a configurable size (default is 64MB). Asynchronous mode incurs minimal overhead on the master, and shipping edits in a batch increases the overall throughput.

This blogpost discusses the possible use cases, underlying architecture and modes of HBase replication as supported in CDH4 (which is based on 0.92). We will discuss Replication configuration, bootstrapping, and fault tolerance in a follow up blogpost.

Use cases

HBase replication supports replicating data across datacenters. This can be used for disaster recovery scenarios, where we can have the slave cluster serve real time traffic in case the master site is down. Since HBase replication is not intended for automatic failover, the act of switching from the master to the slave cluster in order to start serving traffic is done by the user. Afterwards, once the master cluster is up again, one can do a CopyTable job to copy the deltas to the master cluster (by providing the start/stop timestamps) as described in the CopyTable blogpost.

Another replication use case is when a user wants to run load intensive MapReduce jobs on their HBase cluster; one can do so on the slave cluster while bearing a slight performance decrease on the master cluster.

Architecture

The underlying principle of HBase replication is to replay all the transactions from the master on the slave. This is done by replaying the WALEdits (Write Ahead Log entries) in the WALs (Write Ahead Logs) from the master cluster, as described in the next section. These WALEdits are first filtered (to check whether a specific edit is scoped for replication or not) and then shipped to the slave cluster region servers in batches of a configurable size (default is 64MB). If the WAL Reader reaches the end of the current WAL, it ships whatever WALEdits have been read up to that point. Since this is an asynchronous mode of replication, the slave cluster may lag behind the master by minutes in a write-heavy application.
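The filter-then-batch flow described above can be sketched as follows. This is a toy model, not HBase code: the `WalEdit` class, its `replicated` flag and the `batches` function are hypothetical names introduced for illustration, and only the 64MB default comes from the text.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List

BATCH_LIMIT_BYTES = 64 * 1024 * 1024  # default batch size quoted in the post


@dataclass
class WalEdit:
    """Toy stand-in for a WALEdit (hypothetical, not the HBase class)."""
    family: str
    replicated: bool   # assumed flag: is this edit scoped for replication?
    size_bytes: int


def batches(edits: Iterable[WalEdit],
            limit: int = BATCH_LIMIT_BYTES) -> Iterator[List[WalEdit]]:
    """Yield batches of replication-scoped edits.

    A batch is closed before it would exceed `limit`; a single edit larger
    than `limit` still ships on its own.
    """
    batch: List[WalEdit] = []
    used = 0
    for edit in edits:
        if not edit.replicated:
            continue  # filtered: not scoped for replication
        if batch and used + edit.size_bytes > limit:
            yield batch
            batch, used = [], 0
        batch.append(edit)
        used += edit.size_bytes
    if batch:
        yield batch  # end of the WAL reached: ship what has been read so far
```

With a small limit, for example `list(batches(edits, limit=100))`, it is easy to see how the last, partially filled batch is still shipped once the reader runs out of edits.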

WAL/WALEdits/Memstore

In HBase, all mutation operations (Puts/Deletes) are written to a memstore which belongs to a specific region, and are also appended to a write ahead log file (WAL) in the form of a WALEdit. A WALEdit is an object which represents one transaction, and can contain more than one mutation operation. Since HBase supports only single-row transactions, one WALEdit can have entries for only one row. The WALs are rolled periodically after a configured time period (default is 60 minutes), such that at any given time there is only one active WAL per regionserver.

IncrementColumnValue, a CAS (check and substitute) operation, is also converted into a Put when written to the WAL.

A memstore is an in-memory, sorted map containing the keyvalues of its region; there is one memstore per column family per region. The memstore is flushed to disk as an HFile once it reaches the configured size (default is 64MB).

Writing to WAL is optional, but it is required to avoid data loss because in case a regionserver crashes, HBase may lose all the memstores hosted on that region server. In case of regionserver failure, its WALs are replayed by a Log splitting process to restore the data stored in the WALs.

For replication to work, writes to the WAL must be enabled.
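A minimal sketch of why the WAL matters, assuming a deliberately simplified regionserver: `ToyRegionServer` and its methods are hypothetical names, the WAL is a plain list, and the memstore is an unsorted dict (a real memstore is sorted and per column family).

```python
class ToyRegionServer:
    """Toy model of the write path: every edit goes to the memstore,
    and (optionally) to the write ahead log."""

    def __init__(self):
        self.wal = []        # append-only list standing in for the WAL file
        self.memstore = {}   # in-memory map; lost when the process dies

    def put(self, row, column, value, write_to_wal=True):
        if write_to_wal:
            self.wal.append((row, column, value))
        self.memstore[(row, column)] = value

    def crash(self):
        # Simulate a regionserver failure: in-memory state is gone.
        self.memstore = {}

    def replay_wal(self):
        # Simulate recovery: rebuild the memstore from the logged edits.
        for row, column, value in self.wal:
            self.memstore[(row, column)] = value
```

In this model, an edit written with `write_to_wal=False` disappears after `crash()` followed by `replay_wal()`. It also never appears in `wal`, which is the only thing replication reads, so it would never reach a slave cluster.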

ClusterId

Every HBase cluster has a clusterId, a UUID that is auto-generated by HBase. It is kept in the underlying filesystem (usually HDFS) so that it doesn’t change between restarts, and it is also stored in the /hbase/hbaseid znode. This id is used to achieve master-master/cyclic replication.

A WAL contains entries for a number of regions which are hosted on the regionserver. The replication code reads all the keyvalues and keeps only those which are scoped for replication. It does this by looking at the column family attribute of the keyvalue, and matching it against the WALEdit’s column family map data structure. If a specific keyvalue is scoped for replication, the replication code sets the clusterId parameter of the keyvalue to the HBase cluster id.
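The scope check and clusterId stamping can be illustrated with a short sketch. The `KeyValue` class and `select_for_replication` function are hypothetical. The scope map is modeled as family name to integer, with 1 meaning "replicate" and 0 meaning "local only", which is an assumption about the encoding rather than something stated in the text.

```python
import uuid
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional


@dataclass
class KeyValue:
    """Toy keyvalue (hypothetical, not the HBase class)."""
    row: str
    family: str
    value: str
    cluster_id: Optional[uuid.UUID] = None  # unset until stamped


def select_for_replication(kvs: Iterable[KeyValue],
                           scopes: Dict[str, int],
                           local_cluster_id: uuid.UUID) -> List[KeyValue]:
    """Keep only keyvalues whose column family is scoped for replication,
    stamping each unstamped one with the local cluster id."""
    selected = []
    for kv in kvs:
        if scopes.get(kv.family, 0) != 1:
            continue  # family is not scoped for replication
        if kv.cluster_id is None:
            kv.cluster_id = local_cluster_id
        selected.append(kv)
    return selected
```

A keyvalue that already carries a cluster id (because it arrived from another cluster) keeps it, which is what the loop-avoidance logic in the replication modes relies on.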

ReplicationSource

The ReplicationSource is a Java thread in the regionserver process and is responsible for replicating WAL entries to a specific slave cluster. It has a priority queue which holds the log files that are to be replicated. As soon as a log is processed, it is removed from the queue. The priority queue uses a comparator that compares the log files based on their creation timestamp (which is appended to the log file name), so logs are processed in the order of their creation time (older logs first). If there is only one log file in the priority queue, it is not removed, as it represents the current WAL.
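The queue behavior described above can be sketched with a heap keyed on the timestamp suffix of the log name. `LogQueue` and `log_timestamp` are hypothetical names, and the assumption that the timestamp is everything after the last dot is taken from the log names shown in Figure 1.

```python
import heapq
from typing import Optional


def log_timestamp(name: str) -> int:
    """Extract the creation timestamp appended to a log file name,
    e.g. 'foo1.bar.com.1339435485769' -> 1339435485769."""
    return int(name.rsplit(".", 1)[1])


class LogQueue:
    """Toy priority queue of WAL names, oldest first."""

    def __init__(self):
        self._heap = []

    def enqueue(self, name: str) -> None:
        heapq.heappush(self._heap, (log_timestamp(name), name))

    def __len__(self) -> int:
        return len(self._heap)

    def pop_processed(self) -> Optional[str]:
        """Remove and return the oldest log once it has been processed.

        The last remaining log is never removed, since it stands for the
        WAL that is still being written.
        """
        if len(self._heap) <= 1:
            return None
        return heapq.heappop(self._heap)[1]
```

Enqueuing logs out of order and calling `pop_processed()` repeatedly returns them oldest first, and then returns `None` once only the current WAL is left.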

Role of Zookeeper

Zookeeper plays a key role in HBase Replication, where it manages/coordinates almost all the major replication activity, such as registering a slave cluster, starting/stopping replication, enqueuing new WALs, handling regionserver failover, etc. It is advisable to have a healthy Zookeeper quorum (three or more nodes) so that it stays up and running all the time. Zookeeper should be run independently (and not by HBase). The following figure shows a sample of the replication-related znode structure in the master cluster (the text after the colon is the data of the znode):

    /hbase/hbaseid: b53f7ec6-ed8a-4227-b088-fd6552bd6a68
    ….
    /hbase/rs/foo1.bar.com,40020,1339435481742:
    /hbase/rs/foo2.bar.com,40020,1339435481973:
    /hbase/rs/foo3.bar.com,40020,1339435486713:
    /hbase/replication:
    /hbase/replication/state: true
    /hbase/replication/peers:
    /hbase/replication/peers/1: zk.quorum.slave:281:/hbase
    /hbase/replication/rs:
    /hbase/replication/rs/foo1.bar.com.com,40020,1339435084846:
    /hbase/replication/rs/foo1.bar.com,40020,1339435481973/1:
    /hbase/replication/rs/foo1.bar.com,40020,1339435481973/1/foo1.bar.com.1339435485769: 1243232
    /hbase/replication/rs/foo3.bar.com,40020,1339435481742:
    /hbase/replication/rs/foo3.bar.com,40020,1339435481742/1:
    /hbase/replication/rs/foo3.bar.com,40020,1339435481742/1/foo3.bar..com.1339435485769: 1243232
    /hbase/replication/rs/foo2.bar.com,40020,1339435089550:
    /hbase/replication/rs/foo2.bar.com,40020,1339435481742/1:
    /hbase/replication/rs/foo2.bar.com,40020,1339435481742/1/foo2.bar..com.13394354343443: 1909033

Figure 1. Replication znodes hierarchy

As per Figure 1, there are three regionservers in the master cluster, namely foo[1-3].bar.com. There are three znodes related to replication:

- state: This znode tells whether replication is enabled or not. All fundamental steps (such as whether to enqueue a newly rolled log in a replication queue, or read a log file to make WALEdit shipments) check this boolean value before processing. It is set by setting the “hbase.replication” property to true in hbase-site.xml. Another way of altering its value is to use the “start/stop replication” command in the hbase shell. This will be discussed in the second blogpost.

- peers: This znode has the connected peers/slaves as children. In the figure, there is one slave with the peerId = 1, and its value is the connection string (Zookeeper_quorum_of_slave:Zookeeper_client_port:root_hbase_znode), where the Zookeeper_quorum_of_slave is a comma separated list of zookeeper servers. The peerId znode name is the same as the one given while adding a peer.

- rs: This znode contains a list of active regionservers in the master cluster. Each regionserver znode has a list of WALs that are to be replicated, and the value of each of these log znodes is either null (if the log has not been opened for replication yet), or the byte offset up to which the corresponding WAL file has been read and replicated. As there can be more than one slave cluster, and replication progress can vary across them (one may be down, for example), all the WALs are kept under a peerId znode under rs. Thus, in the above figure, the WAL znodes are under /rs/<regionserver>/1, where “1” is the peerId.
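The layout of the peers and rs znodes can be made concrete with two small helpers. Both `parse_peer` and `wal_znode` are hypothetical functions written for this post's figure, not part of any HBase or Zookeeper client API. They assume the three-part connection string and the path layout exactly as shown in Figure 1.

```python
from typing import List, NamedTuple


class PeerAddress(NamedTuple):
    quorum: List[str]    # Zookeeper servers of the slave cluster
    client_port: int     # Zookeeper client port
    znode_parent: str    # root HBase znode on the slave


def parse_peer(value: str) -> PeerAddress:
    """Split 'quorum:client_port:root_hbase_znode' into its parts.

    The quorum part is a comma separated list of Zookeeper servers.
    """
    quorum, port, parent = value.split(":")
    return PeerAddress(quorum.split(","), int(port), parent)


def wal_znode(regionserver: str, peer_id: str, wal_name: str,
              base: str = "/hbase/replication") -> str:
    """Build the znode path that tracks one WAL for one peer."""
    return f"{base}/rs/{regionserver}/{peer_id}/{wal_name}"
```

For example, `parse_peer("zk.quorum.slave:281:/hbase")` yields the single-server quorum from the figure, and `wal_znode("foo1.bar.com,40020,1339435481973", "1", "foo1.bar.com.1339435485769")` reproduces the path whose data is the byte offset 1243232.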

Replication Modes

There are three modes for setting up HBase Replication:

- Master-Slave: In this mode, replication is done in a single direction, i.e., transactions from one cluster are pushed to the other cluster. Note that the slave cluster is just like any other cluster, and can have its own tables, traffic, etc.

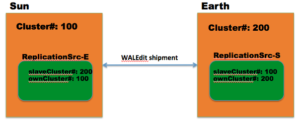

- Master-Master: In this mode, replication is sent in both directions, for different or the same tables, i.e., both clusters act as master and slave at the same time. If they are replicating the same table, one may think this leads to a never-ending loop, but this is avoided by setting the clusterId of a Mutation (Put/Delete) to the clusterId of the originating cluster. Figure 2 explains this by using two clusters, namely Sun and Earth. In Figure 2, we have two blocks representing the two HBase clusters, with clusterId 100 and 200 respectively. Each of the clusters has an instance of ReplicationSource corresponding to the slave cluster it wants to replicate to; it knows the clusterIds of both clusters.

Figure 2. Sun and Earth, two HBase clusters

Let’s say cluster#Sun receives a fresh and valid mutation M on a table and column family which is scoped for replication in both clusters. M will have the default clusterId (0L). The replication source instance ReplicationSrc-E will set the mutation’s clusterId to the originator id (100), and ship it to cluster#Earth. When cluster#Earth receives the mutation, it replays it and the mutation is saved in its WAL, as per the normal flow. The clusterId of the mutation is kept intact in this log file at cluster#Earth. The replication source instance at cluster#Earth, ReplicationSrc-S, will read the mutation and compare its clusterId with the slave cluster’s id (100, equal to cluster#Sun). Since they are equal, it will skip this WALEdit entry.
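The decision made by each replication source in this walkthrough boils down to one comparison. The sketch below uses a hypothetical `prepare_for_peer` function; the ids 100 and 200 and the default id 0 are the ones used in the text.

```python
from typing import Optional

DEFAULT_CLUSTER_ID = 0  # the "default clusterID (0L)" of a fresh mutation


def prepare_for_peer(mutation_cluster_id: int,
                     local_id: int,
                     peer_id: int) -> Optional[int]:
    """Return the cluster id to ship the mutation with, or None to skip it.

    A fresh mutation is stamped with the local cluster id; a mutation that
    already carries an id keeps it. Anything that originated at the peer
    is skipped.
    """
    if mutation_cluster_id == DEFAULT_CLUSTER_ID:
        origin = local_id
    else:
        origin = mutation_cluster_id
    if origin == peer_id:
        return None  # the peer is where this mutation came from
    return origin
```

At cluster#Sun, `prepare_for_peer(0, 100, 200)` returns 100, so M is shipped to cluster#Earth stamped with Sun's id. At cluster#Earth, `prepare_for_peer(100, 200, 100)` returns `None`, so M is not sent back.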

- Cyclic: In this mode, more than two HBase clusters take part in the replication setup, and one can have various possible combinations of master-slave and master-master setups between any two clusters. The above two modes cover those cases well; one corner case is when we have set up a cycle.

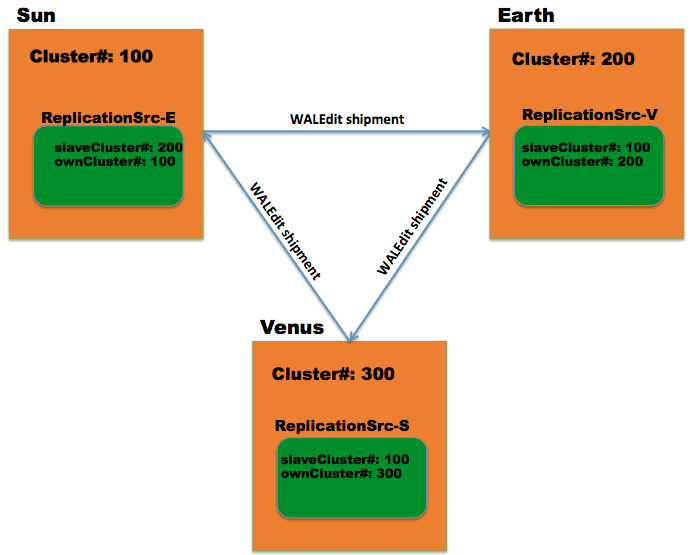

Figure 3. A circular replication set up

Figure 3 shows a circular replication setup, where cluster#Sun is replicating to cluster#Earth, cluster#Earth is replicating to cluster#Venus, and cluster#Venus is replicating to cluster#Sun.

Let’s say cluster#Sun receives a fresh mutation M that is scoped for replication across all the above clusters. It will be replicated to cluster#Earth as explained above for master-master replication. The replication source instance at cluster#Earth, ReplicationSrc-V, will read the WAL, see the mutation and replicate it to cluster#Venus. The clusterId of the mutation is kept intact (as that of cluster#Sun) at cluster#Venus. At this cluster, the replication source instance for cluster#Sun, ReplicationSrc-S, will see that the mutation has the same clusterId as its slave cluster (cluster#Sun), and will therefore skip replicating it.
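To see the whole ring at work, here is a toy simulation of the flow just described. The `replicate` function, the topology dictionaries and the id 300 for cluster#Venus are assumptions made for illustration; only the ids 100 and 200 and the skip rule come from the text.

```python
from collections import deque
from typing import Dict, List, Tuple

DEFAULT_CLUSTER_ID = 0  # id carried by a fresh, not yet replicated mutation


def replicate(topology: Dict[str, List[str]],
              ids: Dict[str, int],
              start: str,
              max_shipments: int = 1000) -> List[Tuple[str, str]]:
    """Follow one mutation through the clusters.

    topology maps a cluster name to the peers it replicates to.
    Returns the (source, destination) shipments in order.
    """
    shipments: List[Tuple[str, str]] = []
    pending = deque([(start, DEFAULT_CLUSTER_ID)])
    while pending:
        cluster, cluster_id = pending.popleft()
        # A fresh mutation is stamped with the id of the cluster it hit first.
        origin = ids[cluster] if cluster_id == DEFAULT_CLUSTER_ID else cluster_id
        for peer in topology[cluster]:
            if ids[peer] == origin:
                continue  # peer is the originator: skip, which breaks the cycle
            if len(shipments) >= max_shipments:
                raise RuntimeError("mutation is still circulating")
            shipments.append((cluster, peer))
            pending.append((peer, origin))
    return shipments
```

For the ring in Figure 3, `replicate({"Sun": ["Earth"], "Earth": ["Venus"], "Venus": ["Sun"]}, {"Sun": 100, "Earth": 200, "Venus": 300}, "Sun")` produces two shipments, Sun to Earth and Earth to Venus, and then stops. Note that in this toy model the check only compares against the originating cluster, so the `max_shipments` guard is there for topologies containing a cycle that does not pass through the originator.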

Conclusion

HBase Replication is a powerful feature which can be used in a disaster recovery scenario. It was added as a preview feature in the 0.90 release, and has been evolving along with HBase in general, with the addition of functionality such as master-master replication, cyclic replication (both added in 0.92), and enabling/disabling replication at the peer level (added in 0.94).

In the next blogpost, we will discuss various operational features such as Configuration, etc, and other gotchas with HBase Replication.

How does HBase replication behave during a TRUNCATE operation?

I tried truncating the table on the master, but it was not reflected on the slave. Does TRUNCATE need any additional configuration for replication?

Replication only operates on client write operations that go through the WAL system: basically PUT, DELETE and APPEND. Admin operations, or any modification that is implemented on the server side, such as truncate, are not replicated.