In the previous post, we talked about Kerberos authentication and explained how to configure a Kafka client to authenticate using Kerberos credentials. In this post, we will look at how to configure a Kafka client to authenticate using LDAP instead of Kerberos.

We will not cover the server-side configuration in this article, but we will refer to it where needed to make the examples clearer.

Besides the authentication-related properties, the examples shown here include other required security properties, such as the ones below. TLS is assumed to be enabled for the Apache Kafka cluster, as it should be for every secure cluster.

```properties
security.protocol=SASL_SSL
ssl.truststore.location=/opt/cloudera/security/jks/truststore.jks
```

We use kafka-console-consumer for all the examples below. All the concepts and configurations apply to other applications as well.

LDAP Authentication

LDAP stands for Lightweight Directory Access Protocol. It is an industry-standard application protocol for accessing directory services and is widely used for authentication. It’s one of the supported authentication mechanisms for Kafka on CDP.

As with Kerberos, LDAP authentication is done through the SASL framework. SASL supports various authentication mechanisms, like GSSAPI, which we covered in the previous post, and PLAIN, which is the one we will use for LDAP authentication.

The following Kafka client properties must be set to configure the Kafka client to authenticate via LDAP:

```properties
# Uses SASL/PLAIN over a TLS-encrypted connection
security.protocol=SASL_SSL
sasl.mechanism=PLAIN

# LDAP credentials
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="alice" password="supersecret1";

# TLS truststore
ssl.truststore.location=/opt/cloudera/security/jks/truststore.jks
```

The configuration above uses SASL/PLAIN for authentication and TLS (SSL) for data encryption. The choice of LDAP as the credential backend is made on the server side, in the handler configured for SASL/PLAIN, as we will see later in this post.
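The same properties apply to any Java client, not just the console tools. The sketch below builds them programmatically with `java.util.Properties`. The `LdapClientConfig` class and its `jaasQuote` helper are hypothetical, and the escaping rule it applies (backslash-escaping `\` and `"` inside the quoted JAAS values) is an assumption about how the JAAS option parser treats quoted strings, not something this post specifies.

```java
import java.util.Properties;

// Minimal sketch: builds the LDAP (SASL/PLAIN over TLS) client properties
// shown above for a Java application. Class and method names are
// hypothetical; only the property keys and values come from the post.
final class LdapClientConfig {

    // Wraps a value in double quotes for use inside sasl.jaas.config,
    // backslash-escaping backslashes and double quotes it contains
    // (assumption: the JAAS parser honours backslash escapes in quotes).
    static String jaasQuote(String value) {
        return "\"" + value.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    static Properties build(String username, String password, String truststorePath) {
        Properties props = new Properties();
        // Uses SASL/PLAIN over a TLS-encrypted connection
        props.setProperty("security.protocol", "SASL_SSL");
        props.setProperty("sasl.mechanism", "PLAIN");
        // LDAP credentials, sent to the broker through the PLAIN login module
        props.setProperty("sasl.jaas.config",
                "org.apache.kafka.common.security.plain.PlainLoginModule required"
                        + " username=" + jaasQuote(username)
                        + " password=" + jaasQuote(password) + ";");
        // Truststore used to validate the brokers' TLS certificates
        props.setProperty("ssl.truststore.location", truststorePath);
        return props;
    }

    public static void main(String[] args) {
        Properties props = build("alice", "supersecret1",
                "/opt/cloudera/security/jks/truststore.jks");
        props.forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```

With the `kafka-clients` library on the classpath, these properties would be merged with the usual consumer settings (`bootstrap.servers`, `group.id`, key and value deserializers) and passed to `new KafkaConsumer<>(props)`.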

LDAP and Kerberos

LDAP and Kerberos are distinct authentication protocols, each with its own pros and cons. Their use in a Kafka cluster, though, is not mutually exclusive: it’s a valid configuration to have both Kerberos and LDAP authentication enabled simultaneously for a cluster.

Identity directory services like Active Directory, Red Hat IPA and FreeIPA support both Kerberos and LDAP authentication, and enabling both for a Kafka cluster gives clients different options for handling authentication.

LDAP can eliminate some of the complexity of configuring Kerberos clients, such as installing Kerberos libraries on the client side and, in more restricted environments, providing network connectivity to the Kerberos KDC.

Ensure TLS/SSL encryption is being used

Unlike Kerberos, when LDAP is used for authentication, the user credentials (username and password) are sent over the network to the Kafka cluster. Hence, when LDAP authentication is enabled for Kafka, it is very important that TLS encryption is enabled and enforced for all communication between Kafka clients and brokers. This ensures that the credentials are always encrypted over the wire and cannot be intercepted in transit.
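To make the risk concrete: SASL/PLAIN, as defined in RFC 4616, carries the credentials in a single message of the form `[authzid] NUL authcid NUL passwd`, with no hashing or encryption of its own. The sketch below builds that message for the example user. It only illustrates the wire format; `SaslPlainMessage` is a hypothetical class, not Kafka's implementation.

```java
import java.nio.charset.StandardCharsets;

// Illustration of the SASL/PLAIN initial client response (RFC 4616):
//   message = [authzid] NUL authcid NUL passwd
// Hypothetical helper, not Kafka's own implementation.
final class SaslPlainMessage {

    static byte[] initialResponse(String authzid, String username, String password) {
        // With an empty authzid, the server derives the authorization
        // identity from the username
        String message = authzid + '\0' + username + '\0' + password;
        return message.getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] bytes = initialResponse("", "alice", "supersecret1");
        // Replace the NUL separators so the output is printable: the password
        // is readable by anyone who can see these bytes without TLS.
        System.out.println(new String(bytes, StandardCharsets.UTF_8).replace('\0', '|'));
        // prints "|alice|supersecret1"
    }
}
```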

All the Kafka brokers must be configured to use the SASL_SSL security protocol for their SASL endpoints. The SASL_PLAINTEXT security protocol is a no-no.
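Broker-side enforcement is what matters, but a client can also refuse to send its credentials over an unencrypted connection. The following is a minimal sketch of such a check. `PlainOverTlsGuard` is a hypothetical helper; the fallback values it uses mirror Kafka's client defaults (`PLAINTEXT` for `security.protocol`, `GSSAPI` for `sasl.mechanism`).

```java
import java.util.Properties;

// Hypothetical client-side guard: rejects any SASL/PLAIN configuration whose
// security protocol is not SASL_SSL, so LDAP credentials are never sent
// in clear text.
final class PlainOverTlsGuard {

    static void requireTlsForPlain(Properties props) {
        // Fall back to Kafka's client defaults when a property is not set
        String protocol = props.getProperty("security.protocol", "PLAINTEXT");
        String mechanism = props.getProperty("sasl.mechanism", "GSSAPI");
        if ("PLAIN".equals(mechanism) && !"SASL_SSL".equalsIgnoreCase(protocol)) {
            throw new IllegalStateException(
                    "sasl.mechanism=PLAIN requires security.protocol=SASL_SSL, got " + protocol);
        }
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        props.setProperty("security.protocol", "SASL_PLAINTEXT");
        props.setProperty("sasl.mechanism", "PLAIN");
        try {
            requireTlsForPlain(props);
        } catch (IllegalStateException e) {
            System.out.println("Refusing to connect: " + e.getMessage());
        }
    }
}
```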

Enabling LDAP authentication on the Kafka Broker

LDAP authentication is not enabled by default for the Kafka brokers when the Kafka service is installed, but it is easy to configure on the Cloudera Data Platform (CDP):

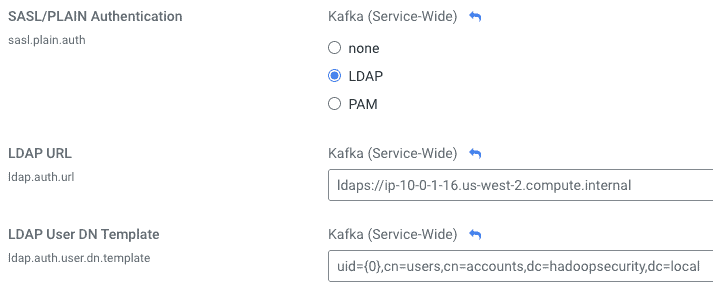

1. In Cloudera Manager, set the following properties in the Kafka service configuration to match your environment:

By selecting LDAP as the SASL/PLAIN Authentication option above, Cloudera Manager automatically configures Kafka Brokers to use the following SASL/PLAIN Callback Handler, which implements LDAP authentication:


org.apache.kafka.common.security.ldap.internals.LdapPlainServerCallbackHandler

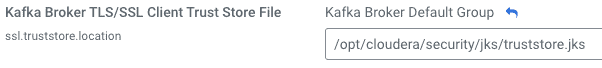

2. Kafka must connect to the LDAP server over a TLS connection (LDAPS). To ensure that the Kafka broker can trust the LDAP server certificate, add the LDAP server’s CA certificate to the truststore used by the Kafka service. You can find the location of the truststore in the following property in Cloudera Manager:

3. Run the following command (as root) to add the LDAP CA certificate to the truststore:

```shell
keytool \
  -importcert \
  -keystore /opt/cloudera/security/jks/truststore.jks \
  -storetype JKS \
  -alias ldap-ca \
  -file /path/to/ldap-ca-cert.pem
```

4. Click on Kafka > Actions > Restart to restart the Kafka service and make the changes effective.
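To confirm that the LDAP CA certificate from step 3 actually landed in the truststore, `keytool -list -keystore /opt/cloudera/security/jks/truststore.jks -alias ldap-ca` shows the entry from the command line. The Java sketch below performs the same check with `java.security.KeyStore`. `TruststoreCheck` is a hypothetical class, the defaults are the path and alias used in the `keytool` command above, and passing a `null` password assumes a JKS truststore, for which the JDK skips the integrity check but still exposes trusted certificate entries.

```java
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.security.KeyStore;

// Hypothetical helper: checks whether a JKS truststore contains a trusted
// certificate entry under the given alias (e.g. "ldap-ca").
final class TruststoreCheck {

    static boolean hasTrustedCert(Path truststore, char[] password, String alias) throws Exception {
        KeyStore ks = KeyStore.getInstance("JKS");
        try (InputStream in = Files.newInputStream(truststore)) {
            // A null password skips the integrity check for JKS stores;
            // trusted certificate entries can still be read.
            ks.load(in, password);
        }
        return ks.isCertificateEntry(alias);
    }

    public static void main(String[] args) throws Exception {
        Path path = Paths.get(args.length > 0 ? args[0] : "/opt/cloudera/security/jks/truststore.jks");
        String alias = args.length > 1 ? args[1] : "ldap-ca";
        System.out.println(alias + " trusted: " + hasTrustedCert(path, null, alias));
    }
}
```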

Limitations

The Kafka server’s LDAP callback handler uses the Apache Shiro library to map the user ID (short login name) to the user entity in the LDAP realm. It does this through a “User DN template”, which derives the user’s Distinguished Name (DN) in LDAP from the short username.

For example, as mentioned in the library documentation, “if your directory uses an LDAP uid attribute to represent usernames, the User DN for the jsmith user may look like this:

uid=jsmith,ou=users,dc=mycompany,dc=com

in which case you would set this property with the following template value:”

uid={0},ou=users,dc=mycompany,dc=com

This restricts the use of the LDAP callback handler to LDAP directories configured so that the username is a component of the Distinguished Name. This is typically true for Red Hat IPA and FreeIPA implementations. In Active Directory, however, Distinguished Names are, by default, of the form:

CN=Smith\, John,ou=users,dc=mycompany,dc=com

This DN contains the user’s full name instead of the user ID, so it cannot be derived from the short username with a simple pattern. Fortunately, Active Directory also accepts <username>@<domain> as a valid LDAP username, in addition to the Distinguished Name. If you are using Active Directory, you can set the LDAP User DN template to the following (using the mycompany.com example above):

{0}@mycompany.com
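The substitution behind a User DN template is plain string replacement: the `{0}` placeholder is replaced with the short username the client sent. The sketch below mimics that idea for both templates shown above. `UserDnTemplate` is a hypothetical class, not Shiro's API, and it does no escaping of characters that are special in DNs.

```java
// Hypothetical sketch of User DN template substitution: everything before
// "{0}" becomes a prefix, everything after it a suffix, and the username is
// placed in between. No escaping of DN special characters is performed.
final class UserDnTemplate {

    private static final String TOKEN = "{0}";
    private final String prefix;
    private final String suffix;

    UserDnTemplate(String template) {
        int i = template.indexOf(TOKEN);
        if (i < 0) {
            throw new IllegalArgumentException("template must contain " + TOKEN);
        }
        prefix = template.substring(0, i);
        suffix = template.substring(i + TOKEN.length());
    }

    String userDn(String username) {
        return prefix + username + suffix;
    }

    public static void main(String[] args) {
        // Directory where the uid attribute is part of the DN (e.g. FreeIPA)
        System.out.println(new UserDnTemplate("uid={0},ou=users,dc=mycompany,dc=com").userDn("jsmith"));
        // Active Directory, binding with <username>@<domain>
        System.out.println(new UserDnTemplate("{0}@mycompany.com").userDn("jsmith"));
    }
}
```

Running `main` prints `uid=jsmith,ou=users,dc=mycompany,dc=com` followed by `jsmith@mycompany.com`.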

If your LDAP directory doesn’t accept usernames that can be constructed as described above, consider using Kerberos authentication instead of LDAP.

Example

The following is an example using the Kafka console consumer to read from a topic using LDAP authentication:

```shell
# Complete configuration file for LDAP auth
$ cat ldap-client.properties
security.protocol=SASL_SSL
sasl.mechanism=PLAIN
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="alice" password="supersecret1";
ssl.truststore.location=/opt/cloudera/security/jks/truststore.jks

# Connect to Kafka using LDAP auth
$ kafka-console-consumer \
    --bootstrap-server host-1.example.com:9093 \
    --topic test \
    --consumer.config ./ldap-client.properties
```

NOTE: The configuration file above contains sensitive credentials. Ensure that the file permissions are set so that only the file owner can read it.
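On Linux, `chmod 600 ldap-client.properties` is enough for an existing file. If your application generates the file itself, it can create it with owner-only permissions from the start, so the credentials are never readable by other users. Below is a minimal sketch using the POSIX attribute support in `java.nio.file`. `OwnerOnlyFile` is a hypothetical helper, and it throws `UnsupportedOperationException` on file systems without POSIX permissions, such as on Windows.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.attribute.PosixFilePermission;
import java.nio.file.attribute.PosixFilePermissions;
import java.util.Set;

// Hypothetical helper: writes a client configuration file that only its
// owner can read or write (equivalent to chmod 600). POSIX file systems only.
final class OwnerOnlyFile {

    static void writeOwnerOnly(Path path, String content) throws IOException {
        Set<PosixFilePermission> ownerReadWrite = PosixFilePermissions.fromString("rw-------");
        if (Files.notExists(path)) {
            // Apply the permissions atomically when the file is created
            Files.createFile(path, PosixFilePermissions.asFileAttribute(ownerReadWrite));
        } else {
            // Tighten an existing file before writing credentials into it
            Files.setPosixFilePermissions(path, ownerReadWrite);
        }
        Files.write(path, content.getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) throws IOException {
        String config = String.join("\n",
                "security.protocol=SASL_SSL",
                "sasl.mechanism=PLAIN",
                "sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required"
                        + " username=\"alice\" password=\"supersecret1\";",
                "ssl.truststore.location=/opt/cloudera/security/jks/truststore.jks",
                "");
        writeOwnerOnly(Paths.get("ldap-client.properties"), config);
    }
}
```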

What if I don’t have a Kerberos or LDAP server?

Kerberos and LDAP authentication are industry standards and, by far, the most common authentication mechanisms we see used with Kafka across our customer base. They are not the only ones, though.

Kerberos and LDAP require the Kafka cluster to be integrated with a backend directory service, which is not available in some environments. When that’s the case, it’s still possible to set up authentication for the Kafka cluster using other methods, like mutual TLS authentication or SASL/PLAIN with a password file backend.

We will continue to explore other authentication alternatives in the next post. In the meantime, if you are interested in understanding Cloudera’s Kafka offering, download this white paper.

Hi Andre,

Is it possible to have multiple authentication methods active on the same Kafka cluster (LDAP, Kerberos, SSL, …)?

If so, is it up to the client to choose the authentication method?

Thanks

Hi, Tome,

Yes, it is possible. You can have multiple listeners, each one configured with a different security protocol. Also, a SASL listener can be configured to handle multiple authentication mechanisms (e.g. Kerberos and LDAP).

And, yes, in that case the client can choose the listener they connect to and the authentication mechanism they use.

Regards,

André