Apache Hadoop Distributed File System (HDFS) is one of the most widely used file systems in the big data world. The Apache Hadoop FileSystem interface provides integration with many other popular storage systems, such as Apache Ozone, S3, and Azure Data Lake Storage. Some HDFS users extend Namenode capacity by configuring a federation of Namenodes. Others prefer alternative file systems such as Apache Ozone or S3 for their scaling benefits. However, migrating or moving existing HDFS data to another file system is difficult and may involve application metadata updates. For example, Apache Hive stores file system paths (including their scheme) in its metastore, so migrating to a different file system requires a metadata update.

The View File System (ViewFS) was implemented to provide a unified view across federated HDFS Namenodes and thereby address the scaling problem. By design, it also supports any Hadoop compatible file system as a mount point target. However, the View File System poses two challenges:

- The View File System requires its own scheme, “viewfs”. Changing the scheme forces users to update their metadata, so we are left with the same problem.

- It requires the mount table configuration to be copied to every client node.

Solution:

Our goal is a global view file system that can reach HDFS, Ozone, S3, and other compatible file systems, while keeping the existing file system as the base file system. With this approach, users do not need to update their file system path URIs.

To solve the first problem, we extended ViewFileSystem and created a new class called ViewFileSystemOverloadScheme. This implementation lets users keep the scheme configured in fs.defaultFS, or use any new scheme name, instead of the fixed “viewfs” scheme. The mount link configuration keys and value formats are the same as in the ViewFS Guide. Many existing HDFS users, such as Hive, HBase, and Impala, use DistributedFileSystem-specific APIs. To reach those APIs, applications cast the file system object to DistributedFileSystem and then invoke the DFS-specific methods. Such a cast cannot succeed on a ViewFileSystemOverloadScheme instance, because it is not a DistributedFileSystem. To handle this, we introduced ViewDistributedFileSystem, which extends DistributedFileSystem and uses ViewFileSystemOverloadScheme internally.
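The type relationship described above can be modeled in a few lines. The following Python sketch only mirrors the class hierarchy named in this post; the real classes are Java, and `dfs_specific_call` and `call_dfs_api` are hypothetical placeholders rather than Hadoop APIs.

```python
class FileSystem:
    """Stand-in for the generic Hadoop FileSystem base class."""


class DistributedFileSystem(FileSystem):
    """Stand-in for the HDFS client class with DFS-only methods."""

    def dfs_specific_call(self) -> str:
        # Hypothetical placeholder for any DFS-specific API.
        return "handled by DistributedFileSystem"


class ViewFileSystem(FileSystem):
    """Stand-in for the mount-table based view file system."""


class ViewFileSystemOverloadScheme(ViewFileSystem):
    """View file system that keeps the existing scheme (for example hdfs)."""


class ViewDistributedFileSystem(DistributedFileSystem):
    """Is-a DistributedFileSystem, but uses the overload-scheme view internally."""

    def __init__(self) -> None:
        self.view_fs = ViewFileSystemOverloadScheme()


def call_dfs_api(fs: FileSystem) -> str:
    # Mimics an application that casts to DistributedFileSystem before calling DFS APIs.
    if not isinstance(fs, DistributedFileSystem):
        raise TypeError(f"{type(fs).__name__} is not a DistributedFileSystem")
    return fs.dfs_specific_call()
```

In this model, `call_dfs_api(ViewDistributedFileSystem())` succeeds, while `call_dfs_api(ViewFileSystemOverloadScheme())` raises a `TypeError`, which is the situation ViewDistributedFileSystem is meant to avoid.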

To solve the second problem, we implemented central mount table reading in ViewFileSystemOverloadScheme. Administrators no longer need to copy the mount-table.xml configuration file to thousands of client nodes. Instead, they can keep the mount table configuration file in a Hadoop compatible file system. Keeping the configuration in a central place makes administrators’ lives easier, because they can update the mount table in a single location.

To understand how to enable the ViewDistributedFileSystem and the mount point configurations, please read the community article.

Most of this work was done under the umbrella JIRA HDFS-15289.

Use Case: Moving Apache Hive partition data to Apache Ozone and adding a mount point in Apache Hadoop HDFS

In this use case, we explore Hive usage with the ViewDistributedFileSystem. The idea is to move the data of one or a few large partitions from HDFS to the Ozone cluster. We want to add mount points, relative to the base cluster (hdfs://ns1), that point to the Ozone cluster (o3fs://bucket.volume.ozone1/). The base cluster (hdfs://ns1) is added as the linkFallback.

    <property>
      <name>fs.viewfs.mounttable.ns1.linkFallback</name>
      <value>hdfs://ns1/</value>
    </property>

Application calls go to the target file systems based on the mount link paths. If no mount link matches the given path, the call is delegated to the linkFallback target (hdfs://ns1). Typically, we keep the same folder structure on the Ozone cluster (the target file system) as well.
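The delegation rule above can be illustrated with a small Python model. This is a simplified sketch, not Hadoop's implementation: `resolve`, `BASE`, and `LINKS` are names made up for illustration, and the model assumes that a mount link matches on whole path components and that the remainder of the path is appended to the link target.

```python
from typing import Dict, Optional


def resolve(path: str, links: Dict[str, str], fallback: Optional[str]) -> str:
    """Return the target URI for a path: a matching mount link wins, else the fallback."""
    parts = [p for p in path.split("/") if p]
    # Try the longest component-wise prefix first, then shorter ones.
    for end in range(len(parts), 0, -1):
        prefix = "/" + "/".join(parts[:end])
        if prefix in links:
            rest = "/".join(parts[end:])
            target = links[prefix].rstrip("/")
            return target + ("/" + rest if rest else "")
    if fallback is None:
        raise FileNotFoundError(path)
    # No mount link matched: delegate to the linkFallback target.
    return fallback.rstrip("/") + "/" + "/".join(parts)


# Example values taken from this post's use case.
BASE = "/warehouse/tablespace/managed/hive/sales_by_state"
LINKS = {BASE + "/state=CA": "o3fs://bucket.volume.ozone1" + BASE + "/state=CA"}

print(resolve(BASE + "/state=CA/base_0000001", LINKS, "hdfs://ns1/"))
print(resolve(BASE + "/state=NY", LINKS, "hdfs://ns1/"))
```

With these inputs, the first path resolves under the o3fs target and the second falls back to hdfs://ns1, because only “state=CA” has a mount link.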

Let’s say you have an existing HDFS cluster (hdfs://ns1) used by Hive and many other components. Let’s call it the base cluster.

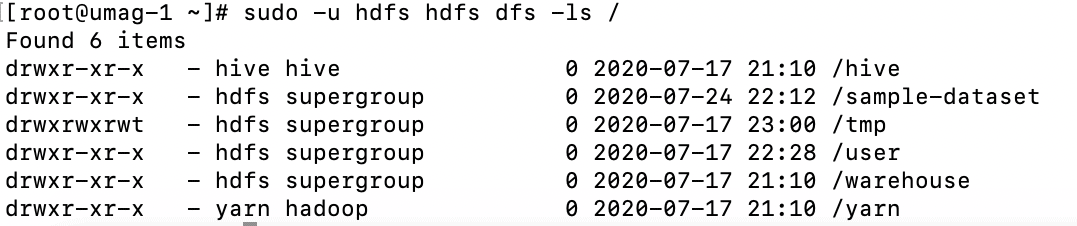

Base Cluster: hdfs://ns1 contains the following folders at root.

Copy the sample-sales.csv file into the HDFS location hdfs://ns1/sample-dataset. The file contains the following records:

| order_id | user_id | item | state |
| --- | --- | --- | --- |
| 1234 | u1 | iphone7 | CA |
| 2345 | u1 | ipad | CA |
| 3456 | u2 | desktop | NY |
| 4567 | u3 | iphone6 | TX |
| 1345 | u1 | iphone7 | CA |
| 2456 | u4 | ipad | NY |
| 3567 | u5 | iphone11 | CA |
| 4678 | u6 | iphone | TX |
| 5891 | u3 | iphonexr | CA |
| 5899 | u3 | iphonexr | CA |
| 6990 | u2 | iphone11 | CA |

Now let’s create an external Hive table called “sales” over this sample-sales.csv location.

    CREATE EXTERNAL TABLE sales (order_id BIGINT, user_id STRING, item STRING, state STRING)
    ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
    STORED AS TEXTFILE
    LOCATION '/sample-dataset/';

The next step is to create a managed table partitioned by “state”. The following query creates the managed partitioned table called “sales_by_state”.

    CREATE TABLE sales_by_state (order_id BIGINT, user_id STRING, item STRING)
    PARTITIONED BY (state STRING)
    ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
    STORED AS TEXTFILE;

Once the “sales_by_state” table is created, we can load the data into it, partitioned by state, with the following query.

    INSERT OVERWRITE TABLE sales_by_state PARTITION(state)
    SELECT order_id, user_id, item, state FROM sales;

Now, if you look at the “sales_by_state” table folder in HDFS, where Hive maintains its managed table folders, you will notice a separate partition folder for each state.

    [root@umag-1 ~]# sudo -u hdfs hdfs dfs -ls /warehouse/tablespace/managed/hive/sales_by_state
    Found 3 items
    drwxr-xr-x   - hive hive          0 2020-07-24 23:04 /warehouse/tablespace/managed/hive/sales_by_state/state=CA
    drwxr-xr-x   - hive hive          0 2020-07-24 23:04 /warehouse/tablespace/managed/hive/sales_by_state/state=NY
    drwxr-xr-x   - hive hive          0 2020-07-24 23:04 /warehouse/tablespace/managed/hive/sales_by_state/state=TX
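To see why exactly three partition folders appear, the grouping can be reproduced outside Hive. The Python sketch below models only the grouping by the “state” column and the `state=<value>` folder naming; it does not model how Hive actually writes files inside each partition. `SAMPLE` and `partition_by_state` are illustrative names, and the rows are the sample records from this post.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, List, Tuple

# The sample-sales.csv records used in this post (order_id, user_id, item, state).
SAMPLE = """\
1234,u1,iphone7,CA
2345,u1,ipad,CA
3456,u2,desktop,NY
4567,u3,iphone6,TX
1345,u1,iphone7,CA
2456,u4,ipad,NY
3567,u5,iphone11,CA
4678,u6,iphone,TX
5891,u3,iphonexr,CA
5899,u3,iphonexr,CA
6990,u2,iphone11,CA
"""

TABLE_DIR = "/warehouse/tablespace/managed/hive/sales_by_state"


def partition_by_state(text: str, table_dir: str) -> Dict[str, List[Tuple[int, str, str]]]:
    """Group rows by state, keyed by the partition folder each row would land in."""
    groups: Dict[str, List[Tuple[int, str, str]]] = defaultdict(list)
    for order_id, user_id, item, state in csv.reader(io.StringIO(text)):
        # The partition column becomes part of the folder name, not of the stored row.
        groups[f"{table_dir}/state={state}"].append((int(order_id), user_id, item))
    return dict(groups)


partitions = partition_by_state(SAMPLE, TABLE_DIR)
for folder in sorted(partitions):
    print(folder, len(partitions[folder]))
```

The row count per folder gives a quick way to spot the largest partition, which is the kind of candidate this use case moves to Ozone.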

The key point of this use case is that we can choose some of the partition folders, move them to the remote cluster (i.e., Apache Ozone), and add a mount point. For example, if a partition holds a large amount of data, or the base cluster is approaching its storage capacity, the user may decide to move some of the partition data to Apache Ozone.

Let’s walk through the following steps to understand the idea better.

In this example, I want to move the partition “state=CA” to the Ozone cluster.

The first step is to create the same folder structure as the partition in the Ozone cluster.

    [root@umag-1 ~]# sudo -u hdfs hdfs dfs -mkdir o3fs://bucket.volume.ozone1/warehouse/tablespace/managed/hive/sales_by_state/

Note: You may have to create all parent folders recursively (for example, with the -p option of -mkdir). It is not mandatory to create the same folder structure, but we recommend it, because it makes the copied data easier to identify and manage.

The second step is to copy the data from the HDFS cluster to the Apache Ozone cluster. We can use the distcp tool for this.

    [root@umag-1 ~]# sudo -u hdfs hadoop distcp /warehouse/tablespace/managed/hive/sales_by_state/state=CA o3fs://bucket.volume.ozone1/warehouse/tablespace/managed/hive/sales_by_state/

At this point, the data should be copied into Apache Ozone.

    [root@umag-1 ~]# sudo -u hdfs hdfs dfs -ls o3fs://bucket.volume.ozone1/warehouse/tablespace/managed/hive/sales_by_state/state=CA
    Found 1 items
    drwxrwxrwx   - hdfs hdfs          0 2020-07-24 23:22 o3fs://bucket.volume.ozone1/warehouse/tablespace/managed/hive/sales_by_state/state=CA/base_0000001

Since the data is already copied into the Ozone cluster, let’s delete the partition data from the base HDFS cluster.

    [root@umag-1 ~]# sudo -u hdfs hdfs dfs -rmr /warehouse/tablespace/managed/hive/sales_by_state/state=CA

After deleting the partition, only two partitions’ data remains in the base HDFS cluster:

    [root@umag-1 ~]# sudo -u hdfs hdfs dfs -ls /warehouse/tablespace/managed/hive/sales_by_state/
    Found 2 items
    drwxr-xr-x   - hive hive          0 2020-07-24 23:04 /warehouse/tablespace/managed/hive/sales_by_state/state=NY
    drwxr-xr-x   - hive hive          0 2020-07-24 23:04 /warehouse/tablespace/managed/hive/sales_by_state/state=TX

The third step is to add the mount point configuration for the partition “state=CA” folder as follows:

    <property>
      <name>fs.viewfs.mounttable.ns1.link./warehouse/tablespace/managed/hive/sales_by_state/state=CA</name>
      <value>o3fs://bucket.volume.ozone1/warehouse/tablespace/managed/hive/sales_by_state/state=CA</value>
    </property>
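When several partitions are moved, writing these entries by hand becomes error-prone. The Python sketch below renders a mount link property in the same key format as the entry above (`fs.viewfs.mounttable.<mount table name>.link.<path>`). `mount_link_property` is a hypothetical helper written for this post, not part of Hadoop.

```python
from xml.sax.saxutils import escape


def mount_link_property(mount_table: str, link_path: str, target_uri: str) -> str:
    """Render one mount link as a Hadoop-style <property> element."""
    # Key format follows the mount link entry shown in this post.
    name = f"fs.viewfs.mounttable.{mount_table}.link.{link_path}"
    return (
        "<property>\n"
        f"  <name>{escape(name)}</name>\n"
        f"  <value>{escape(target_uri)}</value>\n"
        "</property>"
    )


# Example: the state=CA partition from this use case.
PARTITION = "/warehouse/tablespace/managed/hive/sales_by_state/state=CA"
print(mount_link_property("ns1", PARTITION, "o3fs://bucket.volume.ozone1" + PARTITION))
```

Generating the link path and the target from the same `PARTITION` value keeps the two in step when the folder structure is mirrored on the target, as recommended earlier.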

Note: After adding the mount link, restart the processes so that the mount links are loaded. We plan to load the configuration automatically, but that is future work.

From now on, any call from the application (Hive) with the path /warehouse/tablespace/managed/hive/sales_by_state/state=CA is always resolved to o3fs://bucket.volume.ozone1/warehouse/tablespace/managed/hive/sales_by_state/state=CA, so the call is delegated to the Ozone cluster.

The delete step above can also be postponed until after the mount links are added, or until you are confident in the mount-linked data. Note that once you add a mount link, the base cluster’s “state=CA” folder is shadowed by the mount link target, because the mount link is attached to that folder. To delete this shadowed folder from the base cluster, we need to access it in a way that bypasses the mount file system:

    [root@umag-1 ~]# sudo -u hdfs hdfs dfs -D fs.hdfs.impl=org.apache.hadoop.hdfs.DistributedFileSystem -rmr /warehouse/tablespace/managed/hive/sales_by_state/state=CA

After the mount links are loaded, an -ls command on the root shows a merged view of the mount links and the fallback base cluster tree. In this case, the linkFallback base cluster root is hdfs://ns1/.

So, “state=CA” is also listed when we run -ls on its parent.

    [root@umag-1 ~]# sudo -u hdfs hdfs dfs -ls /warehouse/tablespace/managed/hive/sales_by_state/
    Found 3 items
    drwxrwxrwx   - hdfs hdfs          0 2020-07-24 23:22 /warehouse/tablespace/managed/hive/sales_by_state/state=CA
    drwxr-xr-x   - hive hive          0 2020-07-24 23:04 /warehouse/tablespace/managed/hive/sales_by_state/state=NY
    drwxr-xr-x   - hive hive          0 2020-07-24 23:04 /warehouse/tablespace/managed/hive/sales_by_state/state=TX
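The merged listing can be pictured as the union of two sources: the children the fallback cluster reports for the folder, and the mount links that sit directly or indirectly under it. The Python sketch below is a simplified model of that idea only, not the actual listing logic in Hadoop; `merged_children` and its parameters are illustrative names.

```python
from typing import Iterable, List


def merged_children(parent: str, links: Iterable[str], fallback_children: Iterable[str]) -> List[str]:
    """Union of fallback child names and mount links located under the parent folder."""
    parent = parent.rstrip("/") or "/"
    prefix = "/" if parent == "/" else parent + "/"
    names = set(fallback_children)
    for link in links:
        if link.startswith(prefix):
            # Keep only the first path component below the parent.
            names.add(link[len(prefix):].split("/", 1)[0])
    return sorted(prefix + name for name in names)


# Example: state=CA exists only as a mount link, NY and TX only in the fallback cluster.
TABLE = "/warehouse/tablespace/managed/hive/sales_by_state"
for child in merged_children(TABLE + "/", [TABLE + "/state=CA"], ["state=NY", "state=TX"]):
    print(child)
```

Because the names are collected in a set, a folder that still exists in the fallback cluster and is also a mount link appears once in this model.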

Now let’s verify the data with Hive queries. First, query the data from partition “state=CA”:

    0: jdbc:hive2://umag-1.umag.root.hwx.site:218> SELECT * FROM sales_by_state WHERE state='CA';
    ...
    INFO : Executing command(queryId=hive_20200724235032_bfcecdf3-3078-47f8-8810-ca0079747e30): SELECT * FROM sales_by_state WHERE state='CA'
    INFO : Completed executing command(queryId=hive_20200724235032_bfcecdf3-3078-47f8-8810-ca0079747e30); Time taken: 0.013 seconds
    INFO : OK
    +--------------------------+-------------------------+----------------------+-----------------------+
    | sales_by_state.order_id  | sales_by_state.user_id  | sales_by_state.item  | sales_by_state.state  |
    +--------------------------+-------------------------+----------------------+-----------------------+
    | 1234                     | u1                      | iphone7              | CA                    |
    | 2345                     | u1                      | ipad                 | CA                    |
    | 1345                     | u1                      | iphone7              | CA                    |
    | 3567                     | u5                      | iphone11             | CA                    |
    | 5891                     | u3                      | iphonexr             | CA                    |
    | 5899                     | u3                      | iphonexr             | CA                    |
    | 6990                     | u2                      | iphone11             | CA                    |
    +--------------------------+-------------------------+----------------------+-----------------------+
    7 rows selected (0.375 seconds)

These results show that we can query the data from Ozone transparently.

Let’s try to insert a row into the partition “state=CA”:

    0: jdbc:hive2://umag-1.umag.root.hwx.site:218> INSERT INTO sales_by_state PARTITION(state='CA') VALUES(111111, 'u999', 'iphoneSE');
    0: jdbc:hive2://umag-1.umag.root.hwx.site:218> SELECT * from sales_by_state WHERE state='CA';
    +--------------------------+-------------------------+----------------------+-----------------------+
    | sales_by_state.order_id  | sales_by_state.user_id  | sales_by_state.item  | sales_by_state.state  |
    +--------------------------+-------------------------+----------------------+-----------------------+
    | 1234                     | u1                      | iphone7              | CA                    |
    | 2345                     | u1                      | ipad                 | CA                    |
    | 1345                     | u1                      | iphone7              | CA                    |
    | 3567                     | u5                      | iphone11             | CA                    |
    | 5891                     | u3                      | iphonexr             | CA                    |
    | 5899                     | u3                      | iphonexr             | CA                    |
    | 6990                     | u2                      | iphone11             | CA                    |
    | 111111                   | u999                    | iphoneSE             | CA                    |
    +--------------------------+-------------------------+----------------------+-----------------------+
    8 rows selected (9.913 seconds)

The new row was inserted into the partition successfully.

Conclusion

To conclude, the ViewDistributedFileSystem brings mount functionality to HDFS: users can configure mount points to other remote file systems (including federated HDFS) within the HDFS URI paths. Applications continue to work with the same HDFS paths even though the paths are mounted to other compatible file systems, including remote HDFS, Ozone, and cloud file systems such as S3 and Azure. This significantly reduces the application migration effort when users want to try different file systems. The central mount table configuration option keeps the mount point configuration in sync across all clients.

This solution brings a global, unified view across the different Apache Hadoop compatible file systems, with mount points that are configurable on the client side.

Thanks a lot to Arpit Agarwal, Jitendra Pandey, Wei-Chiu Wang, Karthik Krishnamoorthy, and Tom Deane for peer reviewing this blog post.

References:

- Apache Hadoop ViewFS Guide: https://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-hdfs/ViewFs.html

- Twitter Engineering blog (by gerashegalov): https://blog.twitter.com/engineering/en_us/a/2015/hadoop-filesystem-at-twitter.html

- ViewDistributedFileSystem Design Doc (Uma Gangumalla, Sanjay Radia, Wei-Chiu Chuang): https://issues.apache.org/jira/secure/attachment/13000994/ViewFSOverloadScheme%20-%20V1.0.pdf

- Apache Hadoop JIRA HDFS-15289: Allow viewfs mounts with HDFS/HCFS scheme and centralized mount table: https://issues.apache.org/jira/browse/HDFS-15289